longhorn: [BUG] Error when upgrading from 1.1.2 to 1.2.0 - Operation cannot be fulfilled on volumes.longhorn.io \"pvc-edf41777-589d-4806-baca-b91d0a6c0d3c\": the object has been modified; please apply your changes to the latest version and try again

Describe the bug

When upgrading from 1.1.2 to 1.2.0 with preexisting volumes using Helm, I receive this error in the manager pod:

time="2021-08-31T22:26:19Z" level=error msg="Upgrade failed: upgrade CRD failed: upgrade from v1.1.0 to v1.2.0: upgrade recurring jobs failed: upgrade from v1.1.0 to v1.2.0: translate volume recurringJobs to volume labels failed: failed to update pvc-edf41777-589d-4806-baca-b91d0a6c0d3c volume: Operation cannot be fulfilled on volumes.longhorn.io \"pvc-edf41777-589d-4806-baca-b91d0a6c0d3c\": the object has been modified; please apply your changes to the latest version and try again"

time="2021-08-31T22:26:19Z" level=info msg="Upgrade leader lost: showman"

time="2021-08-31T22:26:19Z" level=fatal msg="Error starting manager: upgrade CRD failed: upgrade from v1.1.0 to v1.2.0: upgrade recurring jobs failed: upgrade from v1.1.0 to v1.2.0: translate volume recurringJobs to volume labels failed: failed to update pvc-edf41777-589d-4806-baca-b91d0a6c0d3c volume: Operation cannot be fulfilled on volumes.longhorn.io \"pvc-edf41777-589d-4806-baca-b91d0a6c0d3c\": the object has been modified; please apply your changes to the latest version and try again"

It seems this is localized to a single node in the cluster (showman). Most of the volumes have migrated engines already.

I have tried deleting the offending manager pod, but have not taken any other troubleshooting steps.

To Reproduce

Steps to reproduce the behavior:

- Create a Longhorn cluster via Helm

- Create PVs

- (assumed) Create backup and snapshot tasks for the PVs

- Upgrade to 1.2.0

- Observe the error on one of the nodes during/after upgrading

Expected behavior

Upgrading from 1.1.2 to 1.2.0 when using Helm should result in backup jobs being created successfully for all volumes.

Log

longhorn-support-bundle_5be709ef-a54d-46ea-971f-a9f7c4fb4b26_2021-08-31T22-55-49Z.zip

Environment:

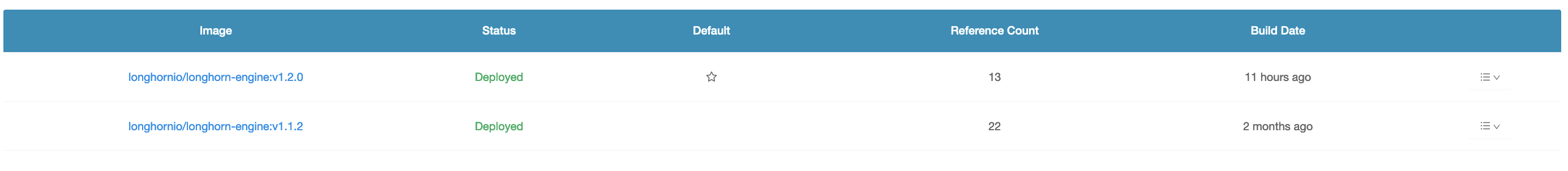

Longhorn version: 1.2.0 (partial upgrade from 1.1.2)

Installation method (e.g. Rancher Catalog App/Helm/Kubectl): Helm

Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version: kubeadm

Kubernetes version: 1.19.9

Number of management nodes in the cluster: 3

Number of worker nodes in the cluster: 4

Node config

OS type and version: Ubuntu 20.04.1 LTS

CPU per node: 8

Memory per node: 16GB

Disk type(e.g. SSD/NVMe): NVMe

Network bandwidth between the nodes: 2.5Gbit

Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal): Baremetal

Number of Longhorn volumes in the cluster: 23

About this issue

- State: closed

- Created 3 years ago

- Comments: 25 (16 by maintainers)

Is there a particular reason this was removed from the 1.2.1 milestone, @yasker? Right now, it takes several hours for my cluster to become fully functional again whenever I have to restart some of its nodes, and I haven’t seen any workaround mentioned. Or am I misinterpreting the information here?

It seems that we are doing an unnecessary volume CR update here. More specifically, we still update the volume even if it already has the correct label. As a result, we increase the chance of hitting the error:

the object has been modified; please apply your changes to the latest version and try again

cc @c3y1huang
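To illustrate the point about unnecessary updates, here is a minimal sketch of the general pattern: skip the write when the labels are already correct, and re-read and retry when a write hits a version conflict. This is not Longhorn's actual upgrade code. `FakeVolumeStore`, `ConflictError`, `ensure_labels`, and the label key used in the usage note are all hypothetical names, and the store is only an in-memory stand-in that mimics optimistic concurrency with a `resource_version` counter.

```python
import copy


class ConflictError(Exception):
    """Raised when an update carries a stale resource_version."""


class FakeVolumeStore:
    """Hypothetical in-memory stand-in for the API server (not Longhorn code)."""

    def __init__(self):
        self._objects = {}
        self.writes = 0  # counts successful updates, for illustration only

    def create(self, name, labels=None):
        self._objects[name] = {
            "name": name,
            "labels": dict(labels or {}),
            "resource_version": 1,
        }

    def get(self, name):
        # Return a copy so callers cannot mutate the stored object directly.
        return copy.deepcopy(self._objects[name])

    def update(self, obj):
        current = self._objects[obj["name"]]
        # Optimistic concurrency: reject writes based on an outdated read.
        if obj["resource_version"] != current["resource_version"]:
            raise ConflictError(
                "the object has been modified; please apply your changes "
                "to the latest version and try again"
            )
        stored = copy.deepcopy(obj)
        stored["resource_version"] += 1
        self._objects[obj["name"]] = stored
        self.writes += 1
        return copy.deepcopy(stored)


def ensure_labels(store, name, desired, max_attempts=5):
    """Apply `desired` labels to a volume; return True only if a write happened."""
    for _ in range(max_attempts):
        volume = store.get(name)  # always start from the latest version
        if all(volume["labels"].get(k) == v for k, v in desired.items()):
            return False  # labels already correct: no update, no conflict risk
        volume["labels"].update(desired)
        try:
            store.update(volume)
            return True
        except ConflictError:
            continue  # someone else changed the object: re-read and retry
    raise ConflictError(f"giving up on {name} after {max_attempts} attempts")
```

With this shape, calling `ensure_labels(store, "pvc-a", {"example.io/recurring-job": "enabled"})` on a volume that already carries that (made-up) label performs no write at all, so it cannot run into the conflict error. A volume that does need the label is retried against a fresh read instead of failing the whole upgrade on the first conflict.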

Validation: PASSED

When upgrading from v1.1.1 or v1.1.2 to the latest branch, which includes the fix, the RecurringJob CRDs are created.

Please note that I could only reproduce the recurring jobs CRD upgrade problem as follows:

Recurring Job does not exist (screenshot for reference)

Yes, I’m going to watch to confirm it gets restored to the right number of replicas but it looks like everything’s nominal now.