longhorn: [BUG] Engine Image, randomly readiness-probe process stuck using 1 core entirely

Describe the bug

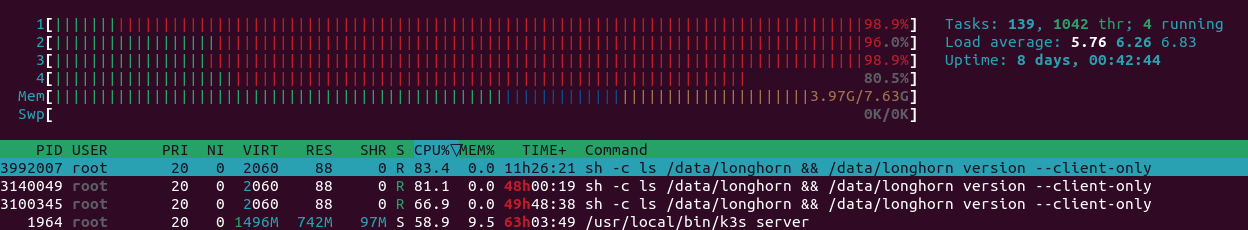

A few weeks ago I noticed a process using one core of the host entirely. Using htop I identified the process as `sh -c ls /data/longhorn && /data/longhorn version --client-only`, which is the readiness probe of the engine image according to the pod’s manifest:

    image: longhornio/longhorn-engine:v1.2.2
    imagePullPolicy: IfNotPresent
    name: engine-image-ei-d4c780c6
    readinessProbe:
      exec:
        command:
        - sh
        - -c
        - ls /data/longhorn && /data/longhorn version --client-only
      failureThreshold: 3
      initialDelaySeconds: 5
      periodSeconds: 5
      successThreshold: 1
      timeoutSeconds: 4

I was not able to kill the process; only rebooting the node got rid of it. I have observed this behavior for a few weeks now, and the process seems to get stuck randomly on some nodes. At the moment one node has 3 of these processes running, each taking a core to 100%. It is very annoying to restart the nodes each time such a process pops up and gets stuck.
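To spot these processes without watching htop, something like the following could be run on each node. This is only a sketch: it assumes a Linux `/proc` filesystem, and it assumes the stuck processes can be recognized by the probe command string taken from the manifest above. The names `PROBE_MARKER`, `parse_stat`, and `find_probe_processes` are made up for this example.

```python
import os

# Assumption: the stuck processes carry the probe command from the manifest.
PROBE_MARKER = "longhorn version --client-only"


def parse_stat(stat_text):
    """Return (state, utime_ticks, stime_ticks) from /proc/<pid>/stat contents."""
    # The command name sits in parentheses and may itself contain spaces or
    # parentheses, so split on the last ')' before splitting on whitespace.
    rest = stat_text.rsplit(")", 1)[1].split()
    # rest[0] is field 3 (state); utime and stime are fields 14 and 15.
    return rest[0], int(rest[11]), int(rest[12])


def find_probe_processes(proc_root="/proc", marker=PROBE_MARKER):
    """List processes whose command line contains the probe marker."""
    results = []
    if not os.path.isdir(proc_root):
        return results
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directories are processes
        base = os.path.join(proc_root, entry)
        try:
            with open(os.path.join(base, "cmdline"), "rb") as f:
                raw = f.read()
            cmdline = raw.replace(b"\0", b" ").decode(errors="replace").strip()
            if marker not in cmdline:
                continue
            with open(os.path.join(base, "stat")) as f:
                state, utime, stime = parse_stat(f.read())
        except (FileNotFoundError, ProcessLookupError, PermissionError):
            continue  # the process exited meanwhile or is not readable
        results.append(
            {"pid": int(entry), "state": state,
             "cpu_ticks": utime + stime, "cmdline": cmdline}
        )
    return results


def main():
    ticks_per_second = os.sysconf("SC_CLK_TCK")
    for proc in find_probe_processes():
        cpu_seconds = proc["cpu_ticks"] / ticks_per_second
        print(f'{proc["pid"]} state={proc["state"]} cpu={cpu_seconds:.0f}s {proc["cmdline"]}')


if __name__ == "__main__":
    main()
```

A healthy probe should finish within the 4 second timeout, so any match that has accumulated minutes of CPU time is a candidate for the stuck process described here. The state column helps separate a process that is actually running (`R`) from a zombie (`Z`) or one in uninterruptible sleep (`D`).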

To Reproduce

Steps to reproduce the behavior:

As this issue occurs randomly, I cannot reliably reproduce it. Additionally, I cannot find anything in the logs.

Expected behavior

The readiness probe process should execute and exit.

Log or Support bundle

If applicable, add the Longhorn managers’ log or support bundle when the issue happens. You can generate a Support Bundle using the link at the footer of the Longhorn UI.

Environment

- Longhorn version: 1.2.2

- Installation method (e.g. Rancher Catalog App/Helm/Kubectl): Helm

- Kubernetes distro (e.g. RKE/K3s/EKS/OpenShift) and version: K3s

- Number of management node in the cluster: 3

- Number of worker node in the cluster: 3

- Node config

- OS type and version: Ubuntu 21.10 arm64

- CPU per node: 1 CPU, 4 Cores

- Memory per node: 8GB

- Disk type(e.g. SSD/NVMe): USB 3 Flash

- Network bandwidth between the nodes: 1 GBit/s

- Underlying Infrastructure (e.g. on AWS/GCE, EKS/GKE, VMWare/KVM, Baremetal): Raspberry Pi 4B 8GB

- Number of Longhorn volumes in the cluster: 23

Additional context

It would be interesting to know whether someone else has the same issue and whether it might be related to K3s, arm64, or the Raspberry Pi.

About this issue

- State: open

- Created 2 years ago

- Reactions: 1

- Comments: 18 (9 by maintainers)

Yes, it cannot be killed, even with Furthermore I cannot see anything in the kern.log e.g. during kill-attempt.

Furthermore I cannot see anything in the kern.log e.g. during kill-attempt.

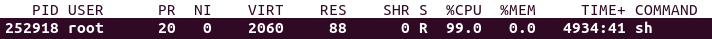

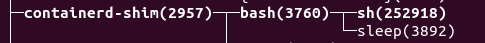

kill -9 <pid>. I just tried to kill the process that exists at the moment and it still remains. It is not a zombie process, but marked as running:I also checked the process tree and (as expected) it is derived from containerd-shim: Here the process’ details:

Here the process’ details:

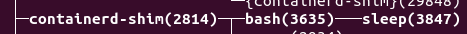

On a “healthy” node this looks like the following:

Hm, I’m not sure this is really an issue related to Longhorn. A few minutes ago I noticed a postgres process on the node where my PostgreSQL pod was running. The process uses one core entirely, and the container itself was using 100% of its configured CPU limit of 1. Recreating the pod helped in that the new pod uses the expected amount of CPU, but the old process still persists on the node: htop still shows it and I’m not able to kill it. I think only a node restart can help here again.

I will try to find out whether this might be an issue with K3s or something else.

@shuo-wu No stuck process on node03:

I do not think it is related to leaked sockets. Last night I observed a DeadlineExceeded on node04, and currently 1 process is stuck at 100% of one core:

The current socket count on node04 is still 548.
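For tracking the socket count over time, a small parser for `/proc/net/sockstat` might help. This is a sketch only: the thread does not say how the figure of 548 was obtained, so reading the kernel's `sockets: used` counter is an assumption, and `parse_sockstat` and `sockets_in_use` are names invented for this example.

```python
def parse_sockstat(text):
    """Parse /proc/net/sockstat into {section: {key: int}}."""
    stats = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip blank or unexpected lines
        fields = rest.split()
        # After the section name, fields alternate between key and integer value.
        stats[name.strip()] = {
            key: int(value) for key, value in zip(fields[0::2], fields[1::2])
        }
    return stats


def sockets_in_use(path="/proc/net/sockstat"):
    """Return the kernel's count of sockets in use, read from the given file."""
    with open(path) as f:
        return parse_sockstat(f.read())["sockets"]["used"]
```

Logging `sockets_in_use()` periodically on each node would show whether the count keeps growing before a probe gets stuck or stays flat, as it appears to on node04.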

Actually, I encountered a similar issue in another user’s environment, and the investigation is still in progress. In that case the kubelet logs contain a large number of exec probe `context deadline exceeded` errors, not only for this engine image readiness probe. A ticket on the Kubernetes side may be related to this bug. Can you check it and see whether this issue is really caused by the socket leak?
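To check whether a node shows the same pattern, the kubelet log lines could be filtered roughly as follows. This is a sketch under assumptions: the exact kubelet message format is not shown in this thread, so the filter only looks for the phrase `context deadline exceeded` together with the word `probe`, and `find_probe_timeouts` is a hypothetical helper name.

```python
def find_probe_timeouts(lines, phrase="context deadline exceeded", keyword="probe"):
    """Return the log lines that mention both the timeout phrase and a probe."""
    matches = []
    for line in lines:
        lowered = line.lower()
        # Case-insensitive substring match, since the log format is not known here.
        if phrase in lowered and keyword in lowered:
            matches.append(line.rstrip("\n"))
    return matches
```

The function accepts any iterable of strings, for example an open log file or the captured output of the node's log command. Comparing the number of matches between an affected node and a healthy one would indicate whether the errors are specific to the engine image probe or affect exec probes in general.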