pytorch-lightning: Multi GPU training is stuck

🐛 Bug

To Reproduce

I am experiencing the same problem, except that it fails with the ‘dp’ setting as well as with the ‘ddp’ setting.

I ran the CIFAR-10 example with multiple GPUs:

import os

import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
from pl_bolts.datamodules import CIFAR10DataModule
from pl_bolts.transforms.dataset_normalizations import cifar10_normalization
from pytorch_lightning import LightningModule, seed_everything, Trainer
from pytorch_lightning.callbacks import LearningRateMonitor
from pytorch_lightning.loggers import TensorBoardLogger
from torch.optim.lr_scheduler import OneCycleLR
from torch.optim.swa_utils import AveragedModel, update_bn
from torchmetrics.functional import accuracy

seed_everything(7)

PATH_DATASETS = os.environ.get('PATH_DATASETS', '.')
AVAIL_GPUS = torch.cuda.device_count()
BATCH_SIZE = 256 if AVAIL_GPUS else 64
NUM_WORKERS = int(os.cpu_count() / 2)

train_transforms = torchvision.transforms.Compose([
    torchvision.transforms.RandomCrop(32, padding=4),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    cifar10_normalization(),
])

test_transforms = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
    cifar10_normalization(),
])

cifar10_dm = CIFAR10DataModule(
    data_dir=PATH_DATASETS,
    batch_size=BATCH_SIZE,
    num_workers=NUM_WORKERS,
    train_transforms=train_transforms,
    test_transforms=test_transforms,
    val_transforms=test_transforms,
)


def create_model():
    model = torchvision.models.resnet18(pretrained=False, num_classes=10)
    model.conv1 = nn.Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    model.maxpool = nn.Identity()
    return model


class LitResnet(LightningModule):

    def __init__(self, lr=0.05):
        super().__init__()
        self.save_hyperparameters()
        self.model = create_model()

    def forward(self, x):
        out = self.model(x)
        return F.log_softmax(out, dim=1)

    def training_step(self, batch, batch_idx):
        x, y = batch
        logits = self(x)
        loss = F.nll_loss(logits, y)
        self.log('train_loss', loss)
        return loss

    def evaluate(self, batch, stage=None):
        x, y = batch
        logits = self(x)
        loss = F.nll_loss(logits, y)
        preds = torch.argmax(logits, dim=1)
        acc = accuracy(preds, y)

        if stage:
            self.log(f'{stage}_loss', loss, prog_bar=True)
            self.log(f'{stage}_acc', acc, prog_bar=True)

    def validation_step(self, batch, batch_idx):
        self.evaluate(batch, 'val')

    def test_step(self, batch, batch_idx):
        self.evaluate(batch, 'test')

    def configure_optimizers(self):
        optimizer = torch.optim.SGD(
            self.parameters(),
            lr=self.hparams.lr,
            momentum=0.9,
            weight_decay=5e-4,
        )
        steps_per_epoch = 45000 // BATCH_SIZE
        scheduler_dict = {
            'scheduler': OneCycleLR(
                optimizer,
                0.1,
                epochs=self.trainer.max_epochs,
                steps_per_epoch=steps_per_epoch,
            ),
            'interval': 'step',
        }
        return {'optimizer': optimizer, 'lr_scheduler': scheduler_dict}


model = LitResnet(lr=0.05)
model.datamodule = cifar10_dm

trainer = Trainer(
    progress_bar_refresh_rate=10,
    max_epochs=30,
    gpus=AVAIL_GPUS,
    accelerator='dp',
    logger=TensorBoardLogger('lightning_logs/', name='resnet'),
    callbacks=[LearningRateMonitor(logging_interval='step')],
)

trainer.fit(model, cifar10_dm)
trainer.test(model, datamodule=cifar10_dm)

The run hangs after just one training_step (one batch for each GPU).

Also, even if I press Ctrl+C multiple times, it does not halt, so I had to find the process in htop and kill it.

I also tried the “boring model”, so this does not seem to be a general PyTorch/PyTorch Lightning problem but rather a problem with multi-GPU training.

Environment

- CUDA:
  - GPU:
    - Tesla V100-SXM2-32GB
    - Tesla V100-SXM2-32GB
    - Tesla V100-SXM2-32GB
    - Tesla V100-SXM2-32GB
  - available: True
  - version: 11.1
- Packages:
  - numpy: 1.21.2
  - pyTorch_debug: False
  - pyTorch_version: 1.8.1+cu111
  - pytorch-lightning: 1.4.4
  - tqdm: 4.62.2
- System:
  - OS: Linux
  - architecture:
    - 64bit
  - processor: x86_64
  - python: 3.8.10
  - version: #146-Ubuntu SMP Tue Apr 13 01:11:19 UTC 2021

Additional context

Also, if I run the boring model on multiple GPUs with NCCL_DEBUG=INFO, it prints the following:

GPU available: True, used: True

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0,1,2,3]

/home/jovyan/user/jinwon/simsiam_mixup/lib/python3.8/site-packages/pytorch_lightning/trainer/data_loading.py:105: UserWarning: The dataloader, train dataloader, does not have many workers which may be a bottleneck. Consider increasing the value of the num_workers argument (try 48 which is the number of cpus on this machine) in the DataLoader init to improve performance.

rank_zero_warn(

/home/jovyan/user/jinwon/simsiam_mixup/lib/python3.8/site-packages/pytorch_lightning/trainer/data_loading.py:322: UserWarning: The number of training samples (1) is smaller than the logging interval Trainer(log_every_n_steps=50). Set a lower value for log_every_n_steps if you want to see logs for the training epoch.

rank_zero_warn(

/home/jovyan/user/jinwon/simsiam_mixup/lib/python3.8/site-packages/pytorch_lightning/trainer/data_loading.py:105: UserWarning: The dataloader, val dataloader 0, does not have many workers which may be a bottleneck. Consider increasing the value of the num_workers argument (try 48 which is the number of cpus on this machine) in the DataLoader init to improve performance.

rank_zero_warn(

Epoch 0: 0%| | 0/2 [00:00<00:00, 5077.85it/s]

sait3-0:30577:30633 [0] NCCL INFO Bootstrap : Using [0]eth0:172.96.136.150<0>

sait3-0:30577:30633 [0] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

sait3-0:30577:30633 [0] misc/ibvwrap.cc:63 NCCL WARN Failed to open libibverbs.so[.1]

sait3-0:30577:30633 [0] NCCL INFO NET/Socket : Using [0]eth0:172.96.136.150<0>

sait3-0:30577:30633 [0] NCCL INFO Using network Socket

NCCL version 2.7.8+cuda11.1

And it gets stuck after this. Also, Ctrl+C does not work and I have to kill it manually.
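When a run hangs like this and ignores Ctrl+C, one option for finding out where each process is blocked is Python's standard `faulthandler` module. The following is only a minimal sketch, not something taken from this report: the helper name `enable_hang_diagnostics` and the timeout value are assumptions, and the signal hook is available on Unix only. It shows the Python-level stack of each thread, which may point at the blocking call but will not explain what happens inside NCCL itself.

```python
import faulthandler
import signal
import sys


def enable_hang_diagnostics(timeout_s=600.0, file=None):
    """Hypothetical helper: make a stuck process report where it is blocked.

    - Every `timeout_s` seconds, dump the stack of all threads to `file`.
    - On Unix, also dump the stacks whenever the process receives SIGUSR1.
    """
    if file is None:
        file = sys.stderr

    # Periodic dump from a watchdog thread; it does not need the main thread
    # to be responsive, so it still fires while the main thread is blocked.
    faulthandler.dump_traceback_later(timeout_s, repeat=True, file=file, exit=False)

    # On-demand dump; faulthandler.register does not exist on Windows.
    if hasattr(faulthandler, "register") and hasattr(signal, "SIGUSR1"):
        faulthandler.register(signal.SIGUSR1, file=file, all_threads=True)
```

Calling `enable_hang_diagnostics()` at the top of the training script (so that every spawned process runs it) would make each process print its stacks periodically; on Linux, `kill -USR1 <pid>` from another shell requests a dump immediately.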

About this issue

- State: closed

- Created 3 years ago

- Reactions: 4

- Comments: 17 (9 by maintainers)

@tchaton Currently experiencing this as well. I’ve updated and I had the impression the issue was solved. But after a few runs I got the same error. Any tips to debug this?

EDIT: In my case it works for `dp` but it doesn’t work for `ddp` and `ddp_spawn`. The model is not the one reported above but a more complex one using Transformers.

EDIT-2: Disabling 16-bit precision training and setting number of workers to 0 doesn’t help either.

EDIT-3: I’ve implemented a few debugging methods to understand where the model hangs:

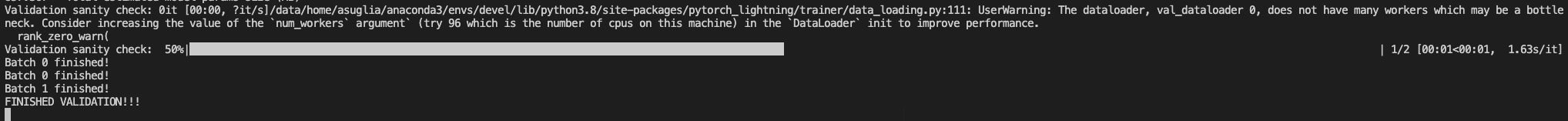

This is what happens:

EDIT-4: I can’t really debug the issue because there seems to be a source of randomness in the script. Sometimes I can get past `on_sanity_check_end()` and sometimes I can’t.

@tchaton any news?

Hi there, sorry for the late reply. Let me clarify what I believe is the issue here and how I managed to solve this problem in my specific use case.

I debugged my code following the checklist below:

- […] `--profiler simple` CLI option and check whether your `get_train_batch()` is fast enough (below 1s).
- […] `__getitem__` and this was causing the workers to go out of sync.
- […] `Dataset` constructor make sure that this is reasonably fast. In multi-node execution, this could easily make the workers go out of sync if one machine is faster than the other in loading the data.

Again, these are specific debugging steps that I now follow to make sure that my code is ready for multi-gpu training. Not sure if PL can do anything to detect some of these edge cases.
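The data-loading check in the list above (batch fetch time below 1s) can also be done outside the Trainer by timing how long each batch takes to arrive. This is a rough sketch under assumptions: `time_batches` is a hypothetical helper, not part of PyTorch or Lightning, and it accepts any iterable, so a `DataLoader` can be passed in directly.

```python
import time
from typing import Iterable, List


def time_batches(loader: Iterable, num_batches: int = 20) -> List[float]:
    """Return the seconds spent waiting for each of the first `num_batches` batches."""
    waits: List[float] = []
    iterator = iter(loader)
    for _ in range(num_batches):
        start = time.perf_counter()
        try:
            next(iterator)  # blocks until the next batch is ready
        except StopIteration:
            break  # the loader had fewer batches than requested
        waits.append(time.perf_counter() - start)
    return waits
```

For example, `waits = time_batches(train_loader)` followed by `max(waits)` gives the slowest fetch, which can be compared against the 1s guideline. With worker processes, the first batch usually includes worker start-up time, so it is worth looking at the later entries as well.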

Oh ok. So the problem reported here may not actually be about hanging/stuck processes. If the processes are doing actual work then that’s definitely a different approach. Thanks for clarifying.

1-4 apply to any PyTorch code, so yes, they definitely apply to PL as well: one has to be aware of data loading bottlenecks and tune the num_workers parameter, that’s for sure. It seems extremely hard to come up with a formula for PL to detect such bottlenecks, but suggestions are welcome.

[…] `self.log(..., sync_dist=True)`. It should be done only when absolutely necessary. I believe we need a remark in our docs that this will impact execution speed.

I’m still getting this intermittently. I think my issues are only present when I log metrics and sync them.
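To illustrate why syncing a logged value on every step costs more than syncing once, here is a toy, single-process sketch. It makes no real distributed calls and is not Lightning's implementation; `LocalMean` and `reduce_mean` are hypothetical names. Each rank accumulates its metric locally, and the ranks exchange only a `(total, count)` pair once, for example at the end of an epoch.

```python
from typing import List, Tuple


class LocalMean:
    """Accumulates a weighted mean on one rank without any communication."""

    def __init__(self) -> None:
        self.total = 0.0
        self.count = 0

    def update(self, value: float, n: int = 1) -> None:
        # `value` is the mean over `n` samples (e.g. a batch loss).
        self.total += value * n
        self.count += n

    def state(self) -> Tuple[float, int]:
        return self.total, self.count


def reduce_mean(states: List[Tuple[float, int]]) -> float:
    """Stand-in for ONE collective call combining (total, count) from every rank."""
    total = sum(t for t, _ in states)
    count = sum(c for _, c in states)
    if count == 0:
        raise ValueError("no samples were accumulated")
    return total / count
```

In a real multi-process run, the combination step would be a collective operation that every rank has to reach; if some rank never reaches it, the others wait indefinitely. Keeping the number of synchronization points small reduces both the overhead and the number of places where that can happen.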