kubernetes: /stats/summary endpoints failing with "Context Deadline Exceeded" on Windows nodes with very high CPU usage

What happened: On Windows nodes running at high (>90%) CPU usage, calls to /stats/summary time out with “Context Deadline Exceeded”.

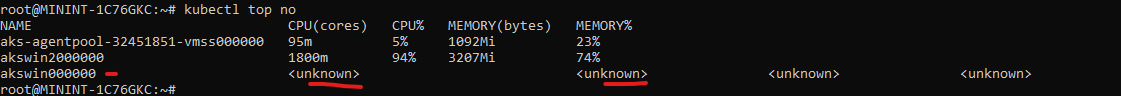

When this happens, metrics-server cannot gather accurate CPU/memory usage for the node or for the pods running on it, which results in:

- HPA not functioning

- `kubectl top node` / `kubectl top pod` returning `<unknown>` instead of values

What you expected to happen: Windows nodes should be able to run at high CPU utilization without negatively impacting Kubernetes functionality.

How to reproduce it (as minimally and precisely as possible):

- Create a cluster with Windows Server 2019 nodes

- Schedule CPU-intensive workloads into the cluster

- Scale the workload to put the node into a high-CPU state

- Query node metrics with `kubectl top node`

Anything else we need to know?: This issue appears to be easier to reproduce on 1.18.x nodes than on 1.16.x or 1.17.x nodes.

For example:

metrics-server logs show:

E1020 10:58:24.539304 1 manager.go:111] unable to fully collect metrics: unable to fully scrape metrics from source kubelet_summary:akswin000000: unable to fetch metrics from Kubelet akswin000000 (10.240.0.115): Get https://10.240.0.115:10250/stats/summary?only_cpu_and_memory=true: context deadline exceeded

Kubelet logs show:

E1002 00:26:59.894818 4680 remote_runtime.go:495] ListContainerStats with filter &ContainerStatsFilter{Id:,PodSandboxId:,LabelSelector:map[string]string{},} from runtime service failed: rpc error: code = Unknown desc = operation timeout: context deadline exceeded

E1002 00:43:44.801208 4680 handler.go:321] HTTP InternalServerError serving /stats/summary: Internal Error: failed to list pod stats: failed to list all container stats: rpc error: code = Unknown desc = operation timeout: context deadline exceeded

and

E1002 00:46:31.844828 4680 remote_runtime.go:495] ListContainerStats with filter &ContainerStatsFilter{Id:,PodSandboxId:,LabelSelector:map[string]string{},} from runtime service failed: rpc error: code = Unknown desc = operation timeout: context deadline exceeded

Environment:

- Kubernetes version (use

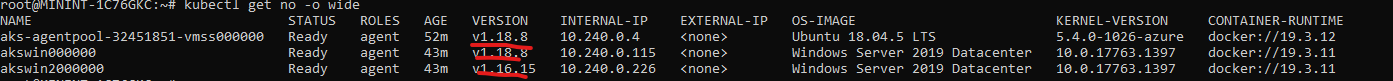

kubectl version): 1.18.8 - Cloud provider or hardware configuration: AKS / Azure

- OS (e.g:

cat /etc/os-release): WIndows Server 2019 - Kernel (e.g.

uname -a): - Install tools:

- Network plugin and version (if this is a network-related bug):

- Others: Docker EE version 19.3.11

About this issue


- State: closed

- Created 4 years ago


- Comments: 21 (18 by maintainers)

docker/containerd should probably have a flag that allows them to run at high priority as well, but those would likely be separate issues in the respective projects.

I was able to reproduce `context deadline exceeded` on 1.18.8 during a scale operation (`kubectl scale --replicas 9 -n default deployment iis-2019-burn-cpu`) that fully subscribes all CPUs in Allocatable. Metrics-server logs:

I do not see “context deadline” in the kubelet logs, but I did find some associated entries:

Once the pods are scheduled, the node remains at 100% CPU and I no longer see errors, but `kubectl top nodes` is not accurate. On the node (run after the pod deployment stabilized):