kubernetes: race condition detected during the scheduling with preemption

What happened: race condition detected during the scheduling with preemption

What you expected to happen: the preemptor with high priority should be scheduled successfully.

How to reproduce it (as minimally and precisely as possible): In the case of preemption, with the below assumption,

-

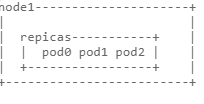

node1 has the replication controller with couple of pods running, and consider to be the candidate node for the preemption,

-

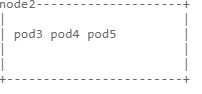

node2 also has some pods running on it but will not be considered to be the candidate node due to either priority or topology constraints,

the scheduling sequence very likely will hit the race condition like below,

step1: preemptor pod (pod6) is coming and found no fit node to schedule

step2: error is recorded and the pod6 is added into the unschedulableQ

step3: preemption is happen on node1 and pod1 is deleted

step4: pod1 is added to activeQ

step5: field of NominatedNodeName is patched to pod6

step6: next scheduling cycle is started and the pod1 is only pod in the activeQ

step7: going to schedule pod in the activeQ (pod1) and the candidate node is node1

step8: event of OnUpdate of the preemptor pod (pod6) is captured and pod6 is moved from unschedulableQ to activeQ

step9: pod1 is scheduled on the node1

step10: next scheduling cycle is started and the pod6 is the only pod in the activeQ

step11: preemption is happened again and pod2 is removed this time.

step12: error is recorded again and the pod is moved to podBackoffQ, and the nominatedNode is still node1

step13: pod condition is not changed, nominatedNode is not changed, so the pod is not updated, pod6 is still in the podBackoffQ.

step14: pod6 needs to wait for backoff time expired before getting moved from podBackoffQ to activeQ

step15: pod2 is scheduled on node1.

looping forever from here !!! along with pod deletion and preemption on node1. … …

The root cause is step8 OnUpdate event is not guaranteed to be finished before step7, they are handled asynchronously.

PR: https://github.com/kubernetes/kubernetes/pull/93179 is happened to partially addressed this issue by updating the nominatedNode to nil in step13, the pod is moved from podBackoffQ to activeQ by the change, this makes it possible to break the infinite loop since high priority preemptor got a chance to be added into the activeQ before the next scheduling cycle is started.

The final solution per my understanding is we need to add a check here, maybe hold for an while util we found the preemptor is added into activeQ

Anything else we need to know?:

Environment:

- Kubernetes version (use

kubectl version): - Cloud provider or hardware configuration:

- OS (e.g:

cat /etc/os-release): - Kernel (e.g.

uname -a): - Install tools:

- Network plugin and version (if this is a network-related bug):

- Others:

About this issue

- Original URL

- State: open

- Created 4 years ago

- Comments: 53 (49 by maintainers)

This issue was filed many years ago, it was an issue with default scheduler at that time. There were many optimization / refactoring around the preemption during the period, so this issue properly not stand at the moment.

I will find time to check this issue again, some labs maybe, and close it if this is not issue anymore.

@ermirry As to your issue, I am not clear how your scheduler is implemented and the manifests you are using, we need more evidence.

In the example, we see that spreading constraints are matching pods that don’t themselves have the same spreading constraint. Is this something we actually should support? Specially when different priorities are involved.

I’m more inclined to document this as a limitation, rather than trying to check for other pods’ rules internally in the scheduler plugin.

But we can still fix the “enter queue”->“issue deletions” order.

oops, sorry, I talk about the same thing ahg-g already talked.

I designed the case that preemptor will fit after the victim pod is got removed.

pls let me do more investigation, properly in my next day. 😃

pod1 was deleted, and so it will not be put back into the active queue. The replication controller will receive the delete event and create a new pod pod1.1 to replace it.

It is true though that an add event to pod1.1 could be received and added to the active queue while preemptor pod is still in backoff, but that is not as fast as saying “pod1 is added to activeQ”.

That shouldn’t happen, nominated pods are taken into account when evaluating filters: https://github.com/kubernetes/kubernetes/blob/8d74486a6a1a8fb2246bd89faf1746393135a463/pkg/scheduler/core/generic_scheduler.go#L469