kubernetes: PVCProtectionController can be slow for larger clusters

References

- https://github.com/kubernetes/kubernetes/issues/76703

- https://github.com/kubernetes/kubernetes/issues/75980

- https://github.com/kubernetes/kubernetes/pull/80492

- https://github.com/kubernetes/design-proposals-archive/blob/main/storage/postpone-pvc-deletion-if-used-in-a-pod.md

Overview

As described in the design proposal linked above, the PVC protection controller postpones the deletion of a PVC while it is used by a Pod. When the Pod is deleted and the PVC is marked for deletion as well (its deletionTimestamp is set), the PVC protection controller ensures that all references to the PVC have been removed and that no Pod is using it before letting the deletion proceed.

The PVC protection controller processes PVCs one by one, checking whether any Pod still references each one. If none does, the finalizer is removed and the PVC is deleted from the cluster.
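
The per-PVC decision described above can be sketched roughly as follows. This is a simplified, self-contained model rather than the controller's actual code: Pod, PVC, podUsesPVC and processPVC are hypothetical stand-ins, and only the finalizer name kubernetes.io/pvc-protection is taken from Kubernetes.

```go
package main

import "fmt"

// pvcProtectionFinalizer is the finalizer added to PVCs when the
// StorageObjectInUseProtection admission plugin is enabled.
const pvcProtectionFinalizer = "kubernetes.io/pvc-protection"

// Pod is a hypothetical, minimal stand-in for v1.Pod: only its namespace
// and the PVC names referenced by its volumes.
type Pod struct {
	Namespace  string
	ClaimNames []string
}

// PVC is a hypothetical, minimal stand-in for v1.PersistentVolumeClaim.
type PVC struct {
	Namespace  string
	Name       string
	Deleting   bool // models a non-nil metadata.deletionTimestamp
	Finalizers []string
}

// podUsesPVC reports whether pod references pvc through one of its volumes.
func podUsesPVC(pod Pod, pvc PVC) bool {
	if pod.Namespace != pvc.Namespace {
		return false
	}
	for _, name := range pod.ClaimNames {
		if name == pvc.Name {
			return true
		}
	}
	return false
}

// processPVC removes the protection finalizer from a PVC that is being
// deleted once no Pod references it. It reports whether the finalizer was removed.
func processPVC(pvc *PVC, pods []Pod) bool {
	if !pvc.Deleting {
		return false
	}
	for _, pod := range pods {
		if podUsesPVC(pod, *pvc) {
			return false // still in use: keep postponing the deletion
		}
	}
	// Filter the finalizer list in place, dropping only the protection finalizer.
	kept := pvc.Finalizers[:0]
	removed := false
	for _, f := range pvc.Finalizers {
		if f == pvcProtectionFinalizer {
			removed = true
			continue
		}
		kept = append(kept, f)
	}
	pvc.Finalizers = kept
	return removed
}

func main() {
	pods := []Pod{{Namespace: "ns", ClaimNames: []string{"data-0"}}}
	inUse := PVC{Namespace: "ns", Name: "data-0", Deleting: true, Finalizers: []string{pvcProtectionFinalizer}}
	unused := PVC{Namespace: "ns", Name: "data-1", Deleting: true, Finalizers: []string{pvcProtectionFinalizer}}
	fmt.Println(processPVC(&inUse, pods), len(inUse.Finalizers))   // false 1
	fmt.Println(processPVC(&unused, pods), len(unused.Finalizers)) // true 0
}
```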

The problem

Some time ago an issue was filed describing a race condition in the PVC protection controller. The original logic relied only on the Pod informer cache, which can be outdated, so the PVC protection controller could delete a PVC that was still in use (for example, one that had only recently been attached to a Pod). There was also a PR that fixed it.

The problem with the new logic is that it is slow and inefficient: its cost is on the order of O(m * n), where m is the number of PVCs to be processed and n is the number of Pods in the PVC's namespace. Someone even pointed this out in the PR, but it wasn't sufficiently measured or analyzed. In larger, active clusters (1k+ Pods/PVCs) where many Pods and PVCs are being created and deleted, the PVC protection controller is too slow to keep up. As a result, a large number of PVCs linger in the cluster, which can slow down listing and scheduling and also puts unnecessary pressure on the API server and etcd.

Under the hood, when a Pod is removed from the cluster quickly and its PVC is marked for deletion, the informer cache no longer contains any Pod using the PVC, so we always get past the informer check and make an additional live API LIST call: https://github.com/kubernetes/kubernetes/blob/808c8f42d55c520e45eab93200768607cefc1451/pkg/controller/volume/pvcprotection/pvc_protection_controller.go#L212-L226

This means that for each PVC we perform a full LIST call that returns all Pods in the given namespace. It is easy to foresee that this logic will not be fast enough for active namespaces with 1k+ Pods/PVCs.
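
To make the O(m * n) cost concrete, the hypothetical sketch below models an API server that only counts LIST calls and the number of Pod objects it returns. Each PVC whose Pod has already disappeared from the informer cache triggers one live LIST of the whole namespace, so processing m such PVCs in a namespace with n Pods transfers m * n Pod objects. fakeAPIServer, listPods and processDeletedPVCs are illustrative names, not client-go or controller APIs.

```go
package main

import "fmt"

// fakeAPIServer is a hypothetical stand-in for the API server. It only
// counts how many LIST calls were made and how many Pod objects were returned.
type fakeAPIServer struct {
	podsPerNamespace int
	listCalls        int
	podsReturned     int
}

// listPods models a live "LIST pods in namespace" call: every call
// returns all Pods in the namespace.
func (s *fakeAPIServer) listPods() {
	s.listCalls++
	s.podsReturned += s.podsPerNamespace
}

// processDeletedPVCs models m PVCs whose Pods are already gone from the
// informer cache: each one falls back to its own live LIST call.
func processDeletedPVCs(s *fakeAPIServer, m int) {
	for i := 0; i < m; i++ {
		s.listPods() // 1 PVC -> 1 Pod LIST call
	}
}

func main() {
	s := &fakeAPIServer{podsPerNamespace: 1000}
	processDeletedPVCs(s, 1000)
	fmt.Println(s.listCalls, s.podsReturned) // 1000 1000000
}
```

With 1,000 PVCs and 1,000 Pods in the namespace, this model makes 1,000 LIST calls that return a million Pod objects in total.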

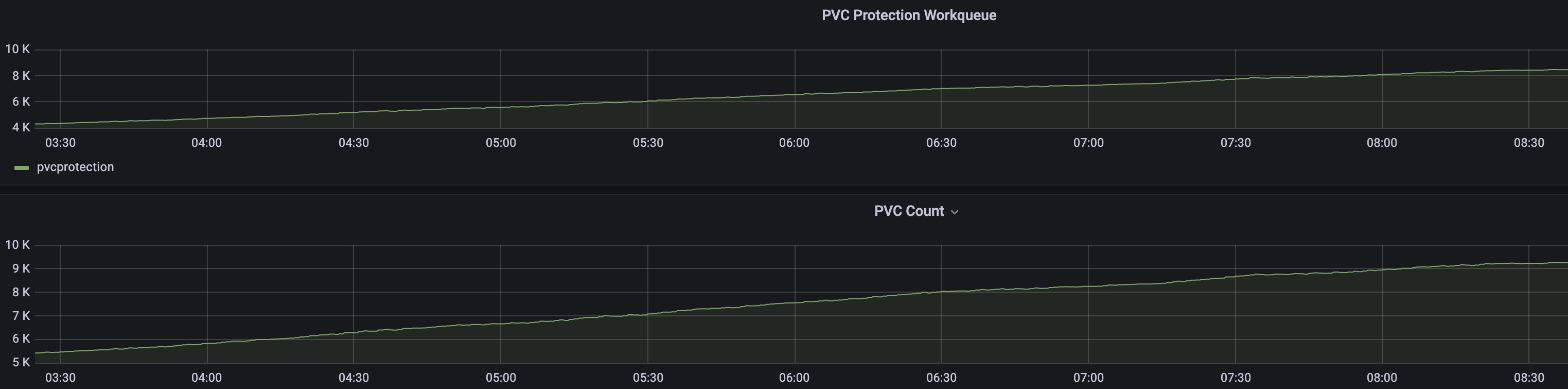

The image above shows the PVC protection controller's work queue and the PVC count over 5 hours of active creation/deletion, which confirms this hypothesis. The PVC protection controller is too slow to remove the finalizers, so both the work queue and the number of PVCs in the cluster keep growing.

Without a remedy, this can easily overwhelm the cluster.

The (temporary) solutions

There are a couple of ways to work around this problem:

- Reduce the size of the namespace. Pods and PVCs are listed and processed per namespace, so with smaller namespaces the PVC protection controller has less work to do.

- Implement a component that automatically removes the protection finalizer from PVCs that will certainly not be used again. If you know that the PVCs you create will not be reused, you can remove the finalizer automatically instead of relying on the PVC protection controller.

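- Turn off the protection finalizer by disabling the StorageObjectInUseProtection admission plugin, e.g. with the kube-apiserver --disable-admission-plugins flag (see https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/#storageobjectinuseprotection).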
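
The second workaround could look roughly like the sketch below. It assumes a hypothetical opt-in label, example.com/single-use=true, that the workload owner sets on PVCs known never to be reused; the component strips kubernetes.io/pvc-protection only from such PVCs once they are being deleted. PVC, withoutFinalizer and stripIfSingleUse are illustrative names, and writing the new finalizer list back through the API (update or patch) is left out.

```go
package main

import "fmt"

const pvcProtectionFinalizer = "kubernetes.io/pvc-protection"

// singleUseLabel is a hypothetical label marking PVCs that will never be
// reused by another Pod.
const singleUseLabel = "example.com/single-use"

// PVC is a hypothetical, minimal stand-in for v1.PersistentVolumeClaim.
type PVC struct {
	Name       string
	Labels     map[string]string
	Deleting   bool // models a non-nil metadata.deletionTimestamp
	Finalizers []string
}

// withoutFinalizer returns a copy of finalizers with every occurrence of name removed.
func withoutFinalizer(finalizers []string, name string) []string {
	out := make([]string, 0, len(finalizers))
	for _, f := range finalizers {
		if f != name {
			out = append(out, f)
		}
	}
	return out
}

// stripIfSingleUse removes the protection finalizer from deleted PVCs that
// carry the single-use label, bypassing the PVC protection controller.
// It reports whether the PVC was changed.
func stripIfSingleUse(pvc *PVC) bool {
	if !pvc.Deleting || pvc.Labels[singleUseLabel] != "true" {
		return false
	}
	before := len(pvc.Finalizers)
	pvc.Finalizers = withoutFinalizer(pvc.Finalizers, pvcProtectionFinalizer)
	return len(pvc.Finalizers) != before
}

func main() {
	scratch := &PVC{
		Name:       "scratch",
		Labels:     map[string]string{singleUseLabel: "true"},
		Deleting:   true,
		Finalizers: []string{pvcProtectionFinalizer},
	}
	shared := &PVC{Name: "shared", Deleting: true, Finalizers: []string{pvcProtectionFinalizer}}
	fmt.Println(stripIfSingleUse(scratch), stripIfSingleUse(shared)) // true false
}
```

Like the other workarounds, this trades safety for throughput: it is only correct if the label is never set on a PVC that another Pod might still mount.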

There are probably other options (I'm happy to hear suggestions).

However, none of these solutions address the original problem; they only make it less likely to occur.

The (considered) solutions

I've been thinking about how to fix the logic itself, but I couldn't come up with a good idea:

- I initially considered making the PVC protection controller multi-threaded. This doesn't reduce the total amount of work, since every PVC still triggers its own LIST call, and in some cases it could even make things worse by sending more concurrent LIST calls to the API server. It would probably help in most cases, but the underlying problem would remain.

- Then I considered batching. Currently we process one PVC at a time. We could reduce the number of Pod LIST calls by processing N PVCs with a single Pod LIST call, so instead of “1 PVC -> 1 Pod LIST call” we would have e.g. “20 PVCs -> 1 Pod LIST call”. This seems like an interesting approach, but it would require changing the whole logic of the controller.
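
The batching idea can be sketched as follows: one LIST call serves a whole batch of PVCs from the same namespace (the LIST is namespaced), by building the set of claim names referenced by Pods and then checking each PVC against that set. This is a hypothetical illustration, not code from the controller; Pod and processBatch are illustrative names, and the listPods callback stands in for the live API call.

```go
package main

import "fmt"

// Pod is a hypothetical, minimal stand-in for v1.Pod.
type Pod struct {
	Name       string
	ClaimNames []string
}

// processBatch checks a batch of PVC names (all in one namespace) against a
// single Pod LIST. listPods stands in for a live API call and is invoked once
// per batch instead of once per PVC. It returns the PVCs no Pod uses, i.e.
// those whose protection finalizer could be removed.
func processBatch(pvcNames []string, listPods func() []Pod) []string {
	used := make(map[string]bool)
	for _, pod := range listPods() { // 1 Pod LIST call for the whole batch
		for _, claim := range pod.ClaimNames {
			used[claim] = true
		}
	}
	var unused []string
	for _, name := range pvcNames {
		if !used[name] {
			unused = append(unused, name)
		}
	}
	return unused
}

func main() {
	calls := 0
	listPods := func() []Pod {
		calls++
		return []Pod{{Name: "web-0", ClaimNames: []string{"data-0"}}}
	}
	unused := processBatch([]string{"data-0", "data-1", "data-2"}, listPods)
	fmt.Println(unused, calls) // [data-1 data-2] 1
}
```

The catch noted above still applies: the controller currently handles one PVC per work-queue item, so collecting a batch per namespace would mean restructuring the worker loop.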

About this issue

- State: open

- Created 2 years ago

- Reactions: 2

- Comments: 25 (15 by maintainers)

In fact, the solution I proposed in https://github.com/kubernetes/kubernetes/issues/109282#issuecomment-1263207774 isn't correct, because the resourceVersion returned by a LIST call and the one observed in the watch can differ significantly if there are no changes to a given resource type, e.g.:

Yes, this is the idea.

Potentially, in step “4. once the informer reaches the expected state we use it to list and check all the pods.”, we could simply use an index instead of iterating over all the pods, which would make it more efficient.
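
Using an index instead of iterating over all Pods could look like the sketch below. It mirrors the idea behind a client-go informer index (an index function mapping each Pod to the namespace/claimName keys it references, queried with ByIndex), but podPVCIndex, pvcKeys and their methods are hypothetical, self-contained stand-ins rather than client-go code.

```go
package main

import "fmt"

// Pod is a hypothetical, minimal stand-in for v1.Pod.
type Pod struct {
	Namespace  string
	Name       string
	ClaimNames []string
}

// podPVCIndex is a hypothetical index from "namespace/claimName" to the names
// of Pods referencing that claim, so a lookup does not scan every Pod.
type podPVCIndex map[string]map[string]bool

// pvcKeys is the index function: the keys under which a Pod is indexed.
func pvcKeys(pod Pod) []string {
	keys := make([]string, 0, len(pod.ClaimNames))
	for _, claim := range pod.ClaimNames {
		keys = append(keys, pod.Namespace+"/"+claim)
	}
	return keys
}

// add indexes a Pod (e.g. on an informer add event).
func (idx podPVCIndex) add(pod Pod) {
	for _, key := range pvcKeys(pod) {
		if idx[key] == nil {
			idx[key] = make(map[string]bool)
		}
		idx[key][pod.Name] = true
	}
}

// remove drops a Pod from the index (e.g. on an informer delete event).
func (idx podPVCIndex) remove(pod Pod) {
	for _, key := range pvcKeys(pod) {
		delete(idx[key], pod.Name) // deleting from a nil map is a no-op
		if len(idx[key]) == 0 {
			delete(idx, key)
		}
	}
}

// inUse reports whether any indexed Pod references namespace/claim.
func (idx podPVCIndex) inUse(namespace, claim string) bool {
	return len(idx[namespace+"/"+claim]) > 0
}

func main() {
	idx := podPVCIndex{}
	pod := Pod{Namespace: "ns", Name: "web-0", ClaimNames: []string{"data-0"}}
	idx.add(pod)
	fmt.Println(idx.inUse("ns", "data-0"), idx.inUse("ns", "data-1")) // true false
	idx.remove(pod)
	fmt.Println(idx.inUse("ns", "data-0")) // false
}
```

A lookup then costs one map access per PVC instead of a scan over all n Pods in the namespace.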

If the concern is “what happens if, after point 3, someone adds a Pod using the PVC?”, this is consistent with the LIST Pods approach: we check the state at some point, and if it changes after the LIST call starts, we won't see it either. I presume there is some mechanism that prevents PVCs waiting for deletion from being bound to Pods (i.e. that prevents creating new Pods using a PVC after it has been marked for deletion), so this should be OK.