kubernetes: Pod volume mounting failing even after PV is bound and attached to pod

kubectl version:

    Client Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.1", GitCommit:"82450d03cb057bab0950214ef122b67c83fb11df", GitTreeState:"clean", BuildDate:"2016-12-14T00:57:05Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"darwin/amd64"}
    Server Version: version.Info{Major:"1", Minor:"5", GitVersion:"v1.5.4+coreos.0", GitCommit:"97c11b097b1a2b194f1eddca8ce5468fcc83331c", GitTreeState:"clean", BuildDate:"2017-03-08T23:54:21Z", GoVersion:"go1.7.4", Compiler:"gc", Platform:"linux/amd64"}

yml file:

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
      - port: 80
        name: web
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 2
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: gcr.io/google_containers/nginx-slim:0.8
            ports:
            - containerPort: 80
              name: web
            volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
      - metadata:
          name: www
          annotations:
            volume.beta.kubernetes.io/storage-class: default
        spec:
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 1Gi

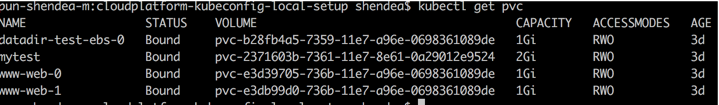

List of bound PVs:

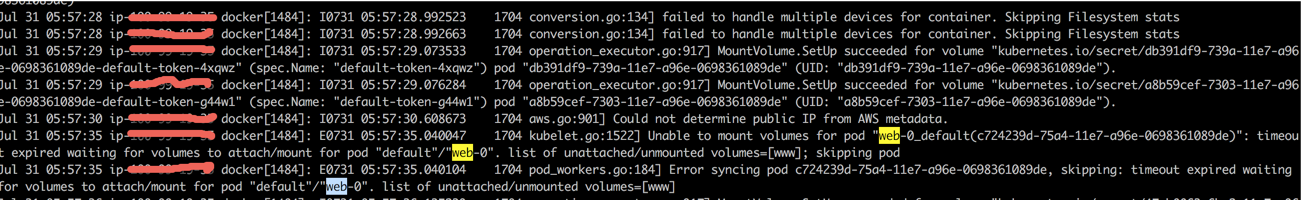

ERROR:

relevant kubelet logs:

About this issue

- State: closed

- Created 7 years ago

- Reactions: 6

- Comments: 77 (14 by maintainers)

OK, I found out why I was having problems: the instance type of the node where the PV had to be attached was m5.4xlarge and, according to the kops release notes, attaching PVs to NVMe instances is only supported by Kubernetes 1.9.x. If you are hitting this problem, check whether you are in the same situation.
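As a quick way to check a node's instance type against the families reported in this thread, here is a minimal sketch. The function name `uses_nvme_ebs` and the family set are hypothetical; the set contains only the families commenters here reported as failing (C5 and M5) and is not an exhaustive list of NVMe-based instance types.

```python
# Instance families reported in this thread as failing to attach EBS volumes.
# Assumption: this set comes only from the comments here and is not complete.
NVME_FAMILIES_FROM_THREAD = {"c5", "m5"}


def uses_nvme_ebs(instance_type: str) -> bool:
    """Return True if the instance type's family is in the set above."""
    # "m5.4xlarge" -> "m5"; matching is case-insensitive.
    family = instance_type.strip().lower().split(".", 1)[0]
    return family in NVME_FAMILIES_FROM_THREAD


if __name__ == "__main__":
    for itype in ("m5.4xlarge", "c5.xlarge", "c4.large", "t2.medium"):
        print(itype, uses_nvme_ebs(itype))
```

If this returns True for your nodes and the cluster runs a Kubernetes version older than 1.9, the comments in this thread suggest looking at the instance type first.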

Here is how I fixed it.

Kubernetes version: 1.9.10, machine type: c5.xlarge

See the kops 1.8 release notes.

Run `kops edit ig nodes` and change the image from:

    image: kope.io/k8s-1.9-debian-jessie-amd64-hvm-ebs-2018-03-11

to:

    image: kope.io/k8s-1.9-debian-stretch-amd64-hvm-ebs-2018-03-11

Then run `kops update cluster $NAME`.

@turgayozgur This indeed fixed the issue. Thanks. Saved me a lot of time.

We have the same issue with both versions 1.9.3 and 1.10.2. Replacing the C5 instance type with C4 or T2 solves this issue.

Affected Kubernetes versions:

    Client Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.2", GitCommit:"81753b10df112992bf51bbc2c2f85208aad78335", GitTreeState:"clean", BuildDate:"2018-04-27T09:22:21Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"9", GitVersion:"v1.9.3", GitCommit:"d2835416544f298c919e2ead3be3d0864b52323b", GitTreeState:"clean", BuildDate:"2018-02-07T11:55:20Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"linux/amd64"}

and

    Client Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.2", GitCommit:"81753b10df112992bf51bbc2c2f85208aad78335", GitTreeState:"clean", BuildDate:"2018-04-27T09:22:21Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.2", GitCommit:"81753b10df112992bf51bbc2c2f85208aad78335", GitTreeState:"clean", BuildDate:"2018-04-27T09:10:24Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"linux/amd64"}

kops version:

    1.9.0 (git-cccd71e67)

Example error message:

    Warning FailedMount 5s kubelet, ip-10-20-118-70.eu-west-1.compute.internal Unable to mount volumes for pod "wordpress-mysql-bcc89f687-2wxhq_test(56c877e2-52a6-11e8-9023-0249c3793a2a)": timeout expired waiting for volumes to attach or mount for pod "test"/"wordpress-mysql-bcc89f687-2wxhq". list of unmounted volumes=[mysql-persistent-storage]. list of unattached volumes=[mysql-persistent-storage default-token-srpp5]

Some additional information. If I remove everything using `kubectl delete -f mantifest/influxdb.yaml`, the EBS volume is in ‘available’ status. If then I create it again, I get a timeout while trying to mount the volume but the EBS volume is ‘in use’. So… first time, I get an error because of the ‘available’ status, after that… the volume can’t be attached even if it’s ‘in use’ by the target node.

Unfortunately this has nothing to do with AWS Availability Zones or VolumeScheduling. AZ related problems are kinda hot nowadays, so people like to mix up that problem with this one, but a quick look at the Availability Zones makes clear that there is no connection.
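When comparing reports like the FailedMount event quoted earlier in this thread, it can help to pull the volume names out of the message so they can be checked against the PV and EBS state. The following is a minimal sketch; the function name `parse_failed_mount` is hypothetical, and the pattern is based only on the message format shown in this thread, which may differ in other kubelet versions.

```python
import re


def parse_failed_mount(message: str) -> dict:
    """Extract the unmounted and unattached volume names from a FailedMount message.

    Assumption: the message uses the "list of <kind> volumes=[a b c]" format
    seen in the event quoted in this thread.
    """
    result = {}
    for key in ("unmounted", "unattached"):
        match = re.search(rf"list of {key} volumes=\[([^\]]*)\]", message)
        # Names inside the brackets are separated by whitespace.
        result[key] = match.group(1).split() if match else []
    return result


if __name__ == "__main__":
    sample = (
        "timeout expired waiting for volumes to attach or mount. "
        "list of unmounted volumes=[mysql-persistent-storage]. "
        "list of unattached volumes=[mysql-persistent-storage default-token-srpp5]"
    )
    print(parse_failed_mount(sample))
```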

Today’s testing results: Kubernetes: 1.13.0 Instance: m5.4xlarge EBS: gp2

@lvicentesanchez Thanks a ton, this is exactly what it turned out to be for us. We spawned a new cluster with C5.9xlarge (current generation) instances on AWS, which get volumes with NVMe device naming that Kubernetes 1.8 failed to understand, so it kept reporting this volume attachment error in Rancher. Creating a new cluster with an older instance type that uses the xvd** convention solved the issue for us.

@huangjiasingle The logs show the following error. The volume cannot be attached because of an authorization issue. Is it related to the AWS IAM policy?

    Failed to attach volume "pvc-fff84c66-7b35-11e7-b125-02f3f42ec6aa" on node "ip-100-x-x-x.us-west-2.compute.internal" with: Error attaching EBS volume "vol-00cbb836374d1b37b" to instance "i-03d4ba6b17ab9cf5f": UnauthorizedOperation: You are not authorized to perform this operation. Encoded authorization failure message: 4nedpChQKhsxXs…
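Two different AWS error codes appear in attach failures in this thread (`UnauthorizedOperation` and `IncorrectState`), and they point to different causes. As a rough sketch for sorting log lines by cause, the helper below extracts the volume ID, instance ID, and error code. The name `parse_attach_error` is hypothetical, and the pattern assumes the exact message format quoted in this thread.

```python
import re

# Accepts straight or typographic double quotes, since copied logs may contain either.
ATTACH_ERROR = re.compile(
    r'Error attaching EBS volume ["“](?P<volume>vol-[0-9a-f]+)["”] '
    r'to instance ["“](?P<instance>i-[0-9a-f]+)["”]: '
    r'(?P<code>\w+):'
)


def parse_attach_error(line: str):
    """Return a dict with volume, instance, and code, or None if the line does not match."""
    match = ATTACH_ERROR.search(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    line = (
        'Error attaching EBS volume "vol-00cbb836374d1b37b" to instance '
        '"i-03d4ba6b17ab9cf5f": UnauthorizedOperation: You are not authorized '
        'to perform this operation.'
    )
    print(parse_attach_error(line))
```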

Rotten issues close after 30d of inactivity. Reopen the issue with `/reopen`. Mark the issue as fresh with `/remove-lifecycle rotten`.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta. /close

@lvicentesanchez Also fixed for `1.9.3` and `1.10.0` with kops `1.8.1`.

As @Tarun17github pointed out, NVMe nodes don’t work. Personally tested C5 and M5, and they both fail.

Recreated cluster from

to

I updated the IAM policy and added `ec2:AttachVolume` and `ec2:DetachVolume`. That resolved the authorization issue, but now there is another one. Even though the volume is available, attaching it to the instance fails:

    Failed to attach volume "pvc-79e4c457-7b57-11e7-a96e-0698361089de" on node "ip-100-x-x-x.us-west-2.compute.internal" with: Error attaching EBS volume "vol-00da392489f12395f" to instance "i-02bbbc571c95b69fd": IncorrectState: vol-00da392489f12395f is not 'available'.
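For reference, an IAM policy statement granting the two actions mentioned above could look roughly like the sketch below, generated here as JSON from Python. The function name `ebs_attach_policy` is hypothetical, and `"Resource": "*"` is an assumption made for illustration; the original comment does not say how the policy was scoped, and a real policy would likely be narrower.

```python
import json


def ebs_attach_policy() -> dict:
    """Build a policy document allowing the two EC2 actions named in the comment above."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ec2:AttachVolume", "ec2:DetachVolume"],
                # Assumption: unscoped resource, for illustration only.
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ebs_attach_policy(), indent=2))
```

As the comment above notes, this only addresses the `UnauthorizedOperation` error; the later `IncorrectState` error is a separate problem.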