kubernetes: kubelet fails with `UnmountVolume.NewUnmounter failed for volume` and `vol_data.json: no such file or directory` for CSI volumes and floods the logs

What happened?

I think kubelet sometimes is not able to properly identify unmounted volume and clean up its actualstateofworld cache. It thinks the volume is not unmounted and keeps on trying unmounting it. This outputs a lot of logs which flood journalctl -> fluentd/bit -> third party logging solution. The flooding causes 2 problems:

- Consumes node disk which reduces the amount of disk available for pods

- Increases ingestion rate for paid third party logging solution thereby costing extra money1 and 2 can be worked around by rotating the logs more frequently, dropping the logs or filtering them out from ingestion into the third party logging solution but it is not a permanent fix to the problem. Also, note that re-starting kubelet fixes the issue. Here’s what the error log looks like:

"Feb 13 22:05:35 ip-10-57-71-57 kubelet: E0213 22:05:35.753487 28558 reconciler.go:193] \"operationExecutor.UnmountVolume failed (controllerAttachDetachEnabled true) for volume \\\"drupal-code\\\" (UniqueName: \\\"kubernetes.io/csi/48033fa8-05f1-4c32-b109-4e25f23107ef-drupal-code\\\") pod \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\" (UID: \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\") : UnmountVolume.NewUnmounter failed for volume \\\"drupal-code\\\" (UniqueName: \\\"kubernetes.io/csi/48033fa8-05f1-4c32-b109-4e25f23107ef-drupal-code\\\") pod \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\" (UID: \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\") : kubernetes.io/csi: unmounter failed to load volume data file [/var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/mount]: kubernetes.io/csi: failed to open volume data file [/var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/vol_data.json]: open /var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/vol_data.json: no such file or directory\" err=\"UnmountVolume.NewUnmounter failed for volume \\\"drupal-code\\\" (UniqueName: \\\"kubernetes.io/csi/48033fa8-05f1-4c32-b109-4e25f23107ef-drupal-code\\\") pod \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\" (UID: \\\"48033fa8-05f1-4c32-b109-4e25f23107ef\\\") : kubernetes.io/csi: unmounter failed to load volume data file [/var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/mount]: kubernetes.io/csi: failed to open volume data file [/var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/vol_data.json]: open /var/lib/kubelet/pods/48033fa8-05f1-4c32-b109-4e25f23107ef/volumes/kubernetes.io~csi/drupal-code/vol_data.json: no such file or directory\"

If you look at the code for 1.22, you can see the the log is output here.

Couple of things to note here:

- We have seen this happen a lot for short-lived pods.

- We have already looked at the following issues/PRs but they don’t solve the problem

- https://github.com/kubernetes/kubernetes/issues/101378

- https://github.com/kubernetes/kubernetes/issues/101911

- https://github.com/kubernetes/kubernetes/issues/85280

- https://github.com/kubernetes/kubernetes/pull/102576 is ported back to 1.21 and 1.22 but it doesn’t fix the problem

- We have seen this problem happen in 1.21 and 1.22 version of kubelet

- The volume is mounted using https://github.com/warm-metal/csi-driver-image

- Because kubelet thinks the volume is still mounted, it doesn’t cleanly take out the pod. The Pod gets stuck in

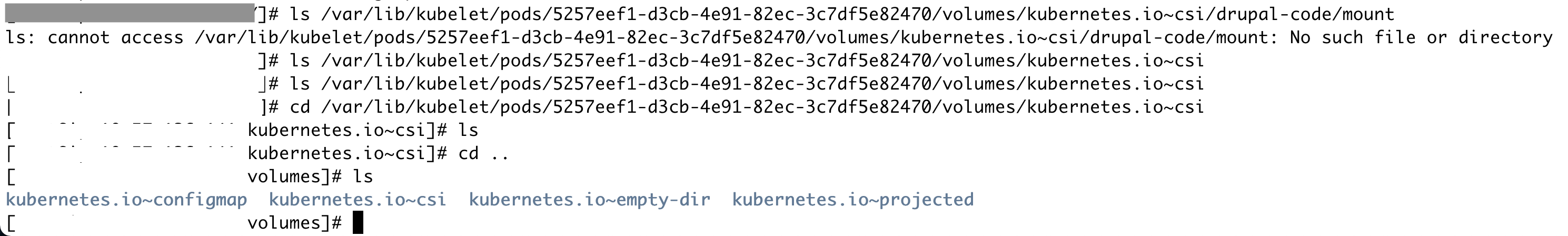

Terminatingstate because all the volumes attached the pod are not unmounted yet. - Note that the directory structure of CSI volume is present. Only

vol_data.jsonis absent

What did you expect to happen?

kubelet to not get stuck trying to unmount a volume

How can we reproduce it (as minimally and precisely as possible)?

We have tried reproducing this issue but it is quite difficult to reproduce manually. So far we have only been able to observe it in our logs after it has happened.

Anything else we need to know?

No response

Kubernetes version

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.1", GitCommit:"86ec240af8cbd1b60bcc4c03c20da9b98005b92e", GitTreeState:"clean", BuildDate:"2021-12-16T11:33:37Z", GoVersion:"go1.17.5", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"22+", GitVersion:"v1.22.16-eks-ffeb93d", GitCommit:"52e500d139bdef42fbc4540c357f0565c7867a81", GitTreeState:"clean", BuildDate:"2022-11-29T18:41:42Z", GoVersion:"go1.16.15", Compiler:"gc", Platform:"linux/amd64"}

Cloud provider

AWS

OS version

# On Linux:

$ cat /etc/os-release

# paste output here

$ uname -a

# paste output here

# On Windows:

C:\> wmic os get Caption, Version, BuildNumber, OSArchitecture

# paste output here

Install tools

Container runtime (CRI) and version (if applicable)

Docker

Related plugins (CNI, CSI, …) and versions (if applicable)

CSI plugin: https://github.com/warm-metal/csi-driver-image

About this issue

- Original URL

- State: open

- Created a year ago

- Comments: 34 (26 by maintainers)

Alright one last update. If anyone is running into problems like these, make sure your CSI driver implements

NodeUnpublishcorrectly at very minimum and idempotent. This issue imo is almost entirely caused by problematic CSI driver implementations.Got the same issue today randomly out of nowhere on a microk8s 1.27 cluster and lonhorn 1.5.1, so it’s not fixed yet. The journalctl keep flooding this error every ms:

I had a promotheus pod stuck in “terminating”. I managed to force delete it but the errors continue. How to stop it?

https://github.com/kubernetes/kubernetes/issues/114207#issuecomment-1535623925

if it is the correct fix

I think these two issues are the same, there is a TOCTOU problem and

vol.datamaybe removed multiple times which trigger this issue. We have fixed this issue by skippingUnmountDevicewhenvol_data.jsonfile does not exist, since in this situation,UnmountDevicemust has been executed successfully before, no need to doUnmountDevicemultiple times.These two problems look like similar. TearDown Operation may be performed multiple times. I will take a look if I have time LOL.

@HirazawaUi @vadasambar I saw the similar issue too on Openshift OCP cluster https://issues.redhat.com/browse/OCPBUGS-11470 Version

AWS with ebs.csi.aws.com csidriver

How can we reproduce it (as minimally and precisely as possible)? I encountered this in a long run test. The test have 15 concurrent users who create namespace, create pod and pvc under the namespace and then delete the namespace, continuously. The issue happened on about 4-5 days after I started the long run test. It happened very rarely, once or twice in a 5 days long run, which had totally 10k+ namespaces creation/deletion.

This bug is similar to a previous bug: https://bugzilla.redhat.com/show_bug.cgi?id=2038780 https://github.com/kubernetes/kubernetes/pull/110670

I have reproduced this error in a local cluster running version 1.26.3 following the steps mentioned above. However, I am uncertain if this error will be triggered when kubelet is functioning normally. I will continue to monitor it. Starting from a particular issue may help to better understand the source code of kubelet 😃

/assign i’ll have a look