kubernetes: kube-proxy/ipvs: a pod frequently cannot access the ClusterIP and the endpoint IP (real server) at the same time

What happened: I use the Calico CNI, and kube-proxy runs in IPVS mode. I found that access is unstable when a pod accesses the apiserver through the ClusterIP and through the endpoint IP (real server) at the same time.

- 172.31.0.1 is the cluster IP

- 172.28.49.135 is the pod IP

- 10.7.210.11 is the endpoint IP (real server)

sh-4.2# cat /proc/net/nf_conntrack|grep 40008

ipv4 2 tcp 6 2 CLOSE src=172.28.49.135 dst=172.31.0.1 sport=40008 dport=443 src=172.31.0.1 dst=172.28.49.135 sport=443 dport=40008 [ASSURED] mark=0 secctx=system_u:object_r:unlabeled_t:s0 zone=0 use=2

ipv4 2 tcp 6 63 SYN_SENT src=172.28.49.135 dst=10.7.210.11 sport=40008 dport=16443 [UNREPLIED] src=10.7.210.11 dst=172.28.49.135 sport=16443 dport=40008 mark=0 secctx=system_u:object_r:unlabeled_t:s0 zone=0 use=2
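To compare the two conntrack entries above field by field, a small parser can help. This is only a minimal sketch, assuming the /proc/net/nf_conntrack line layout shown above for TCP entries; parse_conntrack_line is a hypothetical helper name, not part of any existing tool.

```python
# Sample lines copied from the conntrack output in this report.
SAMPLE_LINES = [
    "ipv4 2 tcp 6 2 CLOSE src=172.28.49.135 dst=172.31.0.1 sport=40008 dport=443 "
    "src=172.31.0.1 dst=172.28.49.135 sport=443 dport=40008 [ASSURED] mark=0 "
    "secctx=system_u:object_r:unlabeled_t:s0 zone=0 use=2",
    "ipv4 2 tcp 6 63 SYN_SENT src=172.28.49.135 dst=10.7.210.11 sport=40008 dport=16443 "
    "[UNREPLIED] src=10.7.210.11 dst=172.28.49.135 sport=16443 dport=40008 mark=0 "
    "secctx=system_u:object_r:unlabeled_t:s0 zone=0 use=2",
]


def parse_conntrack_line(line):
    """Split one TCP conntrack line into state, original tuple and reply tuple.

    Assumption: the sixth field is the TCP state, and the first occurrence of
    src/dst/sport/dport is the original direction, the second the reply.
    """
    tokens = line.split()
    entry = {"proto": tokens[2], "state": tokens[5], "orig": {}, "reply": {}}
    for tok in tokens[6:]:
        if "=" not in tok:
            continue  # flags such as [ASSURED] or [UNREPLIED]
        key, value = tok.split("=", 1)
        if key not in ("src", "dst", "sport", "dport"):
            continue  # mark, secctx, zone, use are ignored here
        side = "orig" if key not in entry["orig"] else "reply"
        entry[side][key] = value
    return entry


entries = [parse_conntrack_line(line) for line in SAMPLE_LINES]
for e in entries:
    print(e["state"], e["orig"], e["reply"])
```

Both entries share the same client address and source port (172.28.49.135, 40008); only the destination differs.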

What you expected to happen:

Access stays stable when a pod accesses the apiserver through the ClusterIP and through the endpoint IP (real server) at the same time.

How to reproduce it (as minimally and precisely as possible):

Enter a pod and run these two commands at the same time:

1. while true; do curl -k https://{api-server-clusterip}:{api-server-service-port}/healthz; done

2. while true; do curl -k https://{api-server-realserver-ip}:{api-server-realserver-port}/healthz; done

Anything else we need to know?:

Environment:

- Kubernetes version (use kubectl version): 1.18

- Cloud provider or hardware configuration:

- OS (e.g: cat /etc/os-release): CentOS 7

- Kernel (e.g. uname -a): 3.10.0-957.el7.x86_64

- Install tools:

- Network plugin and version (if this is a network-related bug): calico

- Others:

About this issue

- State: closed

- Created 4 years ago

- Comments: 52 (26 by maintainers)

Hi guys!

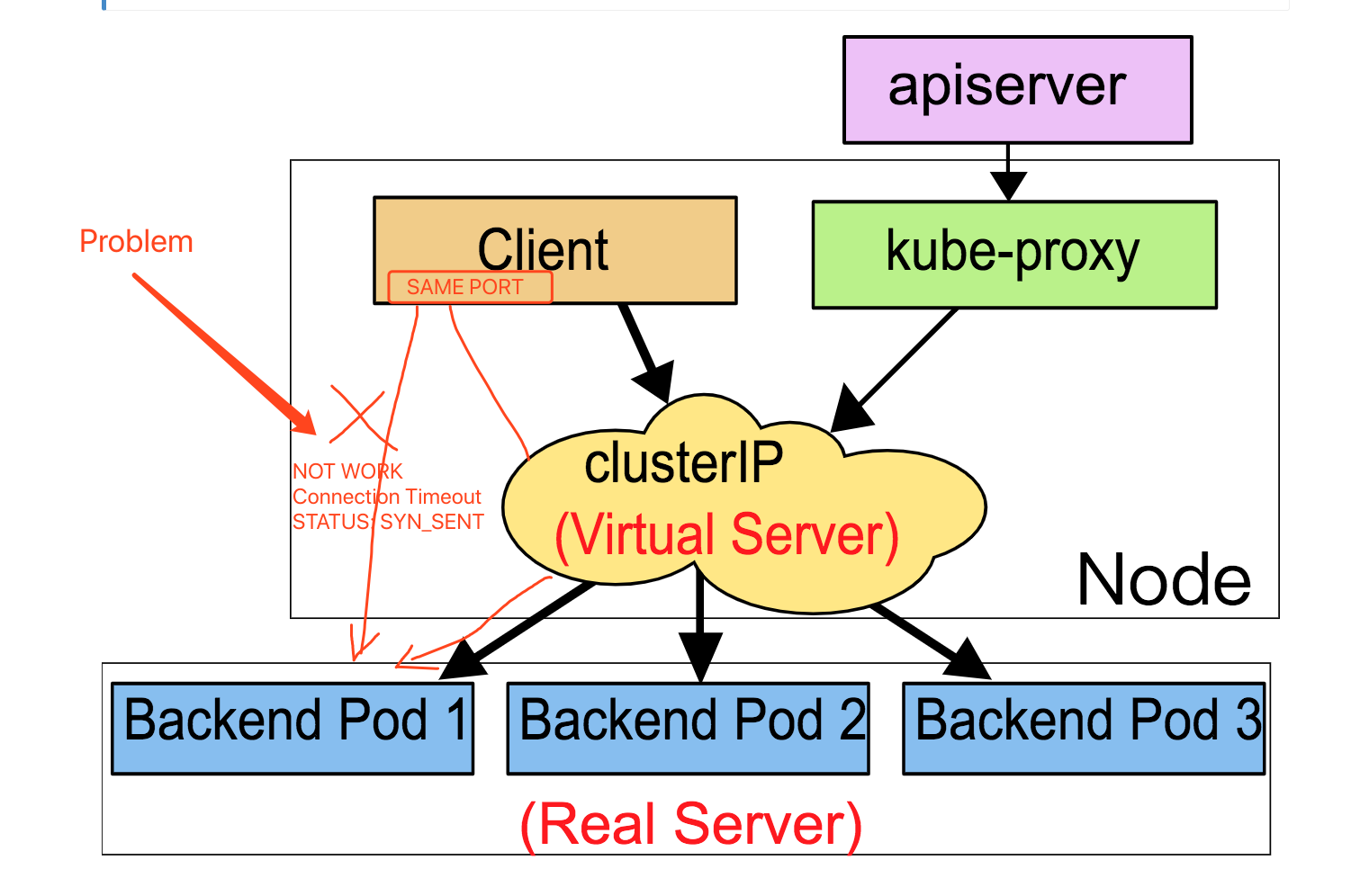

I reproduced this problem with kernel 5.9 and Kubernetes 1.23. In IPVS mode, a client using the same source port cannot access the virtual server (VS) and the real server (RS) at the same time. See below:

The pod “ep” is the RS of vip1.

The connection is then ESTABLISHED:

Then open a new terminal in the client pod and access the real server with the same source port:

After a while, Ncat reports TIMEOUT. Looking up the conntrack entry on the master:

The RS retries four times in SYN_RECV but gets no response, and then the connection is destroyed. I notice that the dport of the reply conntrack tuple is 3663 instead of the expected 5566. I don’t know why the port changed; I checked the kernel source code and did not find the logic that modifies the port (maybe I missed it).

On the client side (client pod), the connection stays in SYN_SENT:

On the server side (RS), the connection stays in SYN_RECV:

I tried to find more information by enabling net.netfilter.nf_conntrack_log_invalid:

I found that the kernel considers this reply packet invalid, so it drops it.

But the strange thing is that if I access the RS first and then the VS, it works. Both connections are established successfully:

I’m so confused. I agree this is a kernel bug rather than a kube-proxy bug, but I don’t know the root cause.

NOTE: iptables mode works well.

@uablrek Thanks for the troubleshooting! proxy-mode=iptables works for us, but we want to find the root cause, because we have the same problem when we use a load balancer based on IPVS NAT (such as keepalived).

Does IPVS NAT not support simultaneous access to the VIP and the real server IP from the same client port?

I suspect it does not. (I tested it with ‘nc’ and ‘ncat’, and the connection failed.) iptables works because it also does NAT on the port.
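The same-source-port test can also be scripted instead of using nc or ncat. The following is a minimal sketch, assuming a Linux client where SO_REUSEADDR allows two non-listening sockets to bind the same local port; connect_from_port is a hypothetical helper name, and it is not how nc or ncat implement their source-port option.

```python
import socket


def connect_from_port(dst_host, dst_port, src_port, timeout=5.0):
    """Open a TCP connection to (dst_host, dst_port) from a fixed local port.

    Assumption: Linux semantics, where SO_REUSEADDR lets a second
    non-listening socket bind a local port that is already in use.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.settimeout(timeout)
    try:
        sock.bind(("", src_port))           # pin the client source port
        sock.connect((dst_host, dst_port))  # raises on timeout or refusal
    except OSError:
        sock.close()
        raise
    return sock
```

With the addresses from the conntrack output earlier in this issue, the test would call connect_from_port("172.31.0.1", 443, 40008) and, while that socket is still open, connect_from_port("10.7.210.11", 16443, 40008). If the behaviour described here applies, the second call is expected to time out.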

@SerialVelocity

curl may not support two clients using the same local port.