kubernetes: CSI driver registration failed on windows node

What happened:

The CSI driver registration on windows nodes fail initially. The node-driver-registrar logs indicate there is no GetInfo RPC call from kubelet.

node-driver-registrar logs:

➜ kl secrets-store-csi-driver-windows-84mvr node-driver-registrar

I0825 18:26:59.728292 4116 main.go:113] Version: v2.2.0-0-gbd1ad626

I0825 18:27:01.414533 4116 main.go:137] Attempting to open a gRPC connection with: "unix://C:\\csi\\csi.sock"

I0825 18:27:01.414533 4116 connection.go:153] Connecting to unix://C:\csi\csi.sock

I0825 18:27:05.211270 4116 main.go:144] Calling CSI driver to discover driver name

I0825 18:27:05.211270 4116 connection.go:182] GRPC call: /csi.v1.Identity/GetPluginInfo

I0825 18:27:05.211270 4116 connection.go:183] GRPC request: {}

I0825 18:27:05.589304 4116 connection.go:185] GRPC response: {"name":"secrets-store.csi.k8s.io","vendor_version":"v0.2.0"}

I0825 18:27:05.589304 4116 connection.go:186] GRPC error: <nil>

I0825 18:27:05.589304 4116 main.go:154] CSI driver name: "secrets-store.csi.k8s.io"

I0825 18:27:06.186200 4116 node_register.go:52] Starting Registration Server at: /registration/secrets-store.csi.k8s.io-reg.sock

I0825 18:27:06.187475 4116 node_register.go:61] Registration Server started at: /registration/secrets-store.csi.k8s.io-reg.sock

I0825 18:27:06.248675 4116 node_register.go:83] Skipping healthz server because HTTP endpoint is set to: ""

On a working node, there are 2 additional log lines:

I0825 17:59:50.355019 2096 node_register.go:61] Registration Server started at: /registration/secrets-store.csi.k8s.io-reg.sock

I0825 17:59:50.395339 2096 node_register.go:83] Skipping healthz server because HTTP endpoint is set to: ""

Things to note:

- The issue resolves after the

node-driver-registrarcontainer is restarted a couple of times. - This issue is more common in Kubernetes v1.21+.

- The issue does not occur in Linux.

What you expected to happen:

- CSI Driver registers successfully on the first attempt

How to reproduce it (as minimally and precisely as possible):

- Deploy a Kubernetes v1.21+ cluster with windows node and deploy the Secrets Store CSI Driver.

Anything else we need to know?:

Me and @jsturtevant did a little bit of digging in the kubelet logs. We increased the log verbosity and found the following log

I0825 18:26:50.956072 7192 plugin_watcher.go:52] "Plugin Watcher Start" path="\\var\\lib\\kubelet\\plugins_registry"

I0825 18:26:50.956072 7192 plugin_watcher.go:101] "Ensuring Plugin directory" path="\\var\\lib\\kubelet\\plugins_registry"

I0825 18:26:50.957521 7192 plugin_watcher.go:149] "Ignoring file" path="\\var\\lib\\kubelet\\plugins_registry\\secrets-store.csi.k8s.io-reg.sock" mode="-rw-rw-rw-"

which seem to indicate kubelet is ignoring the socket path. The issue however does resolve after multiple restarts of the node-driver-registrar container.

Relevant issues:

- https://github.com/kubernetes-csi/node-driver-registrar/issues/143

- https://github.com/golang/go/issues/33357. This is the exact same code and issue we’re seeing fail for Windows.

Reference code:

/sig windows /sig storage

About this issue

- Original URL

- State: closed

- Created 3 years ago

- Reactions: 3

- Comments: 31 (26 by maintainers)

I believe this bug is to focus on root causing the problem.

I’ve been doing some tests on AKS

1.21.9. Their Windows nodes are currently using version 1809:The Kubelet log verbosity was increased (connect to the node, edit

C:\k\kubeclusterconfig.jsonand add--v=6as an additional command line parameter) and Kubelet restarted (eithertaskkill /f /im:kubelet.exeor connect remotely and restart the Kubelet service). Note to anyone coming across this (and future me): Microsoft doesn’t support this as an official method to increase the verbosity for Kubelet; also killing the Kubelet process is not something you’d want to do in a production environment.Here are the logs (on AKS Windows nodes, they can be found in

C:\k\kubelet.err) for a successful attempt to start the CSI secret store driver (first block) and for a failed one (2nd block). Pay special attention to the timestamps:I was initially thrown off by the whole

os.Statvsos.Lstattopic, but eventually discovered that on a Windows node neither function is used to determine whether the socket is valid or not, but insteadnet.Dialis used, just as can be seen here https://github.com/kubernetes/kubernetes/blob/4e10ffe45399b797fd4a0da31adc6272090e973c/pkg/kubelet/util/util_windows.go#L130-L145The code that invokes the function above - and that also prints the logs seen previously, is this one https://github.com/kubernetes/kubernetes/blob/4e10ffe45399b797fd4a0da31adc6272090e973c/pkg/kubelet/pluginmanager/pluginwatcher/plugin_watcher.go#L179-L186

The fact the Kubelet plugin watcher waits for new files to be created - and that it immediately attempts to use them as sockets - presents a problem depending on how the underlying implementation is done: the socket file could be created just fine, but the Unix Domain socket backing it might not yet be up and running. This lead me to the idea that the whole problem might be due to a race condition.

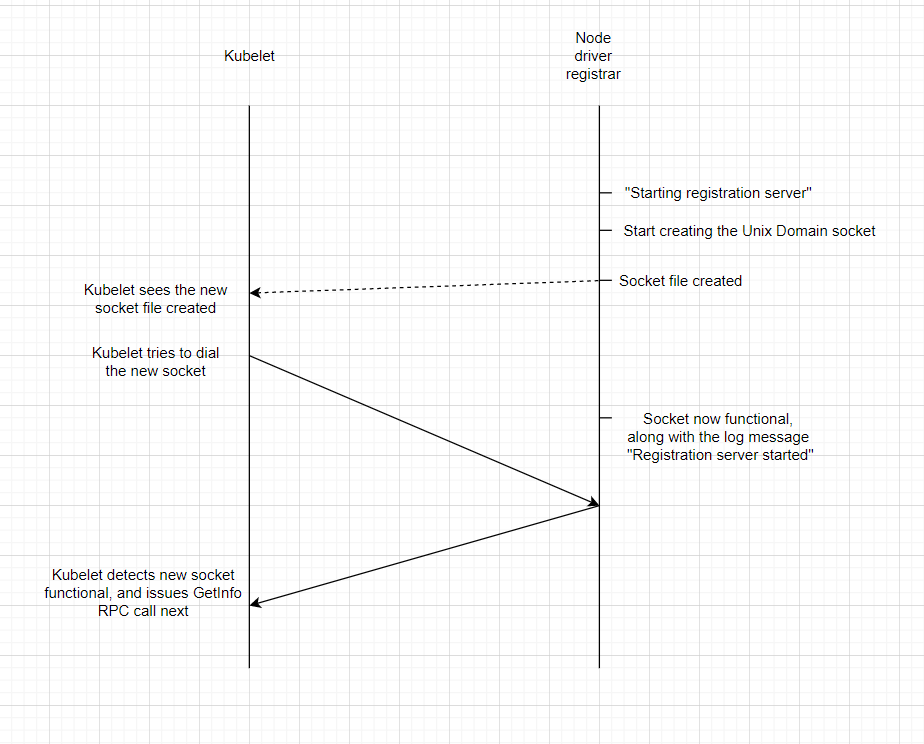

Here’s a diagram showing the case when everything works as expected:

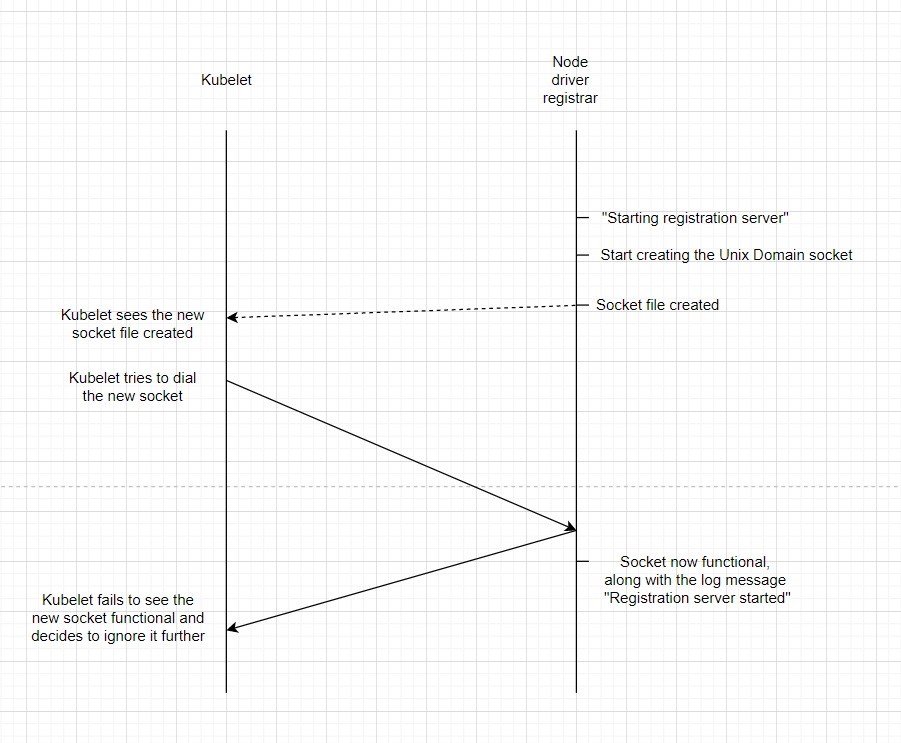

And this is the case when things will break:

Let’s look at the logs for the node driver registrar. The first output is for the successful outcome:

And here’s the failed attempt:

The events actually correspond to the Kubelet logs above, and since all were generated on the same node allows us to rely on the timestamps. It takes less than 1 ms to get from the “Starting Registration Server…” to “Registration Server started…” on the successful run, but about 3 ms for the same thing on the failed run. If you look at the Kubelet logs for the failed run, the fact that Kubelet decides to ignore the socket comes before the timestamp for the “Registration Server started…” in the node driver registrar logs, just as in the diagram above for the failed scenario.

I suspected at one point that the node driver registrar might be to blame, as it’s the one creating the socket in the first place and responsible for it. My concern was that it didn’t respond as expected on the socket, which would lead Kubelet to not use it. To test I created a simple daemon set to essentially mirror the check that the Kubelet does as seen here https://github.com/luckerby/checkSocketFile/blob/master/main.go#L61-L82

But the output suggests there’s no problem with the socket itself. Once it’s up and running, the troubleshooting code found the socket working as expected. The error lines below only occur when the CSI secrets store driver pod is restarted (which causes the respective socket to be unregistered briefly, in turn removing the file itself), but the “gap” between show a fully-functional socket:

@mauriciopoppe Yes, I’ll try to have something done in the next few days