kubeadm: Failed create pod sandbox. Error syncing pod. Pod sandbox changed, it will be killed and re-created.

Kubernetes version: 1.8.3, OS version: Debian stretch

`# kubectl describe po nginx-dm-66d87c478c-h7f9r`

Name: nginx-dm-66d87c478c-h7f9r

Namespace: default

Node: uy02-07/192.168.5.40

Start Time: Wed, 29 Nov 2017 02:14:17 -0500

Labels: name=nginx

pod-template-hash=2284370347

Annotations: kubernetes.io/created-by={"kind":"SerializedReference","apiVersion":"v1","reference":{"kind":"ReplicaSet","namespace":"default","name":"nginx-dm-66d87c478c","uid":"e959b0e0-d4d4-11e7-a260-f8db8846244f...

Status: Pending

IP:

Created By: ReplicaSet/nginx-dm-66d87c478c

Controlled By: ReplicaSet/nginx-dm-66d87c478c

Containers:

nginx:

Container ID:

Image: nginx:alpine

Image ID:

Port: 80/TCP

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/usr/share/nginx/html from gluster-dev-volume (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-pkg8v (ro)

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

Volumes:

gluster-dev-volume:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: glusterfs-nginx

ReadOnly: false

default-token-pkg8v:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-pkg8v

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.alpha.kubernetes.io/notReady:NoExecute for 300s

node.alpha.kubernetes.io/unreachable:NoExecute for 300s

Events:

| Type | Reason | Age | From | Message |
| ---- | ------ | ---- | ---- | ------- |
| Normal | Scheduled | 4m | default-scheduler | Successfully assigned nginx-dm-66d87c478c-h7f9r to uy02-07 |
| Normal | SuccessfulMountVolume | 4m | kubelet, uy02-07 | MountVolume.SetUp succeeded for volume "default-token-pkg8v" |
| Normal | SuccessfulMountVolume | 4m | kubelet, uy02-07 | MountVolume.SetUp succeeded for volume "gluster-dev-volume" |
| Warning | FailedCreatePodSandBox | 4m | kubelet, uy02-07 | Failed create pod sandbox. |
| Warning | FailedSync | 2m (x11 over 4m) | kubelet, uy02-07 | Error syncing pod |
| Normal | SandboxChanged | 2m (x11 over 4m) | kubelet, uy02-07 | Pod sandbox changed, it will be killed and re-created. |

The newly joined node always reports this error when running any pod.

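`# kubectl get no`

| NAME | STATUS | ROLES | AGE | VERSION |
| --- | --- | --- | --- | --- |
| uy02-07 | Ready | `<none>` | 1d | v1.8.3 |
| uy05-13 | Ready | master | 6d | v1.8.3 |
| uy08-07 | Ready | `<none>` | 6d | v1.8.3 |
| uy08-08 | Ready | `<none>` | 6d | v1.8.3 |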

Meanwhile, kube-proxy and calico-node are running normally on it.

`# kubectl get po --all-namespaces -o wide`

| NAMESPACE | NAME | READY | STATUS | RESTARTS | AGE | IP | NODE |
| --- | --- | --- | --- | --- | --- | --- | --- |
| default | glusterfs | 1/1 | Running | 0 | 21h | 192.168.122.39 | uy05-13 |
| default | nginx-dm-66d87c478c-2kvlj | 1/1 | Running | 0 | 15m | 192.168.122.214 | uy08-07 |
| default | nginx-dm-66d87c478c-h7f9r | 0/1 | ContainerCreating | 0 | 15m | `<none>` | uy02-07 |
| default | nginx-dm-66d87c478c-h9vht | 1/1 | Running | 0 | 15m | 192.168.122.147 | uy08-08 |
| default | nginx-dm-66d87c478c-nvw2f | 1/1 | Running | 0 | 15m | 192.168.122.40 | uy05-13 |
| kube-system | calico-etcd-cnwlt | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | calico-kube-controllers-55449f8d88-dffp5 | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | calico-node-d6v5n | 2/2 | Running | 4 | 6d | 192.168.5.105 | uy08-08 |
| kube-system | calico-node-fqxl2 | 2/2 | Running | 0 | 6d | 192.168.5.104 | uy08-07 |
| kube-system | calico-node-hbzd4 | 2/2 | Running | 6 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | calico-node-tcltp | 2/2 | Running | 0 | 1d | 192.168.5.40 | uy02-07 |
| kube-system | heapster-59ff54b574-ct5td | 1/1 | Running | 2 | 6d | 192.168.122.34 | uy05-13 |
| kube-system | heapster-59ff54b574-d7hwv | 1/1 | Running | 0 | 6d | 192.168.122.210 | uy08-07 |
| kube-system | heapster-59ff54b574-vxxbv | 1/1 | Running | 1 | 6d | 192.168.122.143 | uy08-08 |
| kube-system | kube-apiserver-uy05-13 | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | kube-apiserver-uy08-07 | 1/1 | Running | 0 | 6d | 192.168.5.104 | uy08-07 |
| kube-system | kube-apiserver-uy08-08 | 1/1 | Running | 1 | 6d | 192.168.5.105 | uy08-08 |
| kube-system | kube-controller-manager-uy05-13 | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | kube-controller-manager-uy08-07 | 1/1 | Running | 0 | 6d | 192.168.5.104 | uy08-07 |
| kube-system | kube-controller-manager-uy08-08 | 1/1 | Running | 1 | 6d | 192.168.5.105 | uy08-08 |
| kube-system | kube-dns-545bc4bfd4-4xf99 | 3/3 | Running | 0 | 6d | 192.168.122.209 | uy08-07 |
| kube-system | kube-dns-545bc4bfd4-8fv7p | 3/3 | Running | 3 | 6d | 192.168.122.142 | uy08-08 |
| kube-system | kube-dns-545bc4bfd4-jbj9t | 3/3 | Running | 6 | 6d | 192.168.122.35 | uy05-13 |
| kube-system | kube-proxy-8c59t | 1/1 | Running | 1 | 6d | 192.168.5.105 | uy08-08 |
| kube-system | kube-proxy-bdx5p | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | kube-proxy-dmzm4 | 1/1 | Running | 0 | 1d | 192.168.5.40 | uy02-07 |
| kube-system | kube-proxy-gnfcx | 1/1 | Running | 0 | 6d | 192.168.5.104 | uy08-07 |
| kube-system | kube-scheduler-uy05-13 | 1/1 | Running | 2 | 6d | 192.168.5.42 | uy05-13 |
| kube-system | kube-scheduler-uy08-07 | 1/1 | Running | 0 | 6d | 192.168.5.104 | uy08-07 |
| kube-system | kube-scheduler-uy08-08 | 1/1 | Running | 1 | 6d | 192.168.5.105 | uy08-08 |
| kube-system | kubernetes-dashboard-69c5c78645-4r8zw | 1/1 | Running | 2 | 6d | 192.168.122.36 | uy05-13 |
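In the pod listing, every pod is Running except the nginx replica on the newly joined node uy02-07. For longer listings, a small filter like the bash sketch below can pull out the non-Running pods and the nodes they sit on. It assumes the eight-column layout printed by kubectl 1.8 (`NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE`); newer kubectl versions add columns and may print restart counts that contain spaces, which would shift the fields. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# stuck_pods: read `kubectl get po --all-namespaces -o wide` output (kubectl
# 1.8 column layout) on stdin and print NAMESPACE NAME STATUS NODE for every
# pod whose STATUS is not Running. The header line is skipped.
stuck_pods() {
  awk 'NR > 1 && $4 != "Running" { print $1, $2, $4, $8 }'
}

# stuck_nodes: same input; print each node hosting a non-Running pod once.
stuck_nodes() {
  stuck_pods | awk '{ print $4 }' | sort -u
}
```

Usage: `kubectl get po --all-namespaces -o wide | stuck_nodes`. For the listing in this issue it would print only `uy02-07`, which points at node-local setup (such as CNI configuration) rather than a cluster-wide problem.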

There are 3 files in /etc/kubernetes:

tree

.

├── bootstrap-kubelet.conf

├── kubelet.conf

├── manifests

└── pki

└── ca.crt

It seems something is wrong with the bootstrap: the requestor for the new node is not system:node:NODENAME. Should I generate the node's certificates manually?

`# kubectl get csr`

| NAME | AGE | REQUESTOR | CONDITION |
| --- | --- | --- | --- |
| csr-kwlj5 | 6d | system:node:uy05-13 | Approved,Issued |
| csr-l9qkz | 6d | system:node:uy08-07 | Approved,Issued |
| csr-z9nmd | 6d | system:node:uy08-08 | Approved,Issued |
| node-csr-QA17kKepnsWFF0CdeHM-eyL0iGTazi_g15N0lJShbcw | 1d | system:bootstrap:2a8d9c | Approved,Issued |

/var/lib/kubelet# tree

.

├── pki

│ ├── kubelet-client.crt

│ ├── kubelet-client.key

│ ├── kubelet.crt

│ └── kubelet.key

├── plugins

│ └── kubernetes.io

│ └── glusterfs

│ └── gluster-dev-volume

│ └── nginx-dm-66d87c478c-h7f9r-glusterfs.log

└── pods

├── 1bd84367-d3e8-11e7-a260-f8db8846244f

│ ├── containers

│ │ ├── calico-node

│ │ │ └── 1f334834

│ │ └── install-cni

│ │ └── 28629784

│ ├── etc-hosts

│ ├── plugins

│ │ └── kubernetes.io~empty-dir

│ │ └── wrapped_calico-cni-plugin-token-s9hdp

│ │ └── ready

│ └── volumes

│ └── kubernetes.io~secret

│ └── calico-cni-plugin-token-s9hdp

│ ├── ca.crt -> ..data/ca.crt

│ ├── namespace -> ..data/namespace

│ └── token -> ..data/token

└── 1bd844d1-d3e8-11e7-a260-f8db8846244f

├── containers

│ └── kube-proxy

│ └── de681f49

├── etc-hosts

├── plugins

│ └── kubernetes.io~empty-dir

│ ├── wrapped_kube-proxy

│ │ └── ready

│ └── wrapped_kube-proxy-token-5lbfn

│ └── ready

└── volumes

├── kubernetes.io~configmap

│ └── kube-proxy

│ └── kubeconfig.conf -> ..data/kubeconfig.conf

└── kubernetes.io~secret

└── kube-proxy-token-5lbfn

├── ca.crt -> ..data/ca.crt

├── namespace -> ..data/namespace

└── token -> ..data/token

About this issue


- State: closed

- Created 7 years ago

- Comments: 22 (1 by maintainers)

Resolved. This was a network issue: there was another CNI network config in /etc/cni/net.d. After removing it and restarting kubelet, everything works. Closing.
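Why a leftover file matters: as far as I know, kubelet's CNI network plugin of this era reads `*.conf`, `*.conflist` and `*.json` files from its config directory (`/etc/cni/net.d` by default), sorts them by file name, and uses the first valid one, so a stray config from another network add-on can silently take precedence over Calico's. The bash sketch below lists the candidates in that byte-wise order and warns when there is more than one. The function names are hypothetical; the ordering rule is the assumption stated here, not something this thread confirms.

```bash
#!/usr/bin/env bash
# Sketch: inspect a CNI config directory the way kubelet is assumed to.
# Assumption: kubelet's CNI plugin considers *.conf, *.conflist and *.json
# files, sorts them by name and uses the first valid one.

# list_cni_configs DIR: print candidate config file names, byte-wise sorted.
list_cni_configs() {
  local dir="$1" f
  [ -d "$dir" ] || return 0
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # An unmatched glob stays literal, so keep only real files.
    if [ -f "$f" ]; then
      printf '%s\n' "${f##*/}"
    fi
  done | LC_ALL=C sort
}

# check_cni_dir DIR: report how many configs exist and which one sorts first.
# Returns 1 if there are none and 2 if there is more than one.
check_cni_dir() {
  local dir="$1" name first="" count=0
  while IFS= read -r name; do
    if [ "$count" -eq 0 ]; then
      first="$name"
    fi
    count=$((count + 1))
  done < <(list_cni_configs "$dir")

  if [ "$count" -eq 0 ]; then
    echo "no CNI config found in $dir"
    return 1
  elif [ "$count" -gt 1 ]; then
    echo "WARNING: $count CNI configs in $dir; kubelet would try $first first"
    return 2
  fi
  echo "one CNI config: $first"
}
```

Running `check_cni_dir /etc/cni/net.d` on the failing node and on a healthy one makes a stray config easy to spot.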

The issue is caused by a bug in the Weave network. I resolved it with the following command: `kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"` Reference: https://www.weave.works/docs/net/latest/kubernetes/kube-addon/

This resolved it on my side, so reinstall Kubernetes and try the command above.
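The `k8s-version` parameter in that command is simply the output of `kubectl version`, base64-encoded with all line breaks removed (GNU `base64` wraps its output at 76 characters by default, hence the `tr -d '\n'`), presumably so the service can return a manifest suited to the cluster's version. The bash sketch below builds the same URL from whatever version text is piped into it. The function name is hypothetical and, like the original command, it does not URL-escape the base64 characters.

```bash
#!/usr/bin/env bash
# weave_manifest_url: read version text on stdin and print the Weave Net
# manifest URL from the command above, with the text base64-encoded.
weave_manifest_url() {
  local encoded
  # base64 may wrap long output across lines; drop every newline so the
  # value is a single query-string token. No URL-escaping is done.
  encoded="$(base64 | tr -d '\n')"
  printf 'https://cloud.weave.works/k8s/net?k8s-version=%s\n' "$encoded"
}
```

With it, `kubectl apply -f "$(kubectl version | weave_manifest_url)"` sends the same bytes as the one-liner, since both encode the full output of `kubectl version`.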

Restarting the kubelet service worked for me too.

Should we delete the whole net.d directory? And how do we restart kubelet?
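On the first question, a more cautious option than deleting `net.d` is to move only the extra config file out of the directory, so it can be restored if it turns out to be needed. The bash sketch below does that; `park_cni_config`, `restore_cni_config` and the backup directory are hypothetical names, not part of any Kubernetes tooling.

```bash
#!/usr/bin/env bash
# park_cni_config FILE BACKUP_DIR: move one CNI config file out of kubelet's
# config directory instead of deleting it, without clobbering older backups.
park_cni_config() {
  local file="$1" backup_dir="$2"
  local name="${file##*/}"
  if [ ! -f "$file" ]; then
    echo "not a regular file: $file" >&2
    return 1
  fi
  mkdir -p "$backup_dir" || return 1
  if [ -e "$backup_dir/$name" ]; then
    echo "backup already exists: $backup_dir/$name" >&2
    return 1
  fi
  mv "$file" "$backup_dir/$name" || return 1
  echo "moved $name to $backup_dir"
}

# restore_cni_config NAME BACKUP_DIR CONF_DIR: put a parked file back.
restore_cni_config() {
  local name="$1" backup_dir="$2" conf_dir="$3"
  if [ -e "$conf_dir/$name" ]; then
    echo "refusing to overwrite $conf_dir/$name" >&2
    return 1
  fi
  mv "$backup_dir/$name" "$conf_dir/$name"
}
```

For example, `park_cni_config /etc/cni/net.d/<extra-file> /root/cni-backup`, then restart kubelet. Where kubeadm's packages run kubelet as a systemd service (as on Debian), that restart is `systemctl restart kubelet`.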

For any future adventurers, I strongly suggest you start with this step. Restarting `kubelet` is a non-destructive operation that has proven (in my experience, YMMV) to resolve many an odd issue. I also strongly suggest you not delete any network configuration files unless you know what you're doing, as you might inadvertently cause larger and even stranger issues. When in doubt, `docker restart kubelet` can't hurt 😃 The same generally goes for `kube-apiserver`, though I'm a little more hesitant with that one for reasons I'm not 100% sure of.

@thedarkfalcon I spun up a cluster with kops 1.9 (which is a fairly old kops version), and it turns out that the `portmap` CNI plugin is not installed by this kops version. The portmap plugin is needed by Weave Net 2.5.0, because from 2.5 on Weave uses a `.conflist` for its CNI config that refers to portmap. If you use one of the latest kops releases (1.10 or 1.11), it installs the portmap CNI plugin by default.
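The portmap explanation can be checked directly on a node: a `.conflist` chains several plugins, and each `type` it names (including an IPAM `type`) has to exist as an executable in the CNI binary directory (`/opt/cni/bin` by default); a missing one would make pod network setup, and therefore sandbox creation, fail. The bash sketch below prints the referenced types that have no matching binary. It extracts `"type"` values with `grep`/`sed` rather than a JSON parser, so it assumes simple, string-valued `type` fields; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# missing_cni_plugins CONF [BIN_DIR]: print every plugin "type" referenced in
# a CNI config file that has no executable of the same name in BIN_DIR.
missing_cni_plugins() {
  local conf="$1" bin_dir="${2:-/opt/cni/bin}" t
  # Crude extraction of each "type": "<name>" pair; a real tool would use a
  # JSON parser such as jq. Assumes every type value is a plain string.
  grep -o '"type"[[:space:]]*:[[:space:]]*"[^"]*"' "$conf" \
    | sed 's/.*"\([^"]*\)"$/\1/' \
    | sort -u \
    | while IFS= read -r t; do
        if [ ! -x "$bin_dir/$t" ]; then
          printf '%s\n' "$t"
        fi
      done
}
```

Pointed at Weave's conflist in `/etc/cni/net.d` (the exact file name is an assumption, e.g. `10-weave.conflist`), an output of `portmap` would match the kops 1.9 situation described above.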

Just for anyone arriving at this thread: I found that Weave Net 2.5.0 was breaking my clusters. I had an existing cluster which broke after applying updates (including Weave); existing pods kept functioning, but any new pods failed to create. I then created a new cluster, and exactly the same thing happened: I created the cluster using kops, applied Weave, applied the kube dashboard, and the dashboard failed to create, and the kube-dns pods wouldn't create either.

I then created a new cluster but specified the Weave 2.4.1 YAML instead of getting the latest, and the dashboard and kube-dns spun up fine. I then deleted Weave from my first cluster and installed Weave 2.4, and all the pods that had been stuck in ContainerCreating started to work.

Possibly it is a compatibility issue between the K8s version that kops deploys and the version Weave needs. However, Weave 2.5.0 has a DaemonSet for older K8s versions… so I don't know why I'm encountering problems.

Edit: Further details.

Kops version: Version 1.9.0 (git-cccd71e67)

Kubectl version: Client: v1.10.3, Server: v1.9.6

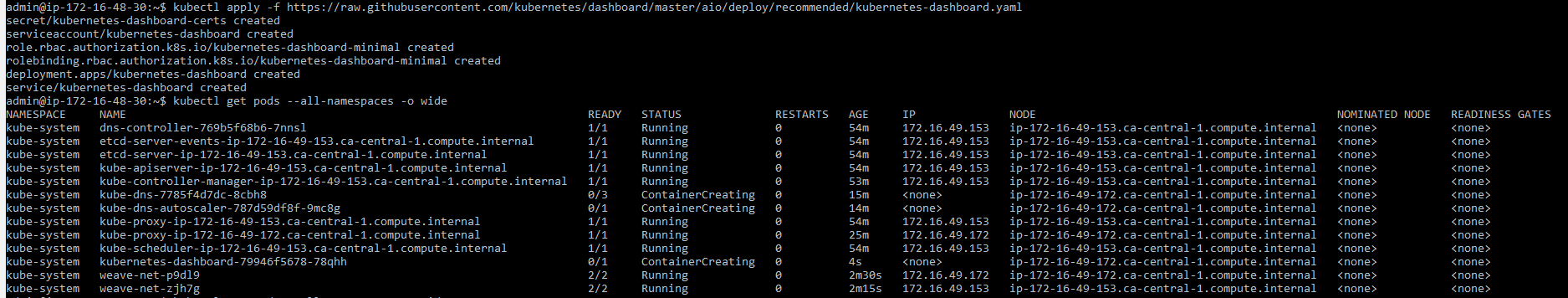

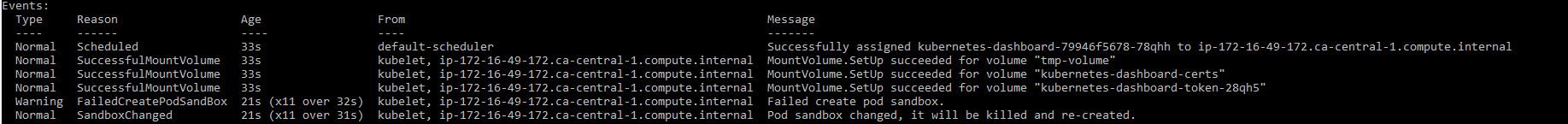

Base cluster after deploying weave 2.5.0.

Adding kube dashboard - obviously it fails to create container too.

Description of dashboard.

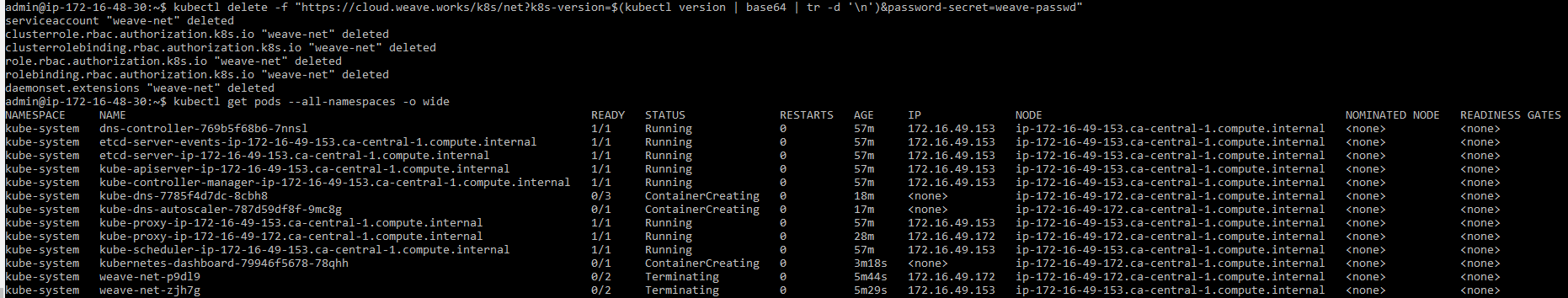

Deleting weavenet 2.5.0

Applying weavenet 2.4.1

All pods now successfully create.
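To confirm which Weave Net version a cluster is actually running (for example before and after swapping 2.5.0 for 2.4.1 as described above), one can read the image tags of the Weave DaemonSet. Assuming the standard manifest, which creates a DaemonSet named `weave-net` in `kube-system`, `kubectl -n kube-system get daemonset weave-net -o jsonpath='{.spec.template.spec.containers[*].image}'` prints the images separated by spaces; the hypothetical helpers below turn that into image/tag pairs. Digest-pinned references (`@sha256:...`) are not handled.

```bash
#!/usr/bin/env bash
# image_tag REF: print the tag of an image reference such as
# "weaveworks/weave-kube:2.5.0", or "latest" when no tag is given.
# Digest references (name@sha256:...) are not handled.
image_tag() {
  local last="${1##*/}"   # drop registry host/port and repository path
  case "$last" in
    *:*) printf '%s\n' "${last##*:}" ;;
    *)   printf 'latest\n' ;;
  esac
}

# image_tags: read space- or newline-separated image refs on stdin (e.g.
# kubectl jsonpath output) and print "REF TAG" for each one.
image_tags() {
  local ref
  tr ' ' '\n' | while IFS= read -r ref || [ -n "$ref" ]; do
    [ -n "$ref" ] || continue
    printf '%s %s\n' "$ref" "$(image_tag "$ref")"
  done
}
```

Piping the jsonpath output above into `image_tags` shows at a glance whether a node set is still on the 2.5.0 images.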

@sagarfale I noticed this is the command used to install Weave (see https://kubernetes.io/docs/setup/independent/create-cluster-kubeadm/), but reinstalling Weave doesn't help…