kops: `kops validate cluster` always fails with "machine ... has not yet joined cluster" for k8s 1.10.x, 1.11.0

- What `kops` version are you running? The command `kops version` will display this information.

Output of `kops version`: `Version 1.10.0-alpha.1 (git-7f70266f5)` (but the same results on 1.9.0 and 1.9.1)

- What Kubernetes version are you running? `kubectl version` will print the version if a cluster is running, or provide the Kubernetes version specified as a `kops` flag.

- What cloud provider are you using?

GCP

- What commands did you run? What is the simplest way to reproduce this issue?

`env KOPS_FEATURE_FLAGS=AlphaAllowGCE kops create cluster <redacted> --zones=us-central1-a --state=<redacted> --project=$(gcloud config get-value project) --kubernetes-version=1.11.0`

`env KOPS_FEATURE_FLAGS=AlphaAllowGCE kops update cluster c1.fmil.k8s.local --yes`

`kops validate cluster`

- What happened after the commands executed?

`kops validate cluster` never validates successfully. (The commands above use Kubernetes 1.11.0, but 1.10.3 behaves the same.)

The error messages look like this:

    machine "https://www.googleapis.com/compute/<redacted>/master-us-central1-a-016z" has not yet joined cluster
    machine "https://www.googleapis.com/compute/<redacted>/nodes-bq8c" has not yet joined cluster
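Because the cluster otherwise works, it can help to check whether the machines named in these errors are really missing from Kubernetes. The Python sketch below is a hypothetical helper, not part of kops: it extracts the machine IDs from the validation output, reduces each GCE instance URL to its last path segment, and checks that name against a list of node names. It assumes the node names come from `kubectl get nodes` and that, on GCE, they match the instance names; `unjoined_machines`, `short_name` and `compare_with_nodes` are illustrative names. A machine that shows up in both lists would suggest validation is failing to pair the instance with its node, rather than the node actually being absent.

```python
import re

# Matches: machine "<id>" has not yet joined cluster
# Accepts straight or curly double quotes, since pasted output often mixes them.
NOT_JOINED = re.compile(
    r'machine ["\u201c](?P<machine>[^"\u201d]+)["\u201d] has not yet joined cluster'
)


def unjoined_machines(validate_output: str) -> list[str]:
    """Return the machine IDs named in 'has not yet joined cluster' messages."""
    return [m.group("machine") for m in NOT_JOINED.finditer(validate_output)]


def short_name(machine_id: str) -> str:
    """Reduce a GCE instance URL to its last path segment (the instance name).

    IDs without a slash, such as AWS "i-..." IDs, are returned unchanged.
    """
    return machine_id.rstrip("/").rsplit("/", 1)[-1]


def compare_with_nodes(validate_output: str, node_names: list[str]) -> dict[str, bool]:
    """Map each unjoined machine's short name to whether a node of that name exists."""
    registered = set(node_names)
    return {
        short_name(m): short_name(m) in registered
        for m in unjoined_machines(validate_output)
    }


if __name__ == "__main__":
    output = (
        'machine "https://www.googleapis.com/compute/<redacted>/master-us-central1-a-016z" '
        "has not yet joined cluster\n"
        'machine "https://www.googleapis.com/compute/<redacted>/nodes-bq8c" '
        "has not yet joined cluster\n"
    )
    # Hypothetical node list, as if read from `kubectl get nodes`.
    nodes = ["master-us-central1-a-016z", "nodes-bq8c"]
    print(compare_with_nodes(output, nodes))
    # {'master-us-central1-a-016z': True, 'nodes-bq8c': True}
```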

- What did you expect to happen?

The cluster should eventually validate successfully, typically within 5 minutes.

I observed, however, that even though the cluster does not validate, it works fine: `kubectl version` works, as does every other operation I tried on the cluster.
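To tell "slow to validate" apart from "never validates", validation can be polled against a deadline matching the roughly 5 minutes expected here. This is a minimal Python sketch, assuming `kops` is on `PATH`, the state store is configured (for example via `KOPS_STATE_STORE`), kops can determine which cluster to validate as in the report's own `kops validate cluster` invocation, and a failed validation exits with a non-zero status. `run_kops_validate` and `wait_for_validation` are hypothetical names; the clock and sleep functions are injectable so the loop can be exercised without a real cluster.

```python
import subprocess
import time
from typing import Callable


def run_kops_validate() -> bool:
    """Run `kops validate cluster` once; True if it exits with status 0."""
    result = subprocess.run(
        ["kops", "validate", "cluster"], capture_output=True, text=True
    )
    return result.returncode == 0


def wait_for_validation(
    check: Callable[[], bool] = run_kops_validate,
    timeout_s: float = 300.0,  # the ~5 minutes expected in this report
    interval_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll `check` until it succeeds or `timeout_s` elapses; return the outcome."""
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        remaining = deadline - clock()
        if remaining <= 0:
            return False
        # Never sleep past the deadline.
        sleep(min(interval_s, remaining))
```

With the behaviour described in this report, `wait_for_validation()` would return `False` after about five minutes even though `kubectl` keeps working.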

- Please provide your cluster manifest.

Execute `kops get --name my.example.com -o yaml` to display your cluster manifest. You may want to remove your cluster name and other sensitive information.

    apiVersion: kops/v1alpha2
    kind: Cluster
    metadata:
      creationTimestamp: 2018-06-20T16:14:34Z
      name: c2.fmil.k8s.local
    spec:
      api:
        loadBalancer:
          type: Public
      authorization:
        rbac: {}
      channel: stable
      cloudProvider: gce
      configBase: gs://redacted
      etcdClusters:
      - etcdMembers:
        - instanceGroup: master-us-central1-a
          name: a
        name: main
      - etcdMembers:
        - instanceGroup: master-us-central1-a
          name: a
        name: events
      iam:
        allowContainerRegistry: true
        legacy: false
      kubernetesApiAccess:
      - 0.0.0.0/0
      kubernetesVersion: 1.11.0
      masterPublicName: api.c2.fmil.k8s.local
      networking:
        kubenet: {}
      nonMasqueradeCIDR: redacted
      project: redacted
      sshAccess:
      - 0.0.0.0/0
      subnets:
      - name: us-central1
        region: us-central1
        type: Public
      topology:
        dns:
          type: Public
        masters: public
        nodes: public

- Please run the commands with the most verbose logging by adding the `-v 10` flag. Paste the logs into this report, or in a gist and provide the gist link here.

n/a

- Anything else we need to know?

n/a

About this issue


- State: closed

- Created 6 years ago

- Reactions: 21

- Comments: 18 (3 by maintainers)

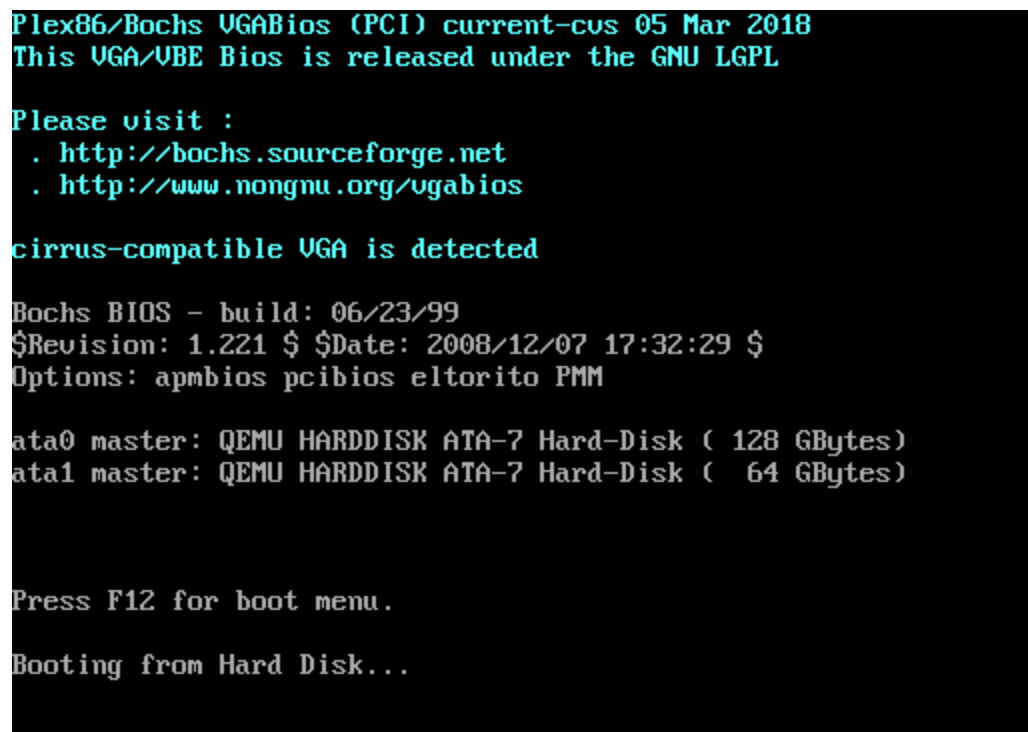

I have the same issue too; only the master can be accessed. The screenshot shows what the node instance reports.

I've experienced the same issue. Details, from my perspective:

- `machine "i-xxxxxxxxxxxxxxxx" has not yet joined cluster`
- `kops rolling-update cluster` SUCCEEDS in validating the cluster in between node rolls.

I am currently seeing a similar issue. The issue appeared when I switched to using the `amazon-vpc-routed-eni` option. Full command:

In the kubelet logs on the nodes I see this message:

`kops` version is `1.10.1`. Downgrading to Kubernetes `1.10.11` appears to make the issue disappear.

Thank you @mlapointe22. This fixed my problem for 2 days 🗡️

Hey All!

I had this issue and what fixed it for me was to hop into Route 53.

`kops` had left the default bootstrap IP (203.0.113.123 in my case) in the `api.internal` A record for the master node. As soon as I updated the `api.internal` A record in Route 53 to point to the private IP of the master node, the workers started joining the cluster without any problem.
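The same Route 53 fix can be scripted instead of edited in the console. The Python sketch below builds a Route 53 change batch that UPSERTs the A record to the master's private IP, and flags a record whose current values still include the placeholder address from this comment. The record name `api.internal.<cluster name>`, the 60-second TTL, and the example cluster name and IP are assumptions; `needs_fix` and `upsert_a_record` are hypothetical helper names.

```python
import ipaddress
import json

# Placeholder address reported in the comment above. It lies in the
# TEST-NET-3 documentation range (RFC 5737), so it should never be a
# real master's IP.
PLACEHOLDER_IP = "203.0.113.123"


def needs_fix(record_values: list[str]) -> bool:
    """True if the A record's current values still include the placeholder."""
    return PLACEHOLDER_IP in record_values


def upsert_a_record(record_name: str, private_ip: str, ttl: int = 60) -> dict:
    """Build a Route 53 change batch pointing record_name at private_ip."""
    ipaddress.ip_address(private_ip)  # raises ValueError on a malformed address
    if private_ip == PLACEHOLDER_IP:
        raise ValueError("refusing to write the placeholder IP back")
    if not record_name.endswith("."):
        record_name += "."  # fully qualified form; Route 53 accepts either
    return {
        "Comment": "Point api.internal at the master's private IP",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": private_ip}],
                },
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical cluster name and master private IP; substitute your own.
    batch = upsert_a_record("api.internal.mycluster.example.com", "172.20.32.10")
    print(json.dumps(batch, indent=2))
```

Redirecting the printed JSON to `change-batch.json` and running `aws route53 change-resource-record-sets --hosted-zone-id <zone-id> --change-batch file://change-batch.json` applies the change, where `<zone-id>` is the hosted zone holding the cluster's records.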