kubeflow: Google Cloud SDK in GKE Kubeflow fails with "ERROR: timed out"

/kind bug

What steps did you take and what happened:

- Deploy Kubeflow 0.7 on GKE

- Run a pod (Docker image `google/cloud-sdk:273.0.0`)

- Start using the Google Cloud SDK (`gsutil`)

- A few hours later, `gsutil` and `gcloud` start to time out

The timeout error does not occur at first but starts after a few hours. Once it starts, it keeps occurring. I did some research but couldn't find the cause. If anyone knows the reason, please help.

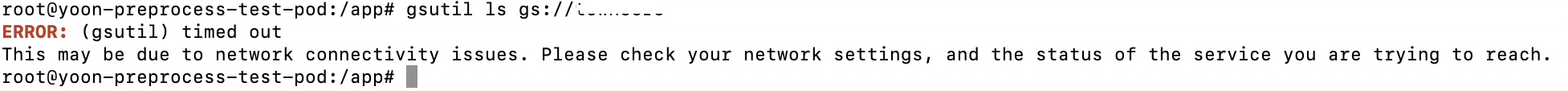

`gsutil` timeout message:

```
yoon@daangn-gpu2:~$ k exec -it -n kubeflow yoon-preprocess-test-pod /bin/bash
root@yoon-preprocess-test-pod:/app# gsutil ls gs://<GS_URL>
ERROR: (gsutil) timed out
This may be due to network connectivity issues. Please check your network settings, and the status of the service you are trying to reach.
```

`gcloud` timeout message:

```
yoon@daangn-gpu2:~$ k exec -it -n kubeflow yoon-preprocess-test-pod /bin/bash
root@yoon-preprocess-test-pod:/app# gcloud auth list
ERROR: (gcloud.auth.list) timed out
This may be due to network connectivity issues. Please check your network settings, and the status of the service you are trying to reach.
```
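On GKE, `gcloud` and `gsutil` normally get the pod's credentials from the GCE metadata server (served by `gke-metadata-server` when Workload Identity is enabled), so a plausible explanation for both timeouts is that this server stops answering. A minimal Python sketch to check that from inside the pod, assuming the standard `metadata.google.internal` endpoint and the `Metadata-Flavor: Google` header; `probe_metadata` is a hypothetical helper, not part of the Cloud SDK:

```python
import urllib.request
from typing import Tuple

# Standard GCE/GKE metadata endpoint that returns the service account email.
# Assumption: this runs in a pod on GKE, where the hostname resolves to the
# metadata server (gke-metadata-server when Workload Identity is enabled).
DEFAULT_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/email"
)


def probe_metadata(url: str = DEFAULT_URL, timeout: float = 5.0) -> Tuple[bool, str]:
    """Make one metadata request and return (succeeded, detail)."""
    request = urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})
    # Metadata requests should never go through an HTTP proxy, so ignore proxy env vars.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request, timeout=timeout) as response:
            return True, response.read().decode("utf-8").strip()
    except OSError as exc:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False, f"{type(exc).__name__}: {exc}"


if __name__ == "__main__":
    ok, detail = probe_metadata()
    print("metadata server OK:" if ok else "metadata server FAILED:", detail)
```

Run it with `python3` inside the affected pod (for example after `k exec`), assuming the image provides Python 3. If it fails while `gsutil` times out, the problem is more likely the node's metadata server than the pod's general network access.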

What did you expect to happen: The Google Cloud SDK keeps working without errors.


Environment:

- Kubeflow version: build version 0.7.0
- kfctl version: kfctl v0.7.0
- Kubernetes platform: GKE
- Kubernetes version:
  - Client Version: `version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.6", GitCommit:"7015f71e75f670eb9e7ebd4b5749639d42e20079", GitTreeState:"clean", BuildDate:"2019-11-13T11:20:18Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"linux/amd64"}`
  - Server Version: `version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.9-gke.2", GitCommit:"0f206d1d3e361e1bfe7911e1e1c686bc9a1e0aa5", GitTreeState:"clean", BuildDate:"2019-11-25T19:35:58Z", GoVersion:"go1.12.12b4", Compiler:"gc", Platform:"linux/amd64"}`
- OS (e.g. from `/etc/os-release`), inside the pod: `NAME="Ubuntu" VERSION="16.04.5 LTS (Xenial Xerus)" ID=ubuntu ID_LIKE=debian PRETTY_NAME="Ubuntu 16.04.5 LTS" VERSION_ID="16.04" HOME_URL="http://www.ubuntu.com/" SUPPORT_URL="http://help.ubuntu.com/" BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/" VERSION_CODENAME=xenial`


About this issue


- State: closed

- Created 5 years ago

- Reactions: 1

- Comments: 17 (4 by maintainers)

We just filed a ticket with Google Support about this today. We see this problem on 1.15.4-gke.22 but not on ~~1.14.9-gke.2~~ 1.14.8-gke.18. We are using Workload Identity for our pipelines. The `gke-metadata-server` DaemonSet pods report:

We are able to temporarily resolve the issue by cycling the `gke-metadata-server` DaemonSet pods.
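The temporary workaround of cycling the `gke-metadata-server` pods can be scripted with the official `kubernetes` Python client. A sketch, assuming the DaemonSet runs in `kube-system` and its pods carry the label `k8s-app=gke-metadata-server` (verify with `kubectl -n kube-system get pods --show-labels` before relying on it); `cycle_metadata_pods` is a hypothetical helper:

```python
from typing import List

# Assumptions, not confirmed in this thread: namespace and pod label of the
# GKE-managed gke-metadata-server DaemonSet.
NAMESPACE = "kube-system"
LABEL_SELECTOR = "k8s-app=gke-metadata-server"


def cycle_metadata_pods(core_v1, namespace: str = NAMESPACE,
                        label_selector: str = LABEL_SELECTOR) -> List[str]:
    """Delete every matching pod so the DaemonSet controller recreates it.

    `core_v1` is a kubernetes.client.CoreV1Api (or any object exposing the
    same two methods), which keeps this function testable without a cluster.
    Returns the names of the pods that were deleted.
    """
    pods = core_v1.list_namespaced_pod(namespace, label_selector=label_selector)
    deleted = []
    for pod in pods.items:
        core_v1.delete_namespaced_pod(pod.metadata.name, namespace)
        deleted.append(pod.metadata.name)
    return deleted


if __name__ == "__main__":
    # Requires `pip install kubernetes` and a kubeconfig for the affected cluster.
    from kubernetes import client, config

    config.load_kube_config()
    names = cycle_metadata_pods(client.CoreV1Api())
    print("deleted:", ", ".join(names) or "(no pods matched)")
```

Under the same label assumption, the shell equivalent is `kubectl -n kube-system delete pods -l k8s-app=gke-metadata-server`. Either way, the metadata server restarts on every node at once, so workloads may see brief credential errors until the new pods are ready.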

Related Google Ticket: https://issuetracker.google.com/issues/146622472

@louisvernon I fixed it by downgrading the cluster to version 1.14.8-gke.18. I will keep following the ticket you filed and will upgrade the cluster later. Thanks.

I got the same issue: https://github.com/kubeflow/pipelines/issues/2773

I think this is a GKE issue: Workload Identity is currently unstable.