kubeflow: Error in Pipeline section on dashboard: `upstream connect error or disconnect/reset before headers`

/kind bug

What steps did you take and what happened: After successfully deploying Kubeflow on Azure (I had to change the YAML file for installation; more details in #5246), I ran the following command to access the Kubeflow dashboard:

kubectl port-forward svc/istio-ingressgateway -n istio-system 8080:80

I’m able to visit the Dashboard page, and I can open the Home, Notebook Servers, Katib and Artifact Store sections. But when I try to visit the Pipelines section, I get the following error:

upstream connect error or disconnect/reset before headers. reset reason: connection failure

What did you expect to happen:

I expect to be able to visit the Pipelines section and run some example pipelines.

Anything else you would like to add: YAML file used in the deployment: kfctl_k8s_istio.v1.1.0.yaml.txt

$ kubectl get all -n anonymous
NAME                                                   READY   STATUS    RESTARTS   AGE
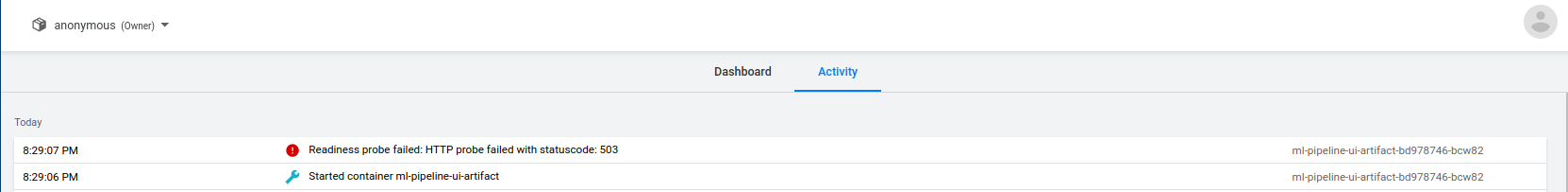

pod/ml-pipeline-ui-artifact-bd978746-bcw82             2/2     Running   0          29m
pod/ml-pipeline-visualizationserver-865c7865bc-nw2vb   2/2     Running   0          29m

NAME                                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/ml-pipeline-ui-artifact           ClusterIP   10.0.208.227   <none>        80/TCP     29m
service/ml-pipeline-visualizationserver   ClusterIP   10.0.13.172    <none>        8888/TCP   29m

NAME                                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ml-pipeline-ui-artifact           1/1     1            1           29m
deployment.apps/ml-pipeline-visualizationserver   1/1     1            1           29m

NAME                                                         DESIRED   CURRENT   READY   AGE
replicaset.apps/ml-pipeline-ui-artifact-bd978746             1         1         1       29m
replicaset.apps/ml-pipeline-visualizationserver-865c7865bc   1         1         1       29m

$ kubectl logs -n anonymous ml-pipeline-ui-artifact-bd978746-bcw82

Error from server (BadRequest): a container name must be specified for pod ml-pipeline-ui-artifact-bd978746-bcw82, choose one of: [ml-pipeline-ui-artifact istio-proxy] or one of the init containers: [istio-init]

$ kubectl logs -n anonymous pod/ml-pipeline-visualizationserver-865c7865bc-nw2vb

Error from server (BadRequest): a container name must be specified for pod ml-pipeline-visualizationserver-865c7865bc-nw2vb, choose one of: [ml-pipeline-visualizationserver istio-proxy] or one of the init containers: [istio-init]
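Both `kubectl logs` calls fail only because each pod runs two containers (the application container and the `istio-proxy` sidecar, plus the `istio-init` init container), so `kubectl logs` needs `-c` to pick one. A minimal sketch; the helper name `pipeline_logs` and the `KUBECTL` override (used only as a dry-run hook) are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the logs of one container in a multi-container pod.
# KUBECTL can be overridden (e.g. KUBECTL=echo) to print the command instead of running it.
pipeline_logs() {
  local namespace="$1" pod="$2" container="$3"
  "${KUBECTL:-kubectl}" logs -n "$namespace" "$pod" -c "$container"
}

# Application container of the artifact UI pod listed above:
#   pipeline_logs anonymous ml-pipeline-ui-artifact-bd978746-bcw82 ml-pipeline-ui-artifact
# Its Istio sidecar:
#   pipeline_logs anonymous ml-pipeline-ui-artifact-bd978746-bcw82 istio-proxy
```

`kubectl logs` also accepts `--all-containers=true` to print every container's log in one call.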

Environment:

- Kubeflow version: build version v1beta1

- kfctl version: kfctl v1.1.0-0-g9a3621e

- Kubernetes platform: Azure Kubernetes Service

- Kubernetes version (use `kubectl version`):

Client Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.13", GitCommit:"39a145ca3413079bcb9c80846488786fed5fe1cb", GitTreeState:"clean", BuildDate:"2020-07-15T16:18:19Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.13", GitCommit:"1da71a35d52fa82847fd61c3db20c4f95d283977", GitTreeState:"clean", BuildDate:"2020-07-15T21:59:26Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}

- OS (e.g. from `/etc/os-release`): 18.04.5 LTS (Bionic Beaver)

About this issue

- State: closed

- Created 4 years ago

- Reactions: 6

- Comments: 25 (5 by maintainers)

Suspecting an mTLS issue, I disabled mTLS on both the ml-pipeline and ml-pipeline-ui destination rules as follows:

$ kubectl edit destinationrule -n kubeflow ml-pipeline

Modify the tls.mode (the last line) from ISTIO_MUTUAL to DISABLE.

Do the same for the ml-pipeline-ui destination rule.
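The two interactive edits can also be done non-interactively with `kubectl patch`. This is a sketch assuming the DestinationRules are named `ml-pipeline` and `ml-pipeline-ui` in the `kubeflow` namespace, as in the steps here; the function name and the `KUBECTL` dry-run hook are hypothetical. Custom resources such as DestinationRule do not support strategic merge patches, hence `--type=merge`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: non-interactive version of the two `kubectl edit` steps.
# Sets spec.trafficPolicy.tls.mode to DISABLE with a JSON merge patch.
# KUBECTL can be overridden (e.g. KUBECTL=echo) for a dry run.
disable_pipeline_mtls() {
  local namespace="${1:-kubeflow}"
  local patch='{"spec":{"trafficPolicy":{"tls":{"mode":"DISABLE"}}}}'
  local dr
  for dr in ml-pipeline ml-pipeline-ui; do
    "${KUBECTL:-kubectl}" patch destinationrule "$dr" -n "$namespace" --type=merge -p "$patch"
  done
}
```

As later comments in this thread point out, this is a workaround rather than a root-cause fix.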

Run

$ ./istioctl authn tls-check <istio-ingressgateway-pod> -n istio-system | grep pipeline

to verify that the client is HTTP for both the ml-pipeline and ml-pipeline-ui authentication policies (it was mTLS before the changes).

Now accessing the Pipelines UI works.

I suspect this is a configuration issue in the install that needs to be fixed.

Try the solution suggested above first; it might save you a lot of time. It worked for me. Thanks @danishsamad!

This also resolved the issue on Kubeflow v1.2 (kfctl_k8s_istio.v1.2.0.yaml). The deployment is on Azure AKS:

kubectl edit destinationrule -n kubeflow ml-pipeline

Modify the tls.mode (the last line) from ISTIO_MUTUAL to DISABLE

kubectl edit destinationrule -n kubeflow ml-pipeline-ui

Modify the tls.mode (the last line) from ISTIO_MUTUAL to DISABLE
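To confirm the edits took effect without opening an editor, the current `tls.mode` can be read back with a JSONPath query. A minimal sketch; the function name and the `KUBECTL` override are hypothetical, and the namespace defaults to `kubeflow` as in the commands above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<destinationrule> <tls.mode>" for both pipeline rules.
# KUBECTL can be overridden with a stub for testing.
show_pipeline_tls_mode() {
  local namespace="${1:-kubeflow}" dr
  for dr in ml-pipeline ml-pipeline-ui; do
    printf '%s ' "$dr"
    "${KUBECTL:-kubectl}" get destinationrule "$dr" -n "$namespace" \
      -o jsonpath='{.spec.trafficPolicy.tls.mode}'
    printf '\n'
  done
}
```

Before the change both lines should report `ISTIO_MUTUAL`; afterwards, `DISABLE`.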

@danishsamad It could be some kind of race condition where your istio admission webhook wasn’t ready when you deployed the kubeflow components.

You can confirm this by checking whether the istio-proxy container is missing from the ml-pipeline-ui pod.

But if your Istio is up and running, you almost certainly just need to recreate/restart all the pipeline resources.
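Sidecar injection happens when a pod is created, so restarting the pipeline Deployments is one way to recreate their pods once injection works. A sketch assuming that "the pipeline stuff" means the Deployments in `kubeflow` whose names start with `ml-pipeline` (that name filter, the function name and the `KUBECTL` override are all hypothetical); `kubectl rollout restart` needs kubectl 1.15 or later, and the reporter's client is 1.16.13:

```shell
#!/usr/bin/env bash
# Hypothetical helper: restart every Deployment whose name starts with "ml-pipeline"
# so its pods are recreated (and get istio-proxy injected if injection now works).
# The name prefix is an assumption; other pipeline components may need restarting too.
restart_pipeline_deployments() {
  local namespace="${1:-kubeflow}" deploy
  for deploy in $("${KUBECTL:-kubectl}" get deployment -n "$namespace" -o name); do
    case "$deploy" in
      */ml-pipeline*) "${KUBECTL:-kubectl}" rollout restart "$deploy" -n "$namespace" ;;
    esac
  done
}
```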

Hi all, the solution to this is simple: just make sure your Namespace/kubeflow has the right labels. PLEASE NOTE: you will need to recreate all resources in Namespace/kubeflow after making this change.

I have a similar setup and am facing the exact same issue. To debug, I enabled access logs on my Istio ingress gateway pod, and I get this TLS error when I access the pipelines page:

[2020-09-03T14:34:03.746Z] "GET /pipeline/ HTTP/1.1" 503 UF,URX "-" "TLS error: 268435703:SSL routines:OPENSSL_internal:WRONG_VERSION_NUMBER" 0 91 76 - "10.244.1.34" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36" "e913d047-57e2-40a8-b1b0-b5a74b523aa9" "localhost:8080" "10.244.1.29:3000" outbound|80||ml-pipeline-ui.kubeflow.svc.cluster.local - 127.0.0.1:80 127.0.0.1:53600

Also, when trying to upload a pipeline from a Kale notebook, I got the same “upstream connect error…”.
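The useful fields in that access-log line are the status `503`, the Envoy response flags `UF,URX` (upstream connection failure; upstream retry limit exceeded) and the upstream TLS failure reason. `WRONG_VERSION_NUMBER` is what a TLS client reports when the peer answers in plaintext, which is consistent with the gateway originating mTLS to an ml-pipeline-ui pod that has no sidecar. A sketch that extracts these fields, assuming lines in the same format as the one above (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the status code, Envoy response flags and upstream
# failure reason from access-log lines in the format shown above (fields split on '"').
summarize_envoy_log() {
  awk -F'"' '{
    split($3, f, " ")   # f[1] = response code, f[2] = response flags
    printf "status=%s flags=%s reason=%s\n", f[1], f[2], $6
  }'
}
```

For example: `kubectl logs -n istio-system -l istio=ingressgateway | grep pipeline | summarize_envoy_log`.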

@thesuperzapper You’re right, thank you for the explanation. I spent a lot of time on a workaround (changing ISTIO_MUTUAL to DISABLE), but it only works for a while before an RBAC access denied error appears.

So, if you get the “upstream connect error or disconnect/reset before headers” error, check that the ml-pipeline and ml-pipeline-ui pods each have two running containers (the pipeline container plus istio-proxy).
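A pod that is missing the sidecar shows READY `1/1` instead of `2/2`. To check all pods at once, the container names can be listed with a JSONPath query and filtered. A minimal sketch; the function name is hypothetical and the `kubeflow` namespace is taken from the commands in this thread:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "<pod> <container> <container> ..." lines on stdin and
# print the pods that have no istio-proxy container.
pods_without_sidecar() {
  awk '{
    found = 0
    for (i = 2; i <= NF; i++) if ($i == "istio-proxy") found = 1
    if (!found) print $1
  }'
}

# One line per pod in the kubeflow namespace:
#   kubectl get pods -n kubeflow \
#     -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.containers[*].name}{"\n"}{end}' \
#     | pods_without_sidecar
```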

Just want to mention that I deployed the istio-dex Kubeflow version (using kfctl_istio_dex.v1.1.0.yaml); the same problem appears, and the same hack fixes it.

Hello there,

I checked the logs with:

kubectl logs -n istio-system -l istio=ingressgateway

kubectl logs -n kubeflow ml-pipeline-6bc56cd86d-l8866

kubectl logs -n kubeflow ml-pipeline-ui-8695cc6b46-v4t6h

Anyway, I followed @danishsamad’s advice and ran:

kubectl edit destinationrule -n kubeflow ml-pipeline

kubectl edit destinationrule -n kubeflow ml-pipeline-ui

and edited the spec.trafficPolicy.tls.mode field, changing its value from ISTIO_MUTUAL to DISABLE. Then I could visit the Pipelines section.