kubeedge: Inconsistent status between master and node

What happened:

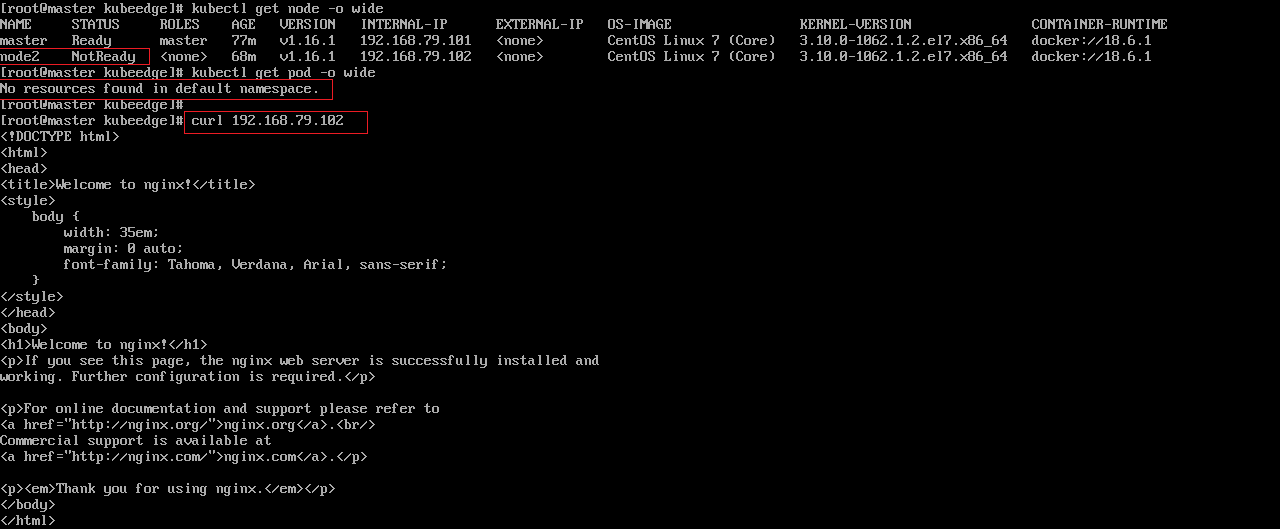

The master showed that the status of node2 was NotReady and that no pod was running.

However, nginx was actually still running in the background.

What you expected to happen: I think it should be in one of two situations:

- The status of node2 is ‘Ready’ and there’s at least one pod on it. Thus, `curl` works well.

- The status of node2 is ‘NotReady’ and there’s no pod on it. Thus, `curl` doesn’t work.
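The two expected situations can be written down as a small check. This is only a sketch: `is_consistent` is a hypothetical helper, and treating "curl works" as an HTTP 200 response is an assumption, not something stated in the report.

```shell
#!/usr/bin/env bash
# is_consistent NODE_STATUS POD_COUNT HTTP_CODE
# Hypothetical helper: returns 0 when the three observations match one of
# the two situations the reporter expected, and 1 otherwise.
#
# The inputs could be gathered roughly like this (not run here):
#   kubectl get node node2 --no-headers | awk '{print $2}'
#   kubectl get pods --no-headers 2>/dev/null | wc -l
#   curl -s -o /dev/null -w '%{http_code}' --max-time 5 http://192.168.79.102
is_consistent() {
    local status="$1" pods="$2" code="$3"

    # Situation 1: node Ready, at least one pod, curl works.
    if [ "$status" = "Ready" ] && [ "$pods" -ge 1 ] && [ "$code" = "200" ]; then
        return 0
    fi

    # Situation 2: node NotReady, no pod, curl does not work.
    if [ "$status" = "NotReady" ] && [ "$pods" -eq 0 ] && [ "$code" != "200" ]; then
        return 0
    fi

    return 1
}
```

With the values observed in this report (`NotReady`, `0` pods, and a working `curl`), `is_consistent NotReady 0 200` returns a non-zero status, which is the mismatch being reported.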

How to reproduce it (as minimally and precisely as possible):

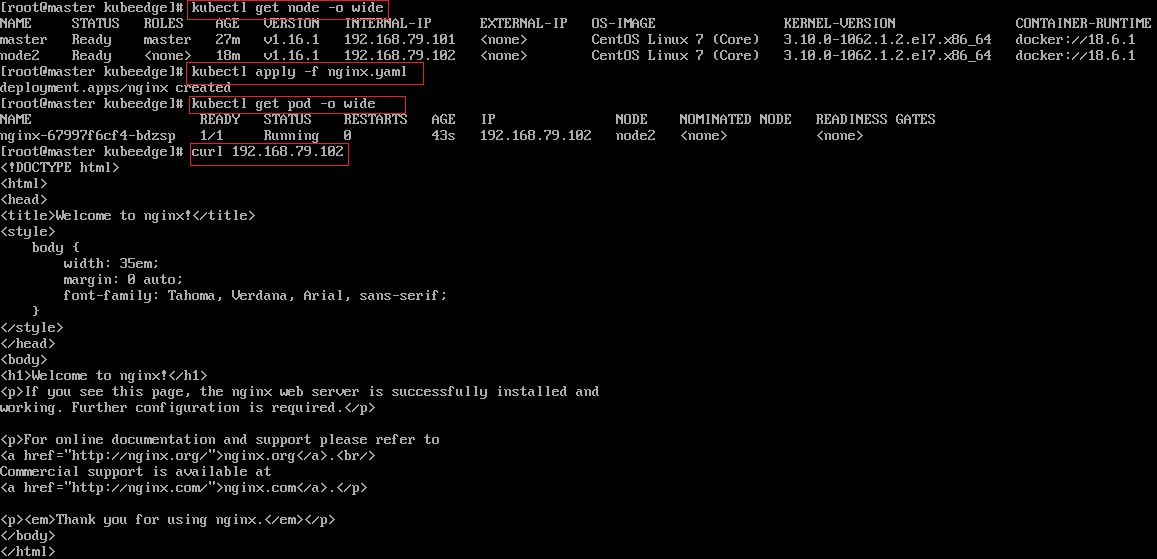

First, I created a k8s cluster with a master and a node and made an nginx deployment.

It worked well.

Then, I wanted to construct a kubeedge cluster.

- On the master, I ran:

  `keadm init --advertise-address="192.168.79.101" --kubeedge-version=1.3.1`

- On the node, I ran:

  `kubeadm reset`

  `rm -rf ~/.kube`

  `keadm join --cloudcore-ipport=192.168.79.101:10000 --interfacename=ens33 --token=SomeTokenHereHere`

  Here, `ens33` is the interface name of the master in my cluster.

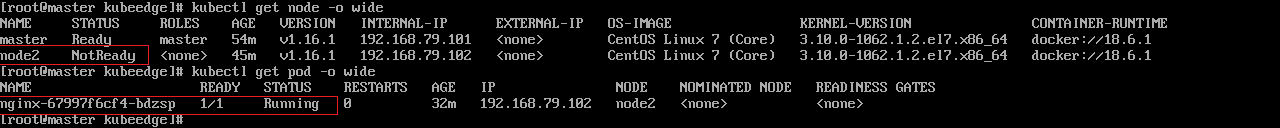

Then I saw that the status of the node was NotReady, while nginx was still running.

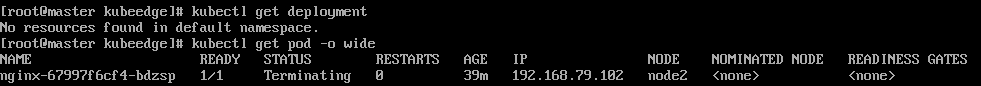

I ran `kubectl delete deployment --all` to delete the nginx deployment and stop the pod. The deployment was deleted successfully, but the pod stayed in the Terminating status.

Thus, I ran `kubectl delete pod --all --force --grace-period=0` to delete it.

Then the pod disappeared from the list, but it was still running in the background.

The command `curl 192.168.79.102` still worked.

Anything else we need to know?: It worked correctly again after I reverted it to a plain k8s cluster. To be specific, I used the following commands.

- On the master:

  `keadm reset`

- On the node:

  `keadm reset`

  Then use the command printed by `kubeadm token create --print-join-command` to join the node to the cluster.

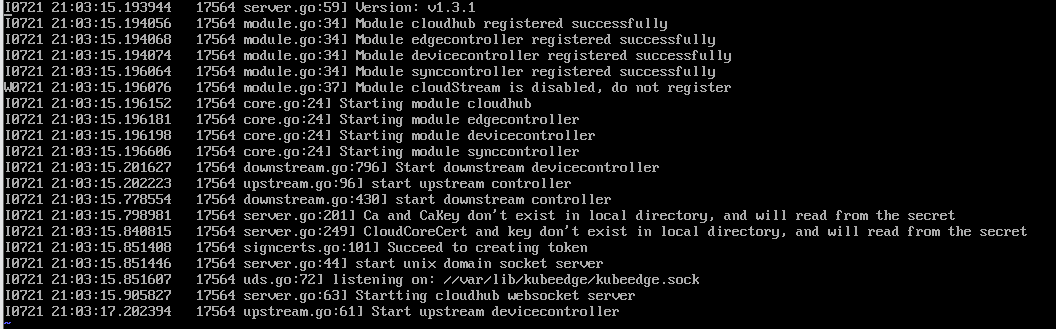

Log at cloud side

It seemed that no connection request came.

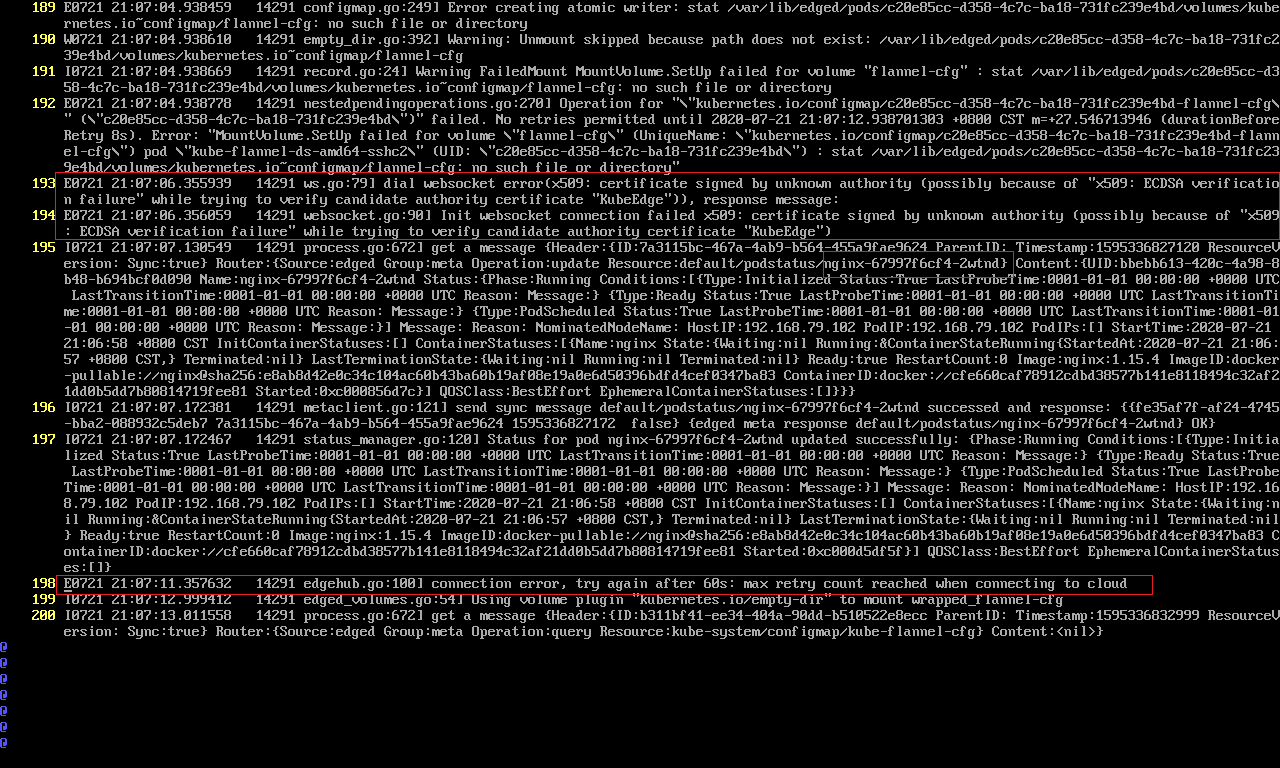

Log at edge side

It seems that something is wrong with the certificates.

Maybe the node gets the nginx pod from a cache?

CloudSide Environment:

- kubeedge 1.3.1

- kubernetes 1.17.1

- kubectl 1.16.1

- kubeadm 1.16.1

- kubelet 1.16.1

- Linux CentOS 7

EdgeSide Environment:

- kubeedge 1.3.1

- kubernetes 1.17.1

- kubeadm 1.16.1

- kubelet 1.16.1

- Linux CentOS 7

About this issue

- State: closed

- Created 4 years ago

- Comments: 15 (13 by maintainers)

Hello, I see you ran `rm -rf ~/.kube` on the node. I don’t understand why you deleted it; that directory is not related to KubeEdge. You should delete all CA files and certificates in /etc/kubeedge/certs and /etc/kubeedge/ca on the edge node, and then delete the certs stored in secrets, because cloudcore’s certs are stored in secrets rather than locally:

`kubectl delete secret casecret -nkubeedge`

`kubectl delete secret cloudcoresecret -nkubeedge`

When done, restart cloudcore and edgecore. Currently, `keadm reset` in v1.3.1 cannot delete the remaining certificates.

In short, I don’t think this is an inconsistent status.

KubeEdge runs autonomously, which means that even when disconnected from the cloud side (the k8s master node), the pods on the edge side keep running.

I see you executed the delete command after the node was disconnected from the cloud. In this case the command cannot reach the edge side, so nginx keeps running there. It is not a bug; it is the intended behavior.

Please keep in mind that an edge node is a special node in a k8s cluster: what you see from the k8s master node may differ from the actual state on the edge node. This is useful and powerful in edge scenarios.
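The certificate cleanup suggested in the first reply can be sketched as two shell functions. The function names and the optional directory argument are assumptions added for illustration, so the edge-side step can be tried on a scratch directory first. The paths and secret names are the ones given in the reply.

```shell
#!/usr/bin/env bash
# Sketch only: nothing runs until one of the functions is called.

# Edge node: remove leftover CA files and certificates.
# The optional argument (hypothetical, for dry runs) overrides the
# /etc/kubeedge root named in the reply.
clean_edge_certs() {
    local root="${1:-/etc/kubeedge}"
    local dir
    for dir in "$root/certs" "$root/ca"; do
        if [ -d "$dir" ]; then
            # Delete the contents but keep the directory itself.
            find "$dir" -mindepth 1 -delete
            echo "cleaned $dir"
        else
            echo "skipped $dir (not found)"
        fi
    done
}

# Cloud side: delete the secrets that hold cloudcore's certificates.
# --ignore-not-found avoids an error if a secret is already gone.
clean_cloud_secrets() {
    local name
    for name in casecret cloudcoresecret; do
        kubectl delete secret "$name" -n kubeedge --ignore-not-found
    done
}
```

On the edge node this would be called as `clean_edge_certs`, and on the master as `clean_cloud_secrets`, followed by restarting cloudcore and edgecore. How those are restarted depends on how they were installed, so that step is left out here.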