kubeedge: Edge deployment ERROR when edge uses crio as runtime and systemd as cgroup-manager

What happened:

Following the guide "Run KubeEdge from source", I used kubeadm v1.15.4 to start a Kubernetes cluster (v1.15.4) and ran the KubeEdge cloud part and edge part, using the release binaries cloudcore (edgecontroller) and edge_core, version v1.0.0.

Everything works when I deploy the application $GOPATH/src/github.com/kubeedge/kubeedge/build/deployment.yaml. In this setup, the default runtime is docker and the default cgroup-manager is cgroupfs.

The documentation says KubeEdge supports CRI-O, which our team needs. CRI-O uses cgroupfs as its cgroup manager, but the documentation recommends using systemd as the cgroup manager.

So I changed the runtime of edged to crio and set systemd as the cgroup-manager.

Here are my modifications:

$ cat /etc/crictl.yaml

runtime-endpoint: unix:///var/run/crio/crio.sock

# cgroup-manager: systemd

$ crictl version

Version: 0.1.0

RuntimeName: cri-o

RuntimeVersion: 1.15.1-dev

RuntimeApiVersion: v1alpha1

- change cgroup_manager = "systemd" in /etc/crio/crio.conf

$ cat /etc/crio/crio.conf

# The CRI-O configuration file specifies all of the available configuration

# options and command-line flags for the crio(8) OCI Kubernetes Container Runtime

# daemon, but in a TOML format that can be more easily modified and versioned.

#

# Please refer to crio.conf(5) for details of all configuration options.

# CRI-O supports partial configuration reload during runtime, which can be

# done by sending SIGHUP to the running process. Currently supported options

# are explicitly mentioned with: 'This option supports live configuration

# reload'.

# CRI-O reads its storage defaults from the containers-storage.conf(5) file

# located at /etc/containers/storage.conf. Modify this storage configuration if

# you want to change the system's defaults. If you want to modify storage just

# for CRI-O, you can change the storage configuration options here.

[crio]

# Path to the "root directory". CRI-O stores all of its data, including

# containers images, in this directory.

#root = "/var/lib/containers/storage"

# Path to the "run directory". CRI-O stores all of its state in this directory.

#runroot = "/var/run/containers/storage"

# Storage driver used to manage the storage of images and containers. Please

# refer to containers-storage.conf(5) to see all available storage drivers.

#storage_driver = "overlay"

# List to pass options to the storage driver. Please refer to

# containers-storage.conf(5) to see all available storage options.

#storage_option = [

#]

# The default log directory where all logs will go unless directly specified by

# the kubelet. The log directory specified must be an absolute directory.

log_dir = "/var/log/crio/pods"

# The crio.api table contains settings for the kubelet/gRPC interface.

[crio.api]

# Path to AF_LOCAL socket on which CRI-O will listen.

listen = "/var/run/crio/crio.sock"

# Host IP considered as the primary IP to use by CRI-O for things such as host network IP.

host_ip = ""

# IP address on which the stream server will listen.

stream_address = "127.0.0.1"

# The port on which the stream server will listen.

stream_port = "0"

# Enable encrypted TLS transport of the stream server.

stream_enable_tls = false

# Path to the x509 certificate file used to serve the encrypted stream. This

# file can change, and CRI-O will automatically pick up the changes within 5

# minutes.

stream_tls_cert = ""

# Path to the key file used to serve the encrypted stream. This file can

# change and CRI-O will automatically pick up the changes within 5 minutes.

stream_tls_key = ""

# Path to the x509 CA(s) file used to verify and authenticate client

# communication with the encrypted stream. This file can change and CRI-O will

# automatically pick up the changes within 5 minutes.

stream_tls_ca = ""

# Maximum grpc send message size in bytes. If not set or <=0, then CRI-O will default to 16 * 1024 * 1024.

grpc_max_send_msg_size = 16777216

# Maximum grpc receive message size. If not set or <= 0, then CRI-O will default to 16 * 1024 * 1024.

grpc_max_recv_msg_size = 16777216

# The crio.runtime table contains settings pertaining to the OCI runtime used

# and options for how to set up and manage the OCI runtime.

[crio.runtime]

# A list of ulimits to be set in containers by default, specified as

# "<ulimit name>=<soft limit>:<hard limit>", for example:

# "nofile=1024:2048"

# If nothing is set here, settings will be inherited from the CRI-O daemon

#default_ulimits = [

#]

# default_runtime is the _name_ of the OCI runtime to be used as the default.

# The name is matched against the runtimes map below.

default_runtime = "runc"

# If true, the runtime will not use pivot_root, but instead use MS_MOVE.

no_pivot = false

# Path to the conmon binary, used for monitoring the OCI runtime.

# Will be searched for using $PATH if empty.

conmon = ""

# Cgroup setting for conmon

conmon_cgroup = "pod"

# Environment variable list for the conmon process, used for passing necessary

# environment variables to conmon or the runtime.

conmon_env = [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",

]

# If true, SELinux will be used for pod separation on the host.

selinux = false

# Path to the seccomp.json profile which is used as the default seccomp profile

# for the runtime. If not specified, then the internal default seccomp profile

# will be used.

seccomp_profile = ""

# Used to change the name of the default AppArmor profile of CRI-O. The default

# profile name is "crio-default-" followed by the version string of CRI-O.

apparmor_profile = "crio-default-1.15.1-dev"

# Cgroup management implementation used for the runtime.

cgroup_manager = "systemd"

# List of default capabilities for containers. If it is empty or commented out,

# only the capabilities defined in the containers json file by the user/kube

# will be added.

default_capabilities = [

"CHOWN",

"DAC_OVERRIDE",

"FSETID",

"FOWNER",

"NET_RAW",

"SETGID",

"SETUID",

"SETPCAP",

"NET_BIND_SERVICE",

"SYS_CHROOT",

"KILL",

]

# List of default sysctls. If it is empty or commented out, only the sysctls

# defined in the container json file by the user/kube will be added.

default_sysctls = [

]

# List of additional devices. specified as

# "<device-on-host>:<device-on-container>:<permissions>", for example: "--device=/dev/sdc:/dev/xvdc:rwm".

#If it is empty or commented out, only the devices

# defined in the container json file by the user/kube will be added.

additional_devices = [

]

# Path to OCI hooks directories for automatically executed hooks.

hooks_dir = [

]

# List of default mounts for each container. **Deprecated:** this option will

# be removed in future versions in favor of default_mounts_file.

default_mounts = [

]

# Path to the file specifying the defaults mounts for each container. The

# format of the config is /SRC:/DST, one mount per line. Notice that CRI-O reads

# its default mounts from the following two files:

#

# 1) /etc/containers/mounts.conf (i.e., default_mounts_file): This is the

# override file, where users can either add in their own default mounts, or

# override the default mounts shipped with the package.

#

# 2) /usr/share/containers/mounts.conf: This is the default file read for

# mounts. If you want CRI-O to read from a different, specific mounts file,

# you can change the default_mounts_file. Note, if this is done, CRI-O will

# only add mounts it finds in this file.

#

#default_mounts_file = ""

# Maximum number of processes allowed in a container.

pids_limit = 1024

# Maximum sized allowed for the container log file. Negative numbers indicate

# that no size limit is imposed. If it is positive, it must be >= 8192 to

# match/exceed conmon's read buffer. The file is truncated and re-opened so the

# limit is never exceeded.

log_size_max = -1

# Whether container output should be logged to journald in addition to the kuberentes log file

log_to_journald = false

# Path to directory in which container exit files are written to by conmon.

container_exits_dir = "/var/run/crio/exits"

# Path to directory for container attach sockets.

container_attach_socket_dir = "/var/run/crio"

# The prefix to use for the source of the bind mounts.

bind_mount_prefix = ""

# If set to true, all containers will run in read-only mode.

read_only = false

# Changes the verbosity of the logs based on the level it is set to. Options

# are fatal, panic, error, warn, info, and debug. This option supports live

# configuration reload.

log_level = "info"

# The UID mappings for the user namespace of each container. A range is

# specified in the form containerUID:HostUID:Size. Multiple ranges must be

# separated by comma.

uid_mappings = ""

# The GID mappings for the user namespace of each container. A range is

# specified in the form containerGID:HostGID:Size. Multiple ranges must be

# separated by comma.

gid_mappings = ""

# The minimal amount of time in seconds to wait before issuing a timeout

# regarding the proper termination of the container.

ctr_stop_timeout = 0

# ManageNetworkNSLifecycle determines whether we pin and remove network namespace

# and manage its lifecycle.

manage_network_ns_lifecycle = false

# The "crio.runtime.runtimes" table defines a list of OCI compatible runtimes.

# The runtime to use is picked based on the runtime_handler provided by the CRI.

# If no runtime_handler is provided, the runtime will be picked based on the level

# of trust of the workload. Each entry in the table should follow the format:

#

#[crio.runtime.runtimes.runtime-handler]

# runtime_path = "/path/to/the/executable"

# runtime_type = "oci"

# runtime_root = "/path/to/the/root"

#

# Where:

# - runtime-handler: name used to identify the runtime

# - runtime_path (optional, string): absolute path to the runtime executable in

# the host filesystem. If omitted, the runtime-handler identifier should match

# the runtime executable name, and the runtime executable should be placed

# in $PATH.

# - runtime_type (optional, string): type of runtime, one of: "oci", "vm". If

# omitted, an "oci" runtime is assumed.

# - runtime_root (optional, string): root directory for storage of containers

# state.

[crio.runtime.runtimes.runc]

runtime_path = ""

runtime_type = "oci"

runtime_root = "/run/runc"

# Kata Containers is an OCI runtime, where containers are run inside lightweight

# VMs. Kata provides additional isolation towards the host, minimizing the host attack

# surface and mitigating the consequences of containers breakout.

# Kata Containers with the default configured VMM

#[crio.runtime.runtimes.kata-runtime]

# Kata Containers with the QEMU VMM

#[crio.runtime.runtimes.kata-qemu]

# Kata Containers with the Firecracker VMM

#[crio.runtime.runtimes.kata-fc]

# The crio.image table contains settings pertaining to the management of OCI images.

#

# CRI-O reads its configured registries defaults from the system wide

# containers-registries.conf(5) located in /etc/containers/registries.conf. If

# you want to modify just CRI-O, you can change the registries configuration in

# this file. Otherwise, leave insecure_registries and registries commented out to

# use the system's defaults from /etc/containers/registries.conf.

[crio.image]

# Default transport for pulling images from a remote container storage.

default_transport = "docker://"

# The path to a file containing credentials necessary for pulling images from

# secure registries. The file is similar to that of /var/lib/kubelet/config.json

global_auth_file = ""

# The image used to instantiate infra containers.

# This option supports live configuration reload.

pause_image = "docker.io/kubeedge/pause:3.1"

# The path to a file containing credentials specific for pulling the pause_image from

# above. The file is similar to that of /var/lib/kubelet/config.json

# This option supports live configuration reload.

pause_image_auth_file = ""

# The command to run to have a container stay in the paused state.

# This option supports live configuration reload.

pause_command = "/pause"

# Path to the file which decides what sort of policy we use when deciding

# whether or not to trust an image that we've pulled. It is not recommended that

# this option be used, as the default behavior of using the system-wide default

# policy (i.e., /etc/containers/policy.json) is most often preferred. Please

# refer to containers-policy.json(5) for more details.

signature_policy = ""

# List of registries to skip TLS verification for pulling images. Please

# consider configuring the registries via /etc/containers/registries.conf before

# changing them here.

#insecure_registries = "[]"

# Controls how image volumes are handled. The valid values are mkdir, bind and

# ignore; the latter will ignore volumes entirely.

image_volumes = "mkdir"

# List of registries to be used when pulling an unqualified image (e.g.,

# "alpine:latest"). By default, registries is set to "docker.io" for

# compatibility reasons. Depending on your workload and usecase you may add more

# registries (e.g., "quay.io", "registry.fedoraproject.org",

# "registry.opensuse.org", etc.).

#registries = [

# ]

# The crio.network table containers settings pertaining to the management of

# CNI plugins.

[crio.network]

# Path to the directory where CNI configuration files are located.

#network_dir = "/etc/cni/net.d/"

network_dir = "/etc/cni/net.d.bak/"

# Paths to directories where CNI plugin binaries are located.

plugin_dirs = [

"/opt/cni/bin/",

]

# A necessary configuration for Prometheus based metrics retrieval

[crio.metrics]

# Globally enable or disable metrics support.

enable_metrics = false

# The port on which the metrics server will listen.

metrics_port = 9090

- set edged.cgroup-driver=systemd, edged.runtime-type=remote, edged.remote-runtime-endpoint=unix:///var/run/crio/crio.sock, edged.remote-image-endpoint=unix:///var/run/crio/crio.sock

$ cat ~/cmd/conf/edge.yaml

mqtt:

    server: tcp://127.0.0.1:1883 # external mqtt broker url.

    internal-server: tcp://127.0.0.1:1884 # internal mqtt broker url.

    mode: 0 # 0: internal mqtt broker enable only. 1: internal and external mqtt broker enable. 2: external mqtt broker enable only.

    qos: 0 # 0: QOSAtMostOnce, 1: QOSAtLeastOnce, 2: QOSExactlyOnce.

    retain: false # if the flag set true, server will store the message and can be delivered to future subscribers.

    session-queue-size: 100 # A size of how many sessions will be handled. default to 100.

edgehub:

    websocket:

        url: wss://192.168.237.112:10000/e632aba927ea4ac2b575ec1603d56f10/edge-node/events

        certfile: /etc/kubeedge/certs/edge.crt

        keyfile: /etc/kubeedge/certs/edge.key

        handshake-timeout: 30 #second

        write-deadline: 15 # second

        read-deadline: 15 # second

    quic:

        url: 127.0.0.1:10001

        cafile: /etc/kubeedge/ca/rootCA.crt

        certfile: /etc/kubeedge/certs/edge.crt

        keyfile: /etc/kubeedge/certs/edge.key

        handshake-timeout: 30 #second

        write-deadline: 15 # second

        read-deadline: 15 # second

    controller:

        protocol: websocket # websocket, quic

        placement: false

        heartbeat: 15 # second

        refresh-ak-sk-interval: 10

        auth-info-files-path: /var/IEF/secret

        placement-url: https://10.154.193.32:7444/v1/placement_external/message_queue

        project-id: e632aba927ea4ac2b575ec1603d56f10

        node-id: edge-node

edged:

    register-node-namespace: default

    hostname-override: edge-node

    interface-name: ens33

    edged-memory-capacity-bytes: 7852396000

    node-status-update-frequency: 10 # second

    device-plugin-enabled: false

    gpu-plugin-enabled: false

    image-gc-high-threshold: 80 # percent

    image-gc-low-threshold: 40 # percent

    maximum-dead-containers-per-container: 1

    docker-address: unix:///var/run/docker.sock

    version: v1.10.9-kubeedge-v1.0.0

    runtime-type: remote

    remote-runtime-endpoint: unix:///var/run/crio/crio.sock

    remote-image-endpoint: unix:///var/run/crio/crio.sock

    runtime-request-timeout: 2

    podsandbox-image: kubeedge/pause:3.1 # kubeedge/pause:3.1 for x86 arch , kubeedge/pause-arm:3.1 for arm arch, kubeedge/pause-arm64 for arm64 arch

    image-pull-progress-deadline: 60 # second

    cgroup-driver: systemd

mesh:

    loadbalance:

        strategy-name: RoundRobin
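
Since the cgroup setting has to be made on both sides (cgroup_manager in crio.conf and cgroup-driver in edge.yaml), a quick consistency check can rule out a simple mismatch. The following is only a rough sketch: the function names are hypothetical, and it assumes each file contains one uncommented line for the setting, as in the dumps above.

```shell
#!/bin/sh
# Hypothetical helper: print the cgroup_manager value from a crio.conf-style file.
# Only uncommented lines of the form: cgroup_manager = "value" are considered.
crio_cgroup_manager() {
    sed -n 's/^[[:space:]]*cgroup_manager[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: print the cgroup-driver value from an edge.yaml-style file.
# A trailing "# comment" after the value is dropped.
edged_cgroup_driver() {
    sed -n 's/^[[:space:]]*cgroup-driver:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# Report whether both files name the same cgroup driver.
# Usage: check_cgroup_match <crio.conf> <edge.yaml>
check_cgroup_match() {
    crio_value=$(crio_cgroup_manager "$1")
    edged_value=$(edged_cgroup_driver "$2")
    if [ -n "$crio_value" ] && [ "$crio_value" = "$edged_value" ]; then
        echo "match: $crio_value"
    else
        echo "mismatch: crio=$crio_value edged=$edged_value"
        return 1
    fi
}
```

With the files shown above it would be called as `check_cgroup_match /etc/crio/crio.conf ~/cmd/conf/edge.yaml`. Both files in this report say systemd, so a check like this would report a match, which suggests the failure is not a plain configuration mismatch.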

An ERROR occurs when I deploy the application:

kubectl apply -f $GOPATH/src/github.com/kubeedge/kubeedge/build/deployment.yaml

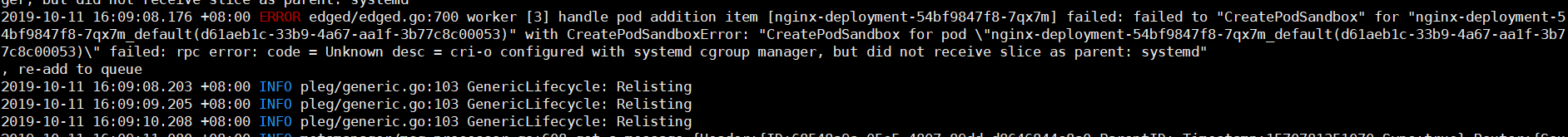

failed to "CreatePodSandbox" for "nginx-deployment6-7bb7ff7d5d-n2lzw_default(8b005a98-ccff-4b11-b651-a63d75ef502e)" with CreatePodSandboxError: "CreatePodSandbox for pod \"nginx-deployment6-7bb7ff7d5d-n2lzw_default(8b005a98-ccff-4b11-b651-a63d75ef502e)\" failed: rpc error: code = Unknown desc = cri-o configured with systemd cgroup manager, but did not receive slice as parent: systemd"
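
Reading the message literally, CRI-O seems to have received the string "systemd" as the pod's cgroup parent where it expected a systemd slice. Slice unit names end in ".slice", so the kind of check behind this error can be sketched as below. This is an illustration only, not CRI-O's actual code; the function names and the sample slice name are made up for the example.

```shell
#!/bin/sh
# Illustrative check, not CRI-O source: with the systemd cgroup manager,
# the cgroup parent is expected to be a systemd slice name (ends in ".slice").
is_systemd_slice() {
    case "$1" in
        ?*.slice) return 0 ;;   # at least one character followed by ".slice"
        *) return 1 ;;
    esac
}

# Print a one-line verdict for a given cgroup parent value.
describe_cgroup_parent() {
    if is_systemd_slice "$1"; then
        echo "$1: looks like a systemd slice"
    else
        echo "$1: not a slice name"
    fi
}
```

For example, `describe_cgroup_parent systemd` reports that the value is not a slice name, while a value such as `example-pod.slice` (a made-up name) passes. If this reading is right, the value to look at is the cgroup parent that edged sends for the pod sandbox, rather than the cgroup_manager setting in crio.conf.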

It troubles me.

What you expected to happen:

kubeedge should support crio and systemd.

How to reproduce it (as minimally and precisely as possible):

See the modification details above.

Anything else we need to know?:

tested in kubeedge v1.0.0

| cgroup_manager | runtime | situation | remark |
|---|---|---|---|
| cgroupfs | docker | ok | |
| cgroupfs | cri-o | ok | |
| systemd | docker | ok | modify /etc/docker/daemon.json to set "exec-opts": ["native.cgroupdriver=systemd"] |
| systemd | cri-o | ERROR | install cri-o and modify configurations |

Environment:

- KubeEdge version (e.g. cloudcore/edgecore --version):

edgecontroller in kubeedge-v1.0.0-linux-amd64.tar.gz and I have renamed it to cloudcore

CloudSide Environment:

- Hardware configuration (e.g. lscpu):

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 2

Socket(s): 2

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 94

Model name: Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

Stepping: 3

CPU MHz: 2592.006

BogoMIPS: 5184.01

Virtualization: VT-x

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 6144K

NUMA node0 CPU(s): 0-3

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc pni pclmulqdq vmx ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp pti tpr_shadow vnmi ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx rdseed adx smap clflushopt xsaveopt xsavec arat flush_l1d arch_capabilities

- OS (e.g. cat /etc/os-release):

$ cat /etc/os-release

NAME="Ubuntu"

VERSION="16.04.6 LTS (Xenial Xerus)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 16.04.6 LTS"

VERSION_ID="16.04"

HOME_URL="http://www.ubuntu.com/"

SUPPORT_URL="http://help.ubuntu.com/"

BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/"

VERSION_CODENAME=xenial

UBUNTU_CODENAME=xenial

- Kernel (e.g. uname -a):

$ uname -a

Linux dev 4.4.0-164-generic #192-Ubuntu SMP Fri Sep 13 12:02:50 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

- Go version (e.g. go version):

$ go version

go version go1.12.10 linux/amd64

EdgeSide Environment:

- edgecore version (e.g. edgecore --version):

edge_core in kubeedge-v1.0.0-linux-amd64.tar.gz and I have renamed it to edgecore

- Hardware configuration (e.g. lscpu):

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 2

Socket(s): 2

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 94

Model name: Intel(R) Core(TM) i7-6700HQ CPU @ 2.60GHz

Stepping: 3

CPU MHz: 2592.006

BogoMIPS: 5184.01

Virtualization: VT-x

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 6144K

NUMA node0 CPU(s): 0-3

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc pni pclmulqdq vmx ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch invpcid_single ssbd ibrs ibpb stibp pti tpr_shadow vnmi ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 invpcid rtm mpx rdseed adx smap clflushopt xsaveopt xsavec arat flush_l1d arch_capabilities

- OS (e.g. cat /etc/os-release):

$ cat /etc/os-release

NAME="Ubuntu"

VERSION="16.04.6 LTS (Xenial Xerus)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 16.04.6 LTS"

VERSION_ID="16.04"

HOME_URL="http://www.ubuntu.com/"

SUPPORT_URL="http://help.ubuntu.com/"

BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/"

VERSION_CODENAME=xenial

UBUNTU_CODENAME=xenial

- Kernel (e.g. uname -a):

$ uname -a

Linux edge-node 4.4.0-164-generic #192-Ubuntu SMP Fri Sep 13 12:02:50 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

- Go version (e.g. go version):

$ go version

go version go1.12.10 linux/amd64

- Others: kubeedge_configuration_files.zip

About this issue

- State: closed

- Created 5 years ago

- Comments: 19 (13 by maintainers)

I cannot reproduce your issue. Did you update your edgecore.yaml for the different runtime?