serving: Activator pod failing with `CrashLoopBackOff` on GKE

In what area(s)?

/area API /area autoscale

What version of Knative?

0.5.x

Expected Behavior

All pods in ns knative-serving on GKE are running.

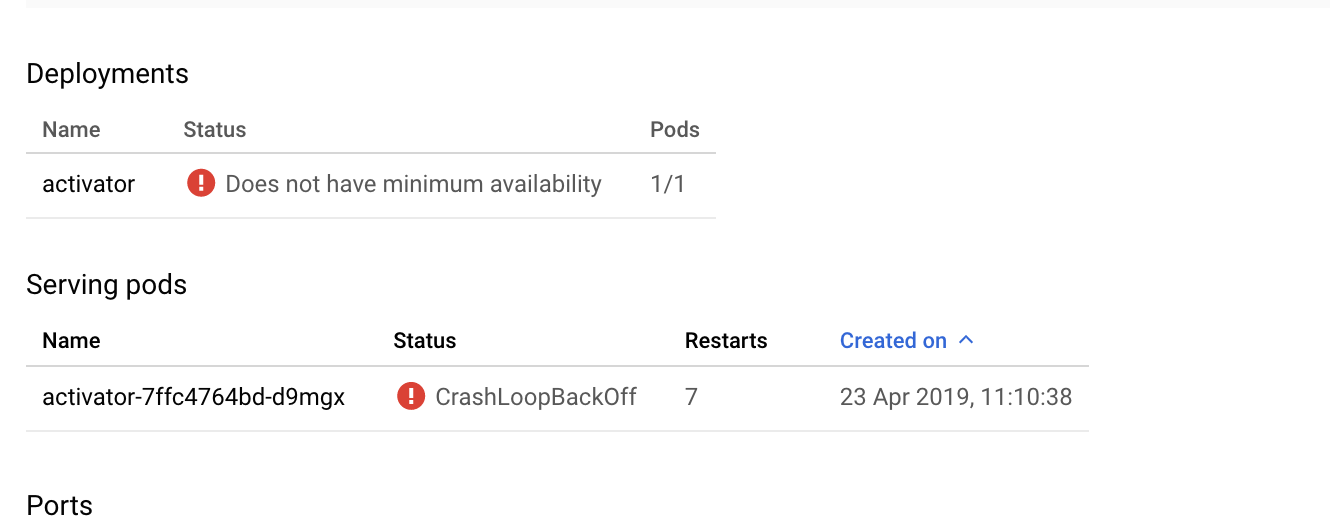

Actual Behavior

activator-service doesn’t have minimum availabaility.

➜ ~ kubectl get pods --namespace knative-serving

NAME READY STATUS RESTARTS AGE

activator-7ffc4764bd-d9mgx 1/2 Running 7 8m

autoscaler-6b67f6ffc7-qxt8m 2/2 Running 0 11m

controller-b54c5f66-tp9hz 1/1 Running 0 11m

webhook-5dff4764d-d2rss 1/1 Running 0 11m

Describe on pod shows liveness and readiness probe is failing.

➜ ~ kubectl describe pod activator-7ffc4764bd-d9mgx -n knative-serving

Name: activator-7ffc4764bd-d9mgx

Namespace: knative-serving

Priority: 0

PriorityClassName: <none>

Node: gke-euwe2-preemptible-2-6fb4f449-g2t5/10.31.252.108

Start Time: Tue, 23 Apr 2019 11:10:38 +0200

Labels: app=activator

pod-template-hash=3997032068

role=activator

serving.knative.dev/release=devel

Annotations: cni.projectcalico.org/podIP: 10.30.7.38/32

sidecar.istio.io/inject: true

sidecar.istio.io/status:

{"version":"bdaecb667bc51926819c154cd5e5f8922a3c562816a4f163d67ad95223aa7612","initContainers":["istio-init"],"containers":["istio-proxy"]...

Status: Running

IP: 10.30.7.38

Controlled By: ReplicaSet/activator-7ffc4764bd

Init Containers:

istio-init:

Container ID: docker://c45f7ae9c692ab5231ad0d7c3418ed47249f66117efb345336054dfe9b42087e

Image: gcr.io/istio-release/proxy_init:release-1.1-latest-daily

Image ID: docker-pullable://gcr.io/istio-release/proxy_init@sha256:8622455bb6825341b83ed191233357286bbd6f88ba7e28949d8056ec7d906842

Port: <none>

Host Port: <none>

Args:

-p

15001

-u

1337

-m

REDIRECT

-i

*

-x

-b

8080,8081,9090

-d

15020

State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 23 Apr 2019 11:10:39 +0200

Finished: Tue, 23 Apr 2019 11:10:40 +0200

Ready: True

Restart Count: 0

Limits:

cpu: 100m

memory: 50Mi

Requests:

cpu: 10m

memory: 10Mi

Environment: <none>

Mounts: <none>

Containers:

activator:

Container ID: docker://87bfebc2fc8dff0b1d2b29a4e9b9d1499e9a338c4b40675c637e8580ceb93934

Image: gcr.io/knative-releases/github.com/knative/serving/cmd/activator@sha256:bc61131f2d3f48fb97a1a98a3be8a34bb65905428dbbedeabe24eb949d6b53bc

Image ID: docker-pullable://gcr.io/knative-releases/github.com/knative/serving/cmd/activator@sha256:bc61131f2d3f48fb97a1a98a3be8a34bb65905428dbbedeabe24eb949d6b53bc

Ports: 8080/TCP, 8081/TCP, 9090/TCP

Host Ports: 0/TCP, 0/TCP, 0/TCP

Args:

-logtostderr=false

-stderrthreshold=FATAL

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: Completed

Exit Code: 0

Started: Tue, 23 Apr 2019 11:15:38 +0200

Finished: Tue, 23 Apr 2019 11:16:05 +0200

Ready: False

Restart Count: 6

Limits:

cpu: 200m

memory: 600Mi

Requests:

cpu: 20m

memory: 60Mi

Liveness: http-get http://:8080/healthz delay=0s timeout=1s period=10s #success=1 #failure=3

Readiness: http-get http://:8080/healthz delay=0s timeout=1s period=10s #success=1 #failure=3

Environment:

POD_NAME: activator-7ffc4764bd-d9mgx (v1:metadata.name)

SYSTEM_NAMESPACE: knative-serving (v1:metadata.namespace)

CONFIG_LOGGING_NAME: config-logging

Mounts:

/etc/config-logging from config-logging (rw)

/etc/config-observability from config-observability (rw)

/var/run/secrets/kubernetes.io/serviceaccount from controller-token-6gbsg (ro)

istio-proxy:

Container ID: docker://787997a397c65d0e01476ba029f6fb3f5df49adfa33e1152e5031e6adbfcf16a

Image: gcr.io/istio-release/proxyv2:release-1.1-latest-daily

Image ID: docker-pullable://gcr.io/istio-release/proxyv2@sha256:23483db628fcf57ce6131c5b86decd97bf2f9a1aa67b13b5e23b05c8f3cf1e33

Port: 15090/TCP

Host Port: 0/TCP

Args:

proxy

sidecar

--domain

$(POD_NAMESPACE).svc.cluster.local

--configPath

/etc/istio/proxy

--binaryPath

/usr/local/bin/envoy

--serviceCluster

activator.$(POD_NAMESPACE)

--drainDuration

45s

--parentShutdownDuration

1m0s

--discoveryAddress

istio-pilot.istio-system:15011

--zipkinAddress

zipkin.istio-system:9411

--connectTimeout

10s

--proxyAdminPort

15000

--concurrency

2

--controlPlaneAuthPolicy

MUTUAL_TLS

--statusPort

15020

--applicationPorts

8080,8081,9090

State: Running

Started: Tue, 23 Apr 2019 11:10:41 +0200

Ready: True

Restart Count: 0

Limits:

cpu: 2

memory: 128Mi

Requests:

cpu: 100m

memory: 128Mi

Readiness: http-get http://:15020/healthz/ready delay=1s timeout=1s period=2s #success=1 #failure=30

Environment:

POD_NAME: activator-7ffc4764bd-d9mgx (v1:metadata.name)

POD_NAMESPACE: knative-serving (v1:metadata.namespace)

INSTANCE_IP: (v1:status.podIP)

ISTIO_META_POD_NAME: activator-7ffc4764bd-d9mgx (v1:metadata.name)

ISTIO_META_CONFIG_NAMESPACE: knative-serving (v1:metadata.namespace)

ISTIO_META_INTERCEPTION_MODE: REDIRECT

ISTIO_METAJSON_ANNOTATIONS: {"sidecar.istio.io/inject":"true"}

ISTIO_METAJSON_LABELS: {"app":"activator","pod-template-hash":"3997032068","role":"activator","serving.knative.dev/release":"devel"}

Mounts:

/etc/certs/ from istio-certs (ro)

/etc/istio/proxy from istio-envoy (rw)

/var/run/secrets/kubernetes.io/serviceaccount from controller-token-6gbsg (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

config-logging:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: config-logging

Optional: false

config-observability:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: config-observability

Optional: false

controller-token-6gbsg:

Type: Secret (a volume populated by a Secret)

SecretName: controller-token-6gbsg

Optional: false

istio-envoy:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium: Memory

istio-certs:

Type: Secret (a volume populated by a Secret)

SecretName: istio.controller

Optional: true

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 8m1s default-scheduler Successfully assigned knative-serving/activator-7ffc4764bd-d9mgx to gke-euwe2-preemptible-2-6fb4f449-g2t5

Normal Pulled 8m kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Container image "gcr.io/istio-release/proxy_init:release-1.1-latest-daily" already present on machine

Normal Created 8m kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Created container

Normal Started 8m kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Started container

Normal Pulling 7m59s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 pulling image "gcr.io/knative-releases/github.com/knative/serving/cmd/activator@sha256:bc61131f2d3f48fb97a1a98a3be8a34bb65905428dbbedeabe24eb949d6b53bc"

Normal Started 7m58s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Started container

Normal Pulled 7m58s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Successfully pulled image "gcr.io/knative-releases/github.com/knative/serving/cmd/activator@sha256:bc61131f2d3f48fb97a1a98a3be8a34bb65905428dbbedeabe24eb949d6b53bc"

Normal Created 7m58s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Created container

Normal Started 7m58s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Started container

Normal Pulled 7m58s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Container image "gcr.io/istio-release/proxyv2:release-1.1-latest-daily" already present on machine

Warning Unhealthy 7m54s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Liveness probe failed: Get http://10.30.7.38:8080/healthz: read tcp 10.31.252.108:44088->10.30.7.38:8080: read: connection reset by peer

Warning Unhealthy 7m47s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Readiness probe failed: Get http://10.30.7.38:8080/healthz: read tcp 10.31.252.108:44140->10.30.7.38:8080: read: connection reset by peer

Warning Unhealthy 7m44s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Liveness probe failed: Get http://10.30.7.38:8080/healthz: read tcp 10.31.252.108:44172->10.30.7.38:8080: read: connection reset by peer

Warning Unhealthy 7m37s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Readiness probe failed: Get http://10.30.7.38:8080/healthz: read tcp 10.31.252.108:44230->10.30.7.38:8080: read: connection reset by peer

Warning Unhealthy 7m34s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Liveness probe failed: Get http://10.30.7.38:8080/healthz: read tcp 10.31.252.108:44262->10.30.7.38:8080: read: connection reset by peer

Normal Killing 7m34s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Killing container with id docker://activator:Container failed liveness probe.. Container will be killed and recreated.

Normal Pulled 7m34s kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Container image "gcr.io/knative-releases/github.com/knative/serving/cmd/activator@sha256:bc61131f2d3f48fb97a1a98a3be8a34bb65905428dbbedeabe24eb949d6b53bc" already present on machine

Normal Created 7m33s (x2 over 7m58s) kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Created container

Warning DNSConfigForming 3m1s (x32 over 8m1s) kubelet, gke-euwe2-preemptible-2-6fb4f449-g2t5 Search Line limits were exceeded, some search paths have been omitted, the applied search line is: knative-serving.svc.cluster.local svc.cluster.local cluster.local c.mb-k8s-tst.internal google.internal global

Steps to Reproduce the Problem

- Install knative on existing cluster with Istio installation (

version > 1.1) already available

kubectl apply --selector knative.dev/crd-install=true \

--filename https://github.com/knative/serving/releases/download/v0.5.0/serving.yaml \

--filename https://github.com/knative/build/releases/download/v0.5.0/build.yaml \

--filename https://github.com/knative/eventing/releases/download/v0.5.0/release.yaml \

--filename https://github.com/knative/eventing-sources/releases/download/v0.5.0/eventing-sources.yaml \

--filename https://github.com/knative/serving/releases/download/v0.5.0/monitoring.yaml \

--filename https://raw.githubusercontent.com/knative/serving/v0.5.0/third_party/config/build/clusterrole.yaml

kubectl apply --filename https://github.com/knative/serving/releases/download/v0.5.0/serving.yaml \

--filename https://github.com/knative/build/releases/download/v0.5.0/build.yaml \

--filename https://github.com/knative/eventing/releases/download/v0.5.0/release.yaml \

--filename https://github.com/knative/eventing-sources/releases/download/v0.5.0/eventing-sources.yaml \

--filename https://github.com/knative/serving/releases/download/v0.5.0/monitoring.yaml \

--filename https://raw.githubusercontent.com/knative/serving/v0.5.0/third_party/config/build/clusterrole.yaml

activatorservice onknative-servingfailed to reach availability.

Additional info

Logs on specific pod, doesn’t shows much errors but only that the pod gets restarted several times.

➜ ~ kubectl logs activator-7ffc4764bd-d9mgx -n knative-serving -c activator

{"level":"info","ts":"2019-04-23T09:24:56.125Z","caller":"logging/config.go:96","msg":"Successfully created the logger.","knative.dev/jsonconfig":"{\n \"level\": \"info\",\n \"development\": false,\n \"outputPaths\": [\"stdout\"],\n \"errorOutputPaths\": [\"stderr\"],\n \"encoding\": \"json\",\n \"encoderConfig\": {\n \"timeKey\": \"ts\",\n \"levelKey\": \"level\",\n \"nameKey\": \"logger\",\n \"callerKey\": \"caller\",\n \"messageKey\": \"msg\",\n \"stacktraceKey\": \"stacktrace\",\n \"lineEnding\": \"\",\n \"levelEncoder\": \"\",\n \"timeEncoder\": \"iso8601\",\n \"durationEncoder\": \"\",\n \"callerEncoder\": \"\"\n }\n}"}

{"level":"info","ts":"2019-04-23T09:24:56.126Z","caller":"logging/config.go:97","msg":"Logging level set to info"}

{"level":"warn","ts":"2019-04-23T09:24:56.126Z","caller":"logging/config.go:65","msg":"Fetch GitHub commit ID from kodata failed: \"ref: refs/heads/upstream/release-0.5\" is not a valid GitHub commit ID"}

{"level":"info","ts":"2019-04-23T09:24:56.126Z","logger":"activator","caller":"activator/main.go:134","msg":"Starting the knative activator","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.223Z","logger":"activator","caller":"activator/main.go:183","msg":"Waiting for informer caches to sync","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.524Z","logger":"activator","caller":"activator/main.go:246","msg":"Connecting to autoscaler atws://autoscaler.knative-serving.svc.cluster.local:8080","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.524Z","logger":"activator","caller":"util/env.go:33","msg":"POD_NAME=activator-7ffc4764bd-d9mgx","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.524Z","logger":"activator","caller":"websocket/connection.go:128","msg":"Connecting to \"ws://autoscaler.knative-serving.svc.cluster.local:8080\"","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.608Z","logger":"activator","caller":"metrics/prometheus_exporter.go:37","msg":"Created Opencensus Prometheus exporter with config: &{knative.dev/serving activator prometheus 5000000000 9090 false false }. Start the server for Prometheus exporter.","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.608Z","logger":"activator","caller":"metrics/config.go:236","msg":"Successfully updated the metrics exporter; old config: <nil>; new config &{knative.dev/serving activator prometheus 5000000000 9090 false false }","knative.dev/controller":"activator"}

{"level":"info","ts":"2019-04-23T09:24:56.615Z","logger":"activator","caller":"websocket/connection.go:133","msg":"Connected to \"ws://autoscaler.knative-serving.svc.cluster.local:8080\"","knative.dev/controller":"activator"}

About this issue

- Original URL

- State: closed

- Created 5 years ago

- Comments: 25 (13 by maintainers)

@kbruner I used your workaround for my Cluster,the activator did not CrashLoopBackOff,but its logs also have error

Do you face this problem?

It looks like recent commits in this repo are attempting to address the probe issues on Istio. For those that need knative-serving 0.5.2 to work now, though:

Having the same issue on GKE, knative-serving 0.5.2, Istio 1.1.6. As

mTLSenforcement is an Istio best practice and likely true for many clusters that were already running on Istio for security reasons, and because Istio’s own recommended options for making HTTP health check probes work with mTLS are very much not one-size-fits-all, this will likely bite a lot of people.Additionally, activator tries to make network connections to github.com on start. In Istio clusters where cluster egress is whitelist-only, which was the default before Istio 1.1, that’s not possible unless the cluster has an appropriate

ServiceEntryresource (also aVirtualServiceresource in Istio versions before 1.1). This does not seem to break anything when running production release images, though.I would also recommend giving the

readinessProbea longer timeout. There’s a race condition on pod start with theistio-proxysidecar container. Ingress won’t work until the proxy has started accepting network connections, but it needs to pull its configuration fromistio-pilotbefore it will start listening. If the configuration is large oristio-pilotis slow, that can conceivably take longer than 30 seconds.Pod egress is also not possible with some Istio configurations until the

istio-proxycontainer is live and healthy. This means that whenactivatortries to pull from github.com immediately on process start and without retrying on initial failures, there’s a good chance the network connections will fail even if egress is allowed. Frankly, there’s no good workaround for that. In containers that havencorcurlinstalled, you can use that to “wait for” theistio-proxyport, or if/bin/sleepis present, you can wait before starting the activator command (after bumping up thereadinessProbetimeout), but theactivatorcontainer image doesn’t even have a shell installed. However, that doesn’t seem to break anything that I can tell with the release images.Here’s my workaround for the probe issues with

activator,which might only work if you haveglobal.disablePolicyChecks=false(trueis the default in Istio 1.1; I believe this option was added in 1.1 and previously, policy checks were always on, but I’m not 100% certain). This policy disables the mTLS requirement foractivator-service, so theistio-proxysidecar will accept peer connections with or without mTLS certificates. Don’t do this in production if you have strict zero-trust network requirements:If you need policy checks to stay disabled, an unpleasant short-term workaround would be to delete the

livenessProbeandreadinessProbefrom theactivatordeployment.