jest: Slow startup time in monorepos (single test/project)

All of this is done on OSX with 3,5 GHz Dual-Core Intel Core i7 and 1 jest worker.

Problem

We have a monorepo with currently around 26 packages. We currently run with yarn (1) workspaces. So the code base is not exactly small, but once you work on it you mostly work on one of those packages at a time. The problem is that even when just running a single test, it takes about ~10 seconds for the tests to finish. This is mostly startup time because jest reports the test itself running in ~100ms.

We would like to get this time down to allow for a better developer experience. The fact that all tests together take almost ten minutes doesn’t bother us that much, but running a single test should ideally finish in less than a second.

We hope that someone here can help us or at least point us in the right direction.

jest config

module.exports = {

testRunner: 'jest-circus/runner',

transform: {

'^.+\\.(t|j)sx?$': 'babel-jest',

},

transformIgnorePatterns: ['<rootDir>/node_modules/'],

moduleNameMapper: {

'//': 'Here are 10 modules mapped',

},

clearMocks: true,

roots: ['<rootDir>'],

snapshotSerializers: ['jest-date-serializer'],

testURL: 'http://www.test.com',

moduleFileExtensions: ['tsx', 'ts', 'js', 'json'],

testEnvironment: 'jest-environment-jsdom-sixteen',

setupFilesAfterEnv: [

'jest-canvas-mock',

'jest-localstorage-mock',

'<rootDir>/setup/index.ts',

'<rootDir>/setup/errorCatcher.ts',

],

reporters: ['default', '<rootDir>/setup/consoleErrorReporter.js'],

rootDir: '<rootDir>/../../../',

};

consoleErrorReporter gathers information about errors written to the console.

So in order to allow a custom config for each project the final config is dynamically built:

const fs = require('fs');

const { rootDir, ...baseConfig } = require('./setup/baseJestConfig');

const packages = []; // we have a function which returns all packages

const projects = packages.map(({ location, title }) => {

try {

// use config if provided by a package

fs.accessSync(`${location}/jest.config.js`);

return `<rootDir>/${location}`;

} catch (e) {

// otherwise just use the base config

return {

...baseConfig,

displayName: title,

testRegex: `${location}/.*(Test|.test)\\.(t|j)sx?$`,

};

}

});

module.exports = {

...baseConfig,

roots: ['<rootDir>'],

projects,

};

In the end projects would be an array of 26 configs, which mostly look the same.

What we tried so far

Different transpiler

I first thought transpilation might be a bottleneck. I tried swc and esbuild. To my surprise, it made no difference.

Define just the config for the package you are using

We initially filtered for configs we need for a run, but then found out about --selectedProjects.

Both approaches sped up startup time by a factor of three on my machine. My colleague (with slightly better hardware) could observe around 50% speedup, regardless of the total amount of tests that he ran.

How we tried to debug

Get some times

Hacked timings in jest-runtime/build/index.js like

console.time(module.filename);

compiledFunction.call(

module.exports,

module, // module object

module.exports, // module exports

module.require, // require implementation

module.path, // __dirname

module.filename, // __filename

this._environment.global, // global object

...lastArgs.filter(notEmpty)

);

console.timeEnd(module.filename);

Most files take less than a ms, longest took around 600ms. I can see this pilling up for 7-8k files when done in sync.

Profiled the node process

We are not very familiar with how to read and interpret these reports. Here is an excerpt from it, I cut off lines and just left the top 5 for each:

Statistical profiling result from isolate-0x10469d000-91221-v8.log, (20504 ticks, 27 unaccounted, 0 excluded).

[Shared libraries]:

ticks total nonlib name

300 1.5% /usr/lib/system/libsystem_platform.dylib

65 0.3% /usr/lib/system/libsystem_pthread.dylib

47 0.2% /usr/lib/system/libsystem_kernel.dylib

25 0.1% /usr/lib/system/libsystem_malloc.dylib

1 0.0% /usr/lib/system/libdispatch.dylib

[JavaScript]:

ticks total nonlib name

116 0.6% 0.6% RegExp: /\.git/|/\.hg/

53 0.3% 0.3% LazyCompile: *_ignore /Users/****/node_modules/jest-haste-map/build/index.js:1191:10

30 0.1% 0.1% LazyCompile: *<anonymous> /****/node_modules/jest-haste-map/build/crawlers/node.js:254:15

22 0.1% 0.1% RegExp: .*\/locales\/.*en\.json$

14 0.1% 0.1% LazyCompile: *resolve path.js:973:10

[C++]:

ticks total nonlib name

8465 41.3% 42.2% T __kernelrpc_thread_policy_set

3148 15.4% 15.7% T __ZN2v88internal19ScriptStreamingDataC2ENSt3__110unique_ptrINS_14ScriptCompiler20ExternalSourceStreamENS2_14default_deleteIS5_EEEENS4_14StreamedSource8EncodingE

2265 11.0% 11.3% T node::SyncProcessRunner::Spawn(v8::FunctionCallbackInfo<v8::Value> const&)

1135 5.5% 5.7% T __kernelrpc_mach_vm_purgable_control_trap

596 2.9% 3.0% t node::fs::Read(v8::FunctionCallbackInfo<v8::Value> const&)

[Summary]:

ticks total nonlib name

439 2.1% 2.2% JavaScript

19600 95.6% 97.7% C++

487 2.4% 2.4% GC

438 2.1% Shared libraries

27 0.1% Unaccounted

[C++ entry points]:

ticks cpp total name

4961 47.8% 24.2% T __ZN2v88internal21Builtin_HandleApiCallEiPmPNS0_7IsolateE

3711 35.8% 18.1% T __ZN2v88internal19ScriptStreamingDataC2ENSt3__110unique_ptrINS_14ScriptCompiler20ExternalSourceStreamENS2_14default_deleteIS5_EEEENS4_14StreamedSource8EncodingE

933 9.0% 4.6% T __kernelrpc_mach_vm_purgable_control_trap

130 1.3% 0.6% T __ZN2v88internal30Builtin_ErrorCaptureStackTraceEiPmPNS0_7IsolateE

129 1.2% 0.6% T _open$NOCANCEL

Interestingly the CPU profiler in node shows a lot (~4s) of “nothing” in between starting the script and executing jest:

Also the jestAdapter takes 8s before a tests starts and a total of 12s for the entire run.

Test Suites: 5 passed, 5 total

Tests: 26 passed, 26 total

As far as I can tell the “nothing” time is spent with reading files. onStreamRead and program, zoomed in:

Issues that might be related

https://github.com/facebook/jest/issues/10301 https://github.com/facebook/jest/issues/9554

About this issue

- Original URL

- State: open

- Created 4 years ago

- Reactions: 17

- Comments: 34 (1 by maintainers)

@mingshenggan as described in my above comment(s) it’s because jest recursively follows all import statements during startup. So by importing 1 MUI icon via the barrel export, it forces jest to crawl 1000s of file(s) also imported by that barrel export.

Certainly reducing the amount of imports will help, but assuming your project actually needs to import part(s) of larger libraries, it doesn’t solve the root issue within jest

I also have bad performance in a monorepo

Jest internally uses this “haste” module system, which works by doing a depth first search of all your imports, apparently

For example, if I create a simple test:

and then write a custom resolver https://jestjs.io/docs/configuration#resolver-string that logs out every file jest is resolving, I can see its crawling a bunch of unrelated files:

In my monorepo, its a similar situation but exponentially worse. I have a simple component I wrote a test for, and it imports from other packages in my monorepo. Each package has an

index.tswhich exports a ton of stuff, most packages depend on other packages. Unfortunately, Jest ends up doing what seems to be a giant DFS on nearly every file, and a ton ofnode_modulesunrelated to my test.For example, I’m unit testing a button. This button imports some remedial thing from a “shared” lib (think a simple string or something). Since it imports from a barrel export and the

index.tsin my “shared” lib exports other things which depend on heavy node modules, jest seems to be crawling those heavy node modules, even though its totally unrelated to the button I am testing which is dead simple. For example, looking at flame charts of the profiling I did, I can see my button caused everything in my “shared” to be crawled, including all offramer-motion, which has nothing to do with the button I am testing. It seems my “shared” lib just imports some remedial item from that library, like a smaller helper, but now jest has to crawl every file in that lib on every test run.Hey All, I had this same issue with two repos (so far) at our company. I was able to narrow this down to the

rootDirandroots. When I have config:config/jest.config.js

startup time is > 20s

If I change it to

startup time is <5s.

My theory is (as some others have mentioned) that jest does a depth first scan, based off of test regex, and finds all files in

rootsand then from that removes them based on ignore patterns. This would mean scanning the entire node_modules, building up a list, then excluding the ignore patterns. This would be very expensive and might come up with thousands of results, only to exclude them.I can’t reproduce this in a fresh repo, so I’m not sure if this has something to do with the packages installed, some other config, or what.

const jest: { globals: { "ts-jest": { "isolatedModules": true } }, }Testing was sped up with this configuration

Here is a way to speed up the initial run when watchman is installed.

Create a file named

.watchmanconfigwith the following contents:Now watchman will ignore those folders, resulting in a significant change (4-5 seconds as opposed to 10-12 seconds in our case).

Also, in case where watchman is not installed, find could be modified to ignore node_modules, that would help too. (or even use

fdtool if that is installed, that is very fast as well and ignores .gitignore)fd '\.snap?$|\.jsx?$|\.tsx?$|\.json$|\.node$'is orders of magnitude faster than find that jest uses by default.anybody wrote a custom resolver to fix this in monorepo with many files ?

@gor918 Yes it generally saves time to skip type checking, but I think there is a separate problem here with jest eagerly calling it’s resolver on every import statements, recursively, on startup (this affects large projects, not just monorepos, so the issue title is a bit of a misnomer)

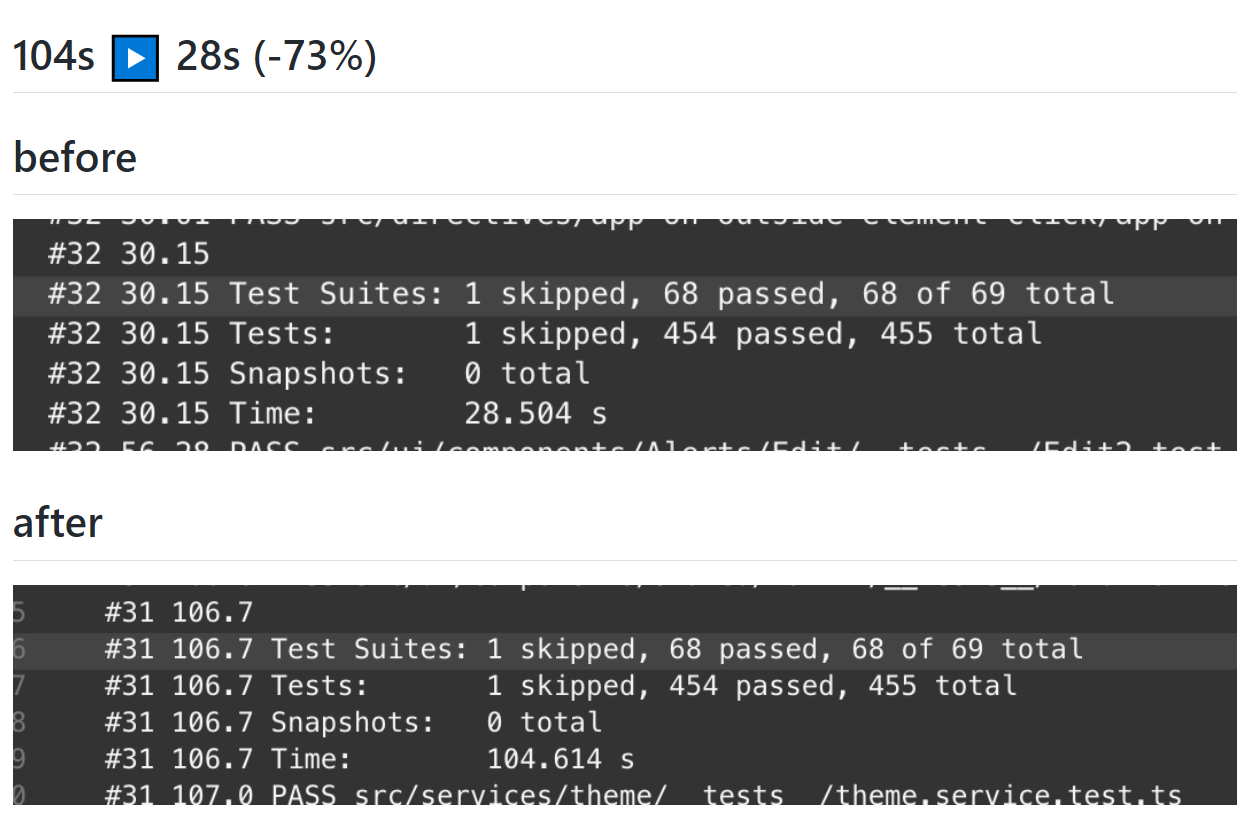

we experience that as well in a pretty big monorepo. i was playing with https://github.com/aelbore/esbuild-jest, it reduced the time by a big factor 104s => 28s but im not sure about the startup time. my guess is that it’s mostly improved the require/import part and the transpile time

Same problem on my side, you can see from snapshot it’s took around 18 seconds for jest adapter. With Jenkins build and --maxWorkers=5 thing going better, around(6-8 sec.) per test, but we have many and checking all of the test tooks us ~25min.

Will appreciate if someone can take a look.

With Jenkins build and --maxWorkers=5 thing going better, around(6-8 sec.) per test, but we have many and checking all of the test tooks us ~25min.

Will appreciate if someone can take a look.

anybody solved this?

Note Jest scans for test files; but also does a depth first crawl of every module for purposes of the mock system. Narrowing the test file pattern/root may help, but I think under the hood jest still crawls all of the files for purposes of mocking. There can be more than one issue, too 😃

I’m using https://nx.dev/

The custom resolver is this one: https://github.com/nrwl/nx/blob/master/packages/jest/plugins/resolver.ts and I just added a

console.log()in order to make the above observations.I’m also now realizing its possible the custom resolver is part of the problem, but I also think that if Jest could avoid eagerly crawling the filesystem (somehow), and instead do it “just in time”, that would probably boost performance a lot 😃

https://mui.com/material-ui/guides/minimizing-bundle-size/#option-two-use-a-babel-plugin is a workaround, in a basic minimal react app that imports once into material UI this shaves off maybe 10-20% of the “slow start” by reducing the number of files jest has to map out on the filesystem for the material UI library. Material UI is by no means the only library that benefits from proper tree shaking though…

Jest likely needs to be rewritten to remove “haste” (Facebooks module system), and instead bundle the code using a modern bundler that supports tree shaking – or just run the source directly via something like

node/ts-node. In turn, that will require rewriting jest mocks to rely on some other mechanism other than haste.Rather than rewriting the jest library, which seems untenable given lack of engagement from the maintainers, I would just recommend to avoid it and use some other test runner and when you need to mock use some other mocking library, like https://sinonjs.org/releases/latest/mocks/ – some would argue the whole benefit of writing tests is to avoid doing things like depending on a global singleton in your code, which jest aims to make easier. With a library like sinon, you have to pass mocks in as arguments or use a DI framework to inject your dependencies which leads to a better more flexible design for your code and avoids this whole nonsense with the slow “haste” module system Facebook made.

You are right it affects barrel exports and large code bases. Many larger react projects happen to exhibit both of these traits.

Internally jest does not use webpack or other “well-known” module systems. Facebook made their own module system called ‘haste’ and that is what enables jest mocks which makes it a breeze to test legacy code that is hard to test.

My two cents is that Facebook’s solution solved the testing problem, but it is better to actually design the code under test to use inversion of control and inject your dependencies. This makes your test code portable to "real " modules and not coupled to Jest which is apparently not scalable and not maintained adequately to meet our needs.

Instead of:

Write:

and then just don’t use jest, that would be my recommendation. The former code is just worse code overall than the latter code (ignoring any separate testing related implications). If you write the “good” easy to test code, you don’t really need jest anyway

Hello,

I may have some new insights on this issue. It could be related to throat.js which provides a mutex mechamism.

Here is how i came to this :

Please note that i could not apply the codemod to all js files, since it failed for half of them (some parsing issue, like ts code in js file) so it may not be representative of what’s really going on.

Here is an extract of the data i got (all functions with total time > 500ms):

It seems the culprit is

throatwhich provides mutex mechanism, so it looks like a deadlock issueWhich fits with the

lot (~4s) of "nothing"@beckerei mentioned.@robatwilliams when using watch mode having installed https://facebook.github.io/watchman/ with a proper config for the repo (e.g. exclude node_modules, dist, …) is a must.

Whenever I have time at work I look for ways to improve our test performance. However I haven’t found much despite what is already said in this thread.

Anyone looked into using a different test environment (e.g. node if no dom required, or something like happy dom as an alternative) curious if its worth going through tests and change the environment where possible?

@beckerei Haha would have loved to, but I am based in asia now.