istio: Istio is picking up new VirtualServices slowly

Affected product area (please put an X in all that apply)

[ ] Configuration Infrastructure [ ] Docs [ ] Installation [X] Networking [X] Performance and Scalability [ ] Policies and Telemetry [ ] Security [ ] Test and Release [ ] User Experience [ ] Developer Infrastructure

Affected features (please put an X in all that apply)

[ ] Multi Cluster [ ] Virtual Machine [ ] Multi Control Plane

Version (include the output of istioctl version --remote and kubectl version and helm version if you used Helm)

client version: 1.5.4
cluster-local-gateway version:
cluster-local-gateway version:
cluster-local-gateway version:
ingressgateway version: 1.5.4
ingressgateway version: 1.5.4
ingressgateway version: 1.5.4
pilot version: 1.5.4
pilot version: 1.5.4
pilot version: 1.5.4
data plane version: 1.5.4 (6 proxies)

How was Istio installed?

cat << EOF > ./istio-minimal-operator.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      proxy:
        autoInject: disabled
      useMCP: false
      # The third-party-jwt is not enabled on all k8s.
      # See: https://istio.io/docs/ops/best-practices/security/#configure-third-party-service-account-tokens
      jwtPolicy: first-party-jwt
  addonComponents:
    pilot:
      enabled: true
    prometheus:
      enabled: false
  components:
    ingressGateways:
      - name: istio-ingressgateway
        enabled: true
      - name: cluster-local-gateway
        enabled: true
        label:
          istio: cluster-local-gateway
          app: cluster-local-gateway
        k8s:
          service:
            type: ClusterIP
            ports:
            - port: 15020
              name: status-port
            - port: 80
              name: http2
            - port: 443
              name: https
EOF

./istioctl manifest generate -f istio-minimal-operator.yaml \
  --set values.gateways.istio-egressgateway.enabled=false \
  --set values.gateways.istio-ingressgateway.sds.enabled=true \
  --set values.gateways.istio-ingressgateway.autoscaleMin=3 \
  --set values.gateways.istio-ingressgateway.autoscaleMax=6 \
  --set values.pilot.autoscaleMin=3 \
  --set values.pilot.autoscaleMax=6 \
  --set hub=icr.io/ext/istio > istio.yaml

kubectl apply -f istio.yaml  # more visibility than istioctl manifest apply

Environment where bug was observed (cloud vendor, OS, etc.): IKS (IBM Cloud Kubernetes Service)

When we create ~1k VirtualServices in a single cluster, the ingress gateway picks up new VirtualServices slowly.

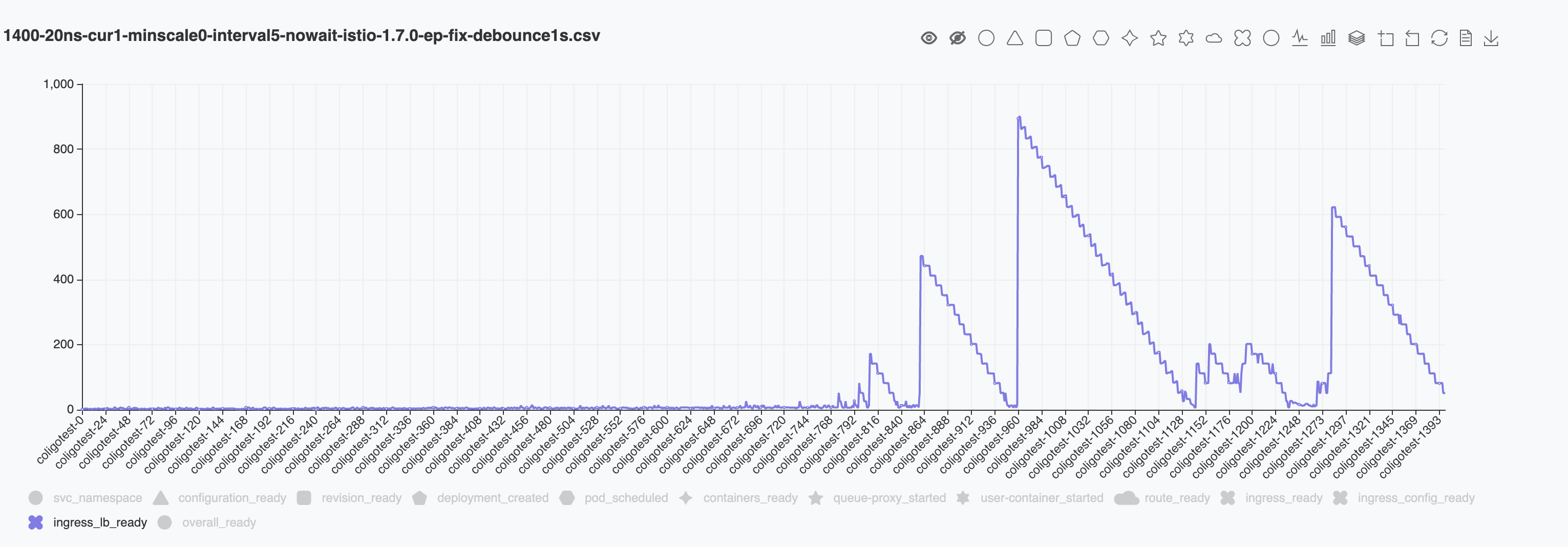


The blue line in the chart shows the total time until probing the gateway pod succeeds (a 200 response code and the expected K-Network-Hash header). The stepwise increases are caused by the exponential retry backoff used for probing. The overall trend, however, grows roughly linearly: with 800 VirtualServices present, it takes ~50 s for a new VirtualService to be picked up.

I also dumped the Envoy config in the istio-ingressgateway pod and grepped it after the VirtualService was created. Initially the output was empty, and it took about 1 minute for the following result to show up:

curl localhost:15000/config_dump |grep testabc

"name": "outbound_.8022_._.testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound_.8022_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound_.80_._.testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound_.80_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound_.80_._.testabc-hhmjj-1.default.svc.cluster.local",

"service_name": "outbound_.80_._.testabc-hhmjj-1.default.svc.cluster.local"

"name": "outbound_.9090_._.testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound_.9090_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound_.9091_._.testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound_.9091_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound|8022||testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound|8022||testabc-hhmjj-1-private.default.svc.cluster.local"

"sni": "outbound_.8022_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound|80||testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound|80||testabc-hhmjj-1-private.default.svc.cluster.local"

"sni": "outbound_.80_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local",

"service_name": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local"

"sni": "outbound_.80_._.testabc-hhmjj-1.default.svc.cluster.local"

"name": "outbound|9090||testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound|9090||testabc-hhmjj-1-private.default.svc.cluster.local"

"sni": "outbound_.9090_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "outbound|9091||testabc-hhmjj-1-private.default.svc.cluster.local",

"service_name": "outbound|9091||testabc-hhmjj-1-private.default.svc.cluster.local"

"sni": "outbound_.9091_._.testabc-hhmjj-1-private.default.svc.cluster.local"

"name": "testabc.default.dev-serving.codeengine.dev.appdomain.cloud:80",

"testabc.default.dev-serving.codeengine.dev.appdomain.cloud",

"testabc.default.dev-serving.codeengine.dev.appdomain.cloud:80"

"prefix_match": "testabc.default.dev-serving.codeengine.dev.appdomain.cloud"

"cluster": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local",

"config": "/apis/networking.istio.io/v1alpha3/namespaces/default/virtual-service/testabc-ingress"

"operation": "testabc-hhmjj-1.default.svc.cluster.local:80/*"

"value": "testabc-hhmjj-1"

"name": "testabc.default.svc.cluster.local:80",

"testabc.default.svc.cluster.local",

"testabc.default.svc.cluster.local:80"

"prefix_match": "testabc.default.dev-serving.codeengine.dev.appdomain.cloud"

"cluster": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local",

"config": "/apis/networking.istio.io/v1alpha3/namespaces/default/virtual-service/testabc-ingress"

"operation": "testabc-hhmjj-1.default.svc.cluster.local:80/*"

"value": "testabc-hhmjj-1"

"name": "testabc.default.svc:80",

"testabc.default.svc",

"testabc.default.svc:80"

"prefix_match": "testabc.default.dev-serving.codeengine.dev.appdomain.cloud"

"cluster": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local",

"config": "/apis/networking.istio.io/v1alpha3/namespaces/default/virtual-service/testabc-ingress"

"operation": "testabc-hhmjj-1.default.svc.cluster.local:80/*"

"value": "testabc-hhmjj-1"

"name": "testabc.default:80",

"testabc.default",

"testabc.default:80"

"prefix_match": "testabc.default.dev-serving.codeengine.dev.appdomain.cloud"

"cluster": "outbound|80||testabc-hhmjj-1.default.svc.cluster.local",

"config": "/apis/networking.istio.io/v1alpha3/namespaces/default/virtual-service/testabc-ingress"

"operation": "testabc-hhmjj-1.default.svc.cluster.local:80/*"

"value": "testabc-hhmjj-1"

There is no memory or CPU pressure on the Istio components:

kubectl -n istio-system top pods

NAME                                     CPU(cores)   MEMORY(bytes)
cluster-local-gateway-644fd5f945-f4d6d   29m          953Mi
cluster-local-gateway-644fd5f945-mlhkc   34m          952Mi
cluster-local-gateway-644fd5f945-nt4qk   30m          958Mi
istio-ingressgateway-7759f4649d-b5whx    37m          1254Mi
istio-ingressgateway-7759f4649d-g2ppv    43m          1262Mi
istio-ingressgateway-7759f4649d-pv6qs    48m          1431Mi
istiod-6fb9877647-7h7wk                  8m           875Mi
istiod-6fb9877647-k6n9m                  9m           914Mi
istiod-6fb9877647-mncpn                  26m          925Mi

Below is a typical VirtualService created by Knative:

---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  annotations:
    networking.knative.dev/ingress.class: istio.ingress.networking.knative.dev
  creationTimestamp: "2020-07-20T08:23:54Z"
  generation: 1
  labels:
    networking.internal.knative.dev/ingress: hello29
    serving.knative.dev/route: hello29
    serving.knative.dev/routeNamespace: default
  name: hello29-ingress
  namespace: default
  ownerReferences:
  - apiVersion: networking.internal.knative.dev/v1alpha1
    blockOwnerDeletion: true
    controller: true
    kind: Ingress
    name: hello29
    uid: 433c415d-901e-4154-bfd9-43178d0db192
  resourceVersion: "47989694"
  selfLink: /apis/networking.istio.io/v1beta1/namespaces/default/virtualservices/hello29-ingress
  uid: 20162863-b721-4d67-aa19-b84adc7dffe0
spec:
  gateways:
  - knative-serving/cluster-local-gateway
  - knative-serving/knative-ingress-gateway
  hosts:
  - hello29.default
  - hello29.default.dev-serving.codeengine.dev.appdomain.cloud
  - hello29.default.svc
  - hello29.default.svc.cluster.local
  http:
  - headers:
      request:
        set:
          K-Network-Hash: 12a72f65db15ba3a00ad16b328c40b5398a86cc84ba3239ad37f4d5ef811b0fa
    match:
    - authority:
        prefix: hello29.default
      gateways:
      - knative-serving/cluster-local-gateway
    retries: {}
    route:
    - destination:
        host: hello29-cpwpf-1.default.svc.cluster.local
        port:
          number: 80
      headers:
        request:
          set:
            Knative-Serving-Namespace: default
            Knative-Serving-Revision: hello29-cpwpf-1
      weight: 100
    timeout: 600s
  - headers:
      request:
        set:
          K-Network-Hash: 12a72f65db15ba3a00ad16b328c40b5398a86cc84ba3239ad37f4d5ef811b0fa
    match:
    - authority:
        prefix: hello29.default.dev-serving.codeengine.dev.appdomain.cloud
      gateways:
      - knative-serving/knative-ingress-gateway
    retries: {}
    route:
    - destination:
        host: hello29-cpwpf-1.default.svc.cluster.local
        port:
          number: 80
      headers:
        request:
          set:
            Knative-Serving-Namespace: default
            Knative-Serving-Revision: hello29-cpwpf-1
      weight: 100
    timeout: 600s
---

About this issue


- State: closed

- Created 4 years ago

- Comments: 82 (59 by maintainers)

Commits related to this issue

- Wait to send more pushes until receive has ACKed Fixes: https://github.com/istio/istio/issues/25685 Istio suffers from a problem at large scale (800+ services sequentially created) with significant ... — committed to sdake/istio by deleted user 4 years ago


- Wait until ACK before sending additional pushes Fixes: #25685 At large scale, Envoy suffers from overload of XDS pushes, and there is no backpressure in the system. Other control planes, such as any... — committed to howardjohn/istio by howardjohn 4 years ago

- Wait until ACK before sending additional pushes (#28261) * Wait until ACK before sending additional pushes Fixes: #25685 At large scale, Envoy suffers from overload of XDS pushes, and there is no b... — committed to istio/istio by howardjohn 4 years ago

- Add test for local rate limiting using Envoy Signed-off-by: gargnupur <gargnupur@google.com> fix Signed-off-by: gargnupur <gargnupur@google.com> add yaml Signed-off-by: gargnupur <gargnupur@googl... — committed to gargnupur/istio by gargnupur 4 years ago

- Add test for local rate limiting using Envoy (#28888) Signed-off-by: gargnupur <gargnupur@google.com> fix Signed-off-by: gargnupur <gargnupur@google.com> add yaml Signed-off-by: gargnupur <gargnu... — committed to istio/istio by gargnupur 4 years ago

- Add test for local rate limiting using Envoy (#28888) Signed-off-by: gargnupur <gargnupur@google.com> fix Signed-off-by: gargnupur <gargnupur@google.com> add yaml Signed-off-by: gargnupur <gargnu... — committed to daixiang0/istio by gargnupur 4 years ago
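The commits that fix this issue are titled "Wait until ACK before sending additional pushes", and their description notes that there was no backpressure between the control plane and Envoy. A minimal Go sketch of that idea for a single proxy connection follows. It is not Istio's code: the `Push` type, the `conn` struct, and the choice to keep only the newest pending push are assumptions for illustration, and NACKs and timeouts are ignored.

```go
package main

import "fmt"

// Push is a hypothetical stand-in for an xDS config push; Version identifies
// which config snapshot it carries.
type Push struct{ Version int }

// conn models one proxy connection with ACK-based flow control: at most one
// push is in flight, and pushes arriving meanwhile are coalesced so that only
// the newest one is sent after the proxy ACKs.
type conn struct {
	inFlight bool
	pending  *Push
	sent     []int // versions actually sent, kept for inspection
}

// Enqueue is called whenever the control plane has new config for the proxy.
func (c *conn) Enqueue(p Push) {
	if c.inFlight {
		c.pending = &p // an older pending push is obsolete; keep the newest
		return
	}
	c.send(p)
}

// OnAck is called when the proxy acknowledges the in-flight push.
func (c *conn) OnAck() {
	c.inFlight = false
	if c.pending != nil {
		p := *c.pending
		c.pending = nil
		c.send(p)
	}
}

func (c *conn) send(p Push) {
	c.inFlight = true
	c.sent = append(c.sent, p.Version)
}

func main() {
	c := &conn{}
	for v := 1; v <= 5; v++ {
		c.Enqueue(Push{Version: v}) // five config changes while the proxy is busy
	}
	c.OnAck() // proxy ACKs version 1, so the coalesced version 5 is sent
	c.OnAck() // proxy ACKs version 5; nothing is pending
	fmt.Println(c.sent)
}
```

Five config changes that arrive while version 1 is unacknowledged produce only two sends (`[1 5]` is printed), so a slow proxy receives fewer, larger updates instead of a growing backlog.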

Here are some test results from tuning the PILOT_DEBOUNCE_AFTER parameter. I tested with PILOT_DEBOUNCE_MAX = 10s. We can see similar sudden jumps after index 800, so I assume that a longer PILOT_DEBOUNCE_AFTER will alleviate the stress of configuring Envoy, but it will not solve the issue completely.

@linsun We did not set a CPU limit for istiod. We also fixed the replica count of istiod and of both the public and private gateways at 3.

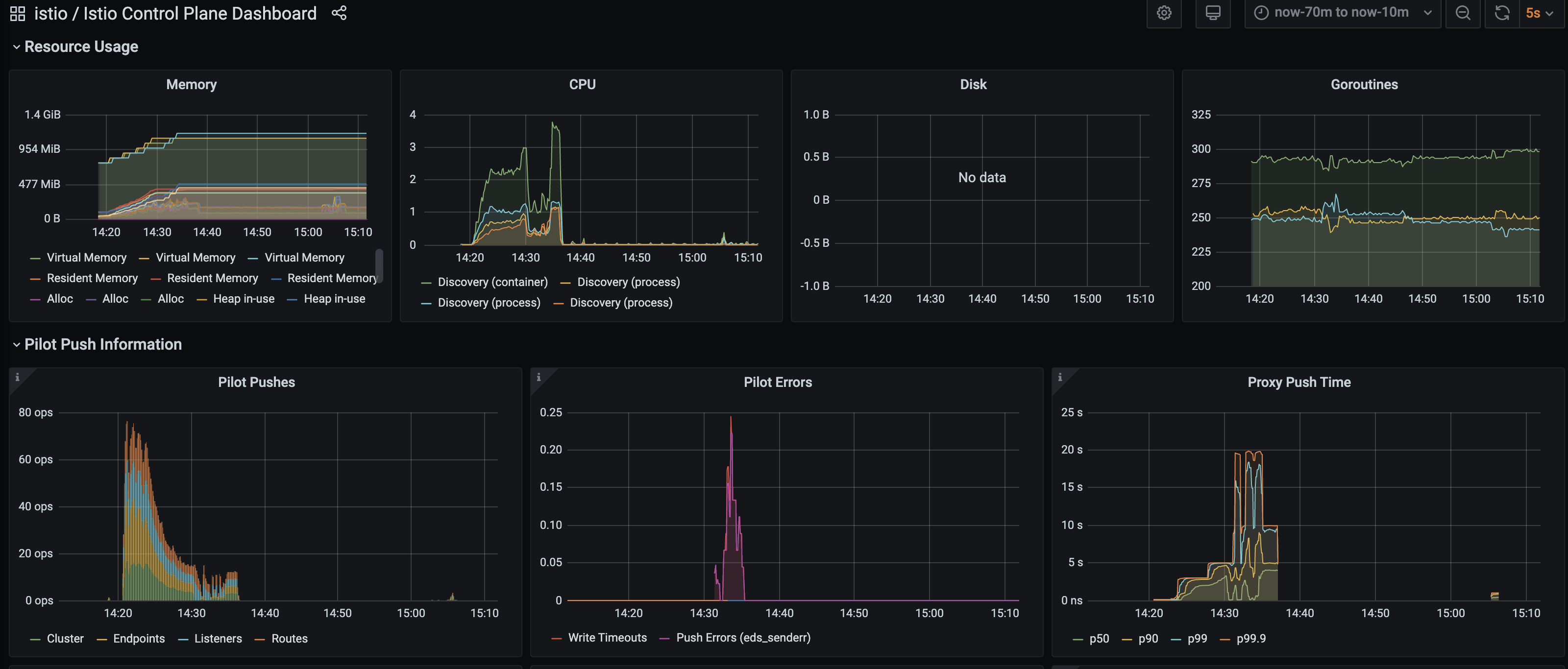

I tried 1.6.8 and 1.7.0-rc.1 (released today) with no luck. This time I recorded some dashboards during the tests.

There seem to be some errors for eds_sender, as well as some small pushes at ~15:05. I believe the gateway was configured correctly after the additional push. I will try to collect the detailed error logs.

With the fixes in https://github.com/istio/istio/issues/23029 I am able to reproduce a result similar to the one @sdake posted. The individual spikes are gone, and I think it is a valid fix. Thank you 👍.

This is a problem: https://github.com/istio/istio/issues/23029#issuecomment-683234340

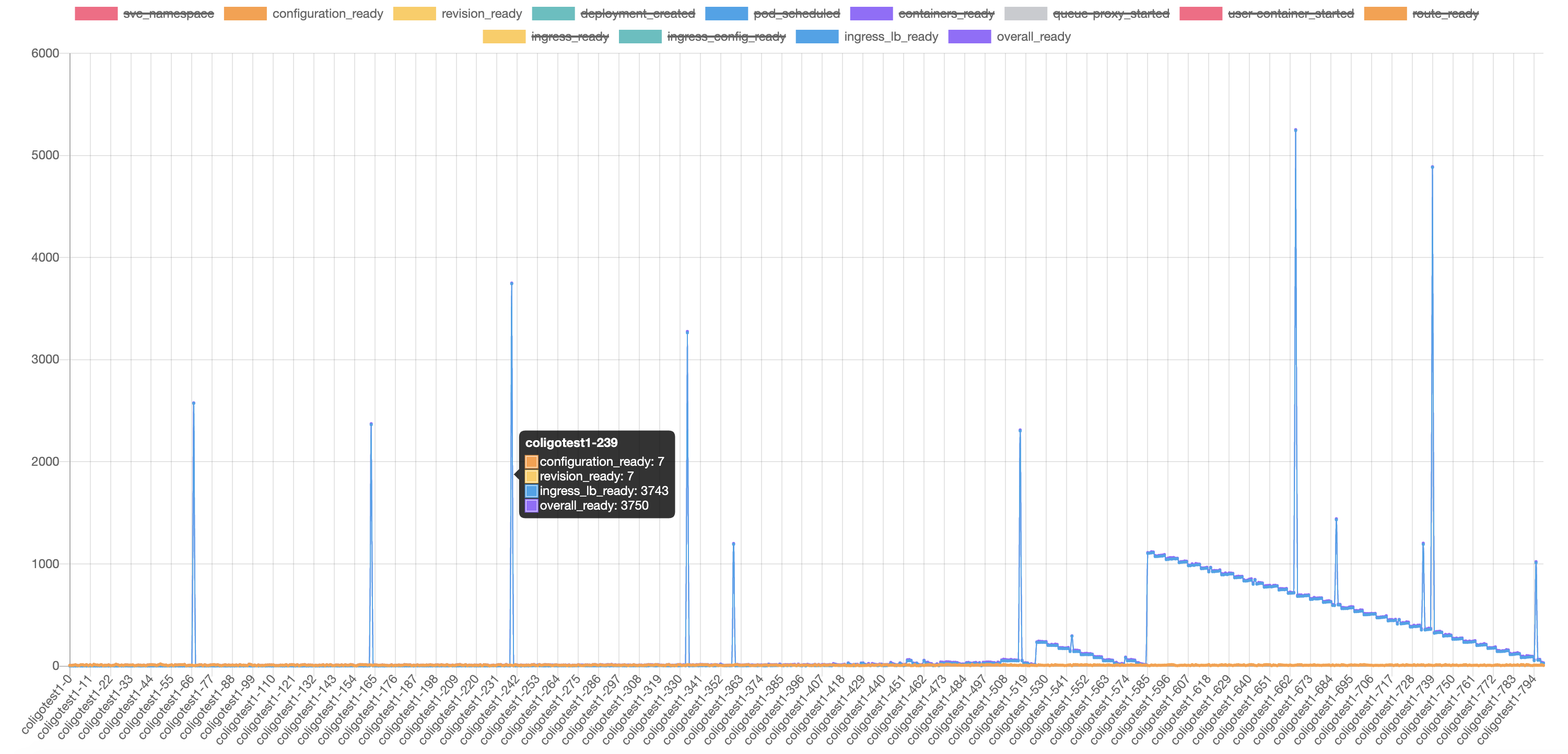

Here is another test, our typical one as described at the beginning of this issue. We create 800 ksvc sequentially at a 5 s interval, which takes roughly 1 hour for all 1600 VirtualServices to be created. We saw similar results, but there are NO errors in the Pilot Errors dashboard. From checking the endpoints in Envoy, we can tell that the endpoint configs for ksvc coligotest-9 are missing in pods istio-ingressgateway-6d899bb698-5fvg6 and istio-ingressgateway-6d899bb698-qckqf, and they stayed missing for more than 20 minutes. Notice that the Pilot Pushes dashboard shows small peaks at 10:15 and 11:15, which should have configured the missing endpoints correctly. I think the missing endpoint configs contribute to the unexpected peak values in the following chart. We have not seen this in releases prior to 1.6.

@sdake FYI, here is the benchmark tool we're using: https://github.com/zhanggbj/kperf. It generates Knative Services at different intervals, as @lanceliuu mentioned above, and reports the ingress_lb_ready duration along with the dashboard.

These results are better, although ADS continues to disconnect and Envoy OOMs: https://github.com/istio/istio/issues/28192. I do feel this is the first PR attempt that manages the numerous constraints of the protocol implementation.


When the connection is dropped because of the 5-second send timeout, the system falls over. Under heavy service churn, the proxy enters a super-overloaded state from which it can take 200-800 seconds to recover; see: https://github.com/istio/istio/issues/25685#issuecomment-668488995.

Yes, that is what I am saying.

What is an acceptable delay for a newly created VS to be picked up? Obviously we want it to be as fast as possible, but we need to be realistic so we can make the appropriate compromises.

FYI, regarding the ratio of Knative Services to VirtualServices to K8s Services: for 800 Knative Services we have only 1.6k VirtualServices.

Each Knative Service creates 2 VirtualServices (we only use blue-ingress, as we only use the knative-serving/cluster-local-gateway and knative-serving/knative-ingress-gateway gateways) and 3 K8s Services.

Relevant PR: https://github.com/istio/istio/pull/24230 that we closed for now due to lack of time to test it

On Thu, Aug 13, 2020 at 2:43 PM Steven Dake notifications@github.com wrote:

Look at pilot_proxy_queue_time, pilot_push_triggers, and pilot_proxy_convergence_time; all are on the Grafana dashboard. 1.6.7 fixed a critical bug around endpoints getting stuck, so you may want to try that.