istio: Envoy crashes when setting up the Envoy access log service

Bug description

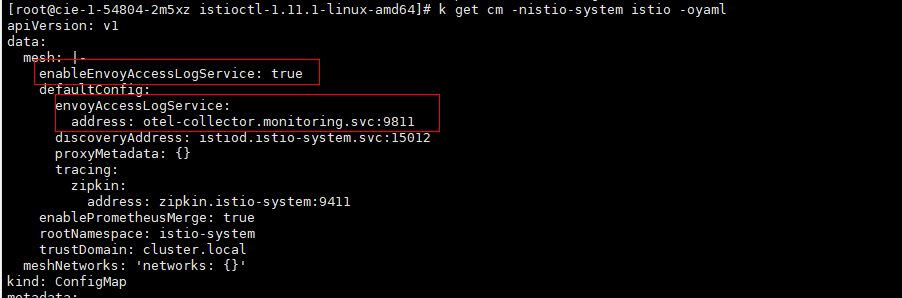

After changing the `istio` ConfigMap as shown in the screenshot above, Envoy crashes with the following logs:

```
2021-08-27T06:34:45.956129Z critical envoy main std::terminate called! (possible uncaught exception, see trace)
2021-08-27T06:34:45.956159Z critical envoy backtrace Backtrace (use tools/stack_decode.py to get line numbers):
2021-08-27T06:34:45.956160Z critical envoy main std::terminate called! (possible uncaught exception, see trace)
2021-08-27T06:34:45.956179Z critical envoy backtrace Backtrace (use tools/stack_decode.py to get line numbers):
2021-08-27T06:34:45.956182Z critical envoy backtrace Envoy version: 9a976952980250ed356ebdd4f94e219e28a041dd/1.19.1-dev/Clean/RELEASE/BoringSSL
2021-08-27T06:34:45.956163Z critical envoy backtrace Envoy version: 9a976952980250ed356ebdd4f94e219e28a041dd/1.19.1-dev/Clean/RELEASE/BoringSSL
2021-08-27T06:34:45.961294Z warning envoy config gRPC config for type.googleapis.com/envoy.config.listener.v3.Listener rejected: Error adding/updating listener(s) 10.237.207.202_15012: Unknown gRPC client cluster 'envoy_accesslog_service'
10.237.0.1_443: Unknown gRPC client cluster 'envoy_accesslog_service'
10.237.0.10_53: Unknown gRPC client cluster 'envoy_accesslog_service'
10.237.207.202_443: Unknown gRPC client cluster 'envoy_accesslog_service'
0.0.0.0_80: Unknown gRPC client cluster 'envoy_accesslog_service'
0.0.0.0_8080: Unknown gRPC client cluster 'envoy_accesslog_service'
10.237.0.209_8000: Unknown gRPC client cluster 'envoy_accesslog_service'
0.0.0.0_15010: Unknown gRPC client cluster 'envoy_accesslog_service'
10.237.0.10_8080: Unknown gRPC client cluster 'envoy_accesslog_service'
0.0.0.0_15014: Unknown gRPC client cluster 'envoy_accesslog_service'
virtualOutbound: Unknown gRPC client cluster 'envoy_accesslog_service'
virtualInbound: Unknown gRPC client cluster 'envoy_accesslog_service'
2021-08-27T06:34:45.979164Z critical envoy backtrace #0: Envoy::TerminateHandler::logOnTerminate()::$_0::operator()() [0x5617e6f01486]
2021-08-27T06:34:45.979177Z critical envoy backtrace #0: Envoy::TerminateHandler::logOnTerminate()::$_0::operator()() [0x5617e6f01486]
2021-08-27T06:34:45.987414Z critical envoy backtrace #1: [0x5617e6f012e9]
2021-08-27T06:34:45.987428Z critical envoy backtrace #1: [0x5617e6f012e9]
2021-08-27T06:34:45.995368Z critical envoy backtrace #2: std::__terminate() [0x5617e75a9633]
2021-08-27T06:34:45.995386Z critical envoy backtrace #2: std::__terminate() [0x5617e75a9633]
2021-08-27T06:34:46.002841Z critical envoy backtrace #3: Envoy::Grpc::AsyncClientManagerImpl::factoryForGrpcService() [0x5617e674a74a]
2021-08-27T06:34:46.002866Z critical envoy backtrace #3: Envoy::Grpc::AsyncClientManagerImpl::factoryForGrpcService() [0x5617e674a74a]
2021-08-27T06:34:46.010364Z critical envoy backtrace #4: Envoy::Extensions::AccessLoggers::Common::GrpcAccessLoggerCache<>::getOrCreateLogger() [0x5617e4fe181d]
2021-08-27T06:34:46.012595Z critical envoy backtrace #4: Envoy::Extensions::AccessLoggers::Common::GrpcAccessLoggerCache<>::getOrCreateLogger() [0x5617e4fe181d]
2021-08-27T06:34:46.018062Z critical envoy backtrace #5: Envoy::Extensions::AccessLoggers::Common::GrpcAccessLoggerCache<>::getOrCreateLogger() [0x5617e4fe1a32]
2021-08-27T06:34:46.019678Z critical envoy backtrace #5: Envoy::Extensions::AccessLoggers::Common::GrpcAccessLoggerCache<>::getOrCreateLogger() [0x5617e4fe1a32]
2021-08-27T06:34:46.025081Z critical envoy backtrace #6: std::__1::__function::__func<>::operator()() [0x5617e4fe2e7d]
2021-08-27T06:34:46.026669Z critical envoy backtrace #6: std::__1::__function::__func<>::operator()() [0x5617e4fe2e7d]
2021-08-27T06:34:46.032533Z critical envoy backtrace #7: std::__1::__function::__func<>::operator()() [0x5617e66bb918]
2021-08-27T06:34:46.033677Z critical envoy backtrace #7: std::__1::__function::__func<>::operator()() [0x5617e66bb918]
2021-08-27T06:34:46.040005Z critical envoy backtrace #8: Envoy::Event::DispatcherImpl::runPostCallbacks() [0x5617e6c982cd]
2021-08-27T06:34:46.040706Z critical envoy backtrace #8: Envoy::Event::DispatcherImpl::runPostCallbacks() [0x5617e6c982cd]
2021-08-27T06:34:46.047389Z critical envoy backtrace #9: event_process_active_single_queue [0x5617e6dbd6d8]
2021-08-27T06:34:46.047735Z critical envoy backtrace #9: event_process_active_single_queue [0x5617e6dbd6d8]
2021-08-27T06:34:46.054742Z critical envoy backtrace #10: event_base_loop [0x5617e6dbc3c1]
2021-08-27T06:34:46.055113Z critical envoy backtrace #10: event_base_loop [0x5617e6dbc3c1]
2021-08-27T06:34:46.062208Z critical envoy backtrace #11: Envoy::Server::WorkerImpl::threadRoutine() [0x5617e66d958d]
2021-08-27T06:34:46.063583Z critical envoy backtrace #11: Envoy::Server::WorkerImpl::threadRoutine() [0x5617e66d958d]
2021-08-27T06:34:46.069610Z critical envoy backtrace #12: Envoy::Thread::ThreadImplPosix::ThreadImplPosix()::{lambda()#1}::__invoke() [0x5617e6fea673]
2021-08-27T06:34:46.069692Z critical envoy backtrace #13: start_thread [0x7ff20dd6f609]
2021-08-27T06:34:46.069719Z critical envoy backtrace Caught Aborted, suspect faulting address 0x53900000011
2021-08-27T06:34:46.069725Z critical envoy backtrace Backtrace (use tools/stack_decode.py to get line numbers):
2021-08-27T06:34:46.069727Z critical envoy backtrace Envoy version: 9a976952980250ed356ebdd4f94e219e28a041dd/1.19.1-dev/Clean/RELEASE/BoringSSL
2021-08-27T06:34:46.069757Z critical envoy backtrace #0: __restore_rt [0x7ff20dd7b3c0]
2021-08-27T06:34:46.070785Z critical envoy backtrace #12: Envoy::Thread::ThreadImplPosix::ThreadImplPosix()::{lambda()#1}::__invoke() [0x5617e6fea673]
2021-08-27T06:34:46.070843Z critical envoy backtrace #13: start_thread [0x7ff20dd6f609]
2021-08-27T06:34:46.074023Z info ads ADS: "@" nginx-69b486cdfd-vjsm8.default-1 terminated rpc error: code = Canceled desc = context canceled
2021-08-27T06:34:46.074089Z info ads ADS: "@" nginx-69b486cdfd-vjsm8.default-2 terminated rpc error: code = Canceled desc = context canceled
2021-08-27T06:34:46.074287Z error Epoch 0 exited with error: signal: aborted
2021-08-27T06:34:46.074309Z info No more active epochs, terminating
```

Affected product area (please put an X in all that apply)

[ ] Docs [ ] Installation [ ] Networking [ ] Performance and Scalability [ ] Extensions and Telemetry [ ] Security [ ] Test and Release [ ] User Experience [ ] Developer Infrastructure [ ] Upgrade

Affected features (please put an X in all that apply)

[ ] Multi Cluster [ ] Virtual Machine [ ] Multi Control Plane

Expected behavior

`envoyAccessLogService` is not expected to work until the pod is re-injected, but Envoy should not crash.

Steps to reproduce the bug

- Install the mesh: `istioctl install --set profile=minimal`
- Inject a pod in the `default` namespace.
- Change the `istio` ConfigMap in the `istio-system` namespace.

Then you will see the pod's Envoy crash.

Version (include the output of istioctl version --remote and kubectl version --short and helm version --short if you used Helm)

istioctl

client version: 1.11.1

control plane version: 1.11.1

data plane version: 1.11.1 (1 proxies)

kubectl

Client Version: v1.17.11

Server Version: v1.17.17-r0-CCE21.6.1.B004-17.37.5

How was Istio installed?

istioctl

Environment where the bug was observed (cloud vendor, OS, etc)

Additionally, please consider running istioctl bug-report and attach the generated cluster-state tarball to this issue.

Refer to the cluster state archive for more details.

About this issue

- State: closed

- Created 3 years ago

- Comments: 16 (13 by maintainers)

I guess no one is working on this currently… I could take a shot, but I'm not sure how long it will take.

The PR has landed in upstream Envoy, so this should be fixed in the next Istio release.