istio: [1.5] Prometheus scraping pods shouldn't be reported in Istio telemetry

Bug description

Previously, the telemetry generated by the Prometheus scraper was intentionally ignored; see this old PR: https://github.com/istio/istio/pull/12251

With telemetry v2, the same problem is back. When Prometheus is configured to scrape the user applications' pods, this traffic is reported in Istio/Envoy telemetry. For the same reasons as one year ago (it was screwing up the Kiali graph), it would be great to ignore this traffic, or at least to identify its origin better so that we can handle it in Kiali.

I suspect the same issue could arise with traffic generated by k8s probes, although I didn't test it; the old rule also had a user-agent check for those.

The old Mixer rule, which is no longer valid with telemetry v2, is:

match: (context.protocol == "http" || context.protocol == "grpc") && (match((request.useragent | "-"), "kube-probe*") == false) && (match((request.useragent | "-"), "Prometheus*") == false)
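To make explicit what that rule filtered, here is a minimal Go sketch of its logic. It assumes Mixer's match() treats a trailing * as a prefix match and a leading * as a suffix match, and that `| "-"` substitutes "-" when the User-Agent header is absent. The helpers mixerMatch and shouldReport are hypothetical names for illustration only; they are not Istio or Envoy APIs, just the behavior telemetry v2 would need to reproduce.

```go
package main

import (
	"fmt"
	"strings"
)

// mixerMatch approximates Mixer's match(value, pattern) helper (assumption):
// a trailing '*' means prefix match, a leading '*' means suffix match,
// otherwise the strings must be equal.
func mixerMatch(value, pattern string) bool {
	switch {
	case strings.HasSuffix(pattern, "*"):
		return strings.HasPrefix(value, strings.TrimSuffix(pattern, "*"))
	case strings.HasPrefix(pattern, "*"):
		return strings.HasSuffix(value, strings.TrimPrefix(pattern, "*"))
	default:
		return value == pattern
	}
}

// shouldReport mirrors the old rule: report only HTTP/gRPC requests whose
// User-Agent is neither a kubelet probe nor the Prometheus scraper.
// A nil userAgent stands for a missing header (the rule's `| "-"` default).
func shouldReport(protocol string, userAgent *string) bool {
	ua := "-"
	if userAgent != nil {
		ua = *userAgent
	}
	if protocol != "http" && protocol != "grpc" {
		return false
	}
	return !mixerMatch(ua, "kube-probe*") && !mixerMatch(ua, "Prometheus*")
}

func main() {
	prom := "Prometheus/2.15.2"
	probe := "kube-probe/1.17"
	curl := "curl/7.68.0"
	fmt.Println(shouldReport("http", &prom))  // false: Prometheus scrape
	fmt.Println(shouldReport("http", &probe)) // false: kubelet probe
	fmt.Println(shouldReport("grpc", &curl))  // true: regular traffic
	fmt.Println(shouldReport("http", nil))    // true: no User-Agent, defaults to "-"
	fmt.Println(shouldReport("tcp", &curl))   // false: not HTTP/gRPC
}
```

The sample User-Agent strings are made up for illustration; only their prefixes ("Prometheus", "kube-probe") matter to the rule.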

Steps to reproduce the bug

- Configure Prometheus and the applications so that the pods are scraped, e.g. via the job kubernetes-pods and/or kubernetes-pods-istio-secure.

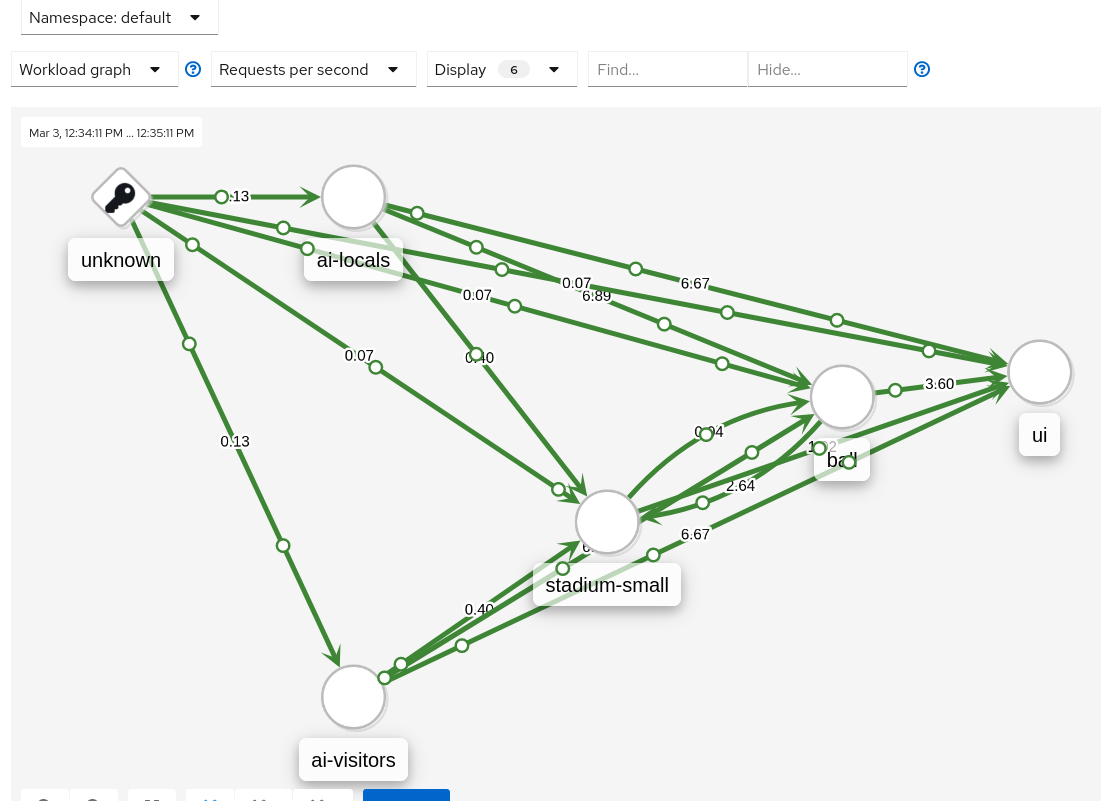

- Check the telemetry in Prometheus and/or via Kiali: you will see traffic that originates from “Unknown”.

Example in Kiali:

Version (include the output of istioctl version --remote and kubectl version and helm version if you used Helm)

client version: 1.5.0-beta.5

control plane version: 1.5.0-beta.5

data plane version: 1.5.0-beta.5 (10 proxies)

How was Istio installed?

via istioctl manifest apply --set profile=demo

Note: I also had to modify the Prometheus ConfigMap to re-enable scraping of the application pods. It seems the Prometheus ConfigMap is not exactly the same as the one used for the Helm chart installation.

Environment where bug was observed (cloud vendor, OS, etc)

minikube

About this issue

- State: closed

- Created 4 years ago

- Comments: 16 (11 by maintainers)

@jotak @jmazzitelli @douglas-reid We can fix it in 1.5.1