imgproxy: PDF support causes runaway goroutines

We appreciate the new formats and features being added to imgproxy; they make me glad we chose imgproxy to power our previewing system. When upgrading to version 2.15.0 (Pro), I noticed that PDF support as a source had been added, so I decided to try it in our software. We generate WebP “seed files” from almost every file using a slower method and give those to imgproxy as the source. In the window between when a file is first added and when its seed is generated, we optionally give imgproxy the original file as the source, where we can, for “snappier” previews.

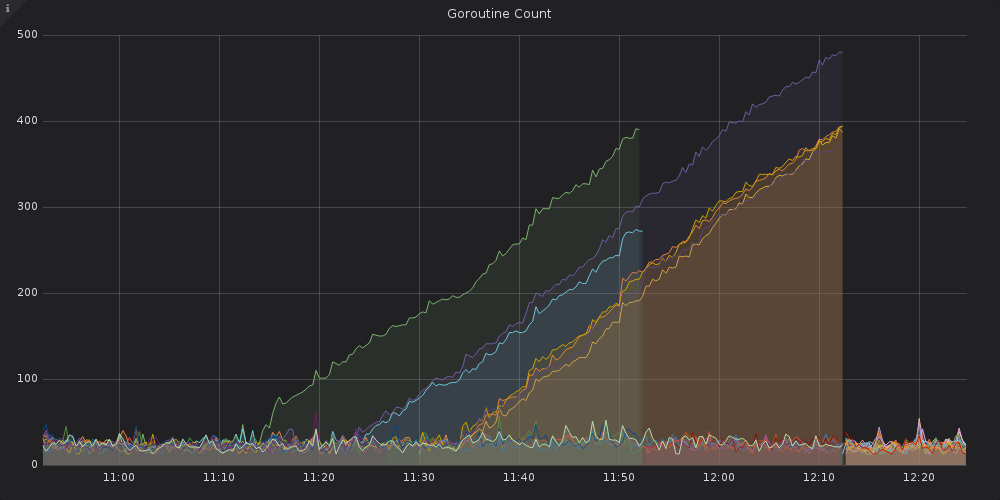

When we added the option to feed PDFs directly into imgproxy, connections to imgproxy began timing out about 15 minutes after deployment, so we rolled back. Digging into our collected metrics, I noticed that the imgproxy pods given PDFs as input began creating goroutines at an alarming rate, and the count never decreased:

(Slopes end when the pods were killed and replaced with new pods.)

Memory usage remained relatively normal during this time, but the latency for establishing a new connection went up, and our proxy layer began timing out after 15 seconds of trying to connect to imgproxy.

I’m not sure exactly what went wrong here, but I suspect one of two possibilities:

- There is a goroutine leak somewhere in the PDF implementation.

- PDFs are simply expected to be slow, and at our traffic volume (>10K requests/minute) requests pile up in imgproxy faster than it can process them.

I suspect the first possibility (hence this issue), because we have imgproxy configured with `IMGPROXY_CONCURRENCY=50` per instance, and >400 goroutines seems really high with that setting.
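Whether the second possibility is plausible can be estimated with Little’s law applied to the processing stage: the average number of busy processing slots equals the arrival rate times the average processing time. If that product exceeds the concurrency setting, the queue grows for as long as the load lasts. A minimal Go sketch; the pod count and PDF processing time below are hypothetical, since neither is given in this issue:

```go
package main

import "fmt"

// slotsNeeded applies Little's law, L = λ × W, to the processing stage:
// λ is the arrival rate in requests per second and W is the average time
// one request spends being processed, in seconds. The result is how many
// processing slots must be busy on average to keep up with the traffic.
func slotsNeeded(requestsPerMinute, avgProcessingSeconds float64) float64 {
	arrivalRate := requestsPerMinute / 60.0
	return arrivalRate * avgProcessingSeconds
}

func main() {
	// Hypothetical: 10,000 requests/minute spread evenly over 4 pods,
	// with PDFs taking 2 s each to process. Neither figure is measured.
	perPod := 10000.0 / 4
	fmt.Printf("%.1f processing slots needed per pod\n", slotsNeeded(perPod, 2))
}
```

With these made-up numbers each pod would need more than the 50 slots that `IMGPROXY_CONCURRENCY=50` provides, so requests would keep queueing; with fast WebP seeds the same traffic would need far fewer.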

Thoughts?

About this issue

- State: closed

- Created 4 years ago

- Comments: 18 (7 by maintainers)

Thank you for digging this out! I found what causes this issue. I build PDFium to reuse as many system-wide installed libraries as possible instead of statically linking the ones distributed with PDFium. It turned out that the lcms2 bundled with PDFium is heavily patched, and it seems one of those patches fixes this issue. I’ll try to release a fixed image ASAP.

Hi!

I just tried spamming imgproxy v2.15.0 with 200 concurrent PDF-processing requests while concurrency was set to 50. I got 400+ goroutines, but their number didn’t grow. Since imgproxy doesn’t do anything special with PDFs, they shouldn’t cause a goroutine leak. Anyway, I’d appreciate some samples of your PDFs and imgproxy URLs.

You should know that `IMGPROXY_CONCURRENCY` != the maximum number of concurrent requests. `IMGPROXY_CONCURRENCY` is the number of images that can be processed simultaneously. If the number of concurrent requests is greater than the concurrency, the extra requests are put into a queue. The maximum number of concurrent requests can be configured with `IMGPROXY_MAX_CLIENTS`, and it’s `IMGPROXY_CONCURRENCY * 10` by default (500 in your case).

Every request is processed in its own goroutine, and additional goroutines are spawned while working with channels. So if you have 200+ concurrent requests, you’ll easily get 400+ goroutines.

Also, PDFium is not thread-safe, and libvips wraps all PDF loading operations with mutexes. Thus, if some of your PDFs are heavy to load, requests may jam at this point.

I always recommend scaling imgproxy horizontally rather than vertically. It’s better to have 10 pods with a concurrency of 5 than a single pod with a concurrency of 50. Try to split your pods into smaller ones and see if it helps.