next-i18next: Memory leak caused by cloning the i18n instance

Describe the bug

PR #1073 introduced a significant memory leak when using `getServerSideProps`.

Occurs in next-i18next version

Steps to reproduce

- Use the simple example from the repo.

- Use `getServerSideProps` instead of `getStaticProps` in `index.js`.

- Run `yarn build` on the example repo.

- Start the server in debug mode. In your `package.json`: `"start": "NODE_OPTIONS='--inspect' next start -p ${PORT:=3000}"`

- In Chrome, open the `chrome://inspect` URL, then click on inspect.

- Go to the `Memory` tab.

- Load `localhost:3000`. Take a snapshot (base).

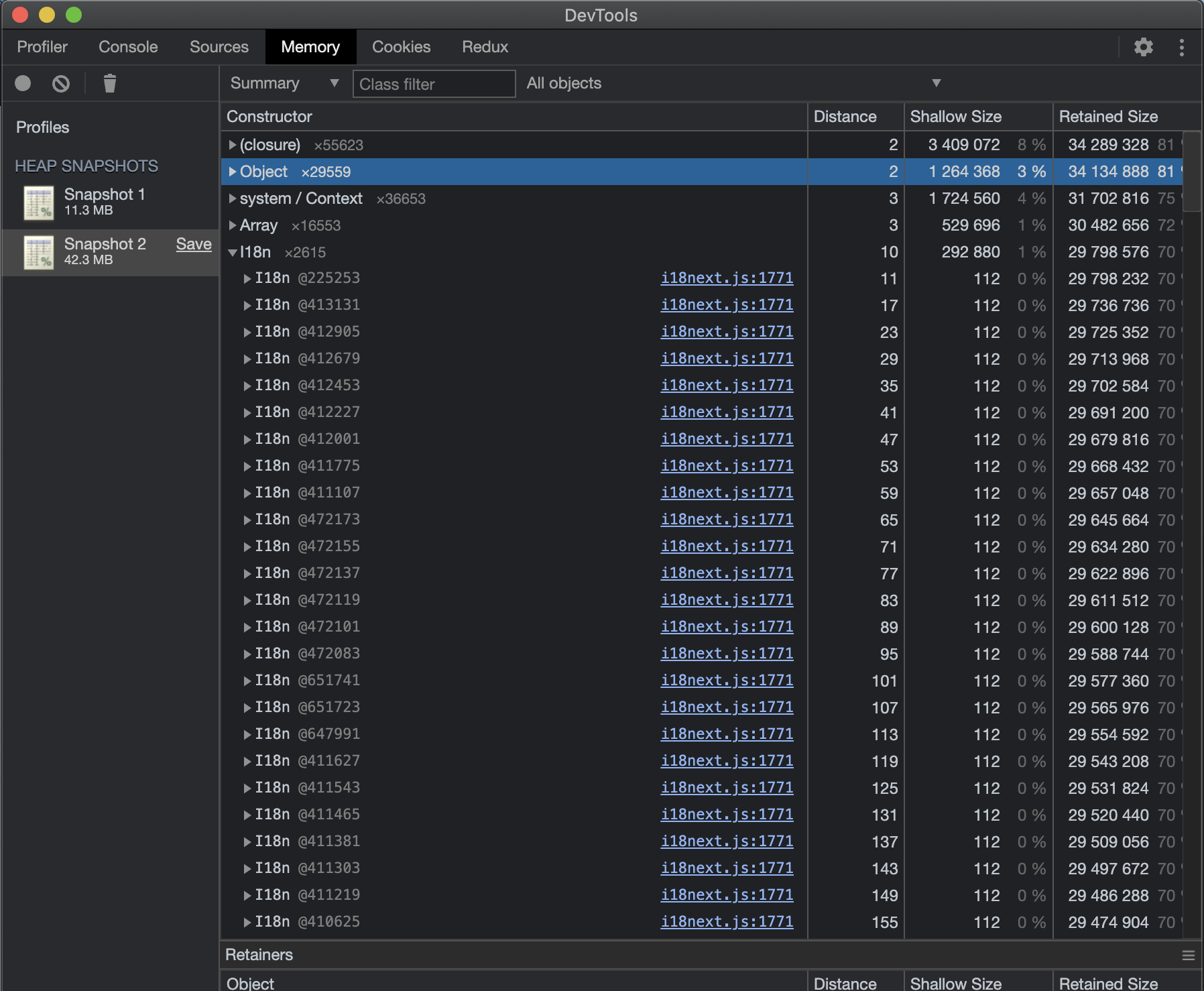

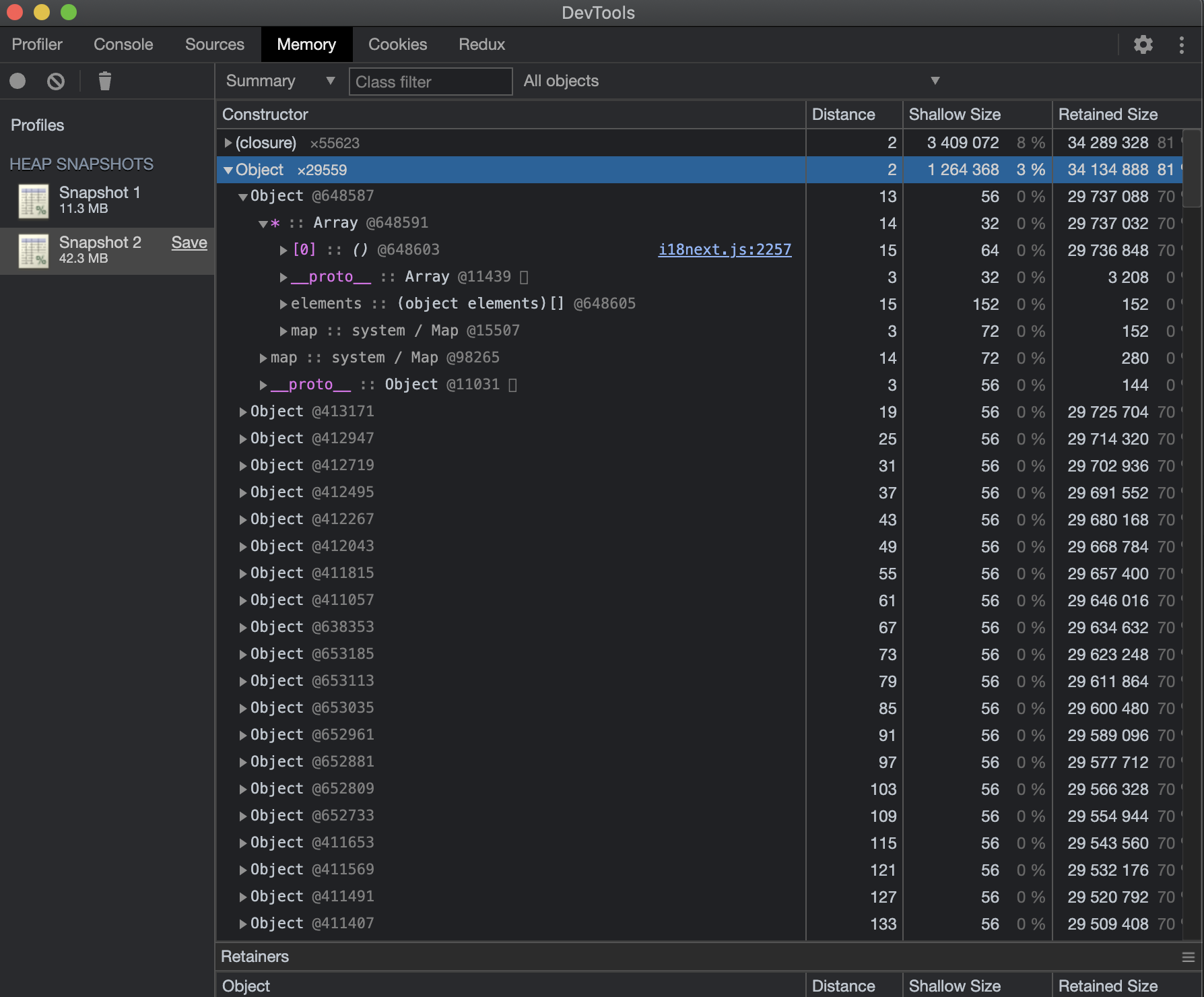

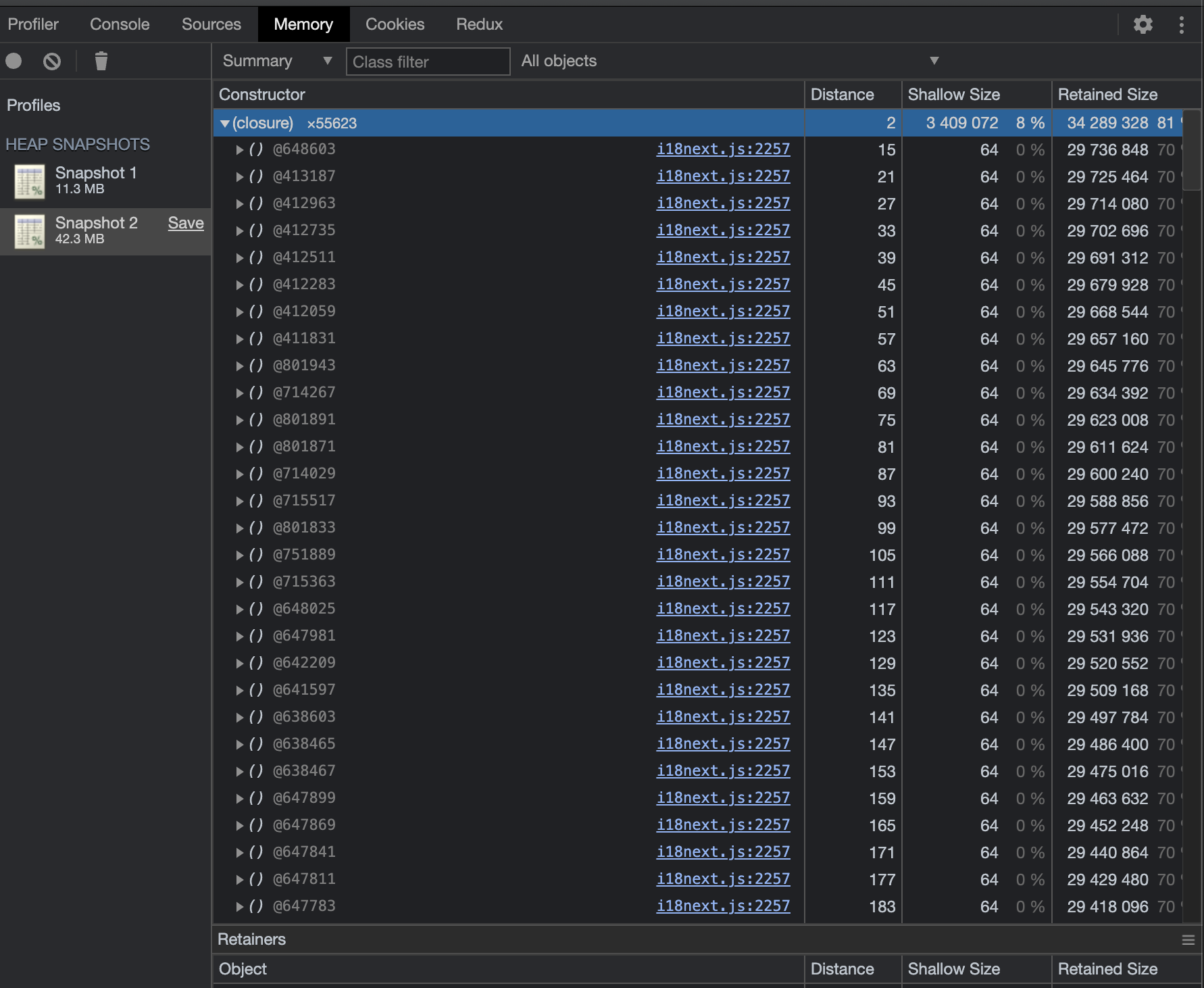

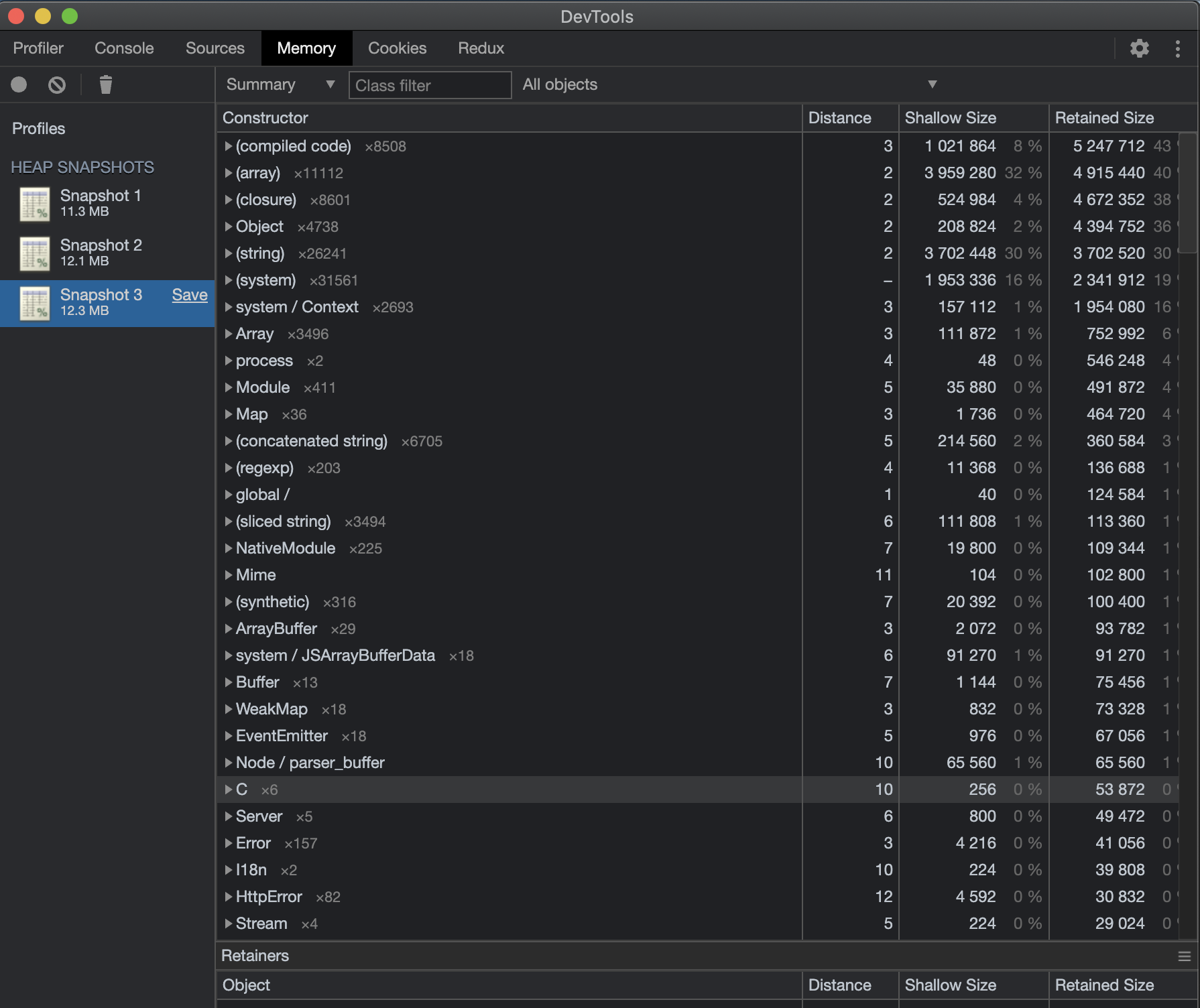

- Use loadtest or any other load testing library to hit `localhost:3000` (2000 total requests). Send 1000 requests, stop the load, click collect garbage, and take a snapshot. Inspect the retained data.

- Send another 1000 requests, stop the load test, click collect garbage, and take a snapshot. Inspect the retained data. NOTE: the snapshot will take some time because of the tree created by the leak.

- Observe that memory has increased significantly and the GC never releases it.

- Inspect the retained objects and you will see the i18n references.
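If loadtest is not available, the "send 1000 requests" step can be approximated with a few lines of Node. This is only a minimal sketch using the built-in `http` module; the helper names `hit` and `sendRequests` and the default concurrency of 10 are illustrative choices, not part of the original report.

```javascript
const http = require('http')

// Send one GET request and resolve with its status code once the body is drained.
function hit(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, (res) => {
        res.resume() // discard the body so the socket is released
        res.on('end', () => resolve(res.statusCode))
      })
      .on('error', reject)
  })
}

// Send `total` requests to `url`, keeping at most `concurrency` in flight.
async function sendRequests(url, total, concurrency = 10) {
  let sent = 0
  const statuses = []

  // Each worker keeps issuing requests until the shared counter reaches `total`.
  async function worker() {
    while (sent < total) {
      sent += 1
      statuses.push(await hit(url))
    }
  }

  const workers = Array.from({ length: Math.min(concurrency, total) }, () => worker())
  await Promise.all(workers)
  return statuses
}

// Example (assumes the example app is listening on port 3000):
// sendRequests('http://localhost:3000/', 1000).then((s) => console.log(s.length))
```

Run it once per batch, then click collect garbage and take the snapshot as described in the steps above.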

Expected behaviour

After stopping the load test and manually triggering GC (or waiting for it to trigger automatically), memory should return to the baseline (plus any additional memory retained after the first request). This is the behaviour in v8.1.1.
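The "back to baseline" check can also be expressed in code, which may help when comparing versions without DevTools. The following is a rough sketch, not the method used in this report: `heapUsedMb` and `returnedToBaseline` are hypothetical helpers, the tolerance of 5 is an arbitrary illustrative threshold, and `global.gc` is only defined when Node is started with `--expose-gc`.

```javascript
// Return the current V8 heap usage in MB. A collection is forced first when
// the process was started with --expose-gc; otherwise global.gc is undefined
// and the reading may include garbage that has not been collected yet.
function heapUsedMb() {
  if (typeof global.gc === 'function') global.gc()
  return process.memoryUsage().heapUsed / (1024 * 1024)
}

// Compare a post-load reading with the baseline reading (same unit for both).
// `tolerance` is an assumed allowance for memory legitimately kept after the
// first request; it is not a value taken from the report.
function returnedToBaseline(baseline, after, tolerance = 5) {
  return after - baseline <= tolerance
}
```

Applied to the figures reported under Screenshots, `returnedToBaseline(11.3, 12.3)` is `true` for 8.1.1, while `returnedToBaseline(11.3, 66.3)` is `false` for 8.1.2.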

Screenshots

As you can see, the exact same test, with the version being the only difference, does not show a memory leak in 8.1.1. In 8.1.1 we go from 11.3 -> 12.3. In 8.1.2 we go from 11.3 -> 66.3.

8.1.2

8.1.1

OS (please complete the following information)

- Device: [MacBook Pro (16-inch, 2019)]

- Browser: [Chrome Version 89.0.4389.90]


About this issue

- State: closed

- Created 3 years ago

- Comments: 21 (14 by maintainers)

@adrai Looks good on my end! No memory leaks detected with this fix

here we go: https://github.com/isaachinman/next-i18next/pull/1110

@isaachinman @adrai Everything is looking great in prod, our servers are very happy! On behalf of my team and myself thank you for the fix and for being awesome! Much appreciated!

Published in v8.1.3. @dcodus Can you please test the release and see if the issue is resolved?

Great. If you are indeed using OSS for that volume of prod traffic, I would encourage you to sponsor packages, or contribute PRs in the future.