semseg: Assertion `t >= 0 && t < n_classes` failed

Thanks for sharing your code! I was not able to run the training code on the Cityscapes dataset. Below are my configuration file and the error messages. The problem seems to be that the labels are out of range. I looked at your loader (SemData): it reads the label files (in my case, the color label files from the Cityscapes dataset) but does not convert them to the range [0, n_classes-1]. Could you have a look at it? Thanks a lot!
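For reference, here is a minimal sketch of the conversion described above as missing, for Cityscapes color label images. It assumes the 19 training-class colors listed in cityscapesscripts' `labels.py` and maps every other color to 255, the `ignore_label` in the config. `color_to_train_id` is a hypothetical helper, not part of semseg. If the image is read with OpenCV, convert BGR to RGB first. Color labels are lossy (in `labels.py` the void class `polegroup` has the same color as `pole`), so generating `*_gtFine_labelTrainIds.png` files with cityscapesscripts is usually the safer route.

```python
import numpy as np

# Cityscapes colors of the 19 training classes, in train-ID order 0..18
# (values as listed in cityscapesscripts' labels.py).
TRAIN_COLORS = [
    (128, 64, 128),   # 0 road
    (244, 35, 232),   # 1 sidewalk
    (70, 70, 70),     # 2 building
    (102, 102, 156),  # 3 wall
    (190, 153, 153),  # 4 fence
    (153, 153, 153),  # 5 pole
    (250, 170, 30),   # 6 traffic light
    (220, 220, 0),    # 7 traffic sign
    (107, 142, 35),   # 8 vegetation
    (152, 251, 152),  # 9 terrain
    (70, 130, 180),   # 10 sky
    (220, 20, 60),    # 11 person
    (255, 0, 0),      # 12 rider
    (0, 0, 142),      # 13 car
    (0, 0, 70),       # 14 truck
    (0, 60, 100),     # 15 bus
    (0, 80, 100),     # 16 train
    (0, 0, 230),      # 17 motorcycle
    (119, 11, 32),    # 18 bicycle
]
IGNORE_LABEL = 255  # matches ignore_label in the yaml config


def color_to_train_id(rgb: np.ndarray) -> np.ndarray:
    """Map an H x W x 3 (or x 4) RGB label image to an H x W array of train IDs.

    Pixels whose color is not one of the 19 training colors become IGNORE_LABEL.
    """
    rgb = np.asarray(rgb)[..., :3].astype(np.int32)  # drop an alpha channel if present
    # Pack each RGB triple into one integer so whole colors can be compared at once.
    packed = (rgb[..., 0] << 16) | (rgb[..., 1] << 8) | rgb[..., 2]
    out = np.full(packed.shape, IGNORE_LABEL, dtype=np.uint8)
    for train_id, (r, g, b) in enumerate(TRAIN_COLORS):
        out[packed == ((r << 16) | (g << 8) | b)] = train_id
    return out
```

The result contains only 0..18 and 255, which is the value set the loss accepts.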

```yaml
DATA:
  data_root: /content/CityScapes_modified
  train_list: dataset/cityscapes/fine_train.txt
  val_list: dataset/cityscapes/fine_val.txt
  classes: 19

TRAIN:
  arch: psp
  layers: 50
  sync_bn: True  # adopt syncbn or not
  train_h: 713
  train_w: 713
  scale_min: 0.5  # minimum random scale
  scale_max: 2.0  # maximum random scale
  rotate_min: -10  # minimum random rotate
  rotate_max: 10  # maximum random rotate
  zoom_factor: 8  # zoom factor for final prediction during training, be in [1, 2, 4, 8]
  ignore_label: 255
  aux_weight: 0.4
  train_gpu: [0]
  workers: 4  # data loader workers
  batch_size: 2  # batch size for training
  batch_size_val: 2  # batch size for validation during training, memory and speed tradeoff
  base_lr: 0.01
  epochs: 200
  start_epoch: 0
  power: 0.9
  momentum: 0.9
  weight_decay: 0.0001
  manual_seed:
  print_freq: 10
  save_freq: 1
  save_path: exp/cityscapes/pspnet50/model
  weight:  # path to initial weight (default: none)
  resume:  # path to latest checkpoint (default: none)
  evaluate: False  # evaluate on validation set, extra gpu memory needed and small batch_size_val is recommend
Distributed:
  dist_url: tcp://127.0.0.1:6789
  dist_backend: 'nccl'
  multiprocessing_distributed: False
  world_size: 1
  rank: 0
  use_apex: True
  opt_level: 'O0'
  keep_batchnorm_fp32:
  loss_scale:

TEST:
  test_list: dataset/cityscapes/fine_val.txt
  split: val  # split in [train, val and test]
  base_size: 2048  # based size for scaling
  test_h: 713
  test_w: 713
  scales: [1.0]  # evaluation scales, ms as [0.5, 0.75, 1.0, 1.25, 1.5, 1.75]
  has_prediction: False  # has prediction already or not
  index_start: 0  # evaluation start index in list
  index_step: 0  # evaluation step index in list, 0 means to end
  test_gpu: [0]
  model_path: exp/dataset/cityscapes/pspnet50/model/train_epoch_200.pth  # evaluation model path
  save_folder: exp/dataset/cityscapes/pspnet50/result/epoch_200/val/ss  # results save folder
  colors_path: dataset/cityscapes/cityscapes_colors.txt  # path of dataset colors
  names_path: dataset/cityscapes/cityscapes_names.txt  # path of dataset category names
```


Error messages:

```
...
Totally 2975 samples in train set.
Starting Checking image&label pair train list...
Checking image&label pair train list done!
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [160,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [161,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [162,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [163,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [164,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [165,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [166,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [167,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [168,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [256,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [257,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [258,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [259,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [260,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [261,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [262,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [263,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [264,0,0] Assertion `t >= 0 && t < n_classes` failed.
/pytorch/aten/src/THCUNN/SpatialClassNLLCriterion.cu:104: void cunn_SpatialClassNLLCriterion_updateOutput_kernel(T *, T *, T *, long *, T *, int, int, int, int, int, long) [with T = float, AccumT = float]: block: [3,0,0], thread: [265,0,0] Assertion `t >= 0 && t < n_classes` failed.
THCudaCheck FAIL file=/pytorch/aten/src/THCUNN/generic/SpatialClassNLLCriterion.cu line=127 error=710 : device-side assert triggered
Traceback (most recent call last):
  File "/usr/lib/python3.6/runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "/usr/lib/python3.6/runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "/content/semseg-master/tool/train.py", line 426, in <module>
    main()
  File "/content/semseg-master/tool/train.py", line 107, in main
    main_worker(args.train_gpu, args.ngpus_per_node, args)
  File "/content/semseg-master/tool/train.py", line 236, in main_worker
    loss_train, mIoU_train, mAcc_train, allAcc_train = train(train_loader, model, optimizer, epoch)
  File "/content/semseg-master/tool/train.py", line 281, in train
    output, main_loss, aux_loss = model(input, target)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/data_parallel.py", line 150, in forward
    return self.module(*inputs[0], **kwargs[0])
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/content/semseg-master/model/pspnet.py", line 102, in forward
    main_loss = self.criterion(x, y)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/loss.py", line 916, in forward
    ignore_index=self.ignore_index, reduction=self.reduction)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py", line 2009, in cross_entropy
    return nll_loss(log_softmax(input, 1), target, weight, None, ignore_index, None, reduction)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py", line 1840, in nll_loss
    ret = torch._C._nn.nll_loss2d(input, target, weight, _Reduction.get_enum(reduction), ignore_index)
RuntimeError: cuda runtime error (710) : device-side assert triggered at /pytorch/aten/src/THCUNN/generic/SpatialClassNLLCriterion.cu:127
```

About this issue

- State: open
- Created 5 years ago
- Comments: 16

I think I may have found the problem. The class index of each pixel is stored as its gray value (brightness) in the label file. The error log also shows that some target index is out of range, i.e. t < 0 or t >= n_classes (pixels equal to ignore_label are skipped by the loss). So the error lies in the label files, not in the code.

Taking Cityscapes as an example, classes is 19 in the yaml file, so the pixel values (label values) in the label images must lie in [0, 18], plus 255 for ignore_label. Likewise for ADE20K, classes is 150 in the yaml file, so the label values must lie in [0, 149], plus 255 for ignore_label.
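A quick way to test this hypothesis is to scan each label image for values outside the allowed set. The function below is a hypothetical helper (not part of semseg) that returns the offending values for an H x W array, such as the one `cv2.imread(label_path, cv2.IMREAD_GRAYSCALE)` produces in the loader.

```python
import numpy as np


def find_invalid_labels(label: np.ndarray, n_classes: int, ignore_label: int = 255) -> list:
    """Return the sorted label values that would trip the loss assertion.

    Valid targets are 0..n_classes-1 plus ignore_label; anything else is reported.
    """
    values = np.unique(label)
    valid = ((values >= 0) & (values < n_classes)) | (values == ignore_label)
    return values[~valid].tolist()
```

An empty list means the file is safe for the loss; a Cityscapes color image read as grayscale will usually report values well above 18.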

So there may be a problem in the label-mapping step (Cityscapes: json → labelTrainIds.png; ADE20K: seg.png → labelTrainIds.png). For example, if the class indices are numbered starting from 1, the largest index equals n_classes, which is out of range. I ran into exactly this recently when mapping ADE20K's 3148 classes to the 150-class set (converting `ADE_train_*_seg.png` to PSPNet's gray format).
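If the labels are 1-based with 0 meaning "unlabeled", one common fix is to shift them down by one and send 0 to the ignore label; MMSegmentation's `reduce_zero_label` option does this for ADE20K. A minimal sketch, assuming input values in 0..n_classes (values already equal to the ignore label stay ignored); `shift_one_based_labels` is a hypothetical name.

```python
import numpy as np


def shift_one_based_labels(label: np.ndarray, ignore_label: int = 255) -> np.ndarray:
    """Convert labels numbered 1..n_classes (0 = unlabeled) to 0..n_classes-1.

    Assumption: 0 marks "unlabeled/other" and becomes ignore_label.
    """
    label = np.asarray(label).astype(np.int64)  # avoid uint8 wrap-around on 0 - 1
    out = label - 1
    out[(label == 0) | (label == ignore_label)] = ignore_label
    return out.astype(np.uint8)
```

After this step, a 150-class ADE20K label image should contain only 0..149 and 255.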

I fixed the SemData class by adding parts of the scripts from https://github.com/meetshah1995/pytorch-semseg. Below is how I changed the SemData class in dataset.py; also add `import numpy as np` at the top of the file. Note that my changes only apply to the Cityscapes dataset.

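```python
from torch.utils.data import Dataset


class SemData(Dataset):
    def __init__(self, split='train', data_root=None, data_list=None, transform=None):
        self.split = split
        # make_dataset is defined earlier in the same dataset.py
        self.data_list = make_dataset(split, data_root, data_list)
        self.transform = transform
```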
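The exact edits are not reproduced in the text, but pytorch-semseg's Cityscapes loader converts `*_gtFine_labelIds.png` values to train IDs with an `encode_segmap` method that loops over a `valid_classes` list. Below is a sketch of the same mapping using a 256-entry lookup table. It uses 255 as the ignore value to match `ignore_label` in this config (pytorch-semseg uses 250), and `label_id_to_train_id` is a hypothetical name; calling it right after the label is read in `__getitem__` is one possible place, an assumption rather than the poster's code.

```python
import numpy as np

IGNORE_LABEL = 255
# Cityscapes labelIds of the 19 evaluated classes, listed in train-ID order 0..18
# (the list pytorch-semseg's Cityscapes loader calls valid_classes).
VALID_LABEL_IDS = [7, 8, 11, 12, 13, 17, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 31, 32, 33]

# Every labelId maps to IGNORE_LABEL unless it is one of the valid classes.
LUT = np.full(256, IGNORE_LABEL, dtype=np.uint8)
LUT[VALID_LABEL_IDS] = np.arange(len(VALID_LABEL_IDS), dtype=np.uint8)


def label_id_to_train_id(label_ids: np.ndarray) -> np.ndarray:
    """Convert a *_gtFine_labelIds.png array (values 0..33) to train IDs 0..18 / 255."""
    return LUT[np.asarray(label_ids, dtype=np.uint8)]
```

A table lookup touches each pixel once, whereas the loop in `encode_segmap` rescans the whole mask for every class.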

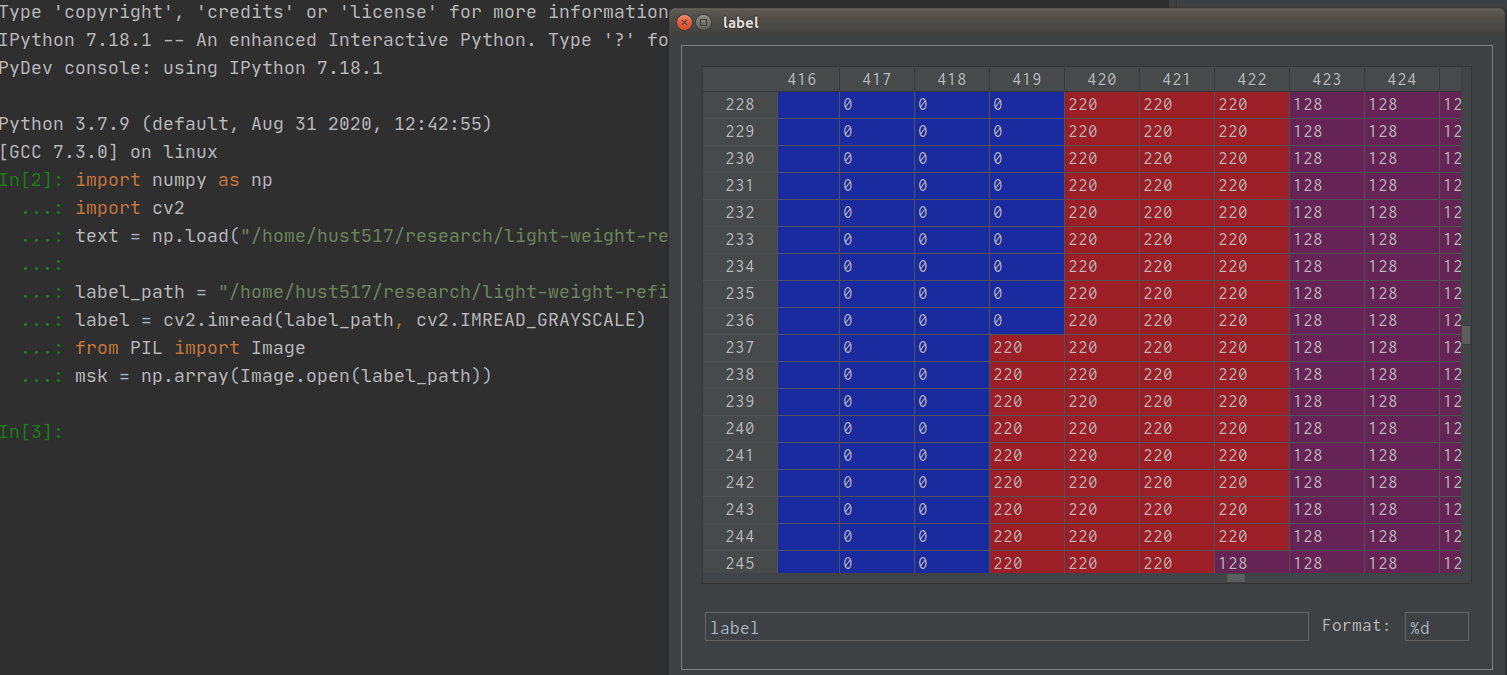

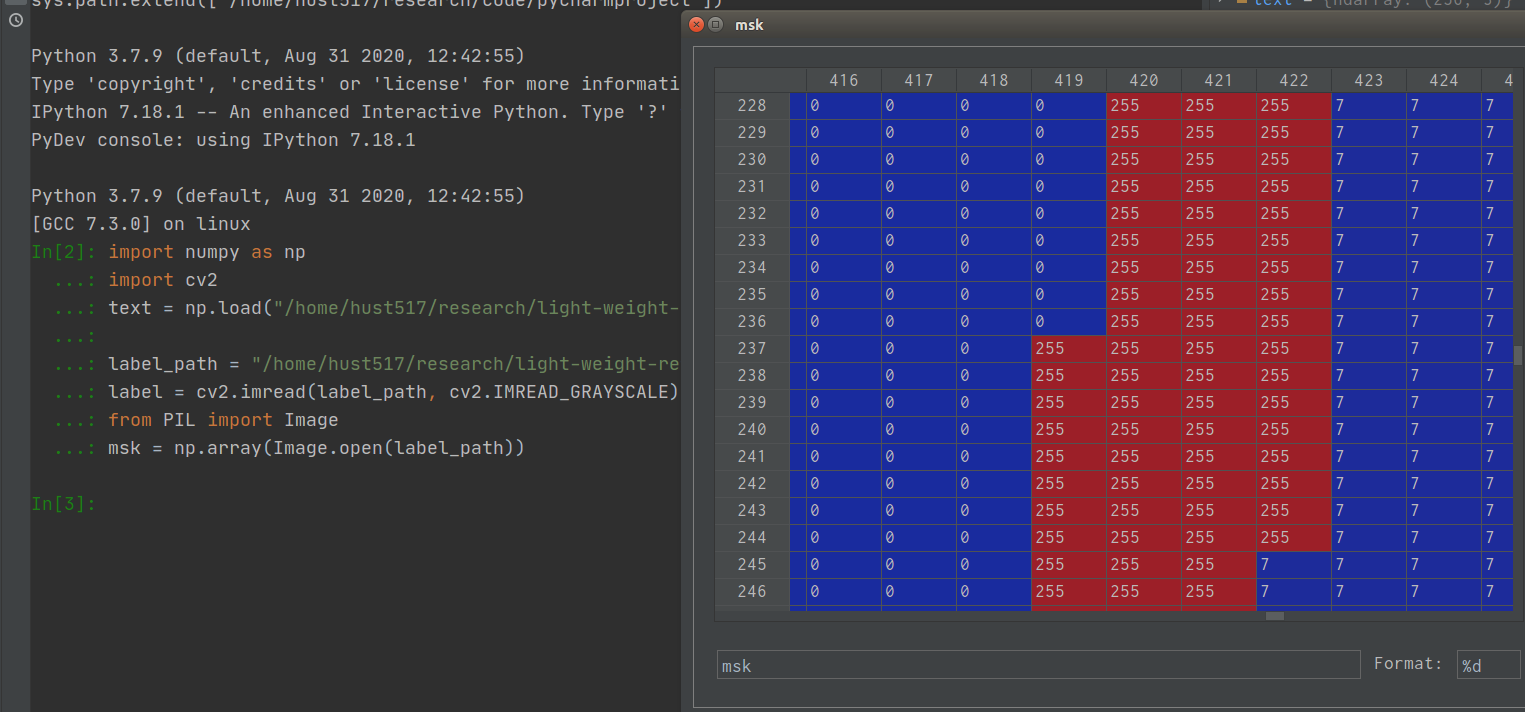

I also ran into this problem recently. Maybe it's because of the way the code reads the label image:

```python
label = cv2.imread(label_path, cv2.IMREAD_GRAYSCALE)  # GRAY 1 channel ndarray with shape H * W
```

When I trained with colored label images, it threw an error like Assertion `t >= 0 && t < n_classes` failed. I replaced that line with:

```python
label = np.array(Image.open(label_path))
```

and then it worked. I compared the two methods by loading the same colored label image both ways and inspecting the values:

cv2.imread:

Image.open:

So I am quite curious about the reason for this. I look forward to somebody's answer.
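One likely explanation, assuming the colored label PNG is a palette ("P" mode) image as the Pascal VOC masks are: its pixels store palette indices, which `np.array(Image.open(...))` returns directly, whereas a grayscale read first expands each index to its RGB color and then converts that color to luminance. The sketch reproduces this with Pillow alone, using `convert("L")` as a stand-in for `cv2.IMREAD_GRAYSCALE` (OpenCV's PNG decoder also expands the palette, though its exact gray values may differ slightly). `write_palette_png` is a hypothetical helper.

```python
import io

import numpy as np
from PIL import Image


def write_palette_png(indices: np.ndarray, palette_rgb: list) -> io.BytesIO:
    """Save an H x W array of class indices as a palette ("P" mode) PNG in memory."""
    img = Image.fromarray(indices.astype(np.uint8))  # mode "L"
    flat = [c for rgb in palette_rgb for c in rgb]
    img.putpalette(flat + [0] * (768 - len(flat)))  # putpalette switches the mode to "P"
    buf = io.BytesIO()
    img.save(buf, format="PNG")
    buf.seek(0)
    return buf


indices = np.array([[0, 1], [1, 0]], dtype=np.uint8)
buf = write_palette_png(indices, [(0, 0, 0), (128, 64, 128)])  # index 1 = road's color

as_indices = np.array(Image.open(buf))  # palette indices, like np.array(Image.open(label_path))
buf.seek(0)
as_gray = np.array(Image.open(buf).convert("L"))  # palette applied, then RGB -> luminance

print(as_indices.tolist())  # class indices survive
print(as_gray.tolist())     # brightness values, not class indices
```

With road's color at index 1, the grayscale read turns class 1 into a value far above 18, which is exactly the kind of target that trips the assertion.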