MediaPipeUnityPlugin: HandLandmark Positions Don't Map Correctly

Plugin Version or Commit ID

v0.10.1

Unity Version

2021.3.3f1

Your Host OS

macOS Monterey 12.2

Target Platform

Android

Description

Hi, I am using this plugin with AR Foundation and have an issue matching the hand landmarks produced by the MediaPipe graph to the screen. The landmarks are normalized, ranging from (0, 0) to (1, 1). Following the tutorial, I convert these 21 landmarks with `rect.GetPoint()`, but they don't line up with the hand: they are shifted slightly. I can't work out why this happens. Could you please help me?

Here is the result on my phone:

Here is my code:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

using Unity.Collections;
using Unity.Collections.LowLevel.Unsafe;
using UnityEngine;
using UnityEngine.UI;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

using Mediapipe;
using Mediapipe.Unity;
using Mediapipe.Unity.CoordinateSystem;

using Stopwatch = System.Diagnostics.Stopwatch;

public class HandTrackingAR : MonoBehaviour
{
    private enum ModelComplexity
    {
        Lite = 0,
        Full = 1,
    }

    private enum MaxNumberHands
    {
        One = 1,
        Two = 2,
    }

    [SerializeField] private TextAsset _gpuConfig;
    [SerializeField] private ModelComplexity _modelComplexity = ModelComplexity.Full;
    [SerializeField] private MaxNumberHands _maxNumHands = MaxNumberHands.One;
    [SerializeField] private RawImage _screen;
    [SerializeField] private ARCameraManager _cameraManager;
    [SerializeField] private GameObject _handPoint;

    private CalculatorGraph _graph;
    private static UnityEngine.Rect _screenRect;
    private GameObject[] hand;
    private Stopwatch _stopwatch;
    private ResourceManager _resourceManager;
    private GpuResources _gpuResources;
    private NativeArray<byte> _buffer;
    private OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>> _handLandmarkStream;

    private IEnumerator Start()
    {
        Glog.Logtostderr = true;
        Glog.Minloglevel = 0;
        Glog.V = 3;
        Protobuf.SetLogHandler(Protobuf.DefaultLogHandler);

        _gpuResources = GpuResources.Create().Value();
        _stopwatch = new Stopwatch();

        // One marker object per hand landmark (MediaPipe returns 21 per hand).
        hand = new GameObject[21];
        for (var i = 0; i < 21; i++)
        {
            hand[i] = Instantiate(_handPoint, _screen.transform);
        }

        _resourceManager = new StreamingAssetsResourceManager();
        if (_modelComplexity == ModelComplexity.Lite)
        {
            yield return _resourceManager.PrepareAssetAsync("hand_landmark_lite.bytes");
            yield return _resourceManager.PrepareAssetAsync("hand_recrop.bytes");
            yield return _resourceManager.PrepareAssetAsync("handedness.txt");
            yield return _resourceManager.PrepareAssetAsync("palm_detection_lite.bytes");
        }
        else
        {
            yield return _resourceManager.PrepareAssetAsync("hand_landmark_full.bytes");
            yield return _resourceManager.PrepareAssetAsync("hand_recrop.bytes");
            yield return _resourceManager.PrepareAssetAsync("handedness.txt");
            yield return _resourceManager.PrepareAssetAsync("palm_detection_full.bytes");
        }

        _graph = new CalculatorGraph(_gpuConfig.text);
        _graph.SetGpuResources(_gpuResources).AssertOk();

        _screenRect = _screen.GetComponent<RectTransform>().rect;

        _handLandmarkStream = new OutputStream<NormalizedLandmarkListVectorPacket, List<NormalizedLandmarkList>>(_graph, "hand_landmarks");
        _handLandmarkStream.StartPolling().AssertOk();

        var sidePacket = new SidePacket();
        sidePacket.Emplace("model_complexity", new IntPacket((int)_modelComplexity));
        sidePacket.Emplace("num_hands", new IntPacket((int)_maxNumHands));
        sidePacket.Emplace("input_rotation", new IntPacket(270));
        sidePacket.Emplace("input_horizontally_flipped", new BoolPacket(true));
        sidePacket.Emplace("input_vertically_flipped", new BoolPacket(true));

        _graph.StartRun(sidePacket).AssertOk();
        _stopwatch.Start();

        // Subscribe only once the graph is running: frames that arrive while the
        // assets above are still loading would otherwise hit a null _graph.
        _cameraManager.frameReceived += OnCameraFrameReceived;
    }

    private unsafe void OnCameraFrameReceived(ARCameraFrameEventArgs eventArgs)
    {
        if (_cameraManager.TryAcquireLatestCpuImage(out XRCpuImage image))
        {
            AllocBuffer(image);

            // Convert the camera image to RGBA32 into the reusable native buffer.
            var conversionParams = new XRCpuImage.ConversionParams(image, TextureFormat.RGBA32);
            var ptr = (IntPtr)NativeArrayUnsafeUtility.GetUnsafePtr(_buffer);
            image.Convert(conversionParams, ptr, _buffer.Length);

            var imageFrame = new ImageFrame(ImageFormat.Types.Format.Srgba, image.width, image.height, 4 * image.width, _buffer);
            // Release the XRCpuImage only after its width and height have been used.
            image.Dispose();

            var currentTimestamp = _stopwatch.ElapsedTicks / (TimeSpan.TicksPerMillisecond / 1000);
            var imageFramePacket = new ImageFramePacket(imageFrame, new Timestamp(currentTimestamp));
            _graph.AddPacketToInputStream("input_video", imageFramePacket).AssertOk();

            StartCoroutine(WaitForEndOfFrameCoroutine());

            var arlistX = new ArrayList(); // recommended
            var arlistY = new ArrayList(); // recommended

            if (_handLandmarkStream.TryGetNext(out var handLandmarks))
            {
                foreach (var landmarks in handLandmarks)
                {
                    for (var i = 0; i < landmarks.Landmark.Count; i++)
                    {
                        // Map the normalized landmark into the RawImage's rect, as in the tutorial.
                        var worldLandmarkPos = _screenRect.GetPoint(landmarks.Landmark[i]);
                        hand[i].transform.localPosition = worldLandmarkPos;
                        arlistX.Add(worldLandmarkPos.x);
                        arlistY.Add(worldLandmarkPos.y);
                    }
                    generateObject(arlistX, arlistY);
                }
            }
        }
    }

    private IEnumerator WaitForEndOfFrameCoroutine()
    {
        yield return new WaitForEndOfFrame();
    }

    private void generateObject(ArrayList alx, ArrayList aly)
    {
        // Wrist (0), thumb tip (4), middle fingertip (12), pinky tip (20) and middle-finger MCP (9).
        Vector2 down = new Vector2((float)alx[0], (float)aly[0]);
        Vector2 left = new Vector2((float)alx[4], (float)aly[4]);
        Vector2 up = new Vector2((float)alx[12], (float)aly[12]);
        Vector2 right = new Vector2((float)alx[20], (float)aly[20]);
        Vector2 middle = new Vector2((float)alx[9], (float)aly[9]);

        float distance1 = Vector2.Distance(down, middle);
        float distance2 = Vector2.Distance(left, middle);
        float distance3 = Vector2.Distance(up, middle);
        float distance4 = Vector2.Distance(right, middle);
        float max = Mathf.Max(distance1, distance2, distance3, distance4);

        // Center the collider on landmark 9 and size it to 1.5x the largest distance.
        Vector3 position = new Vector3(middle.x, middle.y, 1.0f);
        Vector3 scale = new Vector3(max * 1.5f, max * 1.5f, 0.1f);

        GameObject handCollider = GameObject.Find("HandObjectCollider");
        handCollider.transform.localPosition = position;
        handCollider.transform.localScale = scale;
    }

    private void AllocBuffer(XRCpuImage image)
    {
        var length = image.width * image.height * 4;
        // NativeArray is a struct, so check IsCreated instead of comparing it with null.
        if (!_buffer.IsCreated || _buffer.Length != length)
        {
            _buffer = new NativeArray<byte>(length, Allocator.Persistent, NativeArrayOptions.UninitializedMemory);
        }
    }

    private void OnDestroy()
    {
        _cameraManager.frameReceived -= OnCameraFrameReceived;

        if (_graph != null)
        {
            var statusGraph = _graph.CloseAllPacketSources();
            if (!statusGraph.Ok())
            {
                Debug.Log($"Failed to close packet sources: {statusGraph}");
            }

            statusGraph = _graph.WaitUntilDone();
            if (!statusGraph.Ok())
            {
                Debug.Log(statusGraph);
            }

            _graph.Dispose();
        }

        if (_gpuResources != null)
        {
            _gpuResources.Dispose();
        }

        // Dispose the native buffer only if a camera frame ever allocated it.
        if (_buffer.IsCreated)
        {
            _buffer.Dispose();
        }
    }

    private void OnApplicationQuit()
    {
        Protobuf.ResetLogHandler();
    }
}
```
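Separately from the landmark offset, the code above computes the packet timestamp as `_stopwatch.ElapsedTicks / (TimeSpan.TicksPerMillisecond / 1000)`, i.e. `ElapsedTicks / 10`. `Stopwatch.ElapsedTicks` counts in units of `1 / Stopwatch.Frequency` seconds, so this yields microseconds (the unit of MediaPipe timestamps) only when `Stopwatch.Frequency` is 10,000,000. The sketch below reads `Stopwatch.Elapsed` instead, because `TimeSpan` ticks are always 100 ns; `MicrosecondClock` is a hypothetical name, not a plugin API.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper (not part of MediaPipeUnityPlugin): microsecond timestamps
// that do not depend on the platform's Stopwatch.Frequency.
public static class MicrosecondClock
{
    // TimeSpan ticks are always 100 ns, so 10 of them make one microsecond.
    private const long TimeSpanTicksPerMicrosecond = TimeSpan.TicksPerMillisecond / 1000;

    public static long ToMicroseconds(TimeSpan elapsed)
    {
        return elapsed.Ticks / TimeSpanTicksPerMicrosecond;
    }

    // Stopwatch.Elapsed is already converted to TimeSpan units by the runtime.
    public static long ElapsedMicroseconds(Stopwatch stopwatch)
    {
        return ToMicroseconds(stopwatch.Elapsed);
    }
}
```

In `OnCameraFrameReceived` this would read `var currentTimestamp = MicrosecondClock.ElapsedMicroseconds(_stopwatch);`. On a runtime whose `Stopwatch.Frequency` is already 10,000,000, both expressions give the same value.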

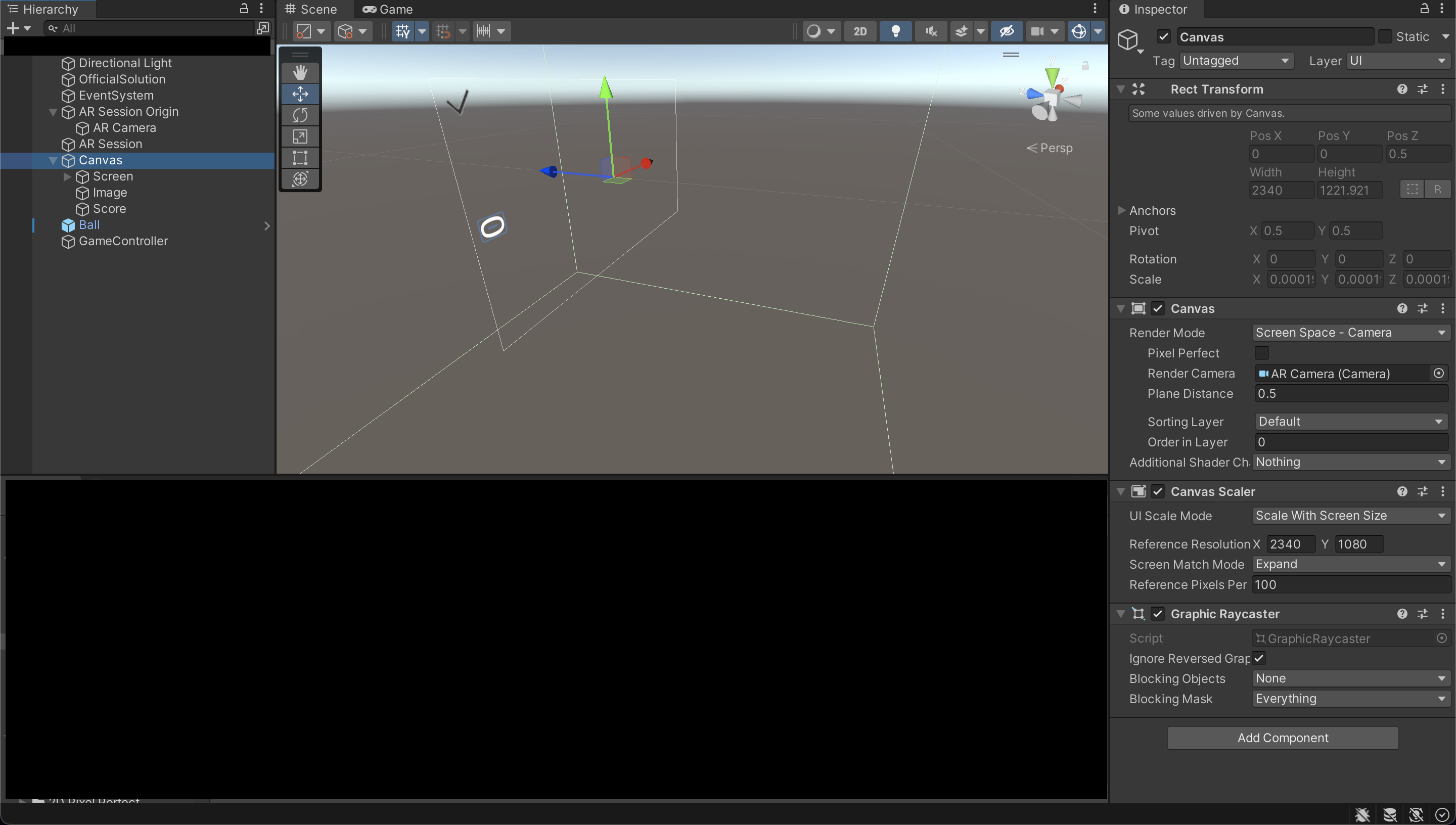

Here is my scene:

Code to Reproduce the issue

No response

Additional Context

No response

About this issue


- State: closed

- Created 2 years ago

- Comments: 15 (7 by maintainers)

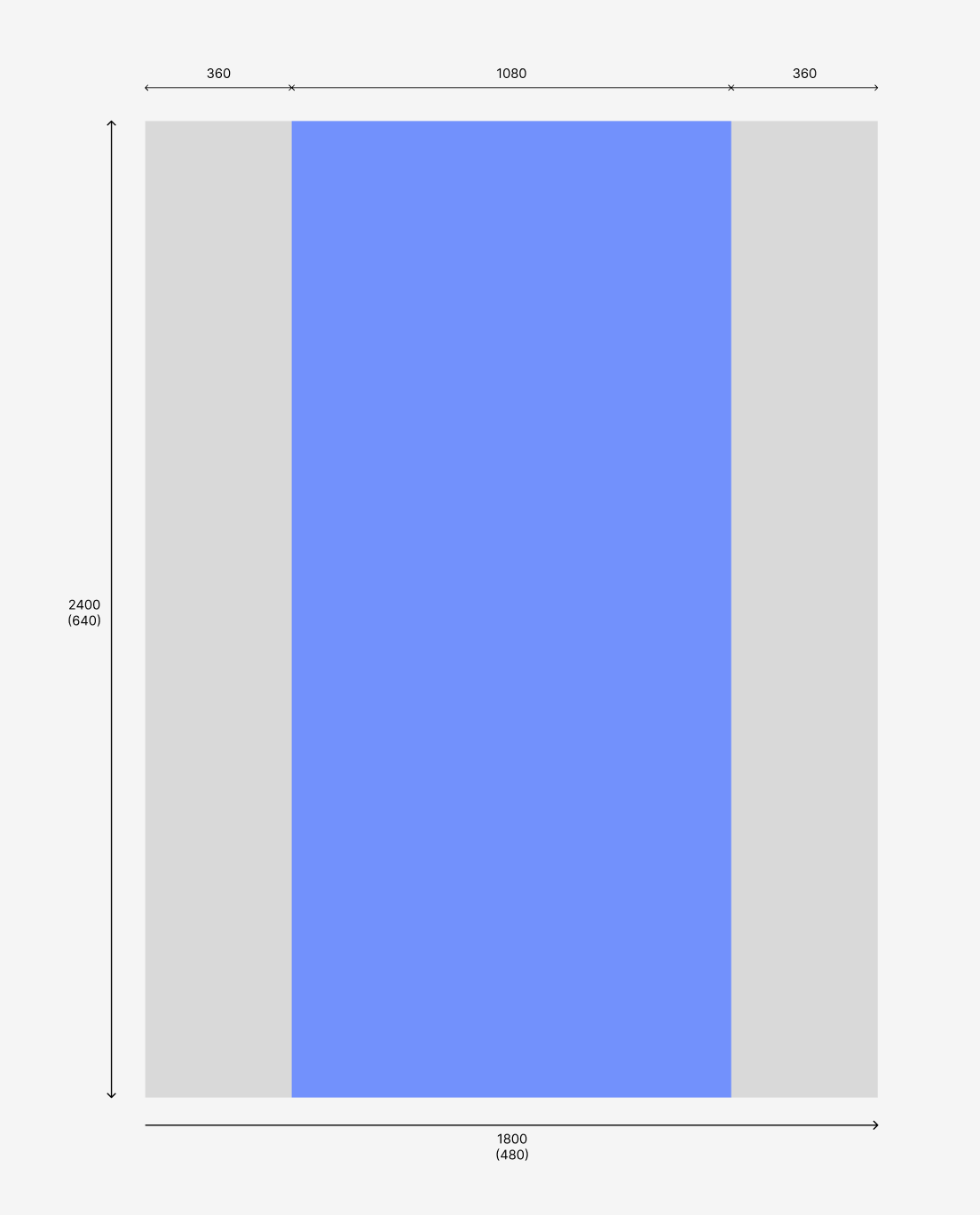

You can crop the input image (e.g. using `ImageCroppingCalculator`) or convert the output value. For example, if the screen size is 2400x1080 and the input image is 640x480, the image is scaled to 2400x1800 to fill the screen (2400 × 3/4 = 1800), so only 640 x (480 × 1080/1800) of it is visible; you therefore need to multiply x by 1800/1080.
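The arithmetic behind both options can be sketched as below. `AspectFillMapping` is a hypothetical helper, not part of MediaPipeUnityPlugin, and it assumes that the camera image is scaled uniformly to cover the whole screen and centered (which is what the 2400 × 3/4 = 1800 figure implies), and that landmark coordinates are already in the screen's orientation, i.e. rotation and flipping are handled. In portrait, the 640x480 image becomes 480 wide by 640 tall; covering a 1080x2400 screen scales it by 2400/640 = 3.75 to 1800x2400, so only the central 1080/1800 = 60% of its width is visible, and normalized x must be stretched by 1800/1080 about the center.

```csharp
using System;

// Hypothetical helper, not part of MediaPipeUnityPlugin. Assumes the camera
// image is scaled uniformly to cover the screen, centered, and that landmark
// coordinates are already expressed in the screen's orientation.
public static class AspectFillMapping
{
    // Ratio of the displayed image size to the screen size on each axis.
    // The axis that fits exactly gets 1; the cropped axis gets a value > 1.
    public static (double X, double Y) CoverFactors(
        double imageWidth, double imageHeight, double screenWidth, double screenHeight)
    {
        // Smallest uniform scale at which the image covers the whole screen.
        double scale = Math.Max(screenWidth / imageWidth, screenHeight / imageHeight);
        return (imageWidth * scale / screenWidth, imageHeight * scale / screenHeight);
    }

    // Re-normalizes a landmark against the visible part of the image by
    // stretching about the center (0.5). Results outside [0, 1] lie in the
    // cropped-away margins, i.e. off screen.
    public static (double X, double Y) ToScreenNormalized(
        double x, double y,
        double imageWidth, double imageHeight, double screenWidth, double screenHeight)
    {
        var (fx, fy) = CoverFactors(imageWidth, imageHeight, screenWidth, screenHeight);
        return (0.5 + (x - 0.5) * fx, 0.5 + (y - 0.5) * fy);
    }

    // The visible part of the image as a normalized rectangle: (X, Y) is the
    // corner nearest the origin. This is the centered region a crop would keep.
    public static (double X, double Y, double Width, double Height) VisibleRegion(
        double imageWidth, double imageHeight, double screenWidth, double screenHeight)
    {
        var (fx, fy) = CoverFactors(imageWidth, imageHeight, screenWidth, screenHeight);
        double width = 1.0 / fx;
        double height = 1.0 / fy;
        return ((1.0 - width) / 2.0, (1.0 - height) / 2.0, width, height);
    }
}
```

For example, `ToScreenNormalized(0.8, 0.5, 480, 640, 1080, 2400)` returns approximately (1.0, 0.5): a landmark 80% of the way across the camera image lands on the right edge of the screen. `VisibleRegion(480, 640, 1080, 2400)` gives roughly x = 0.2, width = 0.6, the part a crop of the input would keep. With the RawImage's pivot at its center (an assumption), stretching about the center is equivalent to multiplying the local x returned by `GetPoint` by 1800/1080. In landscape (640x480 image, 2400x1080 screen) the same code stretches y instead, matching the 640 x (480 × 1080/1800) visible area.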

Please google it or ask elsewhere.