core: Fail on upgrade to 2023.3.0 - Database migration error with duplicate key exception

The problem

Using MariaDB:

When upgrading to the latest 2023.3.0 Database migration from 33 --> 35 fails with a deadlock error.

When examining the logs it appears the following statement is the cause:

UPDATE statistics set start_ts=IF(start is NULL,0,UNIX_TIMESTAMP(start) ), created_ts=UNIX_TIMESTAMP(created), last_reset_ts=UNIX_TIMESTAMP(last_reset) where start_ts is NULL LIMIT 250000;

When running that statement in phpMyAdmin I get an error with a unique key violation.

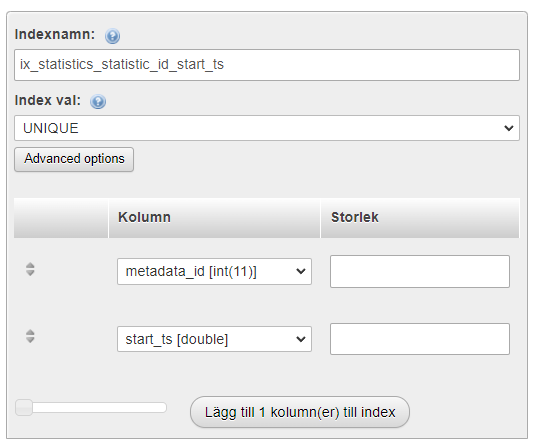

#1062 - Dublett '76-1666249200' för nyckel 'ix_statistics_statistic_id_start_ts'

Swedish, but Dublett = Duplicate and för nyckel = for key

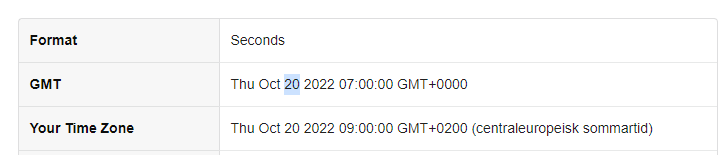

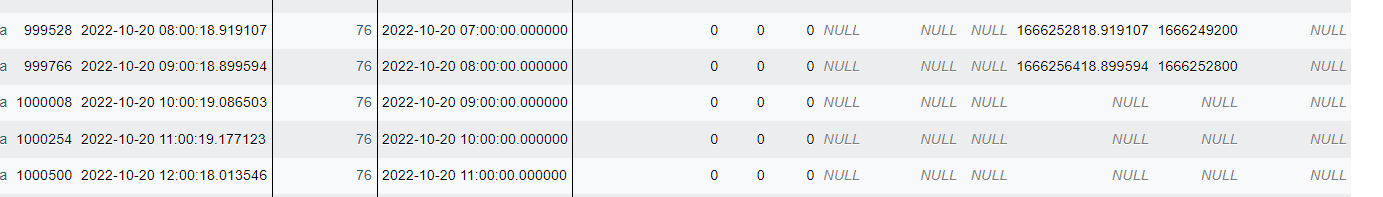

As you can se that specific unixtime exist for the time 07:00, but 09:00 is the one to be updated.

Looking up that specific time the difference there for some reason is exactly the difference between local time and GMT

That index appears to be a unique index so if the update generates identical indexes this would cause this error.

That index appears to be a unique index so if the update generates identical indexes this would cause this error.

Locking at the date it is clear something happened when the script was running…

That is the local time for that unix timestamp.

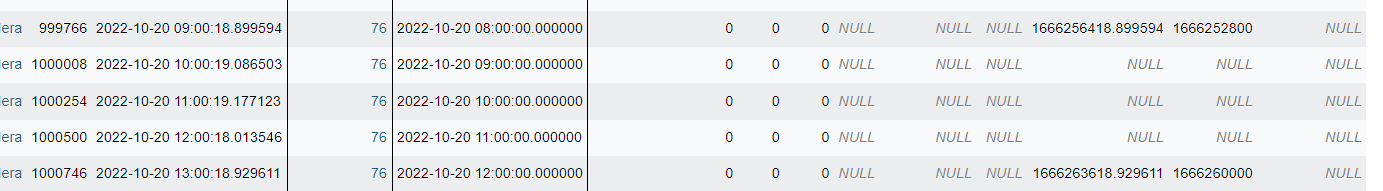

Running the update with some WHERE clauses is interesting. Updating everything with a later date works just fine. However updating everything on another metadataid generates another error… At the exact same date:

#1062 - Dublett '77-1666249200' för nyckel 'ix_statistics_statistic_id_start_ts'

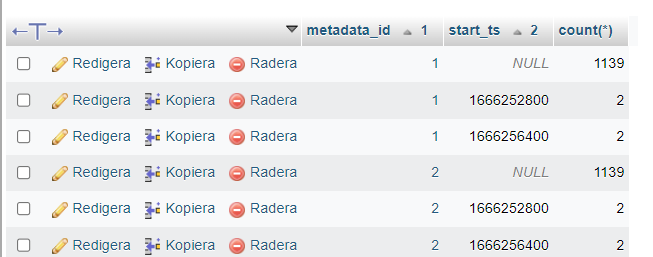

This is the rows that does not update, for that specific metadata_id

I changed the index to be non unique and that of course makes the query work, but it does generate a lot of duplicates, on specific dates it appears.

What version of Home Assistant Core has the issue?

2023.3.0

What was the last working version of Home Assistant Core?

2023.2.?

What type of installation are you running?

Home Assistant OS

Integration causing the issue

No response

Link to integration documentation on our website

No response

Diagnostics information

2023-03-02 21:13:20.778 ERROR (Recorder) [homeassistant.components.recorder.util] Error executing query: (MySQLdb.OperationalError) (1205, ‘Lock wait timeout exceeded; try restarting transaction’) [SQL: UPDATE statistics set start_ts=IF(start is NULL,0,UNIX_TIMESTAMP(start) ), created_ts=UNIX_TIMESTAMP(created), last_reset_ts=UNIX_TIMESTAMP(last_reset) where start_ts is NULL LIMIT 250000;] (Background on this error at: https://sqlalche.me/e/20/e3q8) Traceback (most recent call last): File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1964, in _exec_single_context self.dialect.do_execute( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/default.py”, line 747, in do_execute cursor.execute(statement, parameters) File “/usr/local/lib/python3.10/site-packages/MySQLdb/cursors.py”, line 206, in execute res = self._query(query) File “/usr/local/lib/python3.10/site-packages/MySQLdb/cursors.py”, line 319, in _query db.query(q) File “/usr/local/lib/python3.10/site-packages/MySQLdb/connections.py”, line 254, in query _mysql.connection.query(self, query) MySQLdb.OperationalError: (1205, ‘Lock wait timeout exceeded; try restarting transaction’)

The above exception was the direct cause of the following exception:

Traceback (most recent call last): File “/usr/src/homeassistant/homeassistant/components/recorder/util.py”, line 123, in session_scope yield session File “/usr/src/homeassistant/homeassistant/components/recorder/migration.py”, line 1154, in _migrate_statistics_columns_to_timestamp result = session.connection().execute( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1414, in execute return meth( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/sql/elements.py”, line 485, in _execute_on_connection return connection._execute_clauseelement( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1638, in _execute_clauseelement ret = self._execute_context( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1842, in _execute_context return self._exec_single_context( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1983, in _exec_single_context self._handle_dbapi_exception( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 2325, in _handle_dbapi_exception raise sqlalchemy_exception.with_traceback(exc_info[2]) from e File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/base.py”, line 1964, in _exec_single_context self.dialect.do_execute( File “/usr/local/lib/python3.10/site-packages/sqlalchemy/engine/default.py”, line 747, in do_execute cursor.execute(statement, parameters) File “/usr/local/lib/python3.10/site-packages/MySQLdb/cursors.py”, line 206, in execute res = self._query(query) File “/usr/local/lib/python3.10/site-packages/MySQLdb/cursors.py”, line 319, in _query db.query(q) File “/usr/local/lib/python3.10/site-packages/MySQLdb/connections.py”, line 254, in query _mysql.connection.query(self, query) sqlalchemy.exc.OperationalError: (MySQLdb.OperationalError) (1205, ‘Lock wait timeout exceeded; try restarting transaction’) [SQL: UPDATE statistics set start_ts=IF(start is NULL,0,UNIX_TIMESTAMP(start) ), created_ts=UNIX_TIMESTAMP(created), last_reset_ts=UNIX_TIMESTAMP(last_reset) where start_ts is NULL LIMIT 250000;] (Background on this error at: https://sqlalche.me/e/20/e3q8)

Example YAML snippet

No response

Anything in the logs that might be useful for us?

No response

Additional information

No response

About this issue

- Original URL

- State: closed

- Created a year ago

- Comments: 20 (18 by maintainers)

Commits related to this issue

- Handle slow InnoDB rollback when encountering duplicates during migration fixes #89069 — committed to home-assistant/core by bdraco a year ago

- Handle InnoDB deadlocks during migration (#89073) * Handle slow InnoDB rollback when encountering duplicates during migration fixes #89069 * adjust * fix mock * tests * return on succe... — committed to home-assistant/core by bdraco a year ago

- Handle InnoDB deadlocks during migration (#89073) * Handle slow InnoDB rollback when encountering duplicates during migration fixes #89069 * adjust * fix mock * tests * return on succe... — committed to home-assistant/core by bdraco a year ago

I think the linked PR is likely to solve this unless there are more log messages that are relevant.

I need to do more manual testing with it but it’s getting late here so I’ll have to pick it up tomorrow