graphql-engine: hasura + timescaledb on pg11 > Upserts not working anymore

Hi there,

I recently switched Hasura backend db from timescale/timescaledb:1.2.1-pg10 to timescale/timescaledb:1.2.1-pg11.

As described in this issue on Timescaledb github, when doing an UPSERT operation on an hypertable (through Graphql-engine), I’m now faced with this error: ERROR: hypertables do not support ON CONFLICT statements that reference constraints.

To workaround this, Guys at Timescaledb advised me to instead of mentioning the constraint name (like does graphql-engine statement) :

INSERT INTO "data" ( "timestamp", "uuid" ) VALUES ( "2019-03-06T16:46:46.988Z", "9db6dbe1-cd14-1d17-38c0-4d4a36679fd6" ) ON CONFLICT ON CONSTRAINT "data_pkey" DO NOTHING RETURNING *

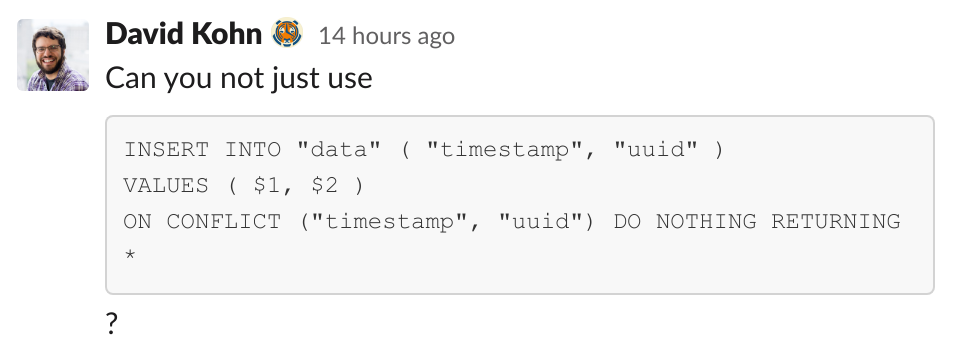

To explicitly mention column names (involved in the constraint):

Even though I believe the “design issue” is more on Timescaledb side, I’d be interested to hear your input on this > would that make sense for Hasura to change the way it’s working now, If not, why?

Many thanks!

About this issue

- Original URL

- State: closed

- Created 5 years ago

- Reactions: 5

- Comments: 30 (3 by maintainers)

Hello, @0x777 @marionschleifer @tirumaraiselvan, did you guys plan to release a fix to this bug? It’s soon going to be 2 years this issue is open. Reproducing should be quite straightforward:

docker pull timescale/timescaledb:latest-pg12docker pull hasura/graphql-engineCREATE EXTENSION IF NOT EXISTS timescaledb CASCADE;as well asCREATE SCHEMA "schema1";&CREATE TABLE "schema1"."table1" ("id" int AS IDENTITY PRIMARY KEY,"value" text NOT NULL, "timestamp" timestamp with time zone NOT NULL);and finally runSELECT create_hypertable('schema1.table1', 'timestamp');to convert schema.table1 to a timescale “hypertable”.with these query variables:

This will throw an “ON CONFLICT ON CONSTRAINT” error, as with Hypertables one can’t reference a primary key by its name, but only by its column names:

Your help would be much appreciated ❤️

Hello, any resolution to this issue?

Hi @marionschleifer , any update on this issue? Thanks!

@ondrejspilkaABB sure. So, I’ve defined a SQL function named

ingest_packetsand registered it in Hasura to be able to use it later via the GraphQL API.and then create

unit.insert_or_get_packet:and

insert_or_update_record:Using the below improvements, I was able to increase the Bringes ingestion rate from <50 packets/second to ~5000 packets/second.

Hope this is useful for you. If you have any questions please let me know.

Until the upstream TimescaleDB issue is fixed, would it be possible to allow

ON CONFLICT DO NOTHINGin graphQL without explicitly specifying any constraint? As the Postgres docs state:This does not cover the

ON CONFLICT DO UPDATEcase, but might be a helpful first step.Thanks @iosifnicolae2 that is super useful.

Would love to see this officially supported by Hasura…

@0x777 any progress on the issue?

@waterdrop01 We’ll try to add a workaround.

Hello @0x777, any chance you share with us the progress and problems you may encounter related to this issue? This would be useful for people following this issue to get a sense of the amount of work remaining to tackle it! Thanks!

Hello @tirumaraiselvan, maybe you can provide estimation, when this is going to be fixed? Use-case is for example batch upserts, so here your product loses some competitiveness…

Hi @tirumaraiselvan, any visibility regarding this issue? Would be greatly appreciated