GRDB.swift: Deadlock in Pool.swift in v6.10.1

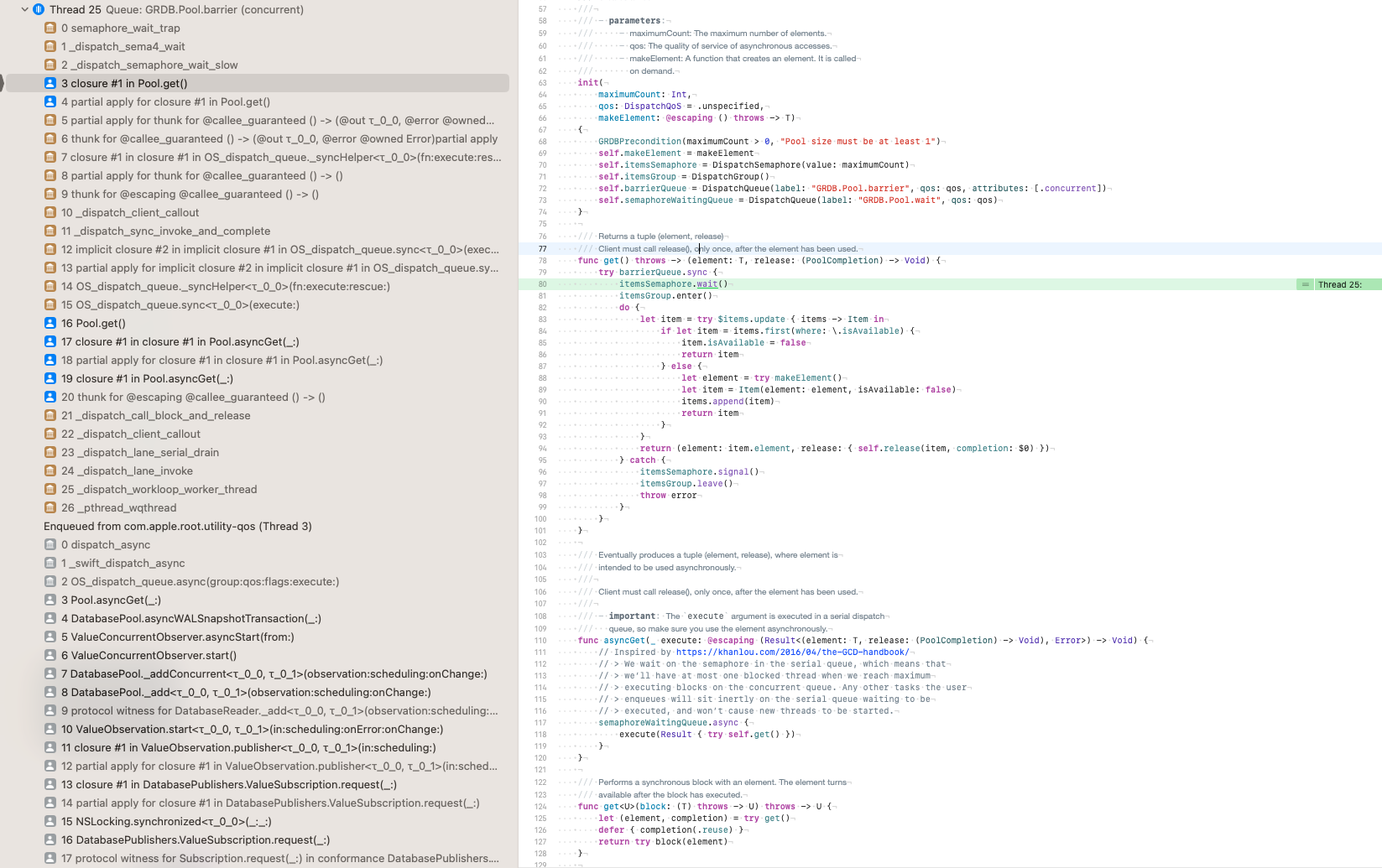

Since updating to 6.10, I’ve been seeing deadlocks with threads stuck at `itemsSemaphore.wait()` at Pool.swift line 80. The stack traces themselves aren’t hugely informative, but I suspect that something in v6.10 has led to unbalanced calls to `itemsSemaphore.signal()`, possibly https://github.com/groue/GRDB.swift/pull/1350.

About this issue

- State: closed

- Created a year ago

- Comments: 19 (13 by maintainers)

Commits related to this issue

- Attempts at finding regression tests for #1362 — committed to groue/GRDB.swift by groue a year ago

- The scenario that leads to the #1362 deadlock — committed to groue/GRDB.swift by groue a year ago

- Fix #1362 — committed to groue/GRDB.swift by groue a year ago

I created a test that demonstrates the issue. I had to cheat the test timing by inserting a sleep (so it’s not for merging) but it demonstrates how observers can block the writing queue and vice versa, leading to a deadlock.

https://github.com/groue/GRDB.swift/pull/1364

It is definitely a deadlock (depending on your definition, it might also be called starvation or exhaustion, but since the observers are blocked, it’s not a situation that will resolve itself). The writer is holding the `queue` and waiting for `itemsSemaphore`, which it will never get. The `itemsSemaphore` is meanwhile held by the observers, who are all waiting for the `queue`, which they will never get. The only solution is to enforce an ordering on these locks (e.g. you may not attempt to acquire `itemsSemaphore` after `queue`).

I’ll try to construct a simple test case and follow up.
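To illustrate the lock-ordering rule, here is a minimal sketch. It is not GRDB's `Pool` and not the fix that was eventually merged. `TwoLockResource`, `withItemThenQueue`, and `runOrderedCallers` are hypothetical names made up for this example; only the `itemsSemaphore` and `queue` names are borrowed from the discussion. Every caller takes the semaphore first and only then enters the serial queue, so no caller can hold the queue while waiting for the semaphore.

```swift
import Foundation

/// Hypothetical stand-in for a resource guarded by two locks:
/// a counting semaphore and a serial dispatch queue. Not GRDB's Pool.
final class TwoLockResource {
    private let itemsSemaphore: DispatchSemaphore
    private let queue = DispatchQueue(label: "example.serial-queue")

    init(itemCount: Int) {
        itemsSemaphore = DispatchSemaphore(value: itemCount)
    }

    /// The single entry point: always acquire `itemsSemaphore` first,
    /// then enter `queue`. Because no code path takes them in the
    /// opposite order, a circular wait between the two cannot form.
    func withItemThenQueue<T>(_ body: () -> T) -> T {
        itemsSemaphore.wait()
        defer { itemsSemaphore.signal() } // balanced with the wait above
        return queue.sync(execute: body)
    }
}

/// Runs many concurrent callers through the ordered entry point and
/// returns how many of them completed.
func runOrderedCallers(iterations: Int, itemCount: Int) -> Int {
    let resource = TwoLockResource(itemCount: itemCount)
    var completed = 0
    DispatchQueue.concurrentPerform(iterations: iterations) { _ in
        // The counter is only mutated inside the serial queue,
        // so the increments do not race with each other.
        resource.withItemThenQueue { completed += 1 }
    }
    return completed
}

let finished = runOrderedCallers(iterations: 200, itemCount: 2)
print("completed callers:", finished)
```

The deadlock described above corresponds to adding a second code path that calls `queue.sync` first and `itemsSemaphore.wait()` inside it. With all items held by callers that are themselves waiting for the queue, neither side can make progress.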