go: runtime: Simple HTTP server causes high thread count on macOS Monterey

What version of Go are you using (go version)?

```
$ go version
go version go1.17.2 darwin/arm64
```

Does this issue reproduce with the latest release?

I cannot test 1.17.3 at the moment.

What operating system and processor architecture are you using (go env)?

Apple M1 Max, 16-inch MacBook Pro, running macOS Monterey.

go env output

```
$ go env
GO111MODULE=""
GOARCH="arm64"
GOBIN=""
GOCACHE="/Users/XXX/Library/Caches/go-build"
GOENV="/Users/XXX/Library/Application Support/go/env"
GOEXE=""
GOEXPERIMENT=""
GOFLAGS=""
GOHOSTARCH="arm64"
GOHOSTOS="darwin"
GOINSECURE=""
GOMODCACHE="/Users/XXX/go/pkg/mod"
GONOPROXY=""
GONOSUMDB=""
GOOS="darwin"
GOPATH="/Users/XXX/go"
GOPRIVATE=""
GOPROXY="https://proxy.golang.org,direct"
GOROOT="/opt/homebrew/Cellar/go/1.17.2/libexec"
GOSUMDB="sum.golang.org"
GOTMPDIR=""
GOTOOLDIR="/opt/homebrew/Cellar/go/1.17.2/libexec/pkg/tool/darwin_arm64"
GOVCS=""
GOVERSION="go1.17.2"
GCCGO="gccgo"
AR="ar"
CC="clang"
CXX="clang++"
CGO_ENABLED="1"
GOMOD="/dev/null"
CGO_CFLAGS="-g -O2"
CGO_CPPFLAGS=""
CGO_CXXFLAGS="-g -O2"
CGO_FFLAGS="-g -O2"
CGO_LDFLAGS="-g -O2"
PKG_CONFIG="pkg-config"
GOGCCFLAGS="-fPIC -arch arm64 -pthread -fno-caret-diagnostics -Qunused-arguments -fmessage-length=0 -fdebug-prefix-map=/var/folders/06/1d1vrn6541133ymsn2s2vwvw0000gn/T/go-build3566489552=/tmp/go-build -gno-record-gcc-switches -fno-common"
```

What did you do?

- Start a simple HTTP server:

```go
package main

import "net/http"

func main() {
	// Answer every request with an empty 200 OK.
	http.HandleFunc("/", func(rw http.ResponseWriter, r *http.Request) {
		rw.WriteHeader(200)
	})

	if err := http.ListenAndServe(":8080", nil); err != nil {
		panic(err)
	}
}
```

- Put some load on it with wrk (500 connections, 10 seconds, 1 thread):

```
wrk -c500 -d10 -t1 http://localhost:8080
```

What did you expect to see?

On previous macOS versions (as on every other OS I’ve tested recently), the app uses only a few threads, usually around 8 to 12, and the count stays there.

What did you see instead?

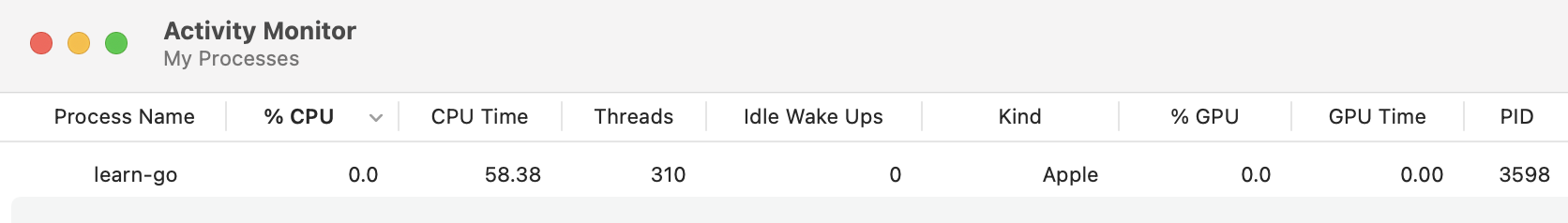

Thread count goes very high and never drops:
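To follow the thread count from inside the process rather than from a screenshot, one could run a variant of the server above that periodically logs the size of the runtime’s built-in `threadcreate` profile. This is a sketch: `threadsCreated` and `logThreads` are hypothetical helper names, and the one-second interval and log format are arbitrary choices.

```go
package main

import (
	"log"
	"net/http"
	"runtime"
	"runtime/pprof"
	"time"
)

// threadsCreated reports how many OS threads the Go runtime has created,
// as recorded by the predefined "threadcreate" profile.
func threadsCreated() int {
	return pprof.Lookup("threadcreate").Count()
}

// logThreads logs GOMAXPROCS, the goroutine count, and the thread count
// once per interval for as long as the process runs.
func logThreads(interval time.Duration) {
	for range time.Tick(interval) {
		log.Printf("GOMAXPROCS=%d goroutines=%d threads=%d",
			runtime.GOMAXPROCS(0), runtime.NumGoroutine(), threadsCreated())
	}
}

func main() {
	go logThreads(time.Second)

	// Same handler as the reproduction: an empty 200 OK for every request.
	http.HandleFunc("/", func(rw http.ResponseWriter, r *http.Request) {
		rw.WriteHeader(http.StatusOK)
	})
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Running wrk against this version should make any growth in the thread count visible in the log, one line per second.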

About this issue

- State: open

- Created 3 years ago

- Comments: 19 (7 by maintainers)

After the 1.18 release I ran some more tests and made a few interesting observations that I’d like to share in case they help.

The M1 Pro/Max has 8 performance cores and 2 efficiency cores. When I limit the number of processors Go uses via GOMAXPROCS, the behaviour changes completely.

If I set GOMAXPROCS to any value from 1 to 4, the problem disappears; it starts as soon as GOMAXPROCS is 5 or higher. With 1 to 4, performance is also excellent, as usual and as expected. The absolute numbers don’t mean much, but the difference is striking:

- GOMAXPROCS=1 to 4: about 175K req/s with wrk, and the thread count stays under 10.
- GOMAXPROCS=5 or more: “only” 60-75K req/s, and the thread count goes wild, 300+.

I’m not deeply familiar with how Go’s scheduler works, but I do know that slow syscalls can cause the runtime to spawn additional threads to take over. Maybe the efficiency cores are so slow that Go concludes a syscall is taking too long and simply spawns another thread?

If so, even modern hybrid CPUs such as Intel’s Alder Lake, with its big.LITTLE-style mix of performance and efficiency cores, might show similar issues. Again, it’s a guess, but I have the feeling it is related to this architectural design.

Let me know your thoughts.

@odeke-em @ianlancetaylor

I just tested again with the latest 1.19 on an up-to-date M1 Pro: same result. The simple HTTP server above still spawns hundreds of threads on macOS.

As I mentioned earlier, only GOMAXPROCS=5 or higher causes the issue. An M1 Pro/Max has 8 performance cores and 2 efficiency cores, and a value of 4, half the performance cores, works perfectly. I’m not sure whether that’s connected.

I really believe the efficiency cores are the problem: maybe certain operations take so much longer on them than on the performance cores that Go decides it has to spawn a new thread to keep up with the load. Could this be related?
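To check the core split that this hypothesis rests on against Go’s view of the machine, one could compare `runtime.NumCPU` (GOMAXPROCS defaults to the number of logical CPUs) with the per-performance-level core counts macOS exposes. The sketch assumes the `hw.perflevel0.physicalcpu` (performance cores) and `hw.perflevel1.physicalcpu` (efficiency cores) sysctl keys found on recent macOS releases for Apple Silicon; on other systems the lookup fails and is reported as unavailable. `perfLevelCores` and `parseSysctlInt` are hypothetical helpers that shell out to the `sysctl` command.

```go
package main

import (
	"fmt"
	"os/exec"
	"runtime"
	"strconv"
	"strings"
)

// parseSysctlInt converts the output of `sysctl -n <name>` into an int.
func parseSysctlInt(out string) (int, error) {
	return strconv.Atoi(strings.TrimSpace(out))
}

// perfLevelCores returns the physical core count for an Apple Silicon
// performance level (0 = performance cores, 1 = efficiency cores).
func perfLevelCores(level int) (int, error) {
	name := fmt.Sprintf("hw.perflevel%d.physicalcpu", level)
	out, err := exec.Command("sysctl", "-n", name).Output()
	if err != nil {
		return 0, fmt.Errorf("sysctl %s: %w", name, err)
	}
	return parseSysctlInt(string(out))
}

func main() {
	fmt.Println("runtime.NumCPU (default GOMAXPROCS):", runtime.NumCPU())
	for level, label := range []string{"performance", "efficiency"} {
		n, err := perfLevelCores(level)
		if err != nil {
			fmt.Println(label, "cores: unavailable:", err)
			continue
		}
		fmt.Println(label, "cores:", n)
	}
}
```

On a 10-core M1 Pro/Max, NumCPU counts both core types, so the default GOMAXPROCS of 10 is well above the value of 4 that avoided the problem.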

@davecheney It’s a stock device used in our lab. Based on the feedback, it really sounds like an M1 Max issue.

Interesting, thank you @chrisprobst! I’ll ask a colleague, @kirbyquerby, who has an M1, to try to reproduce it, and tomorrow morning I’ll also buy an M1 Pro or M1 Max computer to reproduce and then debug it.