harbor: Postgres FATAL: sorry, too many clients already

Harbor Version v1.10.2-d0189bed deployed over k8s using helm chart version harbor-1.3.2.

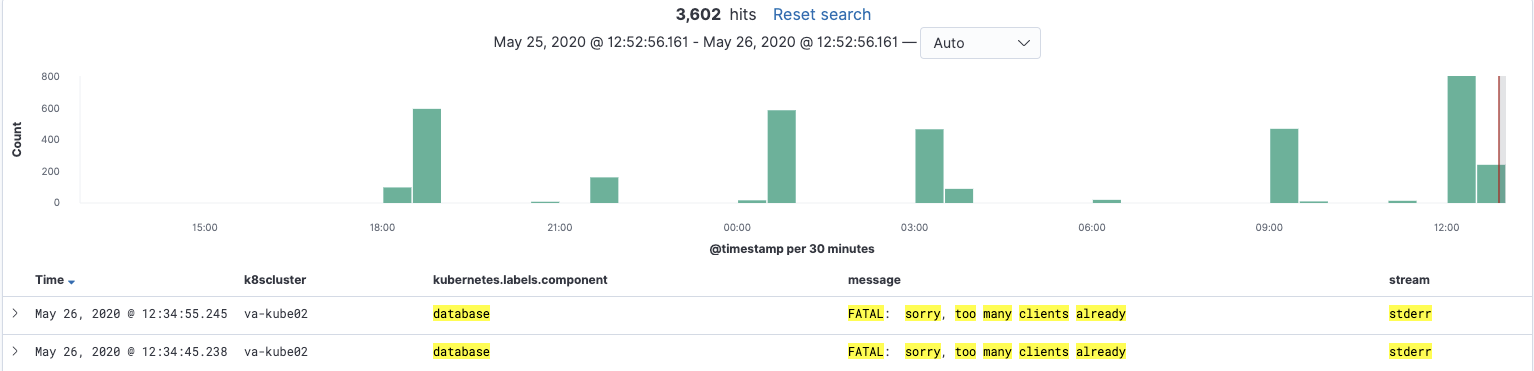

seems like every couple of hours harbor Postgres receiving

FATAL: sorry, too many clients already

after some time it will crash and restart.

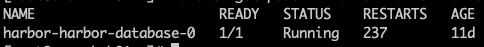

237 restarts in 11 Days.

237 restarts in 11 Days.

I tried to change the value of max_connections in postgresql.conf from 100 to 150,200,250,300. Also trying increasing harbor-core config map values

POSTGRESQL_MAX_IDLE_CONNS

POSTGRESQL_MAX_OPEN_CONNS

Every setting change may have delayed the issue, but eventually Postgres will crash and reload.

LOG: database system was interrupted; last known up at 2020-05-26 09:28:40 UTC

FATAL: the database system is starting up

LOG: database system was not properly shut down; automatic recovery in progress

LOG: redo starts at 0/60B84F60

LOG: invalid record length at 0/60C033C0: wanted 24, got 0

LOG: redo done at 0/60C03398

LOG: last completed transaction was at log time 2020-05-26 09:29:02.691594+00

FATAL: the database system is starting up

LOG: MultiXact member wraparound protections are now enabled

LOG: database system is ready to accept connections

LOG: autovacuum launcher started

LOG: incomplete startup packet

FATAL: sorry, too many clients already

FATAL: sorry, too many clients already

FATAL: sorry, too many clients already

FATAL: sorry, too many clients already

any suggestions?

About this issue

- Original URL

- State: closed

- Created 4 years ago

- Reactions: 1

- Comments: 17 (9 by maintainers)

harbor 1.10 introduced Quota function which will record the blob size in db, so it will consume more connections while replication. I think you can try edit harbor.yml, adjust database.max_open_conns, or edit common/config/core/env POSTGRESQL_MAX_OPEN_CONNS, to check if this can workaround

SHOW max_connections; indeed showed that max connection number changed. POSTGRESQL_MAX_IDLE_CONNS is smaller than POSTGRESQL_MAX_OPEN_CONNS

Tried to change the values of POSTGRESQL_MAX_OPEN_CONNS = 0 and POSTGRESQL_MAX_IDLE_CONNS =2 and the same error came after 2 hours.