fluent-bit: [http_client] cannot increase buffer on k8s

Bug Report

Describe the bug

We occasionally get warnings like this from fluent-bit 1.8.2:

[ warn] [http_client] cannot increase buffer: current=512000 requested=544768 max=512000

Note that we do not use the Kinesis Firehose output.

To Reproduce

- Unknown. The warnings do not seem to depend on the number of pods or the overall cluster load, and we could not determine their source.

Expected behavior

- No warnings are thrown.

Your Environment

- Version used: 1.8.2 (installed via official helm chart)

- Configuration:

    service: |
      [SERVICE]
          Flush 5
          Daemon Off
          Log_Level info
          Parsers_File parsers.conf
          Parsers_File custom_parsers.conf
          HTTP_Server On
          HTTP_Listen 0.0.0.0
          HTTP_Port 2020
          storage.path /var/log/fluentbit-buffer/
          storage.metrics On
    inputs: |
      [INPUT]
          Name tail
          Path /var/log/containers/*.log
          Exclude_Path /var/log/containers/calico-node*.log
          storage.type filesystem
          Parser my-parser
          Tag kube.*
          Mem_Buf_Limit 5MB
          Skip_Long_Lines On
    filters: |
      [FILTER]
          Name kubernetes
          Match kube.*
          Merge_Log On
          Keep_Log Off
          Annotations Off
          K8S-Logging.Parser On
          K8S-Logging.Exclude On
    outputs: |
      [OUTPUT]
          Name es
          Match kube.*
          Host <redacted>
          Logstash_Format On
          Retry_Limit 10
          Trace_Error On
          Replace_Dots On
          Suppress_Type_Name On
    customParsers: |
      [PARSER]
          Name my-parser
          Format regex
          Regex ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<log>.*)$

- Environment name and version (e.g. Kubernetes? What version?): Kubernetes 1.19.11

- Server type and version: MS AKS cluster

- Filters and plugins: tail, kubernetes, es

Additional context

I’m starting to think that fluent-bit’s response buffer might be a little too small for some of the responses Elasticsearch sends, especially when an error occurs: Elasticsearch’s JSON responses can get quite large.

About this issue


- State: closed

- Created 3 years ago

- Reactions: 9

- Comments: 20 (4 by maintainers)

You’re hitting this error in the flb_http_client code: https://github.com/fluent/fluent-bit/blob/651e330609182970f11d14603bf6d58ae64f513e/src/flb_http_client.c#L1199-L1202

flb_http_client is used in a few different places by various fluent-bit components (e.g. when shipping logs to Stackdriver/Splunk/New Relic/etc. or when interrogating the Kubernetes API). Only a few of the modules that use flb_http_client override the default buffer size (4k): https://github.com/fluent/fluent-bit/search?q=flb_http_buffer_size

One of them is the filter_kubernetes module: https://github.com/fluent/fluent-bit/blob/1fa0e94a09e4155f8a6d8a0efe36a5668cdc074e/plugins/filter_kubernetes/kube_meta.c#L387

In our case the problem was that we have a fairly large Kubernetes cluster, so when retrieving pod metadata from the Kubernetes API, flb_http_client ran out of buffer space. The solution was:

(512k is probably a bit overkill, but it’ll allow us to grow the cluster without ever having to worry about this again)

@gabileibo - yep! We noticed that, and also that INPUT.tail accepts both a Buffer_Chunk_Size and a Buffer_Max_Size. We ended up with:

Also found the upstream values.yaml helpful. Not ideal, since it involves some code duplication, but it makes the warnings & errors go bye-bye.

This should be reopened: the problem still persists with fluent-bit helm chart 0.19.20 (app version 1.8.13).

I don’t believe that OUTPUT.Buffer_Size is the culprit here.

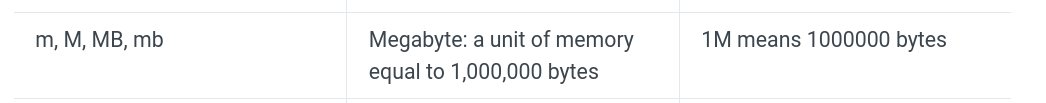

From https://docs.fluentbit.io/manual/pipeline/outputs/elasticsearch, the Elasticsearch output’s Buffer_Size is:

It seems apparent the buffer in question is a different one.

For example, we set Buffer_Size 64KB for the Elasticsearch output, redeployed, and still see the [http_client] cannot increase buffer warning all over the logs.

Notice that max=32000 is displayed here, which indicates it’s not influenced by the Elasticsearch output Buffer_Size at all…

On a related note, I’d like to give kudos to the maintainers/contributors of fluent-bit! 💪

I found the fluent-bit source code to be very well organized and therefore very easy to understand.

It took me no more than half an hour to track down what our problem was by looking through the source code… and I’m an absolute n00b when it comes to fluent-bit.

I don’t understand. Isn’t 2MB valid? Looks correct to me: