extensions: Firebase Big Query extension - Exceeded rate limits: too many api requests per user per method for this user_method

Configuration

- firebase-admin: 9.12.0

- firebase-functions: 3.24.1

- firebase/firestore-bigquery-export@0.1.24

Issue

I have the Firebase extension for streaming data to Big Query installed: https://extensions.dev/extensions/firebase/firestore-bigquery-export

Each month I run a job to import data into my Firestore collection in batches. This month I imported 2706 rows but only 2646 made it into Big Query (60 fewer).

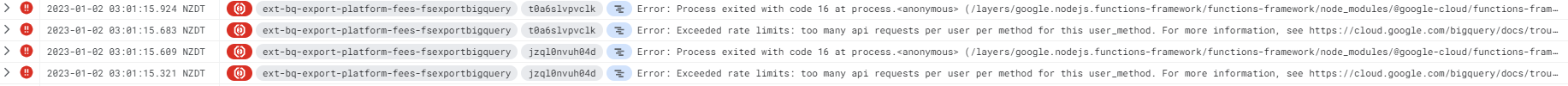
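To see which documents are missing rather than only how many, one approach is to collect the document IDs from both sides and diff them. This is a minimal sketch, assuming the IDs have already been fetched into two plain arrays; the function name `findMissingIds` and the sample IDs are hypothetical and not from the original post.

```javascript
// Return the IDs that exist in Firestore but were not exported to BigQuery.
// Both inputs are plain arrays of document ID strings (assumed already fetched).
function findMissingIds(firestoreIds, bigQueryIds) {
  const exported = new Set(bigQueryIds);
  return firestoreIds.filter((id) => !exported.has(id));
}

// Illustrative data only.
const firestoreIds = ['a1', 'b2', 'c3', 'd4'];
const bigQueryIds = ['a1', 'c3'];
console.log(findMissingIds(firestoreIds, bigQueryIds)); // [ 'b2', 'd4' ]
```

Using a `Set` keeps the lookup cheap even for a few thousand IDs, which is roughly the size of the import described above.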

I am getting the following errors from the extension:

Error: Exceeded rate limits: too many api requests per user per method for this user_method. For more information, see https://cloud.google.com/bigquery/docs/troubleshoot-quotas

Error: Process exited with code 16 at process.

I contacted Firebase support and they suggested I upgrade to the latest firebase-admin and firebase-functions packages, but these have breaking changes. Updating to the latest version of firebase-admin gave me errors. I have not had any more help from them, and it is still happening for multiple collections.

The options I see are:

- Update to the latest firebase-admin and firebase-functions packages and change my code to work with the breaking changes. I think this is unlikely to help.

- Update the Firebase extension from 0.1.24 to the latest version, 0.1.29, which now includes a flag called “Use new query syntax for snapshots” that can be turned on. I can’t find much information about this. I have now done this but will not find out until next month whether it works, as it is hard to replicate in dev.

- Increase the Big Query quota somehow.

- Slow down the data being entered into Firestore or add it daily/weekly rather than monthly.
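The last option above could be sketched as follows: write smaller batches and pause between them so the extension's triggers are spread out over time. This is only an illustration. The helper names (`chunk`, `sleep`, `commitPaced`), the `commitBatch` callback, and the default batch size and pause are all assumptions, and nothing in this thread confirms that pacing alone avoids the quota error.

```javascript
// Split an array into consecutive chunks of at most `size` items.
function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Commit items in small batches with a pause between batches.
// `commitBatch` is a hypothetical async callback supplied by the caller,
// e.g. one that builds a Firestore batch from the chunk and commits it.
async function commitPaced(items, commitBatch, { batchSize = 50, pauseMs = 1000 } = {}) {
  let total = 0;
  const chunks = chunk(items, batchSize);
  for (let i = 0; i < chunks.length; i++) {
    await commitBatch(chunks[i]);
    total += chunks[i].length;
    // Pause between batches, but not after the last one.
    if (i < chunks.length - 1) await sleep(pauseMs);
  }
  return total;
}
```

With the import job from this issue, `commitBatch` would create a `db.batch()`, call `batch.set(...)` for each fee in the chunk, and await `batch.commit()`. The batch size and pause would need tuning against the actual quota.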

If I look in the GCP logs using the query:

protoPayload.status.code ="7"

protoPayload.status.message: ("Quota exceeded" OR "limit")

Here is my code in Nodejs:

    const platformFeesCollectionPath = `platformFees`;
    const limit = 500; // number of writes per batch

    let batch = db.batch();
    let totalFeeCount = 0;
    let counter = 0;

    for (const af of applicationFees) {
      const docRef = db.collection(platformFeesCollectionPath).doc();
      batch.set(docRef, { ...af, dateCreated: getTimestamp(), dateModified: getTimestamp() });
      counter++;

      // Commit each full batch and start a new one.
      if (counter === limit) {
        await batch.commit();
        console.log(`Platform fees batch run for ${counter} platform fees`);
        batch = db.batch();
        totalFeeCount += counter;
        counter = 0;
      }
    }

    // Commit whatever is left in the final, partially filled batch.
    if (counter > 0) {
      await batch.commit();
      console.log(`Platform fees batch run for ${counter} platform fees`);
      totalFeeCount += counter;
    }

    console.log(`Platform fees batch run for ${totalFeeCount} platform fees in total`);

About this issue

- State: closed

- Created a year ago

- Reactions: 1

- Comments: 17 (6 by maintainers)

Thanks @dackers86. This update helped a lot. We are seeing far fewer errors from BQ now, and even when we do get some, it’s still fine because the extension is now integrated with Cloud Tasks, meaning the records don’t get lost on failure anymore. It’s funny, we were about to start implementing our own solution with Pub/Sub to fix these issues and to ensure 100% eventual data consistency, and then you pushed this update, which already covers both 😃

This appears to be working fine now I have updated. I have increased the batch size and will report back in a couple of weeks.

@dackers86 My apologies. It turns out I was using v0.1.30. I was testing the updated version on other collections before upgrading my largest collection.

I have now upgraded to the latest version and will keep an eye on it. Thanks for the quick response.

Yes, thanks @dackers86 and your team for getting this fix sorted. Really appreciate the work and great to know we are being listened to!

Onwards and upwards for Firebase extensions 🚀

A new update has been released this week to fix the above issues. Details can be found here: https://github.com/firebase/extensions/pull/1616

To summarise:

Lifecycle events now exist which means we no longer need to run the table/view initialisation on every sync.

Additionally, a Cloud Tasks function has now been included to provide configurable throttling of tasks, ensuring that too many sync requests do not overload the BQ API.
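The general idea of retrying a rate-limited request instead of dropping it can be shown in isolation. The sketch below is not the extension's implementation and does not use Cloud Tasks. It is a plain in-process retry with exponential backoff, and `withBackoff`, `operation`, and the default values are hypothetical names chosen for illustration.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry an async operation with exponential backoff.
// `operation` is any hypothetical async function, e.g. one sync request to BigQuery.
async function withBackoff(operation, { maxAttempts = 5, baseDelayMs = 500 } = {}) {
  for (let attempt = 1; ; attempt++) {
    try {
      return await operation();
    } catch (err) {
      // Give up after the last allowed attempt and surface the error.
      if (attempt >= maxAttempts) throw err;
      // Wait 1x, 2x, 4x, ... the base delay before the next attempt.
      await sleep(baseDelayMs * 2 ** (attempt - 1));
    }
  }
}
```

A durable queue such as Cloud Tasks goes further than this, because pending work survives a function instance exiting, which an in-memory retry loop does not.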

Please upgrade to v0.1.33 to access this new update.

@dackers86 we are using the latest extension version - 0.1.32, and it was installed with the following configuration:

We never tweaked it manually, aside from lowering “cloud function max instances” to see if it improves the situation, but it didn’t.

It’s important to note that we are not doing batch inserts on our side to the related Firestore collection. Instead, we do singular insertions with ~3 TPS. And even with such a low TPS we are seeing these exceptions every now and then:

Again, what stands out to us is that we are getting the “Exceeded rate limits: too many table update operations for this table.” error, which technically should not happen if the extension is using streaming inserts, as per https://cloud.google.com/bigquery/quotas#streaming_inserts (if my understanding is correct).