fairseq: RuntimeError: "LayerNormKernelImpl" not implemented for 'Half'

🐛 Bug

I followed the RoBERTa pretraining documentation with exactly the same steps and data. Running fairseq-train fails with the error below.

To Reproduce

TOTAL_UPDATES=125000 # Total number of training steps
WARMUP_UPDATES=10000 # Warmup the learning rate over this many updates
PEAK_LR=0.0005 # Peak learning rate, adjust as needed
TOKENS_PER_SAMPLE=512 # Max sequence length
MAX_POSITIONS=512 # Num. positional embeddings (usually same as above)
MAX_SENTENCES=16 # Number of sequences per batch (batch size)
UPDATE_FREQ=16 # Increase the batch size 16x

DATA_DIR=data-bin/wikitext-103

fairseq-train --fp16 $DATA_DIR \
--task masked_lm --criterion masked_lm \
--arch roberta_base --sample-break-mode complete --tokens-per-sample $TOKENS_PER_SAMPLE \
--optimizer adam --adam-betas '(0.9,0.98)' --adam-eps 1e-6 --clip-norm 0.0 \
--lr-scheduler polynomial_decay --lr $PEAK_LR --warmup-updates $WARMUP_UPDATES --total-num-update $TOTAL_UPDATES \
--dropout 0.1 --attention-dropout 0.1 --weight-decay 0.01 \
--max-sentences $MAX_SENTENCES --update-freq $UPDATE_FREQ \
--max-update $TOTAL_UPDATES --log-format simple --log-interval 1

Then I get the following stack trace:

2020-08-03 09:13:25 | INFO | fairseq_cli.train | begin training epoch 1

Traceback (most recent call last):

File "/usr/local/bin/fairseq-train", line 33, in <module>

sys.exit(load_entry_point('fairseq', 'console_scripts', 'fairseq-train')())

File "/fairseq/fairseq_cli/train.py", line 350, in cli_main

distributed_utils.call_main(args, main)

File "/fairseq/fairseq/distributed_utils.py", line 189, in call_main

main(args, **kwargs)

File "/fairseq/fairseq_cli/train.py", line 121, in main

valid_losses, should_stop = train(args, trainer, task, epoch_itr)

File "/usr/lib/python3.6/contextlib.py", line 52, in inner

return func(*args, **kwds)

File "/fairseq/fairseq_cli/train.py", line 217, in train

log_output = trainer.train_step(samples)

File "/usr/lib/python3.6/contextlib.py", line 52, in inner

return func(*args, **kwds)

File "/fairseq/fairseq/trainer.py", line 457, in train_step

raise e

File "/fairseq/fairseq/trainer.py", line 431, in train_step

ignore_grad=is_dummy_batch,

File "/fairseq/fairseq/tasks/fairseq_task.py", line 347, in train_step

loss, sample_size, logging_output = criterion(model, sample)

File "/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/fairseq/fairseq/criterions/masked_lm.py", line 52, in forward

logits = model(**sample['net_input'], masked_tokens=masked_tokens)[0]

File "/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/fairseq/fairseq/models/roberta/model.py", line 119, in forward

x, extra = self.encoder(src_tokens, features_only, return_all_hiddens, **kwargs)

File "/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/fairseq/fairseq/models/roberta/model.py", line 337, in forward

x, extra = self.extract_features(src_tokens, return_all_hiddens=return_all_hiddens)

File "/fairseq/fairseq/models/roberta/model.py", line 345, in extract_features

last_state_only=not return_all_hiddens,

File "/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/fairseq/fairseq/modules/transformer_sentence_encoder.py", line 250, in forward

x = self.emb_layer_norm(x)

File "/.local/lib/python3.6/site-packages/torch/nn/modules/module.py", line 722, in _call_impl

result = self.forward(*input, **kwargs)

File "/.local/lib/python3.6/site-packages/torch/nn/modules/normalization.py", line 170, in forward

input, self.normalized_shape, self.weight, self.bias, self.eps)

File "/.local/lib/python3.6/site-packages/torch/nn/functional.py", line 2049, in layer_norm

torch.backends.cudnn.enabled)

RuntimeError: "LayerNormKernelImpl" not implemented for 'Half'

Environment

- fairseq Version : master

- PyTorch Version : 1.6.0

- OS : Linux (Ubuntu)

- How you installed fairseq (pip, source): source

- Build command you used (if compiling from source):

git clone https://github.com/pytorch/fairseq

cd fairseq

pip install --editable .

- Python version: 3.6.9

- CUDA/cuDNN version: 11.0

About this issue


- State: closed

- Created 4 years ago

- Comments: 33

I get the same exception even when running on cpu. Did you find a solution?

Try using these arguments in webui-user.bat if you are running on CPU:

set COMMANDLINE_ARGS=--skip-torch-cuda-test --precision full --no-half

Try setting the environment variable NVIDIA_VISIBLE_DEVICES='all' or NVIDIA_VISIBLE_DEVICES=all

this worked for me, I am running code through docker

On a MacBook Pro, edit webui-macos-env.sh (vim webui-macos-env.sh) and set:

export COMMANDLINE_ARGS="--skip-torch-cuda-test --upcast-sampling --no-half-vae --use-cpu interrogate --precision full --no-half"

This works.

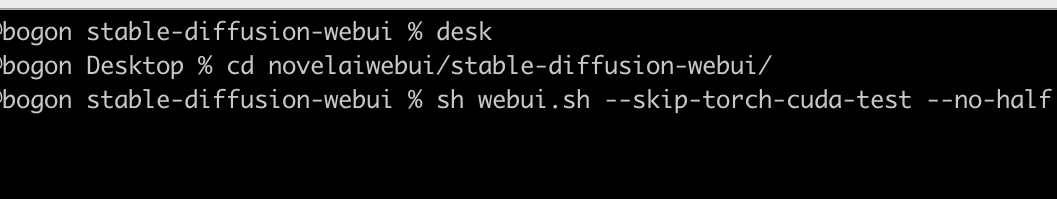

running like this: sh webui.sh --skip-torch-cuda-test --no-half --use-cpu all

TORCH_COMMAND='pip install torch torchvision --extra-index-url https://download.pytorch.org/whl/rocm5.1.1' python launch.py --precision full --no-half

Try this?

I think I found the solution: remove the --fp16 parameter. The whole command line then looks like:

python3.7 train.py Quora/data-bin --restore-file bart.large/model.pt --max-tokens 1024 --task translation --source-lang source --target-lang target --truncate-source --share-decoder-input-output-embed --reset-optimizer --reset-dataloader --reset-meters --required-batch-size-multiple 1 --arch bart_large --criterion label_smoothed_cross_entropy --label-smoothing 0.1 --dropout 0.1 --attention-dropout 0.1 --weight-decay 0.01 --optimizer adam --adam-betas "(0.9, 0.999)" --adam-eps 1e-08 --clip-norm 0.1 --lr-scheduler polynomial_decay --lr 3e-05 --total-num-update 200000 --warmup-updates 500 --update-freq 4 --skip-invalid-size-inputs-valid-test --find-unused-parameters --save-interval-updates 20000 --cpu --device-id 0 --distributed-world-size 1 --distributed-no-spawn --pipeline-chunks 1 --dataset-impl mmap
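The "remove --fp16" fix above can be sketched in Python for anyone who builds the training command from a script. This is only an illustration: `drop_fp16` is a hypothetical helper, not part of fairseq, and it assumes the command is held as a list of arguments in which `--fp16` is a standalone flag.

```python
from typing import List


def drop_fp16(argv: List[str], on_cpu: bool) -> List[str]:
    """Return a copy of argv without the --fp16 flag when training on CPU.

    Hypothetical helper: half-precision training is left untouched when
    on_cpu is False, since the error in this thread was reported on CPU.
    """
    if not on_cpu:
        return list(argv)
    return [arg for arg in argv if arg != "--fp16"]


if __name__ == "__main__":
    cmd = ["fairseq-train", "--fp16", "data-bin/wikitext-103", "--cpu"]
    # Treat the presence of --cpu as the signal that no GPU will be used.
    print(" ".join(drop_fp16(cmd, on_cpu="--cpu" in cmd)))
```

The helper returns a new list rather than editing in place, so the original command is still available if a GPU run is attempted later.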

Try this command if you use Jupyter, a Kaggle notebook, or Colab.

I'm not using Nvidia, so I cannot reinstall CUDA. Any solution?

window

mac: open Terminal, cd to the target directory, and run sh webui.sh --skip-torch-cuda-test --no-half --use-cpu all

Here is the list of arguments: https://github.com/AUTOMATIC1111/stable-diffusion-webui/wiki/Command-Line-Arguments-and-Settings

Solved after reinstalling CUDA and NCCL

Change "torch_dtype = torch.float16" to "torch_dtype = torch.float32"
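Rather than hard-coding float32, the dtype can be picked from the target device. The following is a minimal sketch under the assumption that only CUDA devices should get float16; `dtype_name_for` and `torch_dtype_for` are hypothetical names, not part of PyTorch or any library mentioned here.

```python
def dtype_name_for(device: str) -> str:
    """Return the name of the dtype to use for a device string.

    Assumption: float16 is only requested for CUDA devices ("cuda",
    "cuda:0", ...); everything else, including "cpu", gets float32.
    """
    return "float16" if device.startswith("cuda") else "float32"


def torch_dtype_for(device: str):
    """Resolve the dtype name to the actual torch dtype object."""
    import torch  # imported lazily so dtype_name_for works without torch

    return getattr(torch, dtype_name_for(device))
```

With this in place, `torch_dtype = torch_dtype_for("cpu")` yields `torch.float32`, which matches the change suggested above.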

Half becomes whole.

If you want to run it on GPU, you have to set the default floating-point type to float32 by adding

torch.set_default_dtype(torch.float32)

to your code. I was running the Stable Diffusion model and hit the same error; it went away once I set the floating-point type to float32.

Got the same error; restarting the notebook solved it for me. Notebook: 39_tutorial.transformers.ipynb

pipe = pipe.to("cuda")

RuntimeError: "LayerNormKernelImpl" not implemented for 'Half'
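For scripts that should keep working on machines where this error appears, one defensive pattern is to try half precision first and retry in float32 only when this specific error is raised. This is a rough sketch, not a fix confirmed in this thread: `is_half_kernel_error` and `run_with_fp32_fallback` are hypothetical helpers, and the `run` callback is assumed to build and execute the model for a given dtype name.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def is_half_kernel_error(exc: BaseException) -> bool:
    """True if exc looks like the "... not implemented for 'Half'" error."""
    return isinstance(exc, RuntimeError) and "not implemented for 'Half'" in str(exc)


def run_with_fp32_fallback(run: Callable[[str], T]) -> T:
    """Call run("float16"); on the Half kernel error, retry with "float32".

    Any other exception is re-raised unchanged.
    """
    try:
        return run("float16")
    except RuntimeError as exc:
        if not is_half_kernel_error(exc):
            raise
        return run("float32")
```

The check matches on the message text, so it would need adjusting if a different PyTorch build words the error differently.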

I solved this problem by starting the web UI with the following macOS Terminal commands:

cd stable-diffusion-webui
./webui.sh --precision full --no-half

Special thanks: https://stable-diffusion-art.com/install-mac/comment-page-1/

I'm using a MacBook Pro running Windows 11 and these COMMANDLINE_ARGS worked for me: --autolaunch --skip-torch-cuda-test --disable-nan-check --no-half --use-cpu all

I have a similar issue. When I run on my CPU, I get the error:

If I run on my GPU, I get:

Any ideas?