roslyn: 'workspace/symbol' is slow to respond to cancellation

Version Used: VS 2019 int.master 16.6

Steps to Reproduce:

- Open a large solution with many projects (in this scenario, I used the internal Editor.sln).

- Search for a symbol with Ctrl+Q, typing its name one character at a time.

Expected Behavior: Any searches queued should be canceled by subsequent TYPECHARs.

Actual Behavior: I typed ‘CreateAsync’. Ctrl+Q queued one search for ‘C’ and then tried to cancel it on the next TYPECHAR, but Roslyn’s ‘workspace/symbol’ handler did not yield or respond to the cancellation for ~6 seconds.

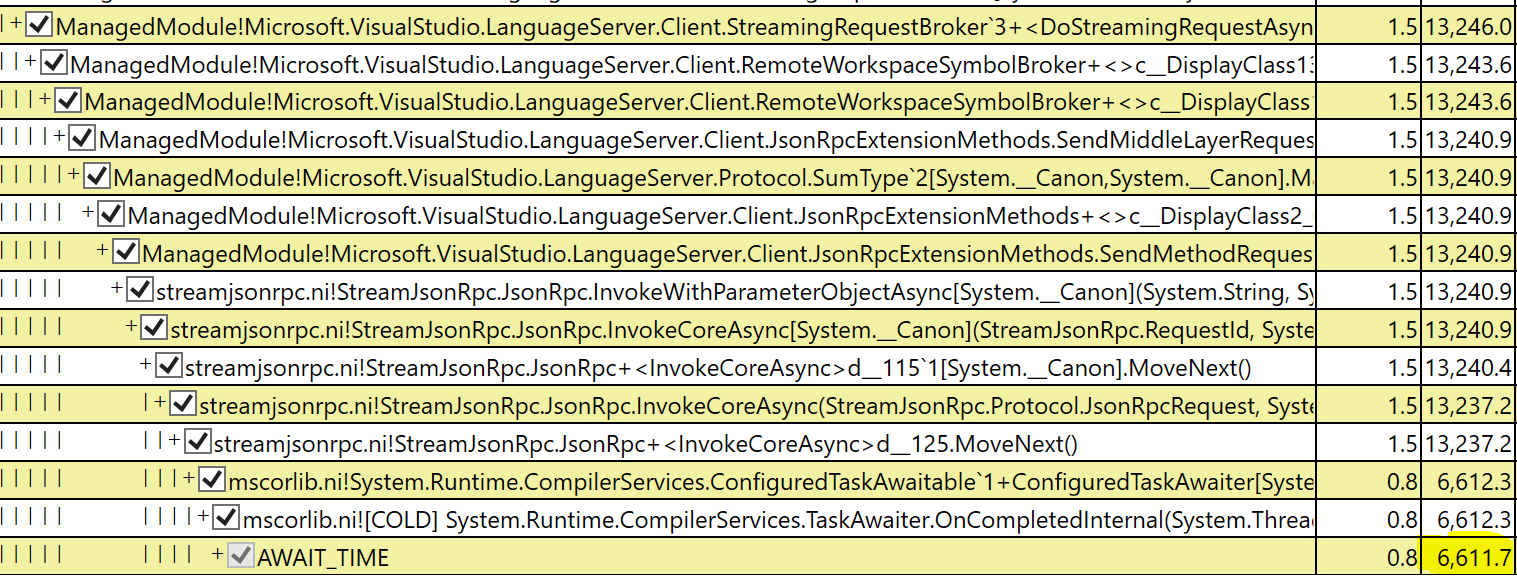
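The expected behavior can be illustrated with a minimal client-side sketch using Python's asyncio: every new keystroke cancels the in-flight search task before starting the next one. This is not the Ctrl+Q or VS LSP client implementation; `fake_search`, `type_query`, and the timings are hypothetical. Note that asyncio cancellation only takes effect at an `await`, the same cooperative model as a .NET CancellationToken that is only checked at certain points. That is why a server busy with a long, uninterrupted stretch of work can appear to ignore a cancel.

```python
import asyncio
from typing import List, Optional


async def fake_search(query: str, log: List[str]) -> None:
    # Hypothetical stand-in for a workspace/symbol request: the work is split
    # into small steps, and each await is a point where cancellation can land.
    for _ in range(10):
        await asyncio.sleep(0.01)
    log.append(f"completed {query!r}")


async def type_query(text: str, log: List[str]) -> None:
    current: Optional[asyncio.Task] = None
    for i in range(1, len(text) + 1):
        if current is not None and not current.done():
            current.cancel()  # the new TYPECHAR cancels the in-flight search
            log.append(f"canceled {text[:i - 1]!r}")
        current = asyncio.create_task(fake_search(text[:i], log))
        await asyncio.sleep(0)  # keystrokes arrive faster than a search finishes
    await current  # only the search for the full query is allowed to finish


log: List[str] = []
asyncio.run(type_query("CreateAsync", log))
print(log)
```

Only the last search completes; every earlier one is canceled as soon as the next character arrives, which is what the issue expects from ‘workspace/symbol’.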

It’s not entirely clear to me what’s happening. I debugged through the search task scheduler, the LSP language client extension, and Roslyn, and found:

- The cancellation token is canceled immediately on the subsequent TYPECHAR.

- The cancellation message reaches WorkspaceSymbolAsync’s cancellationToken immediately on the server side.

- There’s a six-second delay…

- Then ‘workspace/symbol’ seems to return at that point with a canceled token.
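For reference, LSP cancellation is a separate `$/cancelRequest` notification that carries the `id` of the request to cancel. The protocol still requires the server to send a response for the canceled request, and recommends the `RequestCancelled` error code (-32800) for it, so the client is waiting on that reply during the delay above. The sketch below builds the three messages with the LSP base protocol's Content-Length framing; the request id, query, and error message text are illustrative.

```python
import json


def frame(message: dict) -> bytes:
    # LSP base protocol: a Content-Length header (body size in bytes),
    # a blank line, then the UTF-8 encoded JSON-RPC body.
    body = json.dumps(message, separators=(",", ":")).encode("utf-8")
    return b"Content-Length: " + str(len(body)).encode("ascii") + b"\r\n\r\n" + body


# Client -> server: start a search for "C" (request id 1 is illustrative).
search = {"jsonrpc": "2.0", "id": 1, "method": "workspace/symbol", "params": {"query": "C"}}

# Client -> server on the next TYPECHAR: a notification (it has no id of its own)
# that names the request to cancel.
cancel = {"jsonrpc": "2.0", "method": "$/cancelRequest", "params": {"id": 1}}

# Server -> client: the canceled request still gets a response; this is the
# error form the spec recommends (RequestCancelled = -32800). Message text is illustrative.
canceled_response = {"jsonrpc": "2.0", "id": 1, "error": {"code": -32800, "message": "Request cancelled"}}

for msg in (search, cancel, canceled_response):
    print(frame(msg))
```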

Sequence of events:

** User types "C" {3/19/2020 2:31:44 PM}

** Start search for "C" {3/19/2020 2:31:44 PM}

** User types "reateAsync" {3/19/2020 2:31:46 PM}

** Client cancel {3/19/2020 2:31:45 PM}

*** SERVER recv. cancel message {3/19/2020 2:31:45 PM}

[6 second delay]

** SERVER WorkspaceSymbolAsync Returns {3/19/2020 2:31:51 PM}

** Client returns {3/19/2020 2:31:52 PM}

** Start search for "CreateAsync" {3/19/2020 2:31:52 PM}
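The gaps in the timeline can be checked with a few lines of Python, using the timestamps copied from the log above. The log only has one-second resolution, so these figures are approximate: the server returned about 6 seconds after it received the cancel, and the search for "CreateAsync" started about 7 seconds after the client canceled.

```python
from datetime import datetime

FMT = "%m/%d/%Y %I:%M:%S %p"  # matches stamps like "3/19/2020 2:31:45 PM"

# Timestamps copied from the sequence of events in this issue.
events = {
    "client_cancel": "3/19/2020 2:31:45 PM",
    "server_recv_cancel": "3/19/2020 2:31:45 PM",
    "server_returns": "3/19/2020 2:31:51 PM",
    "client_returns": "3/19/2020 2:31:52 PM",
    "next_search_start": "3/19/2020 2:31:52 PM",
}
t = {name: datetime.strptime(stamp, FMT) for name, stamp in events.items()}


def seconds(start: str, end: str) -> float:
    # Elapsed wall-clock time between two logged events.
    return (t[end] - t[start]).total_seconds()


print("server returned after cancel:", seconds("server_recv_cancel", "server_returns"), "s")
print("next search waited after cancel:", seconds("client_cancel", "next_search_start"), "s")
```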

About this issue

- State: closed

- Created 4 years ago

- Comments: 32 (32 by maintainers)

@dibarbet FWIW, can you see if this change makes a positive impact: https://devdiv.visualstudio.com/DevDiv/_git/VS/pullrequest/239467

I have a change that batches per document rather than per project; I’m testing it now to see whether it helps. https://github.com/dotnet/roslyn/pull/42649

We’ll probably need to discuss MessagePack support in the VS LSP client; also tagging @tinaschrepfer.

Yes, switching from JSON to MessagePack is very easy – at the StreamJsonRpc level. But there are two gotchas that may getcha:

Solution: make your types serializable by both Newtonsoft.Json and MessagePack. Then initialize your RPC stream with StreamJsonRpc, choosing either the MessagePack or the JSON formatter based on whether you know the remote party can speak MessagePack. That way you’ll be fast when you can be, but still interoperate with others when necessary.
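The dual-format idea can be sketched language-neutrally in Python. This is not StreamJsonRpc's API: `choose_encoder` and the `remote_speaks_msgpack` flag are hypothetical, and `msgpack_encode` is a hand-written subset of the MessagePack format (nil, booleans, non-negative integers, strings, arrays, maps) so the example needs no third-party package. The point is only that one message type is serializable both ways and the wire format is picked per connection.

```python
import json
import struct
from typing import Any, Callable


def msgpack_encode(obj: Any) -> bytes:
    # Minimal subset of the MessagePack spec, enough for small RPC payloads.
    if obj is None:
        return b"\xc0"  # nil
    if obj is True:
        return b"\xc3"
    if obj is False:
        return b"\xc2"
    if isinstance(obj, int) and 0 <= obj < 2**32:
        if obj < 0x80:
            return bytes([obj])  # positive fixint
        if obj < 0x100:
            return b"\xcc" + bytes([obj])  # uint8
        if obj < 2**16:
            return b"\xcd" + struct.pack(">H", obj)  # uint16
        return b"\xce" + struct.pack(">I", obj)  # uint32
    if isinstance(obj, str):
        data = obj.encode("utf-8")
        if len(data) < 32:
            return bytes([0xA0 | len(data)]) + data  # fixstr
        if len(data) < 2**16:
            return b"\xda" + struct.pack(">H", len(data)) + data  # str16
    if isinstance(obj, (list, tuple)) and len(obj) < 2**16:
        head = bytes([0x90 | len(obj)]) if len(obj) < 16 else b"\xdc" + struct.pack(">H", len(obj))
        return head + b"".join(msgpack_encode(x) for x in obj)
    if isinstance(obj, dict) and len(obj) < 2**16:
        head = bytes([0x80 | len(obj)]) if len(obj) < 16 else b"\xde" + struct.pack(">H", len(obj))
        return head + b"".join(msgpack_encode(k) + msgpack_encode(v) for k, v in obj.items())
    raise TypeError(f"not supported by this sketch: {type(obj).__name__}")


def json_encode(obj: Any) -> bytes:
    return json.dumps(obj, separators=(",", ":")).encode("utf-8")


def choose_encoder(remote_speaks_msgpack: bool) -> Callable[[Any], bytes]:
    # Binary format when the peer is known to support it, JSON otherwise.
    return msgpack_encode if remote_speaks_msgpack else json_encode


message = {"jsonrpc": "2.0", "id": 1, "method": "workspace/symbol", "params": {"query": "C"}}
for peer_supports_msgpack in (True, False):
    payload = choose_encoder(peer_supports_msgpack)(message)
    print("msgpack" if peer_supports_msgpack else "json", len(payload), "bytes")
```

Even for this tiny request the binary form is smaller (57 vs. 75 bytes); the savings matter more for large payloads like a serialized project graph.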

It definitely should. The delay happens because we’re serializing a huge project graph and sending it over, so we only finish once the full graph has been serialized. Doing this at the document level instead gives us much finer-grained cancellation.
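Why batch granularity matters for cancellation can be shown with a small hypothetical model, not Roslyn's code: a `threading.Event` stands in for the cancellation token, each document is one unit of serialization work, and the token is checked only between batches. With one batch per project, the whole project is serialized before the cancel is noticed; with one batch per document, work stops right after the document in progress. In general, the worst-case cancellation latency is the cost of the largest batch.

```python
import threading
from typing import Callable, Iterable, List


def serialize_in_batches(
    batches: Iterable[List[str]],
    token: threading.Event,
    on_serialized: Callable[[str], None],
) -> int:
    """Serialize documents batch by batch and return how many were serialized.

    The token is checked only between batches, so a cancel that arrives
    mid-batch is not observed until that whole batch is finished.
    """
    done = 0
    for batch in batches:
        if token.is_set():
            break  # cooperative cancellation point
        for doc in batch:
            on_serialized(doc)  # stands in for expensive serialization work
            done += 1
    return done


def run(granularity: str, docs_per_project: int = 1000, projects: int = 3) -> int:
    # Hypothetical solution layout: a few projects, each with many documents.
    by_project = [[f"p{p}/d{d}.cs" for d in range(docs_per_project)] for p in range(projects)]
    if granularity == "project":
        batches = by_project
    else:  # "document"
        batches = [[doc] for docs in by_project for doc in docs]
    token = threading.Event()
    # Simulate the next TYPECHAR arriving right after the first document is serialized.
    return serialize_in_batches(batches, token, lambda doc: token.set())


print("per project: ", run("project"), "documents serialized in total")
print("per document:", run("document"), "documents serialized in total")
```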