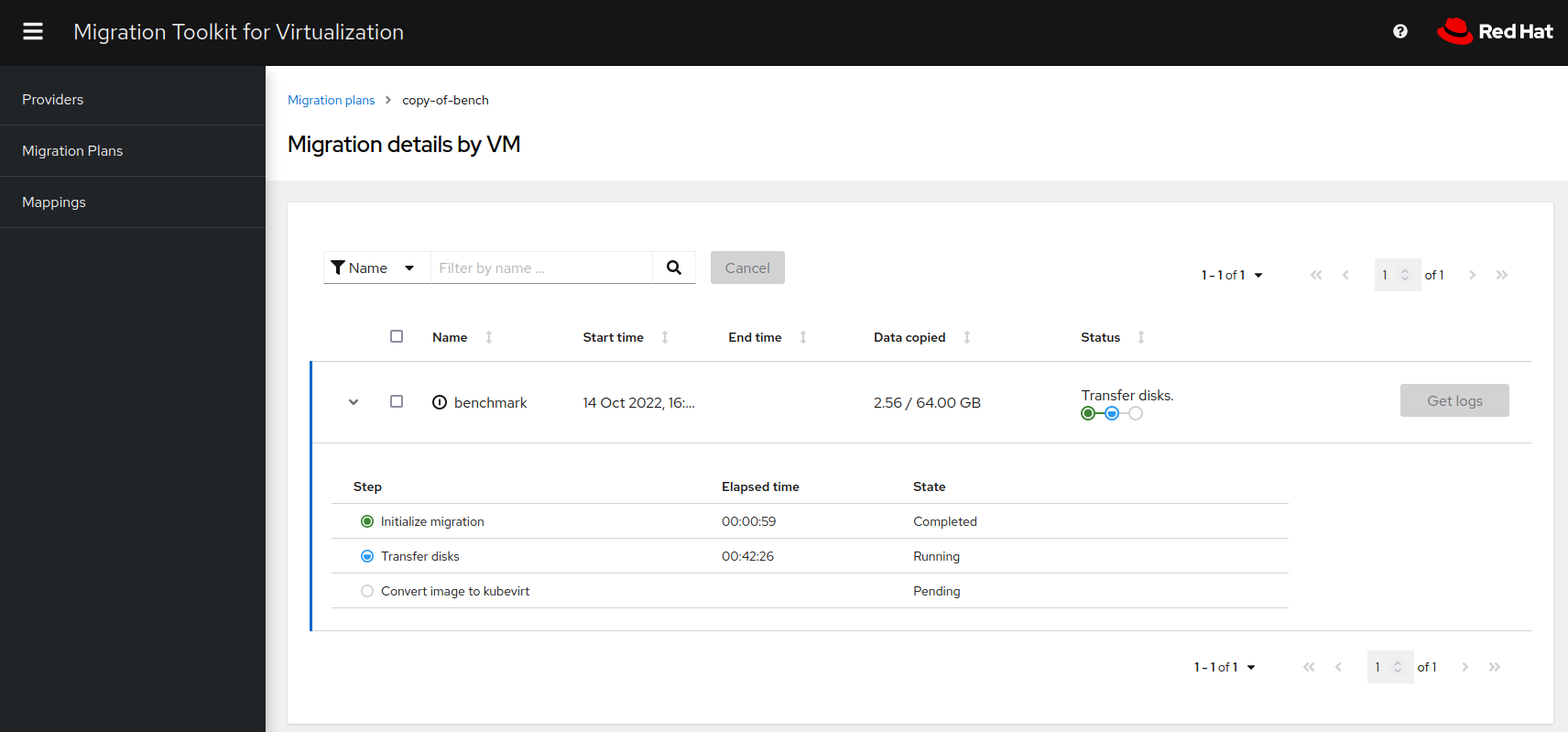

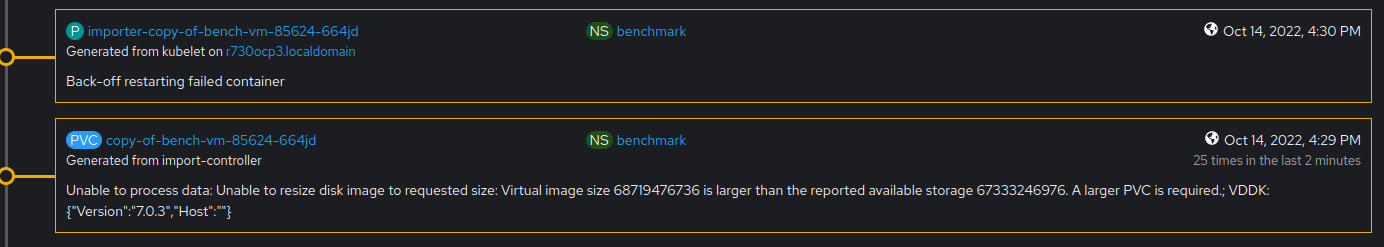

democratic-csi: Unable to resize disk image to requested size (Ext4)

Issue happens on SCALE (TrueNAS-SCALE-22.02.4), but not CORE (TrueNAS-13.0-U2). This is when running a VM migration from ESXi to OpenShift.

Maybe this is related? https://github.com/kubevirt/kubevirt/issues/6586

I haven’t tried the new VDDK version (7.0.3.2) here as I don’t think that is contributing to the issue: https://vdc-repo.vmware.com/vmwb-repository/dcr-public/b677d84a-d0a2-46ab-99bc-590c2f6281b9/d1496e6a-0687-4e13-afdd-339a11e33fab/VDDK-703c-ReleaseNotes.html

SCALE

csiDriver:

name: "org.democratic-csi.iscsi"

storageClasses:

- name: freenas-api-iscsi-csi

defaultClass: false

reclaimPolicy: Delete

volumeBindingMode: Immediate

allowVolumeExpansion: true

parameters:

fsType: ext4

mountOptions: []

secrets:

provisioner-secret:

controller-publish-secret:

node-stage-secret:

node-publish-secret:

controller-expand-secret:

driver:

config:

driver: freenas-api-iscsi

instance_id:

httpConnection:

protocol: http

host: 172.16.x.x

port: 80

username: root

password: ********

allowInsecure: true

zfs:

datasetParentName: tank/k8s/iscsi/v

detachedSnapshotsDatasetParentName: tank/k8s/iscsi/s

zvolCompression: lz4

zvolDedup: off

zvolEnableReservation: true # Tried false here as well

zvolBlocksize:

iscsi:

targetPortal: "172.16.x.x:3260"

targetPortals: []

# leave empty to omit usage of -I with iscsiadm

interface:

namePrefix: csi-

nameSuffix: "-cluster"

targetGroups:

- targetGroupPortalGroup: 1

targetGroupInitiatorGroup: 1

targetGroupAuthType: None

extentInsecureTpc: true

extentXenCompat: false

extentDisablePhysicalBlocksize: false

extentBlocksize: 512

extentRpm: "SSD"

extentAvailThreshold: 0

CORE

csiDriver:

name: "org.democratic-csi.iscsi"

storageClasses:

- name: freenas-iscsi-csi

defaultClass: false

reclaimPolicy: Delete

volumeBindingMode: Immediate

allowVolumeExpansion: true

parameters:

fsType: ext4

mountOptions: []

secrets:

provisioner-secret:

controller-publish-secret:

node-stage-secret:

node-publish-secret:

controller-expand-secret:

driver:

config:

driver: freenas-iscsi

instance_id:

httpConnection:

protocol: http

host: 172.16.x.x

port: 80

username: root

password: **********

allowInsecure: true

apiVersion: 2

sshConnection:

host: 172.16.1.119

port: 22

username: root

password: ***********

zfs:

cli:

paths:

zfs: /usr/local/sbin/zfs

zpool: /usr/local/sbin/zpool

sudo: /usr/local/bin/sudo

chroot: /usr/sbin/chroot

datasetParentName: tank/k8s/iscsi/v

detachedSnapshotsDatasetParentName: tank/k8s/iscsi/s

zvolCompression: lz4

zvolDedup: off

zvolEnableReservation: false

zvolBlocksize: 16K

iscsi:

targetPortal: "172.16.x.x:3260"

targetPortals: []

# leave empty to omit usage of -I with iscsiadm

interface:

namePrefix: csi-

nameSuffix: "-cluster"

targetGroups:

- targetGroupPortalGroup: 1

targetGroupInitiatorGroup: 1

targetGroupAuthType: None

extentInsecureTpc: true

extentXenCompat: false

extentDisablePhysicalBlocksize: false

extentBlocksize: 512

extentRpm: "SSD"

extentAvailThreshold: 0

About this issue

- Original URL

- State: closed

- Created 2 years ago

- Comments: 43 (16 by maintainers)

I would argue that this should be the default for ext4 in a containerized world, not having that set by default leads to the issue we figured out here.

I think a call is in order. Shout out to my github profile email and we’ll get something arranged.

Can you send the logs from the

csi-drivercontainer on a node pod on a relevant node (where the migration pod/process is running from)? My thinking is this (I think you are on to something with the filesystem calculation theory):democratic-csiis creating the volume as requested (adding padding to the size of the zvol as necessary)There’s a lot of supposition here without a better understanding on my part about how the importer works and what it’s doing. If I had to venture a guess when working in filesystem mode, a vmdk/qcow images is being exported from esxi and then copied to the ext4/xfs/whatever filesystem of the PV/PVC. When it tries to resize the virtual disk it realizes there’s not enough space (on the filesystem) to handle that and therefore pukes. Again, just a crude guess at this point.

Another guess, if you run it using raw block access mode vs filesystem it would work (no fs overhead involved). Of note here is that when using block access mode I only return the total size and not the available size as I have no way to know when using raw bits like that (the

sizeattribute of the block device from thelsblk -a -b -l -J -O <dev>command). For filesystem access mode I return relevant values as reported by thefindmntcommand (ie: filesystem data, not block device data) and additionally in certain circumstances (when available) also inode stats (df -i) in addition to byte stats.Basically what I’m looking for in the node logs are the

GetVolumeStatsresponses to see if the response values are corresponding to the errors in your screenshots.The sizes of the zvols in the storage system (without fs overhead) should be:

In short, I’m guessing it’s a discrepancy between the importer calculations for needed size, raw block/zvol size, and resulting fs size. Or said differently, the size for the requested PV/PVC by the importer may need to account for fs overhead when using filesystem access mode.

I am happy to arrange a call but I’m in Mountain Time USA and prefer to have it at a more ‘normal’ hour when I’m at my office 😃