democratic-csi: Odd iSCSI read performance, at or around 1MB block size (TrueNAS Core)

After figuring out the issue in #238, it seems there is an odd performance issue with iSCSI LUNs presented to OpenShift, at least on a Windows 10 VM running in OpenShift Virtualization.

The environment for the performance test is:

- Dell R730xd OpenShift worker node (Bare Metal), 2x 40Gbps ConnectX-3 Pro NICs in a bond (LACP)
- Dell R730xd TrueNAS-13.0-U2 (Bare Metal) with 24x SAMSUNG MZILS1T9HEJH0D3 SAS SSDs in RAID10 (with no tunables set)
- Arista 7050QX switch
- iSCSI presented to OpenShift with democratic-csi helm 0.13.5

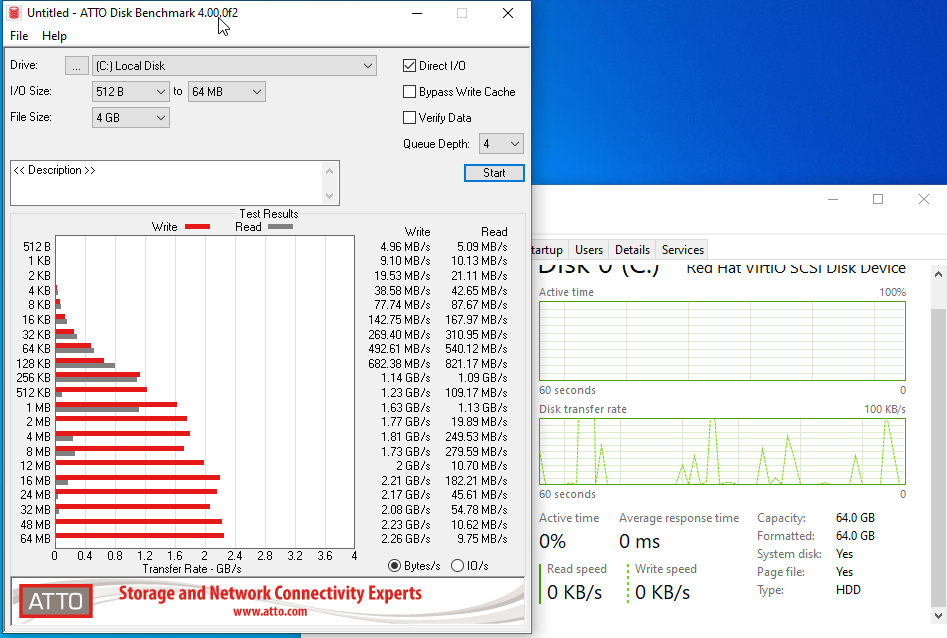

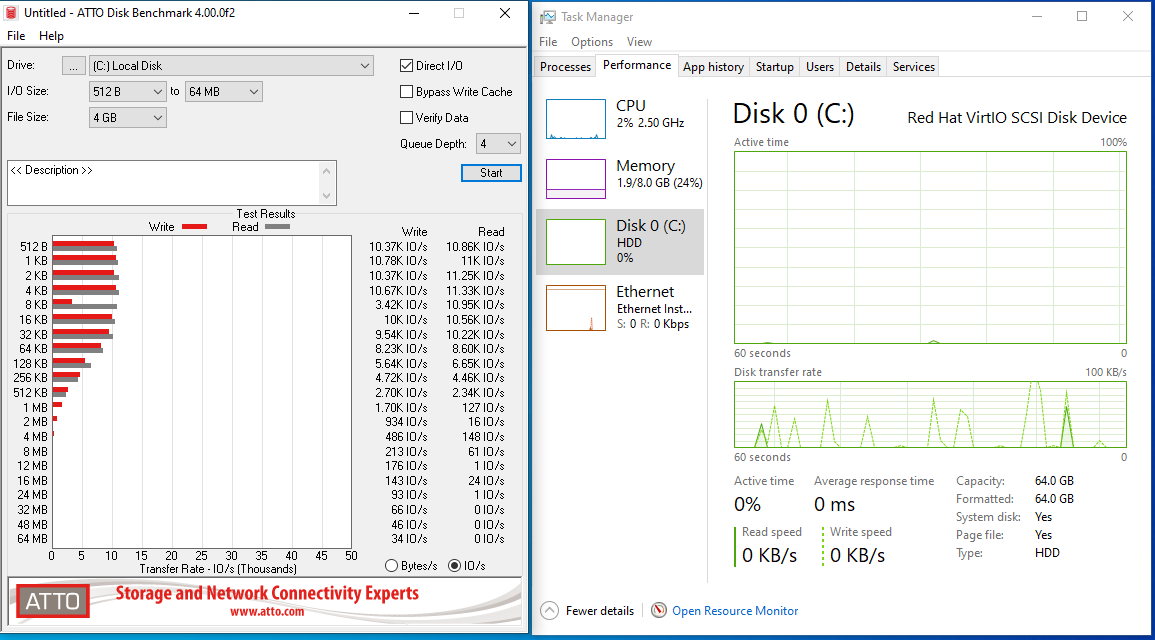

I have tried a number of storage class configurations with inconclusive results, but read performance always degrades somewhere above the 512KB block size, with “Disk Active Time” pegged at 100% and a very high average disk response time (sometimes > 6000ms). The CPUs are relatively idle during this time, with 4 vCPUs provisioned. I have not tried MPIO with multiple targets, although I know it is best practice for iSCSI; the LACP policy is set to a layer 2 hash, and the bond is primarily there for redundancy and ease of use, as TrueNAS also serves NFS. Additionally, the MTU on the network is 1500, not 9K.
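Why a layer 2 hash keeps a single initiator/target pair on one member link can be sketched as follows. This assumes the worker's bond is a Linux bonding device, whose kernel documentation describes the `layer2` transmit hash as `source MAC[5] XOR destination MAC[5] XOR packet type ID`, taken modulo the number of member links. The MAC addresses are made up for illustration, and the return path is chosen separately by the switch's own hash.

```python
def layer2_member_link(src_mac: str, dst_mac: str, ethertype: int, n_links: int) -> int:
    """Pick a bond member link per the documented Linux layer2 xmit_hash_policy:
    hash = src_mac[5] ^ dst_mac[5] ^ packet_type_id; link = hash % n_links."""
    src_last = int(src_mac.split(":")[-1], 16)  # last octet of source MAC
    dst_last = int(dst_mac.split(":")[-1], 16)  # last octet of destination MAC
    return (src_last ^ dst_last ^ ethertype) % n_links


IPV4 = 0x0800  # EtherType of IPv4 frames (iSCSI runs over TCP/IP)

# Hypothetical MACs for the OpenShift worker and the TrueNAS portal.
worker = "aa:bb:cc:dd:ee:01"
truenas = "aa:bb:cc:dd:ee:10"

# Only the MACs and EtherType feed the hash, so every frame the worker sends
# to this target leaves on the same member link, however many TCP connections exist.
print(layer2_member_link(worker, truenas, IPV4, n_links=2))  # 1
```

With two 40Gbps members, that means one host-to-host iSCSI session is bounded by a single 40Gbps link in each direction, which fits the bond being described as mainly for redundancy.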

There is this, which looks suspicious and has already been merged into OpenZFS 2.1.6:

https://github.com/openzfs/zfs/discussions/13448?sort=new

https://github.com/openzfs/zfs/pull/13452

But the current TrueNAS Core release (13.0-U2) runs the following (and rightfully so, as 2.1.6 was only released 15 days ago):

```
truenas# zpool -V
zfs-2.1.5-1
zfs-kmod-v2022081800-zfs_27f9f911a
```
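A minimal sketch of checking whether a host's OpenZFS userland is at least 2.1.6, the release said above to contain the merged change, by parsing the `zfs-X.Y.Z-N` line that `zpool -V` prints. It only compares version numbers: it assumes the fix arrives with upstream 2.1.6, cannot tell whether a vendor build backported it, and deliberately rejects the `zfs-kmod-...` build tag, which carries no release number.

```python
import re

FIX_RELEASE = (2, 1, 6)  # OpenZFS release stated above to include the merged PR


def parse_zfs_version(line: str) -> tuple[int, ...]:
    """Extract (major, minor, patch) from a userland line such as 'zfs-2.1.5-1'."""
    m = re.match(r"zfs-(\d+)\.(\d+)\.(\d+)", line.strip())
    if m is None:
        raise ValueError(f"unrecognised version line: {line!r}")
    return tuple(int(part) for part in m.groups())


def includes_fix(line: str) -> bool:
    """True if the parsed release is 2.1.6 or newer (tuple comparison)."""
    return parse_zfs_version(line) >= FIX_RELEASE


print(includes_fix("zfs-2.1.5-1"))  # TrueNAS Core 13.0-U2 userland: False
```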

Adjusting zfetch_array_rd_sz seems to have no effect, as the PR author points out.

Then again, I have no idea whether the issue is in TrueNAS or in something OpenShift does while using the CSI driver to present storage (ext4/xfs/block), possibly a filesystem cache in some intermediary layer… though the issue persists with volumeMode: Block. VirtIO drivers are loaded in the guest VM.

About this issue

- State: closed

- Created 2 years ago

- Comments: 44 (12 by maintainers)

This is on the iX radar. I think 13.0-U3 should be out relatively soon, and maybe the included 2.1.6 will help.

I’d like to chat about SPDK with you if you’re willing…

Editing in an attempt at a bit more performance:

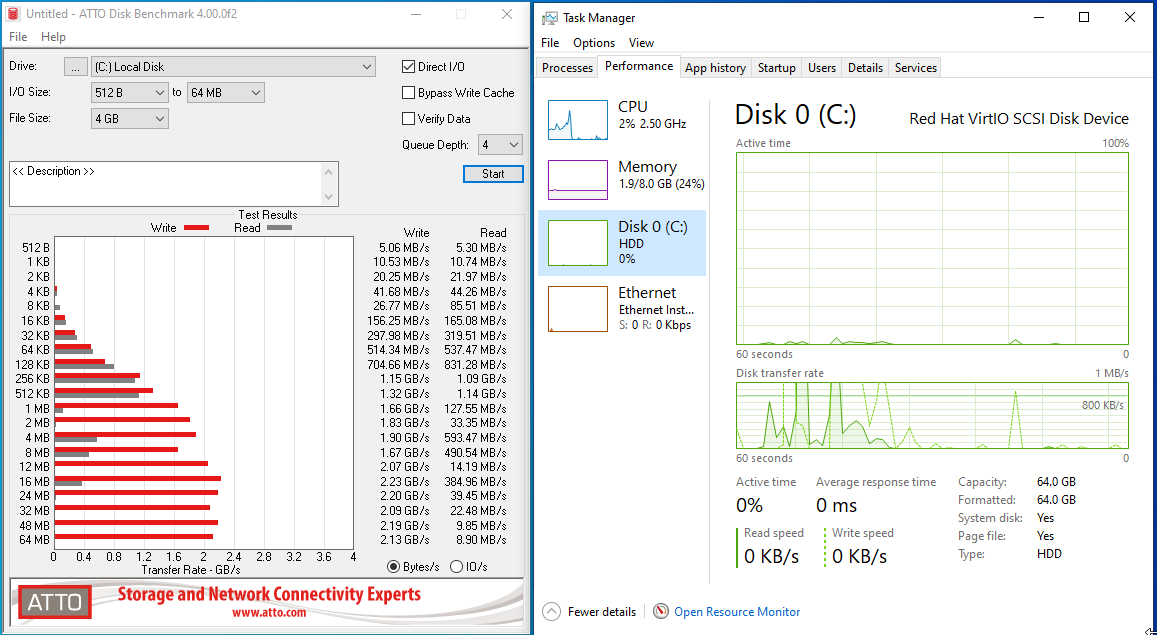

More testing tonight… good news and bad news:

Good news!

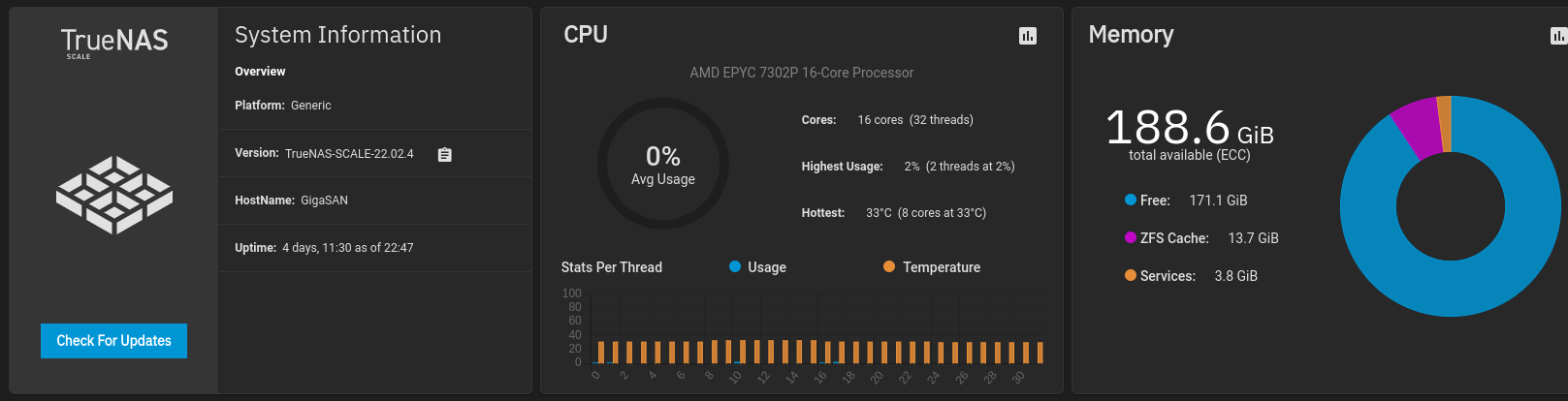

The read performance issue is NOT PRESENT on TrueNAS SCALE!

Bad news

TrueNAS SCALE doesn’t come close to Core’s performance with like-for-like driver/zvol settings… but this isn’t new information, and it’s something I know iX Systems is working hard on!

Confirmed test on:

Gigabyte R272-Z32-00 (Rev. 100) TrueNAS-SCALE-22.02.4 (Bare Metal) with 12x INTEL SSDPE2KX010T8 NVMe SSDs in RAID10 (With no tunables set)

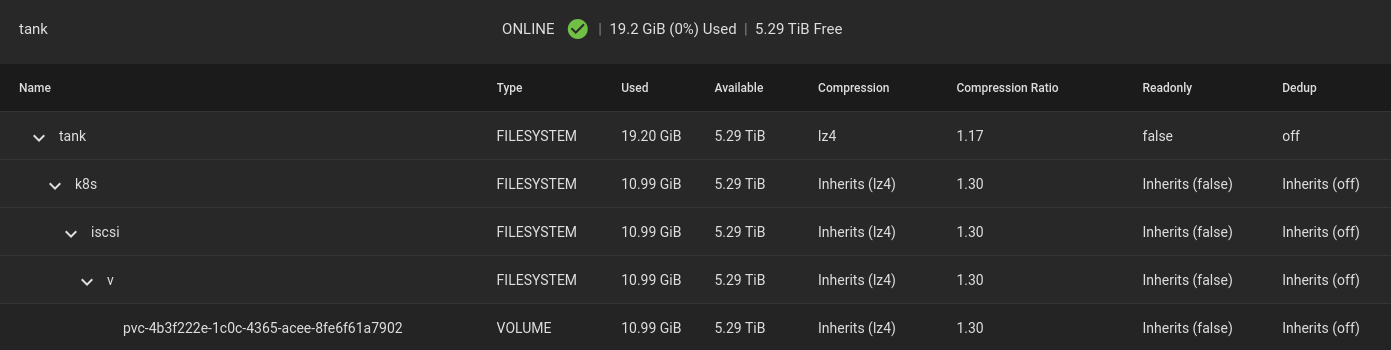

The StorageProfile in the openshift-cnv namespace specifies volumeMode: Block, which makes XFS irrelevant on Migration Toolkit import (as seen in the PVC above).

Helm Command

truenas-scale-iscsi.yaml (note: this uses the SSH driver, not the API driver)

Need higher block size…

Final Throughput:

Final IOPS (ouch, 0 IOPS!!! But at least the benchmark finished!):
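Throughput and IOPS charts from the same run are tied together by the block size: throughput = IOPS × block size. A minimal sketch for cross-checking the two, assuming binary units (1 MiB = 1024 KiB); the sample numbers are hypothetical and not read from the screenshots. A displayed 0 IOPS at a large block size implies near-zero throughput at that size, allowing for rounding in the display.

```python
def throughput_mib_s(iops: float, block_size_kib: int) -> float:
    """Throughput in MiB/s for a given IOPS figure and block size in KiB."""
    return iops * block_size_kib / 1024


def iops_from_throughput(mib_s: float, block_size_kib: int) -> float:
    """Inverse: IOPS implied by a throughput figure at a given block size."""
    return mib_s * 1024 / block_size_kib


# Hypothetical readings, purely to illustrate the arithmetic.
print(throughput_mib_s(2000, 512))       # 1000.0
print(iops_from_throughput(1000, 1024))  # 1000.0
```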

@travisghansen thank you very much; I’m happy to help them get hands-on with the environment… it is a very stock, latest Core box and OCP 4.11.

That fs setting only kicks in if the volume mode in k8s is Filesystem rather than Block. If the volume mode is Block, it is ignored entirely.
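A minimal sketch of that rule, building a PersistentVolumeClaim as a Python dict (Kubernetes accepts the same structure as JSON). `volumeMode` defaults to `Filesystem` when omitted; with `Block`, the raw device is handed to the pod (here, the VM) and no filesystem is created, so an fs setting has nothing to act on. The claim and storage class names are hypothetical, and `fs_setting_applies` is an illustrative helper, not part of democratic-csi.

```python
import json
from typing import Optional


def make_pvc(name: str, storage_class: str, size: str, volume_mode: Optional[str] = None) -> dict:
    """Build a PersistentVolumeClaim manifest; volume_mode=None keeps the k8s default."""
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": storage_class,
        "resources": {"requests": {"storage": size}},
    }
    if volume_mode is not None:
        spec["volumeMode"] = volume_mode
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


def fs_setting_applies(pvc: dict) -> bool:
    """A filesystem is only created and mounted when volumeMode is Filesystem (the default)."""
    return pvc["spec"].get("volumeMode", "Filesystem") == "Filesystem"


# Hypothetical names for illustration only.
block_pvc = make_pvc("win10-rootdisk", "truenas-iscsi", "100Gi", volume_mode="Block")
print(fs_setting_applies(block_pvc))  # False
print(json.dumps(block_pvc, indent=2))
```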