cvat: Timeout: Incomplete Dataset Export Download

My actions before raising this issue

- Read/searched the docs

- Searched past issues

So I’ve been trying to export one of my datasets for the past several days but in vain. Dumping annotations seems to be working fine, but exporting the dataset always results in a timeout halfway through the download. I’ve attempted this on two different networks and browsers with no success.

Expected Behaviour

Trying to export a dataset (4.1 GB) should download successfully, without any timeouts or errors.

Current Behaviour

The download times out after 2GB has been transferred, and the resulting file is unusable.

Possible Solution

I’ve tried circumventing the timeout in multiple ways, which have all resulted in failure.

1 - I tried using wget in Colab to get around what I thought was slow internet, but the downloaded file is empty (length 0).

!wget --no-check-certificate --load-cookies cookies.txt \
'https://cvat.org/api/v1/tasks/###/dataset?format=TFRecord%201.0&action=download'

2 - I tried using Selenium on Colab, and it was successful (update: this only worked once and now times out at 2GB as well):

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By

# Headless Chrome that saves downloads to /content/ without prompting.
options = webdriver.ChromeOptions()
options.add_argument('--headless')
options.add_argument('--no-sandbox')
options.add_argument('--disable-dev-shm-usage')
options.add_experimental_option("prefs", {
    "download.default_directory": r"/content/",
    "download.prompt_for_download": False,
    "download.directory_upgrade": True,
    "safebrowsing.enabled": True
})

# chromedriver is expected to be on PATH.
wd = webdriver.Chrome(options=options)

# Log in.
wd.get("https://cvat.org/auth/login")
wd.find_element(By.ID, "username").send_keys("username")
wd.find_element(By.ID, "password").send_keys("password")
login_button = wd.find_element(By.CSS_SELECTOR, '.login-form-button')
wd.execute_script("arguments[0].click();", login_button)

actions = ActionChains(wd)

# Open the task from the task list.
open_button = wd.find_element(By.CSS_SELECTOR, "#root > section > main > div > div:nth-child(3) > div > div:nth-child(1) > div:nth-child(4) > div:nth-child(1) > div > a")
wd.execute_script("arguments[0].click();", open_button)

# Hover over the task's "Actions" button to open its menu.
action_button = wd.find_element(By.CSS_SELECTOR, "#root > section > main > div > div > div.ant-row-flex.ant-row-flex-space-between.ant-row-flex-middle.cvat-task-top-bar > div:nth-child(2) > button")
actions.move_to_element(action_button).perform()

# Hover over the export entry, then click the first format in its submenu.
export_data = wd.find_element(By.CSS_SELECTOR, "body > div:nth-child(9) > div > div > ul > li:nth-child(3) > div")
actions.move_to_element(export_data).perform()
data = wd.find_element(By.XPATH, "//*[@id='export_task_dataset$Menu']/li[1]")
wd.execute_script("arguments[0].click();", data)
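A browser may not be needed for the download step itself. As a rough sketch, the export URL from the wget attempt could be streamed to disk with the Python standard library while comparing the byte count against `Content-Length`, so that a truncated transfer is reported instead of silently leaving an unusable file. The names `copy_stream` and `download` and the `cookie_header` parameter are hypothetical. It is also an assumption that the server sends a `Content-Length` header and accepts a session cookie for this endpoint.

```python
import urllib.request

CHUNK_SIZE = 1024 * 1024  # 1 MiB per read; an arbitrary choice


def copy_stream(src, dst, chunk_size=CHUNK_SIZE):
    """Copy one file-like object to another in chunks; return bytes copied."""
    total = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        total += len(chunk)
    return total


def download(url, path, cookie_header, timeout=60):
    """Stream `url` to `path`; raise if fewer bytes arrive than advertised.

    `cookie_header` is a hypothetical parameter holding the value of the
    Cookie header for an authenticated session (assumed, not confirmed).
    """
    request = urllib.request.Request(url, headers={"Cookie": cookie_header})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        expected = response.headers.get("Content-Length")
        with open(path, "wb") as out:
            written = copy_stream(response, out)
    if expected is not None and written != int(expected):
        raise IOError(f"incomplete download: {written} of {expected} bytes")
    return written
```

Reading in fixed-size chunks keeps memory use flat regardless of the archive size, and the explicit size check catches the case where the connection closes early.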

Steps to Reproduce (for bugs)

- Create Task

- Annotate a few things

- Export dataset

- The download times out before it completes.

Context

I can’t export my data for use in research.

Your Environment

CVAT.org

About this issue

- State: closed

- Created 4 years ago

- Reactions: 3

- Comments: 31 (5 by maintainers)

@memirerdol @ConstantSun For some odd reason, curl worked for me today - I was able to download 64 GB worth of my annotated data. Regular downloads using the GUI still time out after 1GB.

@lgg, we discussed internally and I’m going to disable this limit for cvat.org today.

I have the same issue when trying to export a dataset. Reports downloading a 4GB file via Chrome, but the resulting ‘dataset’ file is empty.
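When the browser reports a finished download but the file turns out to be empty or truncated, a quick local check can confirm what was actually saved. The sketch below assumes the exported dataset is a zip archive (not confirmed in this thread); `check_export` is a hypothetical helper name.

```python
import os
import zipfile


def check_export(path):
    """Return a short status string for a downloaded export archive."""
    if not os.path.exists(path):
        return "missing"
    if os.path.getsize(path) == 0:
        return "empty"
    # A zip's central directory sits at the end of the file, so a truncated
    # download normally fails this check.
    if not zipfile.is_zipfile(path):
        return "not a zip (possibly truncated)"
    with zipfile.ZipFile(path) as archive:
        bad_member = archive.testzip()  # first member failing its CRC, or None
    if bad_member is not None:
        return f"corrupt member: {bad_member}"
    return "ok"
```

Calling `check_export("dataset.zip")` (file name illustrative) right after each attempt makes it easy to tell an empty file from a partial one.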

Was having the same issue on my local setup using the default docker-compose setup and I discovered that the problem seems to be with the cvat_proxy container which is running an nginx http proxy.

proxy_buffering is enabled, in which case it will (try to) cache large responses to a temporary local file in the cvat_proxy container, and the default setting for the maximum buffered file size is 1GB. I wasn’t sure if I should completely disable buffering to a file because I didn’t want to stall any worker threads in the backend, so I just bumped the setting to 10GB.

I should note, this patch is no longer relevant after release 1.5.0 because the cvat_proxy was changed from nginx to traefik.

@memirerdol I’ve been waiting for a solution for 10 months now while my data is being held hostage and my research paper is on hold because of it. I would’ve understood had they mentioned data above a certain size couldn’t be downloaded but they failed to mention it anywhere… It’s super frustrating but there’s nothing to be done. @azhavoro Please any updates on this?

I used https://cvat.org/ - so I don’t have access to the server to be able to set --limit-request-body to 0.

@cyrilzakka Yeah, me too. Even when I set --limit-request-body 0, as suggested in https://github.com/openvinotoolkit/cvat/blob/develop/supervisord.conf#L65, my annotation file download stops when it reaches about 1GB.

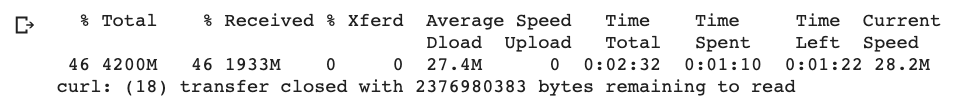

Sorry to bring this up again, but has this issue been fixed? As of today, I’m still receiving this error. Always around 46-48% of this dataset:

How can I solve this problem for a running Docker deployment hosted on a server? I have access to the hosted server, and I could end up losing a lot of labelled images.

Thanks a lot, @azhavoro. Using your advice, I:

1. downloaded the annotations online (which has no issues downloading),
2. uploaded the annotations to the offline installation and job, and
3. changed line 65 of the local supervisord.conf.

And I got my full dataset exported. Quite a workaround!😅

@JobCollins Hi, try adjusting the --limit-request-body value: https://github.com/openvinotoolkit/cvat/blob/develop/supervisord.conf#L65. In the near future we plan to remove any data size limitations by default.

Bug is still there. Even with blazing internet speed I’m only able to download 39-42%.
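Since several reports describe the transfer stopping at a fixed point, one thing that could be tried is resuming the partial file with an HTTP `Range` request. This is only a sketch: whether the CVAT export endpoint honours `Range` is not established anywhere in this thread, and `range_header`, `resume_download`, and the `cookie_header` parameter are hypothetical names.

```python
import os
import urllib.request


def range_header(existing_bytes):
    """Build a Range header asking for everything after `existing_bytes`."""
    if existing_bytes > 0:
        return {"Range": f"bytes={existing_bytes}-"}
    return {}


def resume_download(url, path, cookie_header, chunk_size=1024 * 1024, timeout=60):
    """Fetch the missing tail of `url` into `path`; return the final file size.

    Assumes `cookie_header` carries a valid session cookie (not confirmed).
    If the file is already complete, the server may answer 416 and urlopen
    will raise urllib.error.HTTPError.
    """
    existing = os.path.getsize(path) if os.path.exists(path) else 0
    headers = {"Cookie": cookie_header, **range_header(existing)}
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # 206 means the range was honoured, so append. Any other success
        # status means the full body is being resent, so start over.
        mode = "ab" if response.status == 206 else "wb"
        with open(path, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
    return os.path.getsize(path)
```

Calling `resume_download` in a loop until the size stops growing would approximate what `curl -C -` or `wget -c` do, under the same assumption about server support.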