cri-o: No metrics reported from CRI-O: Unable to account working set stats: total_inactive_file > memory usage

What happened?

On a 3-node cluster deployed with kubeadm and CRI-O, once a certain amount of workload has been scheduled onto a node, that node stops reporting metrics (CPU/memory usage shows as 0% or `<unknown>` in `kubectl top nodes`). In the CRI-O system journal on the affected nodes, I see this warning emitted every few seconds:

```
Mar 18 16:09:13 ram.fuwafuwatime.moe crio[72618]: time="2023-03-18 16:09:13.067279194-04:00" level=warning msg="Unable to account working set stats: total_inactive_file (3827683328) > memory usage (3233787904)"
Mar 18 16:09:18 ram.fuwafuwatime.moe crio[72618]: time="2023-03-18 16:09:18.919610520-04:00" level=warning msg="Unable to account working set stats: total_inactive_file (3827683328) > memory usage (3233787904)"
Mar 18 16:09:24 ram.fuwafuwatime.moe crio[72618]: time="2023-03-18 16:09:24.932763766-04:00" level=warning msg="Unable to account working set stats: total_inactive_file (3827683328) > memory usage (3233746944)"
Mar 18 16:09:31 ram.fuwafuwatime.moe crio[72618]: time="2023-03-18 16:09:31.687808594-04:00" level=warning msg="Unable to account working set stats: total_inactive_file (3827683328) > memory usage (3233759232)"
```
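For context, the working set is commonly computed as memory usage minus `total_inactive_file` (a field of the cgroup v1 `memory.stat` file). In the lines above, `total_inactive_file` (3827683328) is larger than the memory usage (3233787904), so that subtraction would be negative, and with unsigned 64-bit counters it would wrap around to a huge value. The Go sketch below illustrates that guard under this assumption; it is not CRI-O's actual implementation, and the helper name `workingSet` is hypothetical.

```go
package main

import "fmt"

// workingSet is a hypothetical helper illustrating the usual formula:
// working set = memory usage - total_inactive_file.
// It returns ok=false when inactive file pages exceed usage, because
// subtracting uint64 values in that case would wrap around.
func workingSet(usage, inactiveFile uint64) (ws uint64, ok bool) {
	if inactiveFile > usage {
		return 0, false
	}
	return usage - inactiveFile, true
}

func main() {
	// Values taken from the first journal line in the report.
	usage := uint64(3233787904)
	inactive := uint64(3827683328)

	if ws, ok := workingSet(usage, inactive); ok {
		fmt.Println("working set:", ws)
	} else {
		fmt.Printf("unable to account working set: total_inactive_file (%d) > memory usage (%d)\n",
			inactive, usage)
	}

	// Without the guard, the unsigned subtraction wraps around to a huge value.
	fmt.Println("naive difference:", usage-inactive)
}
```

Returning an `ok` flag instead of silently clamping to zero lets the caller decide whether to log a warning, as in the journal lines above, or to report a fallback value.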

Running `kubectl top nodes` on the cluster gives this output:

```
NAME                         CPU(cores)   CPU%        MEMORY(bytes)   MEMORY%
frederica.fuwafuwatime.moe   1867m        23%         17545Mi         55%
ram.fuwafuwatime.moe         <unknown>    <unknown>   <unknown>       <unknown>
rem.fuwafuwatime.moe         <unknown>    <unknown>   <unknown>       <unknown>
```

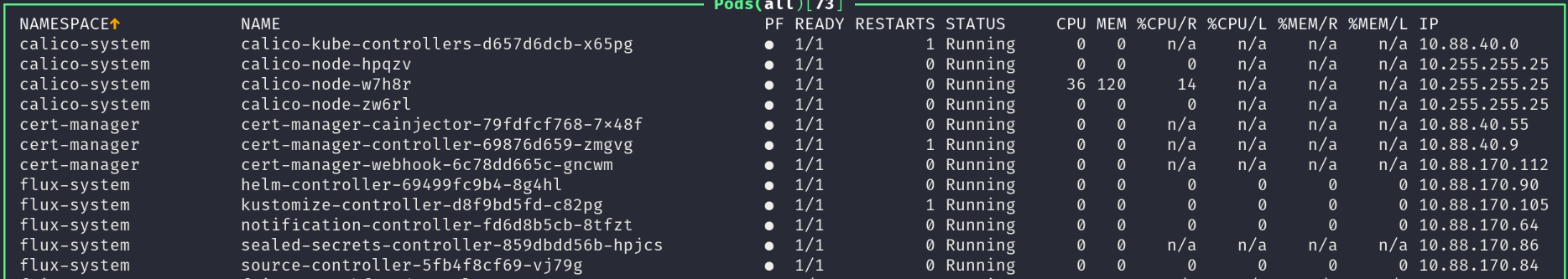

And in k9s, the pods running on these nodes report no CPU/memory usage (0%):

What did you expect to happen?

Metrics should be visible from these nodes, regardless of workload.

How can we reproduce it (as minimally and precisely as possible)?

This is what I have done:

- Deploy a 3-node Kubernetes cluster using `kubeadm` and CRI-O (one “master” node and 2 worker nodes, all 3 running control plane pods).
- Deploy Calico.
- Deploy `metrics-server`.
- Run some workloads on the cluster.

The issue seems to occur mostly on nodes with at least about 50% memory utilization, but I haven’t found a reliable reproducer yet.

Anything else we need to know?

SELinux is enabled on the hosts in this cluster using Gentoo’s fork of refpolicy, but I have been able to reproduce this issue even when SELinux is in permissive mode.

CRI-O and Kubernetes version

```
$ crio --version
crio version 1.26.0
Version: 1.26.0
GitCommit: unknown
GitCommitDate: unknown
GitTreeState: clean
BuildDate: 2022-12-25T22:53:52Z
GoVersion: go1.19.4
Compiler: gc
Platform: linux/amd64
Linkmode: dynamic
BuildTags:
  containers_image_ostree_stub
  exclude_graphdriver_btrfs
  btrfs_noversion
  containers_image_openpgp
  seccomp
  selinux
LDFlags: -s -w -X github.com/cri-o/cri-o/internal/pkg/criocli.DefaultsPath="" -X github.com/cri-o/cri-o/internal/version.buildDate=2022-12-25T22:53:52Z
SeccompEnabled: true
AppArmorEnabled: false
Dependencies:
```

```
$ kubectl version -o yaml
clientVersion:
  buildDate: "2023-03-10T00:50:26Z"
  compiler: gc
  gitCommit: fc04e732bb3e7198d2fa44efa5457c7c6f8c0f5b
  gitTreeState: archive
  gitVersion: v1.26.2
  goVersion: go1.20.1
  major: "1"
  minor: "26"
  platform: linux/amd64
kustomizeVersion: v4.5.7
serverVersion:
  buildDate: "2023-02-22T13:32:22Z"
  compiler: gc
  gitCommit: fc04e732bb3e7198d2fa44efa5457c7c6f8c0f5b
  gitTreeState: clean
  gitVersion: v1.26.2
  goVersion: go1.19.6
  major: "1"
  minor: "26"
  platform: linux/amd64
```

OS version

```
# On Linux:
$ cat /etc/os-release
NAME=Gentoo
ID=gentoo
PRETTY_NAME="Gentoo Linux"
ANSI_COLOR="1;32"
HOME_URL="https://www.gentoo.org/"
SUPPORT_URL="https://www.gentoo.org/support/"
BUG_REPORT_URL="https://bugs.gentoo.org/"
VERSION_ID="2.13"
$ uname -a
Linux ram.fuwafuwatime.moe 6.1.15-gentoo-hardened1 #1 SMP Tue Mar 7 19:50:39 EST 2023 x86_64 AMD EPYC 7313 16-Core Processor AuthenticAMD GNU/Linux
```

Additional environment details (AWS, VirtualBox, physical, etc.)

About this issue


- State: open

- Created a year ago

- Comments: 24 (10 by maintainers)

Yeah, this is a frequent source of confusion (and a piece of code I have issues about). The kubelet does a precise string match, not a path match, so when the path is set to `/run/crio/crio.sock`, the kubelet chooses the CRI stats provider, which hits this problem.