watchtower: watchtower interferes with itself when upgrading itself (regression)

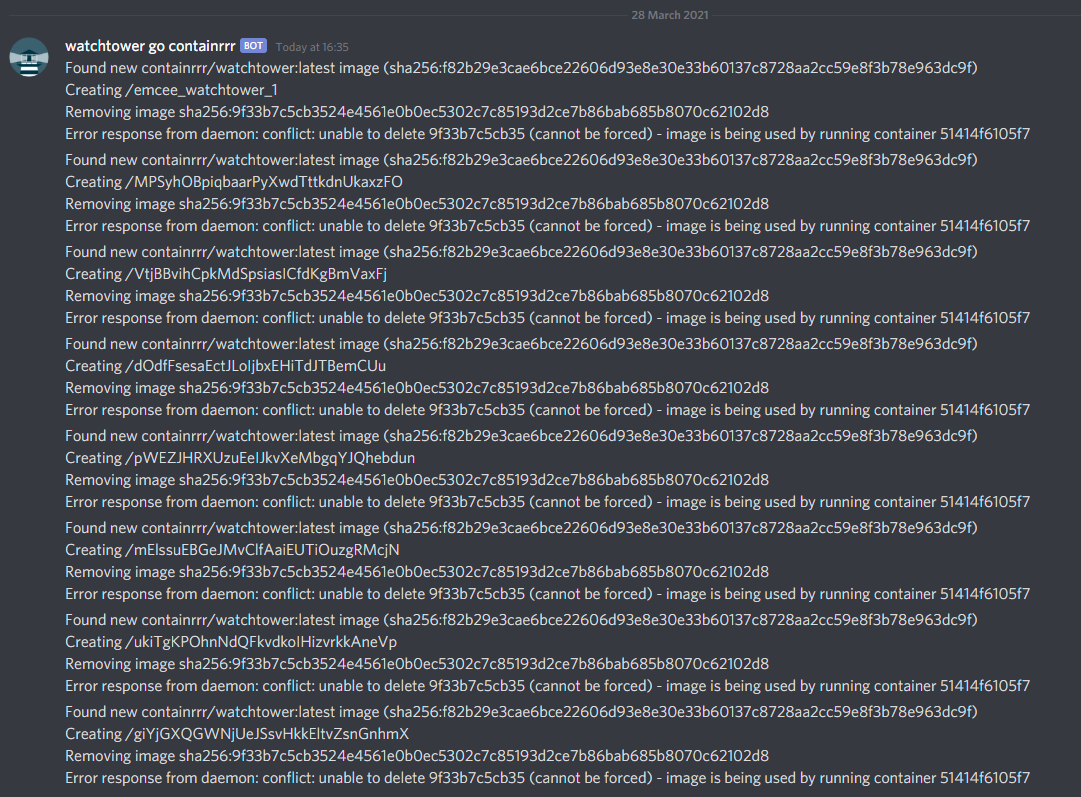

Describe the bug

I have a Compose deployment in which each container is labelled with a watchtower scope, so the watchtower instance it starts is responsible only for the containers in this stack. Today at 16:35 Eastern (2021-03-28 20:35 UTC), a new `latest` tag was pushed to Docker Hub, and the error from #747 reoccurred. This is a regression, because earlier watchtower self-updates had succeeded since then.
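
For readers unfamiliar with scoping: watchtower's documentation describes a `--scope` option under which an instance only manages containers whose `com.centurylinklabs.watchtower.scope` label has the same value. The Python sketch below models that selection rule under that assumption. It is not watchtower's implementation (watchtower is written in Go). The `Container` type, `in_scope`, and `select_scoped` are hypothetical names, and the sketch only covers the case where a scope is set.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Label key that watchtower documents for scoping; the rest of this sketch is hypothetical.
SCOPE_LABEL = "com.centurylinklabs.watchtower.scope"


@dataclass
class Container:
    """Minimal stand-in for a container record (hypothetical type)."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def in_scope(container: Container, scope: str) -> bool:
    """True if the container's scope label equals the instance's scope value."""
    return container.labels.get(SCOPE_LABEL) == scope


def select_scoped(containers: list[Container], scope: str) -> list[Container]:
    """Keep only the containers that an instance started with this scope would manage."""
    return [c for c in containers if in_scope(c, scope)]
```

With a hypothetical scope value such as `emcee`, a stack labelled like the one in this report would keep `emcee_watchtower_1` itself in scope. That is presumably how the instance ends up updating its own container.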

To Reproduce

A simplified version of the compose file actually used is in the previous issue.

Expected behavior

I expected watchtower to update itself as it does with other containers, without sending a bunch of errors, fighting itself, and segfaulting. I also expected the updated container to have the same name as initialized by Compose (emcee-tournament-bot_watchtower_1).

Screenshots

Several containers were created and got stuck in the restarting state before I ran `docker-compose stop watchtower` to stop them all.

    $ docker-compose ps
    Name                               Command                          State          Ports
    -----------------------------------------------------------------------------------------------------------
    MPSyhOBpiqbaarPyXwdTttkdnUkaxzFO   /watchtower                      Exit 1
    VtjBBvihCpkMdSpsiasICfdKgBmVaxFj   /watchtower                      Exit 1
    dOdfFsesaEctJLoIjbxEHiTdJTBemCUu   /watchtower                      Exit 1
    dQSbCsgHvNfKmYmDLzsOkRfLdgaxEqzh   /watchtower                      Exit 1
    emcee_adminer_1                    entrypoint.sh docker-php-e ...   Up (healthy)   127.0.0.1:8080->8080/tcp
    emcee_backup_1                     /bin/sh -c exec /usr/local ...   Up (healthy)   5432/tcp
    emcee_bot_1                        docker-entrypoint.sh node ...    Up
    emcee_postgres_1                   docker-entrypoint.sh postgres    Up (healthy)   127.0.0.1:5432->5432/tcp
    emcee_watchtower_1                 /watchtower                      Exit 1
    giYjGXQGWNjUeJSsvHkkEltvZsnGnhmX   /watchtower                      Exit 1
    mElssuEBGeJMvClfAaiEUTiOuzgRMcjN   /watchtower                      Exit 1
    pWEZJHRXUzuEeIJkvXeMbgqYJQhebdun   /watchtower                      Exit 1
    qEvzDrawKPMoLHgYTzmYqJRZfoeiOWKH   /watchtower                      Exit 1
    ukiTgKPOhnNdQFkvdkoIHizvrkkAneVp   /watchtower                      Exit 1
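
The leftover containers in the `docker-compose ps` output have 32-letter random names and run `/watchtower`. This suggests they are watchtower containers left behind by the repeated self-update attempts. As a hypothetical cleanup aid (not part of watchtower or Compose), the following Python sketch picks those rows out of `docker-compose ps` text. It uses that naming pattern as a heuristic. The function name and the heuristic itself are assumptions.

```python
from __future__ import annotations

import re

# Heuristic (assumption): a leftover row has a name of exactly 32 ASCII letters
# followed by the /watchtower command. Compose-assigned names such as
# emcee_watchtower_1 contain underscores and digits, so they do not match.
LEFTOVER_ROW = re.compile(r"^(?P<name>[A-Za-z]{32})\s+/watchtower\b")


def leftover_watchtower_names(ps_output: str) -> list[str]:
    """Return names of rows in `docker-compose ps` text that match the heuristic."""
    names = []
    for line in ps_output.splitlines():
        match = LEFTOVER_ROW.match(line.strip())
        if match:
            names.append(match.group("name"))
    return names
```

You could review the resulting names by hand and then remove them with `docker rm`.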

Environment

- Ubuntu 18.04.5 LTS, x86_64
- Docker version 20.10.2, build 2291f61
- docker-compose version 1.27.4, build 40524192

This happened in production, so I'm not running with `--debug`. The logs match the webhook output sent to Discord.


About this issue


- State: closed

- Created 3 years ago

- Reactions: 10

- Comments: 19 (10 by maintainers)

Also, with the 1.2.1 update I get some weird-looking notifications from watchtower on first start:

Glad to hear!

Yeah, the message is intentional, although there still seem to be a couple of kinks to iron out.

Terribly sorry for any inconvenience this may have caused you.

1.2.1 should resolve the self-replication issue. If not, please let me know.

I’m also having the same problem: none of my servers are responding because of the large number of Docker containers.