terraform-provider-confluent: Bug: KsqlAdmin role for ksqldb doesn't work

Steps to reproduce

- Use this example to create a ksqlDB cluster: https://github.com/confluentinc/terraform-provider-confluent/blob/master/examples/configurations/ksql-acls/main.tf

- Connect to ksqlDB with the “app-manager-kafka-api-key” key/secret pair:

$CONFLUENT_HOME/bin/ksql -u $KSQL_API_KEY -p $KSQL_API_SECRET https://pksqlc-<ID>.eu-central-1.aws.confluent.cloud:443

- Check the streams:

list streams;

Expected result

ksql> list streams;

Stream Name | Kafka Topic | Key Format | Value Format | Windowed

------------------------------------------------------------------------------------------

KSQL_PROCESSING_LOG | pksqlc-<ID>-processing-log | KAFKA | JSON | false

------------------------------------------------------------------------------------------

Actual result

ksql> list streams;

You are forbidden from using this cluster.

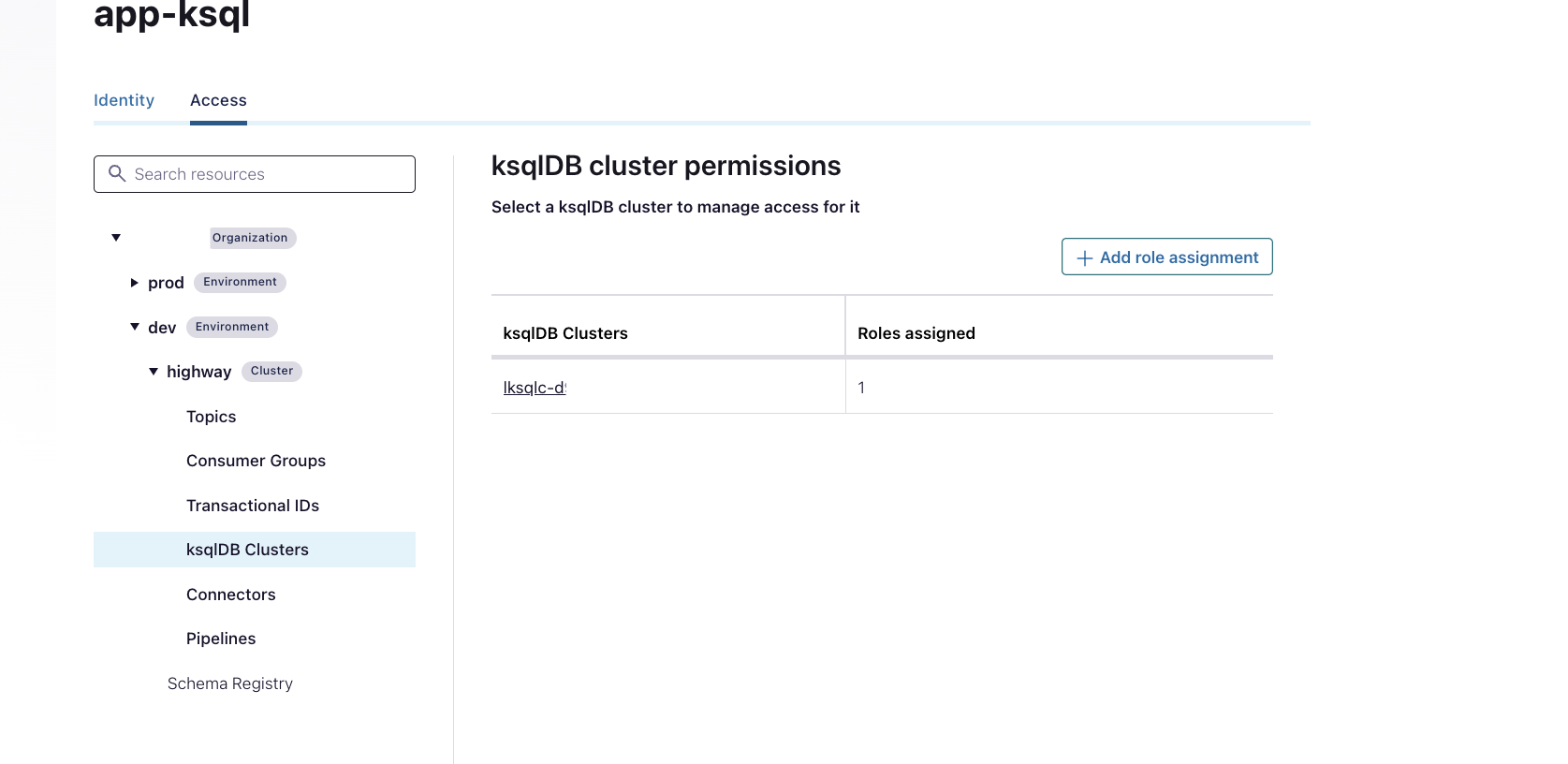

If you check the GUI, you will see that this role is bound to the ksqlDB cluster ID, whereas in the documentation it is bound to the cluster name.

When you add this role by hand in the GUI, everything works.

About this issue

- State: closed

- Created a year ago

- Comments: 16 (3 by maintainers)

@Asvor @S1M0NM could you try again with

It seems to work now (after we released a backend fix).

If I understand you correctly, it’s probably because you’re using the wrong API key. The

app-manager-kafka-api-keybelongs to theapp-managerservice account.The

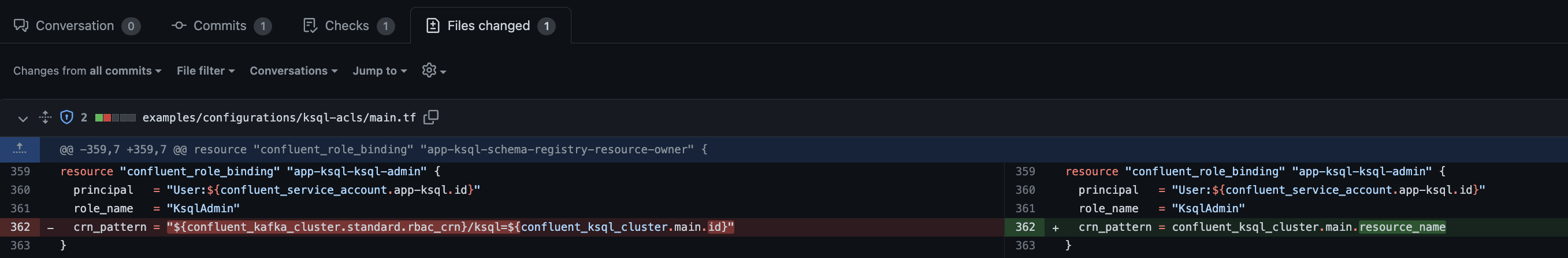

KsqlAdminrole, however, is assigned to theapp-ksqlservice account, which in turn gets theapp-ksqldb-api-keycreated. This key can then be used for the KSQL cluster.update: we updated ksql-rbac example to replace

CloudClusterAdminrole withResourceOwnerandKsqlAdminroles in 1.33.0.update: @Asvor @S1M0NM we’re working on a fix.
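For reference, here is a minimal sketch of the setup the maintainer describes: KsqlAdmin bound to the app-ksql service account, plus a ksqlDB API key owned by that account. It assumes resource names modelled on the ksql-rbac example (app-ksql, main, and the environment name staging are assumptions) and a provider version in which confluent_ksql_cluster exposes resource_name. The ResourceOwner bindings from the updated example are omitted. This is not a verified fix for the CRN mismatch discussed in this thread.

```hcl
# Sketch only: confluent_service_account.app-ksql, confluent_ksql_cluster.main
# and confluent_environment.staging are assumed to be defined elsewhere,
# as in the ksql-rbac example.

# Grant KsqlAdmin on the ksqlDB cluster itself instead of CloudClusterAdmin
# on the whole Kafka cluster.
resource "confluent_role_binding" "app-ksql-ksql-admin" {
  principal   = "User:${confluent_service_account.app-ksql.id}"
  role_name   = "KsqlAdmin"
  # Assumption: resource_name is the ksqlDB cluster's CRN.
  crn_pattern = confluent_ksql_cluster.main.resource_name
}

# ksqlDB API key owned by app-ksql. This is the key/secret pair to pass to
# the ksql CLI (-u / -p), not app-manager-kafka-api-key.
resource "confluent_api_key" "app-ksqldb-api-key" {
  display_name = "app-ksqldb-api-key"
  description  = "ksqlDB API key owned by the app-ksql service account"

  owner {
    id          = confluent_service_account.app-ksql.id
    api_version = confluent_service_account.app-ksql.api_version
    kind        = confluent_service_account.app-ksql.kind
  }

  managed_resource {
    id          = confluent_ksql_cluster.main.id
    api_version = confluent_ksql_cluster.main.api_version
    kind        = confluent_ksql_cluster.main.kind

    environment {
      id = confluent_environment.staging.id
    }
  }

  # Create the key only after the role binding exists.
  depends_on = [confluent_role_binding.app-ksql-ksql-admin]
}
```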

I tried it just now because I wasn't sure, and it doesn't work either:

The CRN pattern used doesn’t look right… and it also results in a failure:

Hi! Yes, I mixed up the key name in the ticket. However, I checked this behaviour on our dev cluster with the correct key/secret pair and had the same problem with the KsqlAdmin role. (I had to grant “CloudClusterAdmin” to make it work, which is a big security issue.) Could you please check this behaviour?