concourse: Performance regression with 'overlay' driver and privileged containers

Bug Report

- Concourse version: 3.3.1

- Deployment type (BOSH/Docker/binary): binary

- Infrastructure/IaaS: AWS

- Browser (if applicable): chrome

- Did this used to work? Yes

We are noticing that various tasks are hanging for 1-10m before executing. (the ui doesn’t show the ‘loading’ icon spinning, just hangs)

Our setup:

- 2 workers with 16gb RAM and 4 cores, 1 web with 8gb and 4 cores (IIRC)

- Postgres db lives on the web VM

- Workers and web never get above 10% CPU

- Three pipelines with < 5 jobs, 1 pipeline with ~20 jobs, some of which have 3000+ builds (most are low hundreds though)

Things we have tried:

- Set

build_logs_to_retain: 20per @jtarchie

This was also seen by @krishicks in the Slack concourse#general channel, I believe.

About this issue

- Original URL

- State: closed

- Created 7 years ago

- Comments: 24 (12 by maintainers)

Commits related to this issue

- bump baggageclaim concourse/concourse#1404 Submodule src/github.com/concourse/baggageclaim a6dbd0b8..71a864b0: > Change the default driver to btrfs Signed-off-by: Shash Reddy <sreddy@pivotal.io> — committed to concourse/concourse by deleted user 6 years ago

- bump etree tsa handlers mux clockwork goxmldsig genproto grpc Submodule src/github.com/beevik/etree 90dafc1e..4cd0dd97 (rewind): < add attribute sort support. < Release v1.0.1 < Update path doc... — committed to concourse/concourse by vito 6 years ago

For anyone not following along in #1966, we found and fixed the source of a lot of the

btrfsinstability that led us to switching tooverlayin the first place. We can now more easily recommend that people switch back to it with the next release of Concourse (3.9), and we’ll consider changing the default in the future. Until we either change the default or find a way to improve the overlay performance (not likely), I’ll leave this issue open.I see that issue on my concourse setup, version 4.2.1. Is there any information that I should provide?

We have the same issue with 4.2.1 with the binary release.

I still see this issue in 3.14.1 even after switching to btrfs driver… the job just keeps waiting and sometimes has ‘waiting for docker to come up…’ for more than 15 minutes…

We did see improvement when switching back.

I had forgotten about this issue and realized it was affecting me on a new Concourse deploy. Thanks for reminding me to switch drivers! On Thu, Dec 28, 2017 at 09:56 Timothy R. Chavez notifications@github.com wrote:

With the switch to

overlayby default in 3.1, the tradeoff is that privileged tasks/resources (i.e. the Docker Image resource) have a performance penalty.Unfortunately with container tech as it is today, you either get instability (

btrfs) or slowness (overlay). We chose the latter.Here are a few paths forward:

shiftfsgets merged and we can kill this nasty performance overhead.put, if it’s the case that it’s taking so long to transfer data that isn’t actually needed by the task/put. See https://github.com/concourse/concourse/issues/1202I was using the following and DID NOT see this issue:

I recently upgraded to Concourse 3.3.4, and I DO see this issue.

I upgraded to Concourse 3.1.1 in between 3.0.1 and 3.3.4 and I don’t believe I saw the issue, but I could be mistaken.

Note: we are using the default baggage claim driver: overlay fs in 3.3.4

It seems like certain Jobs are affected by it (more so than others).

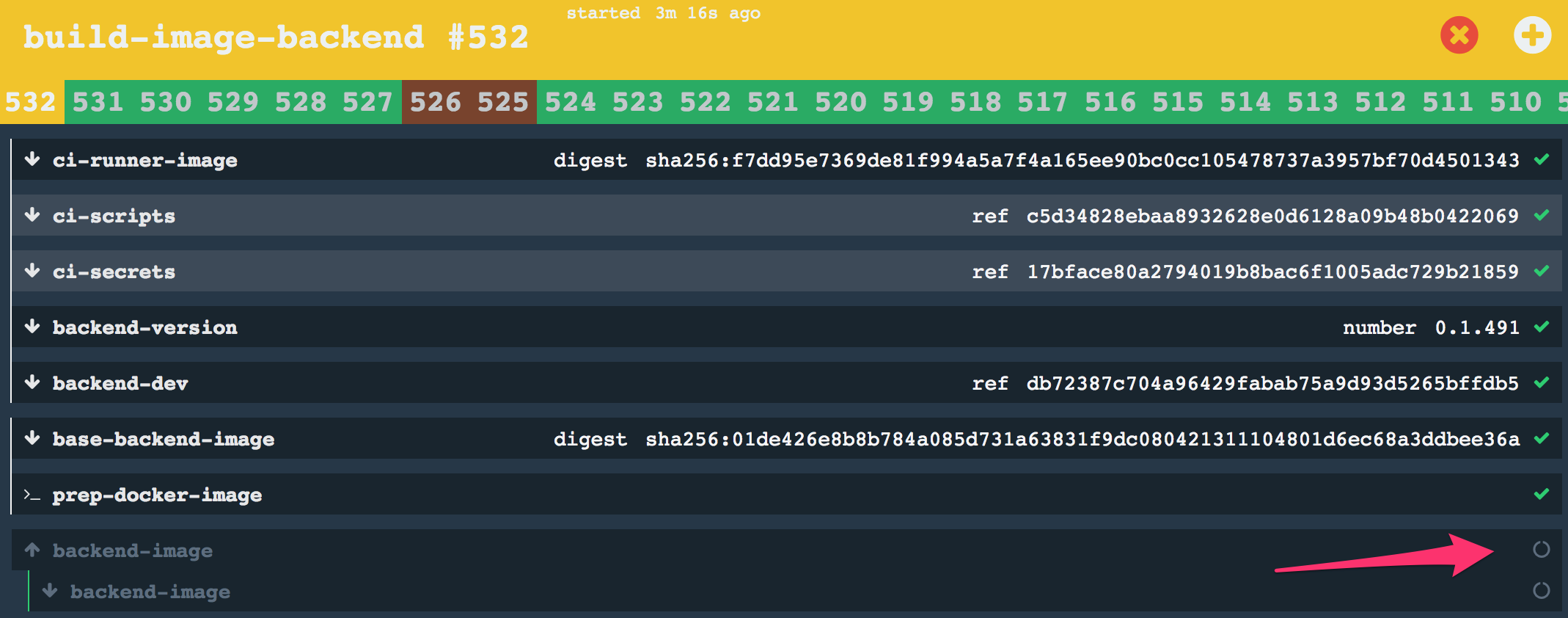

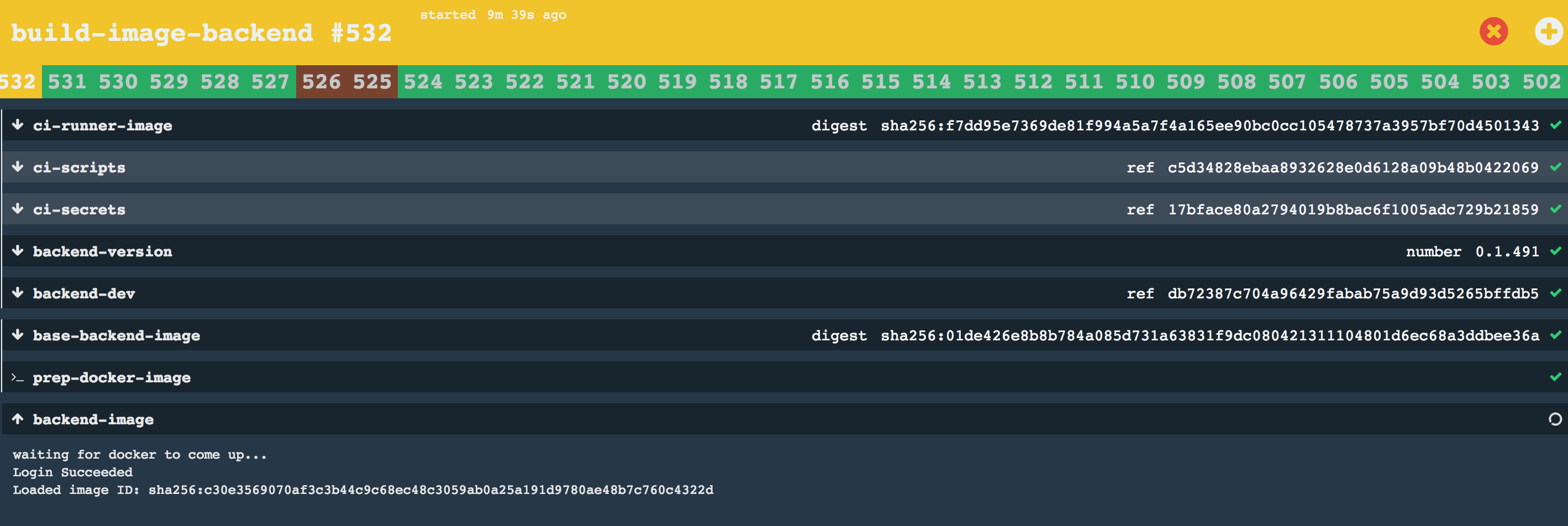

I have one job that builds a docker image (see below)

All the tasks run quick, with no lag… but when it gets to the docker-image-resource “put” task for “app-image” it takes 6+ minutes for it to start spinning…

During that 6+ minutes, it visually just looks like it has not started.

I also see the there is a container listed for that task when I do a

fly containersHowever I cannotinterceptinto it… (get a ssh bad handshake… so I assume its because it has NOT yet spun up the container ? )Before:

After:

During this 6+ minutes, I did a “watch” on fly volumes for the container handle that is associated with that put task. I see a few container entries for fly containers for that handle… then after about 6 minutes, I do see a new volume entry show up in the

watch fly volumes | grep the-container-handle… so seems like its related to fs or volumes?It would be great to get this fixed… also if there is something I could look into… logs or anything to help track down, would be appreciated.

We got alot of gains with the new

cachesfeature, but lost them with some CI pipelines w/ this noticeable lag 😃Cheers