ClickHouse: Possible memory leak

ClickHouse version: 21.6.6.51 (cannot update until https://github.com/ClickHouse/ClickHouse/issues/32744 is released).

Memory usage is increasing constantly and, when the RSS limit is reached, CH fails random queries (inserts into/selects from different tables). We cannot find where the memory is.

Additional information:

- All queries are done by users with

max_memory_usage=750MiB. - We insert rows in batches every 100ms into 32

Buffertables, totally ~50k rows/s. - We use live views and don’t use dictionaries.

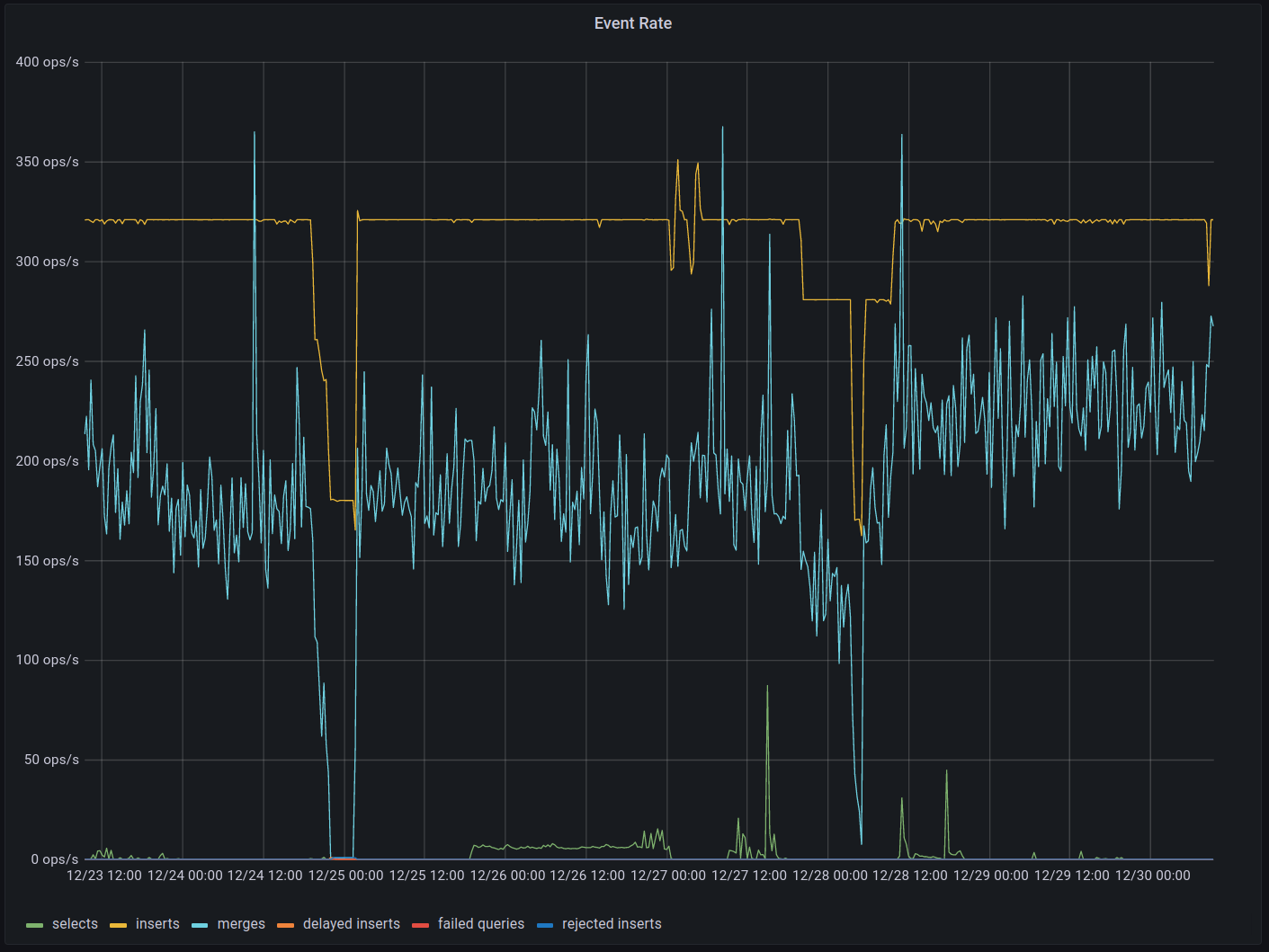

Metrics

Metrics (sharp drops are restarts). Here we set max_server_memory_usage_to_ram_ratio to 0.3 (37GiB) to show peaks. The same behavior remains with 0.9 (114GiB).

Suspiciously high values of purged metrics.

Don’t see any correlation with different events:

Attempts to find the reason

SELECT formatReadableSize(sum(total_bytes)) FROM system.tables WHERE engine IN ('Memory','Set','Join');

172.57 KiB

SELECT formatReadableSize(sum(memory_usage)) FROM system.merges;

249.99 MiB

SELECT formatReadableSize(sum(memory_usage)) FROM system.processes;

36.97 MiB

SELECT formatReadableSize(sum(memory_usage)), formatReadableSize(sum(peak_memory_usage)) FROM system.processes;

0.00 B │ 0.00 B

SELECT

metric,

formatReadableSize(value)

FROM system.asynchronous_metrics

WHERE metric IN ('UncompressedCacheBytes', 'MarkCacheBytes')

┌─metric─────────────────┬─formatReadableSize(value)─┐

│ UncompressedCacheBytes │ 0.00 B │

│ MarkCacheBytes │ 55.91 MiB │

└────────────────────────┴───────────────────────────┘

SELECT

formatReadableSize(sum(primary_key_bytes_in_memory)) AS primary_key_bytes_in_memory,

formatReadableSize(sum(primary_key_bytes_in_memory_allocated)) AS primary_key_bytes_in_memory_allocated

FROM system.parts

┌─primary_key_bytes_in_memory─┬─primary_key_bytes_in_memory_allocated─┐

│ 337.63 MiB │ 624.06 MiB │

└─────────────────────────────┴───────────────────────────────────────┘

Logs

Regularly (1-2 times per hour) wee see the following pattern in logs:

2021.12.28 10:22:33.397711 [ 1854971 ] {1822bef8-7c15-42d4-9113-a1bce442a4c0} <Error> executeQuery: Code: 24, e.displayText() = DB::Exception: Cannot write to ostream at offset 59798 (version 21.6.6.51 (official build)) (from 10.221.8.220:46046) (in query: WATCH lv_25bed493f7b3a63aade737679c0974f3f320bd99 FORMAT JSONEachRowWithProgress ), Stack trace (when copying this message, always include the lines below):

2021.12.28 10:22:33.400234 [ 1854971 ] {1822bef8-7c15-42d4-9113-a1bce442a4c0} <Error> DynamicQueryHandler: Code: 24, e.displayText() = DB::Exception: Cannot write to ostream at offset 59798, Stack trace (when copying this message, always include the lines below):

2021.12.28 10:22:33.400944 [ 1854971 ] {1822bef8-7c15-42d4-9113-a1bce442a4c0} <Error> DynamicQueryHandler: Cannot send exception to client: Code: 24, e.displayText() = DB::Exception: Cannot write to ostream at offset 59871, Stack trace (when copying this message, always include the lines below):

2021.12.28 10:22:33.402017 [ 1854971 ] {} <Error> ServerErrorHandler: Poco::Exception. Code: 1000, e.code() = 32, e.displayText() = I/O error: Broken pipe, Stack trace (when copying this message, always include the lines below):

This may be related to the client library (maybe doesn’t read the whole response).

LiveView is simple enough:

CREATE LIVE VIEW algo.lv_17cc0dbf4232839381bb35d6b65ee11f8b51b1bb WITH TIMEOUT 5

(`now64(9)` DateTime64(9)) AS

SELECT now64(9) FROM ... WHERE some_id IN

(

SELECT some_id

FROM subscribers

)

LIMIT 1

What can I check else?

I haven’t found related issues, except for https://github.com/ClickHouse/ClickHouse/issues/32750, but we don’t use cancel_http_readonly_queries_on_client_close.

About this issue

- Original URL

- State: closed

- Created 3 years ago

- Reactions: 4

- Comments: 15 (12 by maintainers)

That’s another problem (I’ve faced it in https://github.com/ClickHouse/ClickHouse/issues/22996), likely not related here, but also inconvenient.