cilium: Unexpected DROPPED with FQDN policies

Is there an existing issue for this?

- I have searched the existing issues

What happened?

I am seeing unexpected DROPPED verdicts for traffic that should always be allowed. Here is the policy definition:

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: egress-volvo
  namespace: default
spec:
  endpointSelector:
    matchLabels:
      app: volvo
      app.kubernetes.io/name: volvo
      io.kubernetes.pod.namespace: default
      workload.user.cattle.io/workloadselector: deployment-default-volvo
  egress:
  - toPorts:
    - ports:
      - port: "3306"
        protocol: TCP
    toFQDNs:
    - matchName: cloudsql-prod-open-payment-mysql-0.tiki.services
  - toPorts:
    - ports:
      - port: "443"
        protocol: TCP
    toFQDNs:
    - matchName: api.tiki.vn
    - matchName: confo.tiki.services
    - matchName: es.logging.checkout.tiki.services
    - matchName: sentry.tiki.com.vn
  - toPorts:
    - ports:
      - port: "80"
        protocol: TCP
    toFQDNs:
    - matchName: confo.tiki.services
    - matchName: es.logging.checkout.tiki.services
    - matchName: gcp-gw-mch.tiki.services
    - matchName: themis.tiki.services
  - toPorts:
    - ports:
      - port: "9092"
        protocol: TCP
    toFQDNs:
    - matchPattern: vm-prod-core-kafka-*.svr.tiki.services
    - matchPattern: vdc-kafka-*.svr.tiki.services
  - toEntities:
    - cluster
    - health
  - toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: kube-system
        k8s:k8s-app: kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: UDP
      rules:
        dns:
        - matchPattern: '*'
  - toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: kube-system
        k8s:k8s-app: tiki-node-local-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: UDP
      rules:
        dns:
        - matchPattern: '*'

The application has worked well with the policy above so far, but Hubble occasionally reports dropped packets with the following TCP flags:

- ACK, or
- ACK, PSH

The SYN flag never appears in these drops.

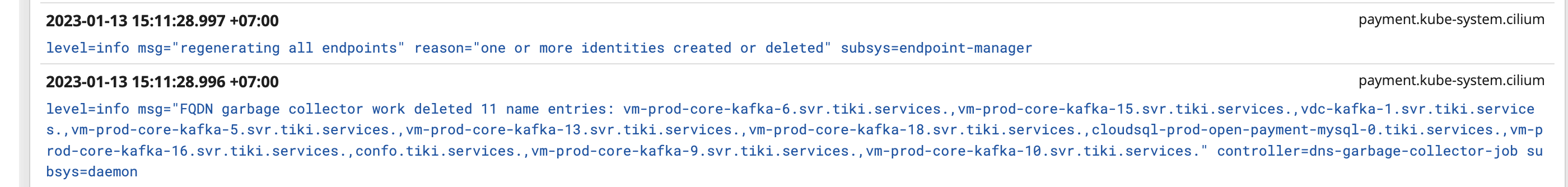

This often happens right after the Cilium agent has cleaned up the related FQDN cache entries. Let's look at the dropped payload and the Cilium log.

Dropped payload:

{
  "time": "2023-01-13T08:11:29.870129267Z",
  "verdict": "DROPPED",
  "drop_reason": 133,
  "ethernet": {
    "source": "06:0a:1f:94:83:02",
    "destination": "c2:e6:fa:89:78:cd"
  },
  "IP": {
    "source": "10.240.35.1",
    "destination": "10.8.3.212",
    "ipVersion": "IPv4"
  },
  "l4": {
    "TCP": {
      "source_port": 45476,
      "destination_port": 9092,
      "flags": {
        "PSH": true,
        "ACK": true
      }
    }
  },
  "source": {
    "ID": 3299,
    "identity": 6906664,
    "namespace": "default",
    "labels": [
      "k8s:app.kubernetes.io/managed-by=spinnaker",
      "k8s:app.kubernetes.io/name=volvo",
      "k8s:app=volvo",
      "k8s:io.cilium.k8s.namespace.labels.field.cattle.io/projectId=p-x6d9r",
      "k8s:io.cilium.k8s.namespace.labels.istio.io/rev=1-9",
      "k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default",
      "k8s:io.cilium.k8s.namespace.labels.sidecar-injection=enabled",
      "k8s:io.cilium.k8s.policy.cluster=k8s-prod-payment",
      "k8s:io.cilium.k8s.policy.serviceaccount=default",
      "k8s:io.kubernetes.pod.namespace=default",
      "k8s:version=main",
      "k8s:workload.user.cattle.io/workloadselector=deployment-default-volvo"
    ],
    "pod_name": "volvo-ddbf85bfc-g9wdm",
    "workloads": [
      {
        "name": "volvo",
        "kind": "Deployment"
      }
    ]
  },
  "destination": {
    "identity": 2,
    "labels": [
      "reserved:world"
    ]
  },
  "Type": "L3_L4",
  "node_name": "k8s-prod-payment/gke-k8s-prod-payment-default-b425db48-cbzx",
  "event_type": {
    "type": 5
  },
  "traffic_direction": "EGRESS",
  "drop_reason_desc": "POLICY_DENIED",
  "Summary": "TCP Flags: ACK, PSH"
}
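To spot drops like this one in bulk, the flows can be filtered for egress policy denials on TCP packets that carry no SYN flag, i.e. packets that belong to connections which were already established. A minimal sketch, assuming one flow object per line in the same shape as the payload above; it also unwraps a top-level `flow` key in case the exporter wraps each flow that way (an assumption about the input, not something shown in this issue). The function name `midstream_policy_drops` is hypothetical.

```python
import json
from typing import Iterable, Iterator


def midstream_policy_drops(lines: Iterable[str]) -> Iterator[dict]:
    """Yield egress TCP flows denied by policy whose packet has no SYN flag."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        obj = json.loads(line)
        # Assumption: some exporters wrap each flow in a "flow" key; unwrap if present.
        flow = obj.get("flow", obj)
        if flow.get("verdict") != "DROPPED":
            continue
        if flow.get("drop_reason_desc") != "POLICY_DENIED":
            continue
        if flow.get("traffic_direction") != "EGRESS":
            continue
        tcp = flow.get("l4", {}).get("TCP")
        if tcp is None:
            continue
        flags = tcp.get("flags", {})
        # A packet without SYN belongs to a connection that was already set up,
        # so a policy drop here means an established connection was cut off.
        if flags.get("SYN"):
            continue
        yield {
            "time": flow.get("time"),
            "destination": flow.get("IP", {}).get("destination"),
            "destination_port": tcp.get("destination_port"),
            "flags": sorted(name for name, on in flags.items() if on),
        }


if __name__ == "__main__":
    # Trimmed-down copy of the dropped payload above.
    sample = {
        "time": "2023-01-13T08:11:29.870129267Z",
        "verdict": "DROPPED",
        "drop_reason_desc": "POLICY_DENIED",
        "traffic_direction": "EGRESS",
        "IP": {"source": "10.240.35.1", "destination": "10.8.3.212"},
        "l4": {"TCP": {"source_port": 45476, "destination_port": 9092,
                       "flags": {"PSH": True, "ACK": True}}},
    }
    for drop in midstream_policy_drops([json.dumps(sample)]):
        print(drop)
```

Filtering on "no SYN" rather than on specific ACK/PSH combinations keeps the check independent of which data-carrying flags happen to be set on the dropped packet.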

And the related cilium log:


Note that the destination IP 10.8.3.212 resolves to vm-prod-core-kafka-15.svr.tiki.services.
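That name should be covered by the port 9092 rule's `matchPattern`. A minimal sketch of the check, assuming the matchPattern semantics described in the Cilium documentation: `*` matches zero or more valid DNS characters within one label and does not cross a `.`, and a lone `*` (as in the DNS rules above) matches every name. The helpers `match_pattern_to_regex` and `fqdn_matches` are hypothetical and only approximate Cilium's own matcher.

```python
import re

# Assumption: characters valid inside a single DNS label, used to expand "*".
LABEL_CHARS = "[-a-zA-Z0-9_]"


def match_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Approximate translation of a toFQDNs matchPattern into a regex."""
    pattern = pattern.lower().rstrip(".")
    if pattern == "*":
        # A lone "*" matches any name.
        return re.compile(r".*")
    # Escape the literal parts; each "*" may match zero or more label
    # characters but never a ".", so it cannot span multiple labels.
    literal_parts = [re.escape(part) for part in pattern.split("*")]
    return re.compile((LABEL_CHARS + "*").join(literal_parts))


def fqdn_matches(pattern: str, name: str) -> bool:
    """True if name (case-insensitive, trailing dot ignored) matches pattern."""
    normalized = name.lower().rstrip(".")
    return match_pattern_to_regex(pattern).fullmatch(normalized) is not None


if __name__ == "__main__":
    kafka_patterns = [
        "vm-prod-core-kafka-*.svr.tiki.services",
        "vdc-kafka-*.svr.tiki.services",
    ]
    name = "vm-prod-core-kafka-15.svr.tiki.services"
    print(any(fqdn_matches(p, name) for p in kafka_patterns))  # True
```

If the name side matches, as it does here, the drop more likely points at the IP-to-name association having been removed than at the pattern itself.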

The application also appears to have failed to fetch Kafka messages:

2023-01-13 15:11:59.458 [confo-kafka-pooling-thread] INFO org.apache.kafka.clients.FetchSessionHandler (FetchSessionHandler.java:481) - [Consumer clientId=consumer-confo-client-hotcache-269af686-980e-4bde-a292-b81bb3e41adc-1, groupId=confo-client-hotcache-269af686-980e-4bde-a292-b81bb3e41adc] Error sending fetch request (sessionId=1275092103, epoch=7241) to node 15

A similar issue: https://github.com/cilium/cilium/issues/15445

Cilium Version

root@gke-k8s-prod-payment-default-b425db48-cbzx:/home/cilium# cilium version
Client: 1.12.2 c7516b9 2022-09-14T15:25:06+02:00 go version go1.18.6 linux/amd64
Daemon: 1.12.2 c7516b9 2022-09-14T15:25:06+02:00 go version go1.18.6 linux/amd64

Kernel Version

root@gke-k8s-prod-payment-default-b425db48-cbzx:/home/cilium# uname -a
Linux gke-k8s-prod-payment-default-b425db48-cbzx 5.4.202+ #1 SMP Sat Jul 16 10:06:38 PDT 2022 x86_64 x86_64 x86_64 GNU/Linux

Kubernetes Version

kubectl version
WARNING: This version information is deprecated and will be replaced with the output from kubectl version --short. Use --output=yaml|json to get the full version.
Client Version: version.Info{Major:"1", Minor:"24", GitVersion:"v1.24.0", GitCommit:"4ce5a8954017644c5420bae81d72b09b735c21f0", GitTreeState:"clean", BuildDate:"2022-05-03T13:36:49Z", GoVersion:"go1.18.1", Compiler:"gc", Platform:"darwin/arm64"}
Kustomize Version: v4.5.4
Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.14-gke.4300", GitCommit:"348bdc1040d273677ca07c0862de867332eeb3a1", GitTreeState:"clean", BuildDate:"2022-08-17T09:22:54Z", GoVersion:"go1.16.15b7", Compiler:"gc", Platform:"linux/amd64"}
WARNING: version difference between client (1.24) and server (1.21) exceeds the supported minor version skew of +/-1

Sysdump

No response

Relevant log output

No response

Anything else?

No response

Code of Conduct

- I agree to follow this project’s Code of Conduct

About this issue


- State: closed

- Created a year ago

- Reactions: 1

- Comments: 22 (8 by maintainers)

Do we have any workarounds for this? We like Cilium, but having our applications often lose connection to the databases is preventing us from adopting Cilium in our broader organization.

@jaydp17 Yes.

`tofqdns-idle-connection-grace-period` could help here, as its default is `0s`. `tofqdns-min-ttl` would force every entry whose TTL is below the configured minimum up to that minimum, for example to `1h` if `tofqdns-min-ttl` is `1h`. I think `tofqdns-idle-connection-grace-period` is a more direct way to achieve what you want. As for `tofqdns-max-deferred-connection-deletes`, the default is already 10k IPs; unless you are hitting this problem with more than 10k IPs, I don't think increasing this option will have any impact.

@luanphantiki I'm fairly confident this is related to https://github.com/cilium/cilium/pull/22252. Can you upgrade to the latest RC of 1.13, or at least run with the PR's changes on top of your version?
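To make the difference between `tofqdns-min-ttl` and `tofqdns-idle-connection-grace-period` concrete, here is a toy model of an FQDN allow cache. It is NOT Cilium's implementation: the `ToyFqdnCache` class, its fields, and the timeline in `scenario` are hypothetical and only mirror the described semantics (min TTL raises short DNS TTLs to a floor; the grace period keeps an expired IP while a connection to it was recently active). The model shows how a cache cleanup can cut off an established connection, so the next ACK/PSH packet is denied.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ToyFqdnCache:
    """Hypothetical FQDN IP allow cache; not how Cilium stores entries.

    min_ttl: floor applied to DNS TTLs (analogous to tofqdns-min-ttl).
    grace:   how long after its last packet an idle connection still keeps an
             expired IP alive (analogous to tofqdns-idle-connection-grace-period).
    """
    min_ttl: float = 0.0
    grace: float = 0.0
    expiry: Dict[str, float] = field(default_factory=dict)     # ip -> expiry time
    last_seen: Dict[str, float] = field(default_factory=dict)  # ip -> last packet time

    def on_dns_answer(self, ip: str, ttl: float, now: float) -> None:
        self.expiry[ip] = now + max(ttl, self.min_ttl)

    def on_packet(self, ip: str, now: float) -> bool:
        """Return True if the packet is allowed (IP still in the cache)."""
        if ip not in self.expiry:
            return False
        self.last_seen[ip] = now
        return True

    def gc(self, now: float) -> None:
        """Drop expired IPs unless a connection used them within the grace period."""
        for ip, exp in list(self.expiry.items()):
            expired = exp <= now
            idle_for = now - self.last_seen.get(ip, float("-inf"))
            if expired and idle_for > self.grace:
                del self.expiry[ip]
                self.last_seen.pop(ip, None)


def scenario(min_ttl: float, grace: float) -> bool:
    """DNS answer with a 30s TTL at t=0, traffic until t=55, cleanup at t=60."""
    cache = ToyFqdnCache(min_ttl=min_ttl, grace=grace)
    ip = "10.8.3.212"
    cache.on_dns_answer(ip, ttl=30, now=0)
    for t in (1, 20, 40, 55):
        cache.on_packet(ip, now=t)
    cache.gc(now=60)
    # Next packet on the already-established connection (e.g. ACK, PSH).
    return cache.on_packet(ip, now=70)


if __name__ == "__main__":
    for min_ttl, grace in [(0, 0), (0, 10), (3600, 0)]:
        print(f"min_ttl={min_ttl} grace={grace} allowed={scenario(min_ttl, grace)}")
```

In this toy timeline, the default-like settings (`0`, `0`) drop the packet, while either a 10s grace period or a 1h minimum TTL keeps it allowed. The grace period only protects IPs that were recently in use, whereas the minimum TTL keeps every short-lived entry around longer.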