cert-manager: Error upgrading cert-manager to v1.7

Hi, I’ve been struggling with this issue for a couple of weeks now.

To give some background to the error, one of our AKS clusters was upgraded from 1.19.11 to 1.22.6. I know that support for v1alpha2 has been removed from this version of AKS, but unfortunately the cluster was upgraded before cert-manager was. Before the upgrade, the cluster was running v0.13.0 of cert-manager (a very old version, I know).

I’ve tried removing all the Cert-Manager resources using the instructions for Helm in the Cert-Manager documentation; this seems to clean everything up ok.

I installed a newer version of cert-manager using `helm upgrade --install cert-manager --namespace cert-manager --create-namespace --set installCRDs=true --set extraArgs='{--dns01-recursive-nameservers-only,--dns01-recursive-nameservers=x.x.x.x:53}' --version v1.7.1 jetstack/cert-manager`

The message I get back indicates that cert-manager has been installed successfully:

    Release "cert-manager" does not exist. Installing it now.
    NAME: cert-manager
    LAST DEPLOYED: Thu Feb 24 16:56:00 2022
    NAMESPACE: cert-manager
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    cert-manager v1.7.1 has been deployed successfully!

    In order to begin issuing certificates, you will need to set up a ClusterIssuer
    or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

    More information on the different types of issuers and how to configure them
    can be found in our documentation:

    https://cert-manager.io/docs/configuration/

    For information on how to configure cert-manager to automatically provision
    Certificates for Ingress resources, take a look at the ingress-shim
    documentation:

    https://cert-manager.io/docs/usage/ingress/

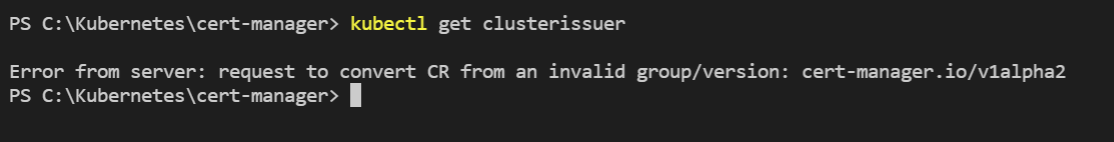

But when I go to look at the cluster issuer I get the following error

I have tried creating a new cluster issuer using the following code:

    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-prod
    spec:
      acme:
        server: https://acme-v02.api.letsencrypt.org/directory
        email: someone@somewhere.com
        privateKeySecretRef:
          name: sisca-challenge
        solvers:
        - selector: {}
          dns01:
            rfc2136:
              nameserver: x.x.x.x:53
              tsigKeyName: aks-apicluster-11114349-vmss-mkpv-grp-dnssv1.somewhere.com.
              tsigAlgorithm: HMACMD5
              tsigSecretSecretRef:
                name: tsig-secret
                key: tsig-secret-key

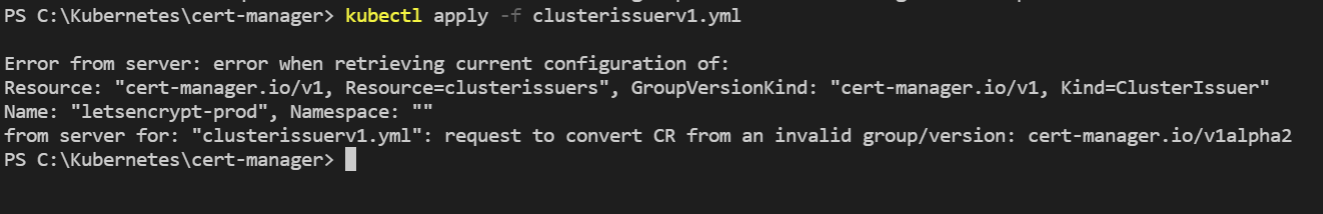

Again I get an error related to cert-manager.io/v1alpha2

Any help would be greatly appreciated.

About this issue

- State: closed
- Created 2 years ago
- Reactions: 5
- Comments: 22

I faced the same issue, @RobinJ1995.

We upgraded to 1.8 and weren’t able to issue new certificates.

I tried to patch the CRDs and run the cmctl upgrade as mentioned. After downgrading to 1.5, running `cmctl upgrade migrate-api-version` (which reported `Nothing to do`) and patching the CRDs, I still faced the conversion from `acme.cert-manager.io/v1alpha2` issue.

Based on the conversation above, I figured we had an issue with one dangling `challenge` too. There were no challenges displayed even though we had some open certificate requests and orders.

In our case I knew which namespace was problematic, as I wasn’t able to list the challenges in that particular namespace. The same v1alpha2 conversion problem also appeared with `--all-namespaces`. Since I knew which namespace was problematic, this might not apply to the issue you are facing, Robin.

After running

I was able to resolve this by installing the old CRDs (from v1.4.0) while being on Version 1.5 of cert-manager via crds-managed-separately.

After installing the old CRDs, the dangling challenge was finally displayed (it was pretty old… 🙄), and I was able to remove it simply via

After the old challenge was removed, new challenges were immediately issued, and they now use the correct v1 CRDs. I hope the upgrade will now work smoothly for us.

Maybe this helps someone. Cheers guys for the help so far 💚
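For anyone wanting to retrace the steps in the comment above, here is a minimal sketch, not the commenter’s exact commands (those were not preserved). The CRD manifest URL is an assumption based on the usual release asset name and should be checked against the v1.4.0 release page. The function names, `NAMESPACE` and `NAME` are hypothetical placeholders.

```shell
# Assumed URL of the v1.4.0 CRD manifest; confirm it on the release page first.
OLD_CRDS_URL="https://github.com/cert-manager/cert-manager/releases/download/v1.4.0/cert-manager.crds.yaml"

# Re-apply the older CRDs while cert-manager 1.5 is installed. The comment above
# reports that the dangling challenge became visible after this step.
apply_old_crds() {
  kubectl apply -f "$OLD_CRDS_URL"
}

# list_challenges NAMESPACE: show ACME challenges in the suspect namespace.
list_challenges() {
  kubectl get challenges.acme.cert-manager.io -n "$1"
}

# delete_challenge NAMESPACE NAME: remove one stale challenge by name.
delete_challenge() {
  kubectl delete challenges.acme.cert-manager.io "$2" -n "$1"
}
```

A possible order of use is `apply_old_crds`, then `list_challenges NAMESPACE`, then `delete_challenge NAMESPACE NAME` for each stale entry that shows up.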

You don’t need cert-manager v0.11 to reinstate the v1alpha2 API. I’d suggest that you re-install cert-manager v1.5, which still understands the v1alpha2 API, re-run the upgrade instructions as per the docs, and then re-attempt the upgrade.
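The suggestion above could be sketched roughly as follows. This is an illustration under assumptions, not the official procedure. The default versions (v1.5.4 and v1.7.1) are the ones mentioned elsewhere in this thread, the Helm values mirror the original poster’s install command, and `staged_upgrade` is a hypothetical helper name. The upgrade documentation may call for additional steps.

```shell
# staged_upgrade [INTERIM_VERSION] [TARGET_VERSION]
# Sketch of the "install 1.5, migrate, then upgrade" path suggested above.
staged_upgrade() {
  local interim="${1:-v1.5.4}" target="${2:-v1.7.1}"

  # 1. Install a release that still understands the v1alpha2 API and wait
  #    until it is ready.
  helm upgrade --install cert-manager jetstack/cert-manager \
    --namespace cert-manager --create-namespace \
    --set installCRDs=true --version "$interim" --wait || return 1

  # 2. Migrate stored resources to the v1 API.
  cmctl upgrade migrate-api-version || return 1

  # 3. Re-attempt the upgrade to the target release.
  helm upgrade --install cert-manager jetstack/cert-manager \
    --namespace cert-manager --set installCRDs=true --version "$target"
}
```

Stopping at the first failing step (`|| return 1`) is a deliberate choice here, so that the target release is never installed on top of an unfinished migration.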

Thanks @irbekrm and @Sasuke0713 for your time posting on this issue.

I followed @irbekrm’s steps and installed v1.5.4, then ran through the steps in the resource upgrade instructions. That fixed my initial issue.

I also used the verification steps posted by @Sasuke0713; these were really helpful in proving it was all working again properly.

Thanks a lot guys!!!

Thanks for that suggestion. I uninstalled cert-manager 1.7 via Helm and removed its namespace, and installed cert-manager 1.5.

And upon uninstalling 1.5 and installing 1.7 again I am once again greeted with the familiar

The issue is not that I wasn’t able to delete the resources, because they were gone according to the API (otherwise it would not have allowed me to delete the associated CRDs, either). The issue is that now I’m running a newer version of cert-manager and it is somehow still encountering `v1alpha2` resources in my cluster even though they were all removed before proceeding with installing the new version of cert-manager.

Re-installing cert-manager 0.11 is also not an option, as Kubernetes 1.22 no longer supplies most of the APIs that were used for cert-manager 0.11’s resources.

This one helped me. Thanks @MarkWarneke

I also downgraded cert-manager to v1.5.5 and then downgraded again by installing the old CRDs (from v1.4.0) while being on version 1.5 of cert-manager via crds-managed-separately.

The output of applying the v1.4.0 CRDs:

When trying to list the old dangling clusterissuer resources, I get the error below:

PROBLEM

In case this helps anyone, we were seeing the following in the cert-manager logs after upgrading (1.5.3 -> 1.8.0):

Running `cmctl upgrade migrate-api-version --qps 5 --burst 10` showed that everything was fine:

Running the finalizer patches below also made no changes:

CAUSE

Turns out we had a ton of leftover/old certificate requests that had the following in them, as found with `kubectl describe certificaterequests -A` (many that did not have “Approved” as `True`):

And also `kubectl describe orders -A` resulted in the same amount of orders that had the same `v1alpha2` in them.

RESOLUTION

We resolved the issue by:

- Running `cmctl upgrade migrate-api-version --qps 5 --burst 10`
- Deleting the old `certificaterequest` and `order` records with `kubectl delete certificaterequest -n NAMESPACE ID1 ID2` and `kubectl delete order -n NAMESPACE ID1 ID2` (we deleted anything over 90 days or that had `v1alpha2`).

Ok so I ran into this recently upgrading this system helm chart. I can probably give someone some help here if they run into this… It’s easier to use `cmctl` than running the `curl` command back to the API to update the underlying CRDs. It looks like `cmctl upgrade migrate-api-version` also upgrades the underlying objects along with the CRDs. Here’s a workplan I followed (I currently use reckoner, which runs helm3 under the hood).

Cert-manager upgrade from `0.16` -> `1.5.5` -> `1.7.1`. The reason to go from 0.16 to 1.5.5 is that `cert-manager.io/v1alpha2` was fully removed in `1.6`, and the etcd data must reflect the newer API version, `cert-manager.io/v1`, before you can upgrade to 1.6 or higher.

Upgrade step `0.16` -> `1.5.5`

Before upgrading in a `stable` environment, renew the vault certificate first so that if something unexpected happens and breaks, we have time to fix it without certificates expiring… Check the verification steps section for renewal steps.

BEFORE running this, ensure you back up these two objects below at the very least. https://cert-manager.io/docs/tutorials/backup/

Change the `apiVersion` in the `clusters/*/crds` files from `cert-manager.io/v1alpha2` to `cert-manager.io/v1`.

Change the versions in the reckoner.yaml to 1.5.5 then:

Verification steps

- Download `cmctl`:
- Verify that the pods in the `cert-manager` namespace are up and running correctly. Check the logs for further verification.
- Use `cmctl` to renew the certificate manually.

Upgrade step `1.5.5` -> `1.7.1`

Before running `reckoner plot` you must upgrade the `apiVersion` in the `status.storedVersions`.

BEFORE running this, ensure you back up these two objects below at the very least. https://cert-manager.io/docs/tutorials/backup/

On your local machine run the below command to upgrade the `apiVersions` stored:

A successful run of the migrate command looks like:

Please check to see if you get this type of error when you do a `kubectl get orders` or `kubectl get certificaterequests`. Search for whatever k8s objects you would need to access for your functional use of cert-manager.

We may need to run what we call a `kubectl patch`, shown below:

Now run:

PLEASE go back through the Verification Step Section!!!
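As a complement to the `status.storedVersions` step in the workplan above, here is a small sketch for checking which cert-manager CRDs still record a pre-v1 version. It is not from the original comment. The function names are hypothetical, and it assumes `kubectl` prints the `storedVersions` array in JSON form (for example `["v1alpha2","v1"]`).

```shell
# has_legacy_versions STORED
# Succeeds (exit 0) if STORED, a JSON array string such as '["v1alpha2","v1"]',
# names any version other than v1.
has_legacy_versions() {
  local v
  # Strip brackets and quotes, then split the list on commas.
  for v in $(printf '%s' "$1" | sed 's/[]["]//g' | tr ',' ' '); do
    [ "$v" != "v1" ] && return 0
  done
  return 1
}

# report_stored_versions
# Prints every cert-manager CRD whose status.storedVersions still lists a
# version other than v1.
report_stored_versions() {
  local crd stored
  for crd in $(kubectl get crd -o name | grep 'cert-manager\.io'); do
    stored=$(kubectl get "$crd" -o jsonpath='{.status.storedVersions}')
    if has_legacy_versions "$stored"; then
      echo "$crd still stores: $stored"
    fi
  done
}
```

If `report_stored_versions` prints nothing, every cert-manager CRD records only `v1` in `status.storedVersions`. The earlier comments in this thread show that leftover objects can still cause conversion errors, so the `kubectl get orders` and `kubectl get certificaterequests` checks remain worth running.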