amazon-vpc-cni-k8s: AWS EKS - add cmd: failed to assign an IP address to container

What happened: Anytime we try to launch a pod with a security group it fails to provision an ENI with the below error. Also have the cni support bundle if someone would like that via email.

failed to allocate branch ENI to pod: cannot create new eni entry already exist, older entry : [0xc0002c2310]

kubectl:

networkPlugin cni failed to set up pod "busybox-test-sg-pod_default" network: add cmd: failed to assign an IP address to container

ipamd.log:

{"level":"info","ts":"2020-10-01T19:58:56.001Z","caller":"rpc/rpc.pb.go:504","msg":"Received DelNetwork for Sandbox 1097c78a91b063665bed41ce09ca6d4be7cda0375f5546efe4084ce775f040e4"}
{"level":"info","ts":"2020-10-01T19:58:56.004Z","caller":"rpc/rpc.pb.go:504","msg":"Send DelNetworkReply: IPv4Addr , DeviceNumber: 0, err: datastore: unknown pod"}
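For anyone sifting through a longer ipamd.log, here is a minimal Python sketch that pulls out only the entries whose message carries an error. It assumes one JSON object per line, as in the excerpt above, and that a successful reply prints its error as `<nil>`; that second point is an assumption about the log format, not something shown in this issue. The helper name `failed_replies` is hypothetical.

```python
import json

# The two ipamd.log entries quoted in this issue, one JSON object per line.
LOG_LINES = [
    '{"level":"info","ts":"2020-10-01T19:58:56.001Z","caller":"rpc/rpc.pb.go:504",'
    '"msg":"Received DelNetwork for Sandbox 1097c78a91b063665bed41ce09ca6d4be7cda0375f5546efe4084ce775f040e4"}',
    '{"level":"info","ts":"2020-10-01T19:58:56.004Z","caller":"rpc/rpc.pb.go:504",'
    '"msg":"Send DelNetworkReply: IPv4Addr , DeviceNumber: 0, err: datastore: unknown pod"}',
]


def failed_replies(lines):
    """Return (timestamp, error text) for each entry whose msg has a real 'err:' value."""
    hits = []
    for line in lines:
        entry = json.loads(line)
        # Split the message at the first "err: " marker; sep is empty if there is none.
        _, sep, err = entry.get("msg", "").partition("err: ")
        err = err.strip()
        # Assumption: "<nil>" (or nothing) after "err:" means the call succeeded.
        if sep and err not in ("", "<nil>"):
            hits.append((entry["ts"], err))
    return hits


for ts, err in failed_replies(LOG_LINES):
    print(ts, err)
```

To run it against a real file, replace `LOG_LINES` with the lines read from the log.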

What you expected to happen: The CNI attaches an ENI to the pod with the security group.

How to reproduce it (as minimally and precisely as possible): Launch any pod with a security group.

Anything else we need to know?:

Environment:

- Kubernetes version (use kubectl version): 1.17
- CNI Version: 1.7.3
- OS (e.g: cat /etc/os-release): Amazon Linux 2
- Kernel (e.g. uname -a): 4.14.193-149.317.amzn2.x86_64

About this issue

- State: closed

- Created 4 years ago

- Comments: 47 (18 by maintainers)

Issue Summary: EKS worker nodes limit the number of pods they can run based on the instance type. In our case we use the c5.2xlarge instance type, which allows 58 pods per instance. If more pods than that are scheduled on the same instance, the extra pods get stuck in ContainerCreating status. Describing the pods gives the following error: "Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "8be3026cc6bb2b311570f118dfef8ab93ae491e6d6fc20e12a46e9b814cff716" network for pod "pod-name": networkPlugin cni failed to set up pod "pod-name" network: add cmd: failed to assign an IP address to container"
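For reference, the 58-pod figure follows from the ENI limits of the instance type. The sketch below reproduces the arithmetic with the commonly documented formula for the VPC CNI in its default mode. The function name `max_pods` is hypothetical, and the c5.2xlarge limits (4 ENIs, 15 IPv4 addresses per ENI) are taken from the numbers quoted later in this thread.

```python
def max_pods(enis: int, ips_per_eni: int) -> int:
    """Pod limit for the VPC CNI in its default (secondary IP) mode.

    Each ENI keeps one address as its own primary IP, so only
    (ips_per_eni - 1) addresses per ENI are available to pods.
    The +2 covers pods that run on the host network and need no pod IP.
    """
    return enis * (ips_per_eni - 1) + 2


# c5.2xlarge: 4 ENIs, 15 IPv4 addresses per ENI
print(max_pods(enis=4, ips_per_eni=15))  # 58
```

Raising kubelet's max-pods above this number does not create more addresses; it only lets the scheduler place pods that the CNI then cannot give an IP.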

As part of a solution we tried adding --use-max-pods false --kubelet-extra-args '--max-pods=110' to our EKS worker node bootstrap script. The issue remains the same. Please point us to any solution that removes the per-instance-type pod restriction so that we can run the maximum number of pods on a worker node.

Note: AWS premium support suggested that we consider larger instance types to achieve our use case (which is to run the maximum number of pods on a worker node). This suggestion makes little sense to us, for the reasons listed below:

1. Even when we run the maximum number of pods on an EKS worker node, the node is still underutilized in terms of CPU and memory; total CPU consumption does not reach double digits. Moving to a larger instance type would only cost us more, so it is not a cost optimization strategy.

2. If a pod is stuck in ContainerCreating state, it cannot move to Running unless it is killed and restarted. Our requirement is that kube-scheduler should know whether a node has sufficient IPs and only then schedule the pod on that node. In other words, kube-scheduler should know that IPs are exhausted on a worker node before placing pods there.

3. If we use AWS VPC CNI custom networking, worker nodes can't make use of all the NICs available for that instance type. For example, we use c5.2xlarge, which supports a maximum of 4 NICs. Since we use different subnets for nodes and pods, 1 NIC is assigned to the node and uses only 1 IP, and the remaining 14 IPs are wasted. With the other 3 NICs we can run only 3*15=45 pods instead of 58. Please give us a justification for this.

4. If pods are stuck in ContainerCreating status, is there any autoscaling option that brings up new nodes, the same way cluster autoscaler monitors CPU and memory?
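To put a number on the custom networking point above, here is a small sketch. It uses the formula AWS documents for custom networking as far as I know it: the primary ENI is excluded from pod use, and each remaining ENI still reserves one address for itself. That gives 44 rather than the 45 estimated above. The function names are hypothetical, and the c5.2xlarge limits are the ones quoted in this thread.

```python
def max_pods_default(enis: int, ips_per_eni: int) -> int:
    # Default mode: every ENI contributes (ips_per_eni - 1) pod addresses,
    # plus 2 for host-network pods.
    return enis * (ips_per_eni - 1) + 2


def max_pods_custom_networking(enis: int, ips_per_eni: int) -> int:
    # Custom networking: the primary ENI stays in the node subnet and
    # serves no pods, so only (enis - 1) ENIs contribute addresses.
    return (enis - 1) * (ips_per_eni - 1) + 2


# c5.2xlarge: 4 ENIs, 15 IPv4 addresses per ENI
default = max_pods_default(4, 15)
custom = max_pods_custom_networking(4, 15)
print(default, custom, default - custom)  # 58 44 14
```

The difference is exactly the 14 pod addresses of the primary ENI that the comment describes as wasted.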

Not sure if this will help anyone, but might as well comment here as I spent hours trying to debug this annoying error, regardless of knowing that my subnets do have enough addresses.

You need to use an instance type that has IsTrunkingCompatible: true in it in this source file: https://github.com/aws/amazon-vpc-resource-controller-k8s/blob/master/pkg/aws/vpc/limits.go

Once you’re using a compatible instance type you’ll need to re-create the node group and then restart any failed deployments. If for example coredns failed to start (shows as “degraded” on the EKS dashboard), you can restart it with

kubectl rollout restart -n kube-system deployment/coredns

We experience this issue quite often. We do have ENABLE_POD_ENI and DISABLE_TCP_EARLY_DEMUX enabled. There are enough IPs in the subnets. The issue actually began to appear when we enabled pod ENI. amazon-k8s-cni:v1.7.5

Any solution yet so far that doesn’t involve increasing node sizes? We have ample IPs remaining in our subnets. The sole issue seems to be we’re hitting a max IPs per node that has nothing to do with any underlying constraints.

This is a debilitating problem for us. We previously used GKE for our clusters and moved to AWS recently. The very fact that this is not treated as a serious issue with EKS is revealing about how little interest they have in making EKS attractive to AWS users. We will need to find a solution to this, but it is inconceivable that users should start paying for larger machines that they don’t really need.

This issue occurs when you are using the AWS CNI plugin. From the start I can tell you it is not a technical issue but a planning issue: you did not take into account that the growth of your cluster would drain the cluster's subnet(s).

How this error appears:

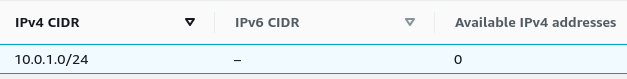

The node the pod is scheduled on has been in the cluster for a certain period of time, let's say 1 day. In the meantime, your cluster drains all the IPs from the subnet(s). A new pod is then scheduled on that node and the error pops up, because there are no more IPs in the subnet(s). The pod is asking for IPs from the VPC/subnet(s).

I had the same issue when I scaled my cluster past 1k nodes.

Solution: Create a second subnet and label it with the right labels for EKS (don't try to modify the master; it will ask for recreation, and you don't want to do that). All you need is to put the Kubernetes labels on the subnets. Then create a launch configuration to launch instances from the newly added subnet. Here you can play with some logic to spread the load over your subnets. In any case, the issue is not the CNI but the lack of IPs in the AWS subnet used by the Kubernetes cluster.

omg i have found the problem, but there are no good logs to quickly understand it

@jayanthvn

i changed this setting in the aws-node DaemonSet pods from 1 to 2 and pods have started, maybe it helped?

@djschnei21 What instance type is this? Not all instance types support branch ENIs. From the AWS docs:

If you run kubectl get nodes -oyaml | grep 'vpc.amazonaws.com/has-trunk-attached', what does it say?

Hi @jayanthvn Regarding point 2, could you please tell us why kube-scheduler doesn't take the number of IPs available on a node into account when scheduling pods? Please give us a justification, or tell us whether this will be part of the improvements in an upcoming release.

@cslovell - There is an ENI and IP limit per instance type, and that dictates the max IPs per node: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html#AvailableIpPerENI. From CNI 1.9.0+ you can enable prefix delegation to achieve higher pod density per node: https://aws.amazon.com/blogs/containers/amazon-vpc-cni-increases-pods-per-node-limits/.
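As a rough illustration of what prefix delegation changes, the sketch below assumes each address slot on an ENI can hold a /28 prefix (16 addresses) instead of a single IP. The cap of 110 pods for instances with 30 or fewer vCPUs and 250 above that is my understanding of AWS's recommended limits, not something stated in this thread, so treat it as an assumption. The function names are hypothetical.

```python
ADDRESSES_PER_PREFIX = 16  # a /28 IPv4 prefix holds 16 addresses


def raw_max_pods_with_prefixes(enis: int, ips_per_eni: int) -> int:
    # Each non-primary slot on an ENI holds a /28 prefix instead of one IP.
    return enis * (ips_per_eni - 1) * ADDRESSES_PER_PREFIX + 2


def recommended_max_pods(enis: int, ips_per_eni: int, vcpus: int) -> int:
    # Assumption: recommended ceiling of 110 pods up to 30 vCPUs, 250 above.
    cap = 110 if vcpus <= 30 else 250
    return min(raw_max_pods_with_prefixes(enis, ips_per_eni), cap)


# c5.2xlarge: 4 ENIs, 15 IPv4 addresses per ENI, 8 vCPUs
print(raw_max_pods_with_prefixes(4, 15))     # 898
print(recommended_max_pods(4, 15, vcpus=8))  # 110
```

Under these assumptions the address limit stops being the bottleneck on a c5.2xlarge, and the practical ceiling becomes the kubelet max-pods setting.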

Hi @rohith-mr-rao

The kube-scheduler running in EKS is vanilla. Factors it takes into account for scheduling decisions include individual and collective resource requirements, hardware / software / policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and so on, but it does not know about AWS EKS-specific details such as the number of IP addresses available on the node. There is also a feature request that would solve your use case - https://github.com/aws/amazon-vpc-cni-k8s/issues/1160.

Hi @jayanthvn Our main concern is that sometimes pods are scheduled on nodes where no IP is available (or reported as such) and get stuck with ContainerCreating status. Pods are not automatically rescheduled on another node in that case and stay stuck until they're manually killed and automatically redeployed on another node (if there's enough room to do that).

I checked the node status on our production cluster this morning, and allocated IPs have increased from 48 to 55 on the node I gave you the logs for, even though errors are still reported:

The two other nodes reporting errors have also 55 IPs allocated (no change), as well as other nodes with 55 IPs allocated but not reporting any error.

I've done some extra tests. As new pods have been created since this morning, our cluster is now nearly full (only 1 IP available). I restarted two instances of one of our applications (14 new pods created) to test whether some pods got stuck at creation. Pods remained with Pending status until the cluster autoscaler created a new node to schedule the pods, which is the expected behavior, but no pod got stuck with ContainerCreating status. Unfortunately it seems I'm not able to reproduce the issue, as things behave as expected, even though no change has been made to the cluster (tests on aws-node were done on the preprod cluster).

I have updated my CNI to 1.7.9 and am still seeing this error -

Environment is EKS 1.19, AWS-CNI v1.7.9, uses Fargate + NodeGroups

Error:

Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "89a7c4d0455147ea9eeece8b9bd12059835b40cec28be6b1b4988ce69fc312a8" network for pod "insta-service-6b959c8fdd-cw5pm": networkPlugin cni failed to set up pod "insta-service-6b959c8fdd-cw5pm_insta-service" network: add cmd: failed to assign an IP address to container

Do I need to do anything more after upgrading the CNI following this guide - https://docs.aws.amazon.com/eks/latest/userguide/cni-upgrades.html - to force it to clean up stale IPs?

@SaranBalaji90 it’s strange and may be a coincidence but upgrading aws-cni to v1.7.8 has solved the issue. I’ll keep an eye and keep you updated whether or not problem really went away.

Thanks @djschnei21 support ticket link helped. Let me go over the logs attached.

Hey @SaranBalaji90 - the describe pod is showing this error:

Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up sandbox container "c54935a5c5982d95ef9391fdfff08b86bbf6b8c4b6568b4ee7bb81fce8a0f424" network for pod "busybox-test-sg-pod": networkPlugin cni failed to set up pod "busybox-test-sg-pod_default" network: add cmd: failed to assign an IP address to container

Unfortunately Slack is blocked on our work network… but a call would be much appreciated! Any chance I can invite you to a Teams meeting?