configure-aws-credentials: Failures occur with OIDC Method During Parallel Requests

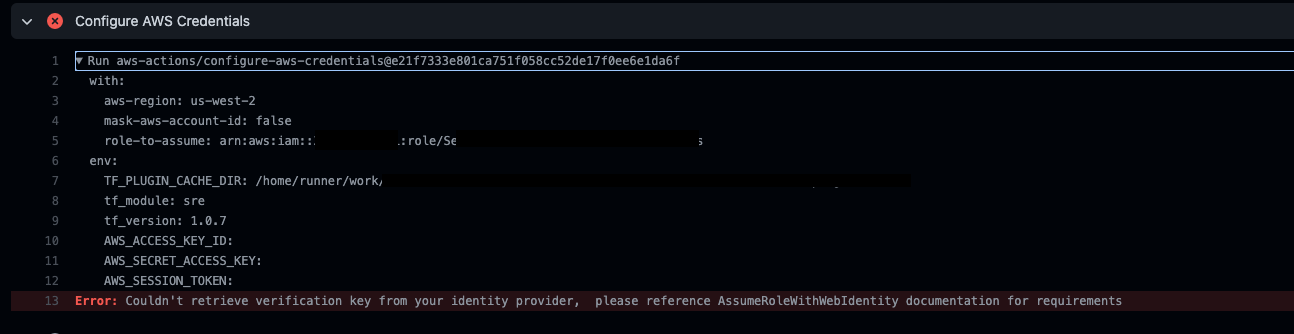

I have a repo in which I am testing out the new GitHub OIDC provider functionality. It contains about 20 workflows. These workflows usually run individually, since each one is triggered by a different event. However, I currently have a PR open that changes all of these workflows, so they all trigger at the same time. When this happens, some (but not all) of the workflows fail with the following error:

Couldn’t retrieve verification key from your identity provider, please reference AssumeRoleWithWebIdentity documentation for requirements

Simply clicking the Re-run all jobs button causes the error to resolve itself when the workflow runs a second time.

Are there any known limits on how many workflows can use the GitHub OIDC provider in parallel? Or is this a bug?

About this issue

- State: closed

- Created 3 years ago

- Reactions: 27

- Comments: 26 (3 by maintainers)

Commits related to this issue

- ci: workaround for configure-aws-credentials "Couldn't retrieve verification key from your identity provider" error https://github.com/aws-actions/configure-aws-credentials/issues/299 — committed to cloudbeds/workflows by steven-prybylynskyi 2 years ago

The OIDC fix has been released. If you have any questions, feel free to reach out to us. Thank you for your patience 🙏🏼

This has been a huge blocker for us: we regularly need to deploy a number of stacks in parallel. The previous solution was not robust enough, so we rolled our own credentials action with retry and exponential backoff. If you’re interested, I’m including it here. Note that we used a hard-coded upper limit on retries.
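The custom action itself is not reproduced in this thread. As a rough illustration of the pattern it describes (retry with exponential backoff and a hard-coded upper limit), here is a minimal Python sketch. `fetch` stands in for whatever call obtains the credentials, and `retry_with_backoff`, `MAX_RETRIES`, `BASE_DELAY` and `MAX_DELAY` are hypothetical names and values, not part of configure-aws-credentials.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

# Hypothetical limits; the values used in the original custom action are not known.
MAX_RETRIES = 5     # hard-coded upper limit on attempts
BASE_DELAY = 1.0    # seconds to wait after the first failure
MAX_DELAY = 30.0    # cap on any single wait


def retry_with_backoff(
    fetch: Callable[[], T],
    max_retries: int = MAX_RETRIES,
    base_delay: float = BASE_DELAY,
    max_delay: float = MAX_DELAY,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch() until it succeeds or max_retries attempts have failed.

    The wait ceiling doubles after each failure (1 s, 2 s, 4 s, ...), capped at
    max_delay, and the actual wait is drawn uniformly below that ceiling
    ("full jitter") so parallel jobs do not retry in lockstep.
    """
    last_error: Optional[Exception] = None
    for attempt in range(max_retries):
        try:
            return fetch()
        except Exception as err:  # a real action should only retry retryable errors
            last_error = err
            if attempt == max_retries - 1:
                break  # no sleep after the final attempt
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise RuntimeError(f"giving up after {max_retries} attempts") from last_error
```

Injecting `sleep` keeps the function testable without real waits; in an actual job the default `time.sleep` is used.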

I’m currently testing backoff retry logic with the `async-retry` npm package. It seems to be working … https://github.com/blz-ea/configure-aws-credentials/blob/feature/role-ec2-identity/index.js#L433-L461

I tried to spread the OIDC login across 10 identical IAM roles. It did not help. It looks like the rate limit applies per AWS account rather than per role.

This is the minimal reproducer:

Out of the 40 jobs, only about 10 to 20 succeed.

I ran into the same issue and avoided it by reusing AWS auth within the workflow. This is another approach that avoids retries that take too long or still fail.

Here is how I set up the reuse: the AWS auth action runs before any other jobs, and then the jobs that require AWS auth can run in parallel.
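The workflow from this reply is not preserved. The underlying idea (authenticate once, then fan out) can be illustrated with a small Python analogy rather than GitHub Actions syntax: a single call obtains credentials, and the parallel workers only reuse the result, so the identity provider sees one request instead of one per job. `get_credentials`, `run_job` and `run_all` are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

# Counts how often the simulated STS exchange happens.
STS_CALLS = {"count": 0}


def get_credentials() -> Dict[str, str]:
    """Hypothetical stand-in for the single up-front AWS auth job."""
    STS_CALLS["count"] += 1
    return {
        "AccessKeyId": "example-key-id",
        "SecretAccessKey": "example-secret",
        "SessionToken": "example-token",
    }


def run_job(job_id: int, creds: Dict[str, str]) -> str:
    """Hypothetical downstream job: it uses the credentials but never fetches them."""
    return f"job {job_id} ran with {creds['AccessKeyId']}"


def run_all(job_count: int, max_workers: int = 8) -> List[str]:
    creds = get_credentials()  # authenticate once, like a job the others list in `needs`
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Every parallel job reuses the same credentials instead of calling STS itself.
        return list(pool.map(lambda job_id: run_job(job_id, creds), range(job_count)))
```

The trade-off is that the credentials then have to be handed from one job to the others, which raises the secret-handling concerns discussed later in the thread.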

I’ve come up with a fairly reliable workaround for this issue by adding conditional retry steps to my workflows. Ideally, this would be handled directly by this action.

Here is how I add the conditional retries. Between each invocation of the `aws-actions/configure-aws-credentials` action is a step that sleeps for a random amount of time between 15 and 65 seconds. The idea is that the random sleep will hopefully cause the various workflows running in parallel to retry at different points in time.

This example only tries to authenticate 3 times. In theory any number of attempts could be made, but as you can see, adding more retries gets quite verbose.
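The workflow YAML itself is not included here. The same control flow (up to 3 attempts, with a random 15 to 65 second sleep between them) can be sketched as a Python loop, which also shows how a loop avoids the verbosity of copying steps for each extra attempt. `authenticate` is a hypothetical stand-in for the `aws-actions/configure-aws-credentials` step, returning `True` on success.

```python
import random
import time
from typing import Callable, Optional

MIN_SLEEP_S = 15  # lower bound of the random sleep between attempts
MAX_SLEEP_S = 65  # upper bound of the random sleep between attempts


def authenticate_with_random_sleep(
    authenticate: Callable[[], bool],
    attempts: int = 3,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> bool:
    """Try authenticate() up to `attempts` times.

    Between attempts, sleep a random 15-65 s so that workflows which failed
    together are likely to retry at different moments.
    """
    rng = rng or random.Random()
    for attempt in range(1, attempts + 1):
        if authenticate():
            return True
        if attempt < attempts:  # no sleep after the last attempt
            sleep(rng.uniform(MIN_SLEEP_S, MAX_SLEEP_S))
    return False
```

Raising `attempts` adds retries without adding more steps.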

Great solution! I would recommend adding a check to validate that the `aws sts assume-role-with-web-identity` command succeeds. In my workflow, even with 5 retries (set up the way your example is), I still get occasional failures, and without validation the step succeeds even though the role was not assumed. Something like this works for me:

Just as another possible workaround (one that does not deal with the cross-account issue we suspect is happening), let me add this here. For a large job matrix, you can add a sleep based on the index of the job, e.g.:
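The snippet from this reply is not preserved. As a hedged sketch of the idea, each matrix job waits in proportion to its index before authenticating, so that 40 jobs do not all hit STS at the same instant. In a workflow the index could come from the `strategy.job-index` context; the `JOB_INDEX` variable name and the 2-second step are assumptions for illustration.

```python
from typing import Mapping

STEP_SECONDS = 2.0  # hypothetical spacing between consecutive matrix jobs


def stagger_delay(job_index: int, step_seconds: float = STEP_SECONDS) -> float:
    """Seconds a matrix job should sleep before requesting OIDC credentials."""
    if job_index < 0:
        raise ValueError("job_index must be non-negative")
    return job_index * step_seconds


def delay_from_env(env: Mapping[str, str]) -> float:
    """Read the job index from a hypothetical JOB_INDEX variable.

    In a workflow, JOB_INDEX could be set from ${{ strategy.job-index }}.
    """
    return stagger_delay(int(env.get("JOB_INDEX", "0")))
```

A job would call `time.sleep(delay_from_env(os.environ))` before its credentials step. With 40 jobs and a 2-second step, the last job waits 78 seconds, so the step size trades total latency against how spread out the requests are.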

YMMV, but it may be an easy enough solution to get us through until this issue is dealt with.
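Returning to the earlier suggestion of checking that `aws sts assume-role-with-web-identity` really succeeded: the reply's own check is not preserved, but one way to sketch it is to parse the command's JSON output and confirm that it contains credentials and an `AssumedRoleUser.Arn` for the expected role. `assumed_role_ok` and the role name in any usage are hypothetical.

```python
import json

REQUIRED_KEYS = ("AccessKeyId", "SecretAccessKey", "SessionToken")


def assumed_role_ok(cli_output: str, expected_role: str) -> bool:
    """Return True only if the CLI's JSON output shows the expected role was assumed."""
    try:
        data = json.loads(cli_output)
    except json.JSONDecodeError:
        return False  # empty or non-JSON output, e.g. the command itself failed
    if not isinstance(data, dict):
        return False
    creds = data.get("Credentials")
    if not isinstance(creds, dict) or not all(creds.get(key) for key in REQUIRED_KEYS):
        return False
    user = data.get("AssumedRoleUser")
    arn = user.get("Arn", "") if isinstance(user, dict) else ""
    # Expected shape: arn:aws:sts::<account-id>:assumed-role/<role-name>/<session-name>
    parts = arn.split(":", 5)
    if len(parts) != 6:
        return False
    resource = parts[5].split("/")
    return len(resource) >= 3 and resource[0] == "assumed-role" and resource[1] == expected_role
```

Failing the step whenever this returns `False` keeps a silent authentication failure from being reported as success, so the retry path actually runs.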

You are probably right; I am leaning towards the problem being between AWS and GitHub. IIRC, the flow used here does have a server-to-server validation step. Enabling stack trace output (by setting `SHOW_STACK_TRACE: 'true'` in the `env` variables) shows that the STS API returns an `InvalidIdentityToken` error (see e.g. here). In the issue you linked, there’s mention of a 5-second timeout (presumably on GitHub’s OIDC server response during the validation step), though I’m a little skeptical that this is what’s happening here, because then I would expect an error like `IDPCommunicationError`.

According to the docs referenced above, a number of errors could be classified as retryable. These could be used to decide whether to retry (perhaps with exponential backoff).
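As a sketch of the retry decision described above, the error code returned by `AssumeRoleWithWebIdentity` can be checked against a small set of codes worth retrying (with a fresh token), while anything else fails fast. Treating `IDPCommunicationError` and `InvalidIdentityToken` as the retryable set is an assumption based on the two errors discussed here and our reading of the STS error descriptions; `should_retry` is a hypothetical helper.

```python
from typing import FrozenSet

# Assumption: the two codes discussed in this thread, which the STS docs describe
# as conditions that may clear up when the request is retried with a new token.
RETRYABLE_CODES: FrozenSet[str] = frozenset({"IDPCommunicationError", "InvalidIdentityToken"})


def should_retry(error_code: str, attempt: int, max_attempts: int = 5) -> bool:
    """Decide whether a failed AssumeRoleWithWebIdentity call is worth another try.

    `attempt` is 1-based: the number of attempts already made. Errors outside
    RETRYABLE_CODES (for example a malformed policy) are treated as permanent.
    """
    return error_code in RETRYABLE_CODES and attempt < max_attempts
```

This decision can be combined with any backoff loop, so that configuration mistakes surface immediately instead of burning through the retry budget.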

For us, though, the only viable path forward for now (other than falling back to tokens stored in GH Secrets) is to obtain the credentials once in a step and export them using job outputs/`needs`, etc.

You are right. Although the token expires after 900 seconds (minimum) or 1 hour (default), caching sensitive data is not recommended. I'm looking into https://github.com/actions/runner/issues/1466#issuecomment-966122092, and if it is a JS SDK issue, I hope configure-aws-credentials will fix the limitation on 10+ parallel requests.
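If credentials are obtained once and passed to later jobs, as described above, each consuming job could first check that they have not expired. A minimal sketch, assuming the ISO 8601 `Expiration` timestamp that STS returns alongside the credentials is passed along with them; the 5-minute safety margin and the `credentials_usable` name are hypothetical choices.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

SAFETY_MARGIN = timedelta(minutes=5)  # hypothetical buffer before expiry


def credentials_usable(
    expiration: str,
    now: Optional[datetime] = None,
    margin: timedelta = SAFETY_MARGIN,
) -> bool:
    """Return True if credentials expiring at `expiration` are still safe to use."""
    # STS-style timestamps end in "Z"; fromisoformat() only accepts that from Python 3.11.
    expires_at = datetime.fromisoformat(expiration.replace("Z", "+00:00"))
    if expires_at.tzinfo is None:
        expires_at = expires_at.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return now + margin < expires_at
```

With the minimum 900-second session, a job that starts more than about 10 minutes after authentication would be told to re-authenticate rather than fail partway through.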

I like this solution for the scenario where multiple jobs run in parallel and each job needs to authenticate. However, I don’t know whether storing credentials in the GitHub Actions cache is considered good practice. There is a disclaimer here stating that sensitive data should NOT be stored in the cache for public repos. There is no recommendation for private repos.