crawlee: "RangeError: Map maximum size exceeded" when uploading file

Issue description

Hi there, I have an issue uploading a zip file to my Actor's key-value store with Apify 3.1 (`apify@3.1.1`) and Crawlee 3.1 (`crawlee@3.1.3`). Uploading a file larger than 9 MB fails with `RangeError: Map maximum size exceeded`.

Attached example.

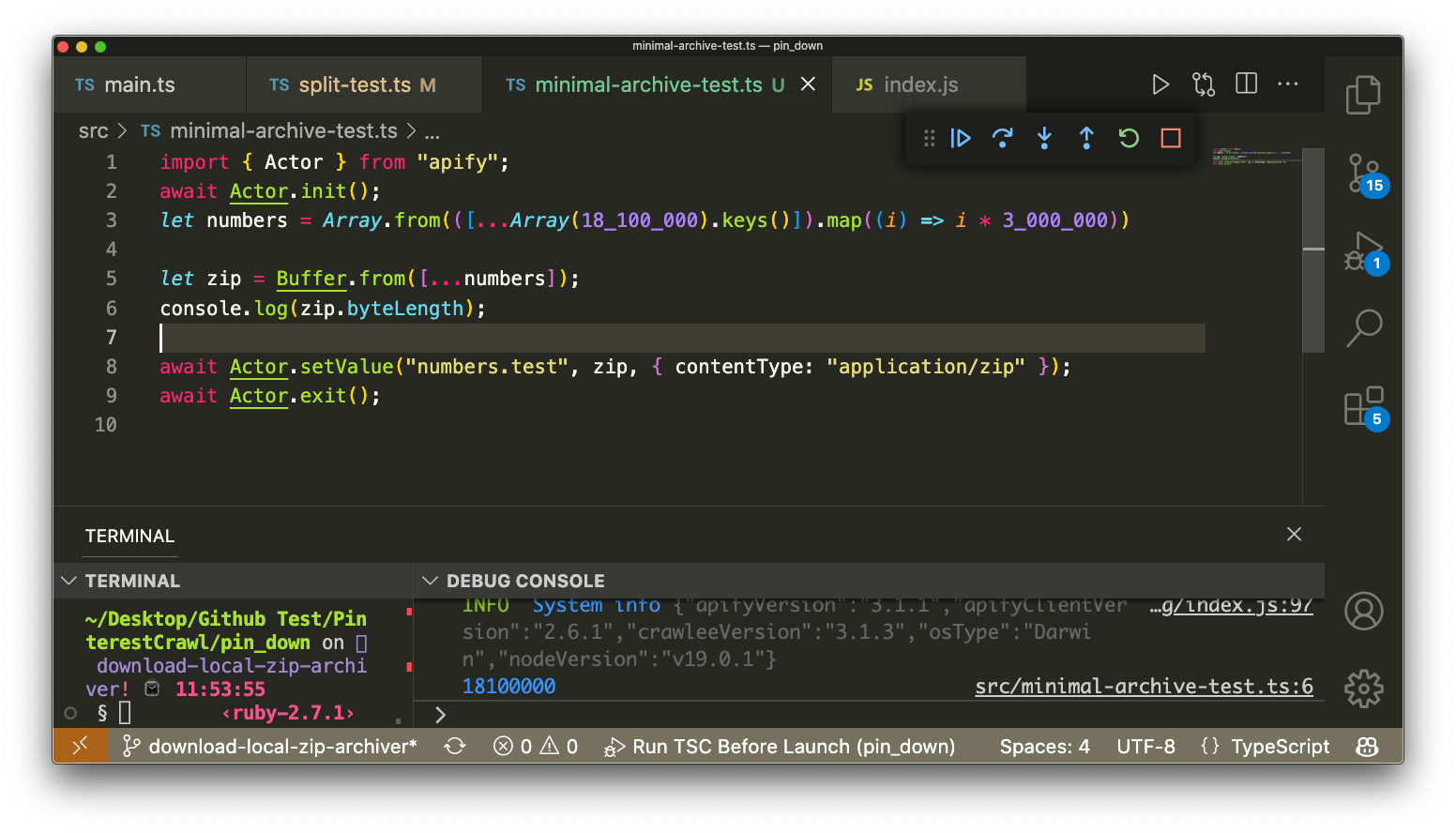

Code sample

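```js
// Top-level await requires an ES module (e.g. a .mjs file).
import { Actor } from "apify";

await Actor.init();

// 18.1 million numbers; Buffer.from() keeps only the low byte of each,
// so this yields an 18,100,000-byte buffer.
const numbers = [...Array(18_100_000).keys()].map((i) => i * 3_000_000);
const zip = Buffer.from(numbers);

console.log(zip.byteLength);

await Actor.setValue("numbers.test", zip, { contentType: "application/zip" });

await Actor.exit();
```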
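If building the 18.1-million-element array in the sample is too slow or memory-hungry while re-testing, the same bytes can be produced directly. This is a minimal sketch using a hypothetical helper, `makeTestBuffer` (not part of Apify or Crawlee). It writes `(i * 3_000_000) & 0xff` into each slot, which matches the low byte that `Buffer.from()` keeps from each number. It only changes how the test data is built; whether passing its result to `Actor.setValue()` triggers the same error has not been verified here.

```javascript
// Hypothetical helper: builds the same bytes as Buffer.from(numbers) in the
// repro without materialising an 18.1-million-element JavaScript array.
// Buffer.from(array) keeps only the low 8 bits of each number, which is
// what `& 0xff` reproduces here.
function makeTestBuffer(length) {
  const buf = Buffer.alloc(length);
  for (let i = 0; i < length; i++) {
    buf[i] = (i * 3_000_000) & 0xff;
  }
  return buf;
}

const zip = makeTestBuffer(18_100_000);
console.log(zip.byteLength); // prints: 18100000
```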

Package version

apify@3.1.1, crawlee@3.1.3

Node.js version

v18.7.0

Operating system

Mac OS 10.15

I have tested this on the next release

No response

About this issue

- State: closed

- Created a year ago

- Comments: 38 (11 by maintainers)

I had updated after the issue was closed, thinking that a fix had been pushed. I'll update again and retry. Thanks!!

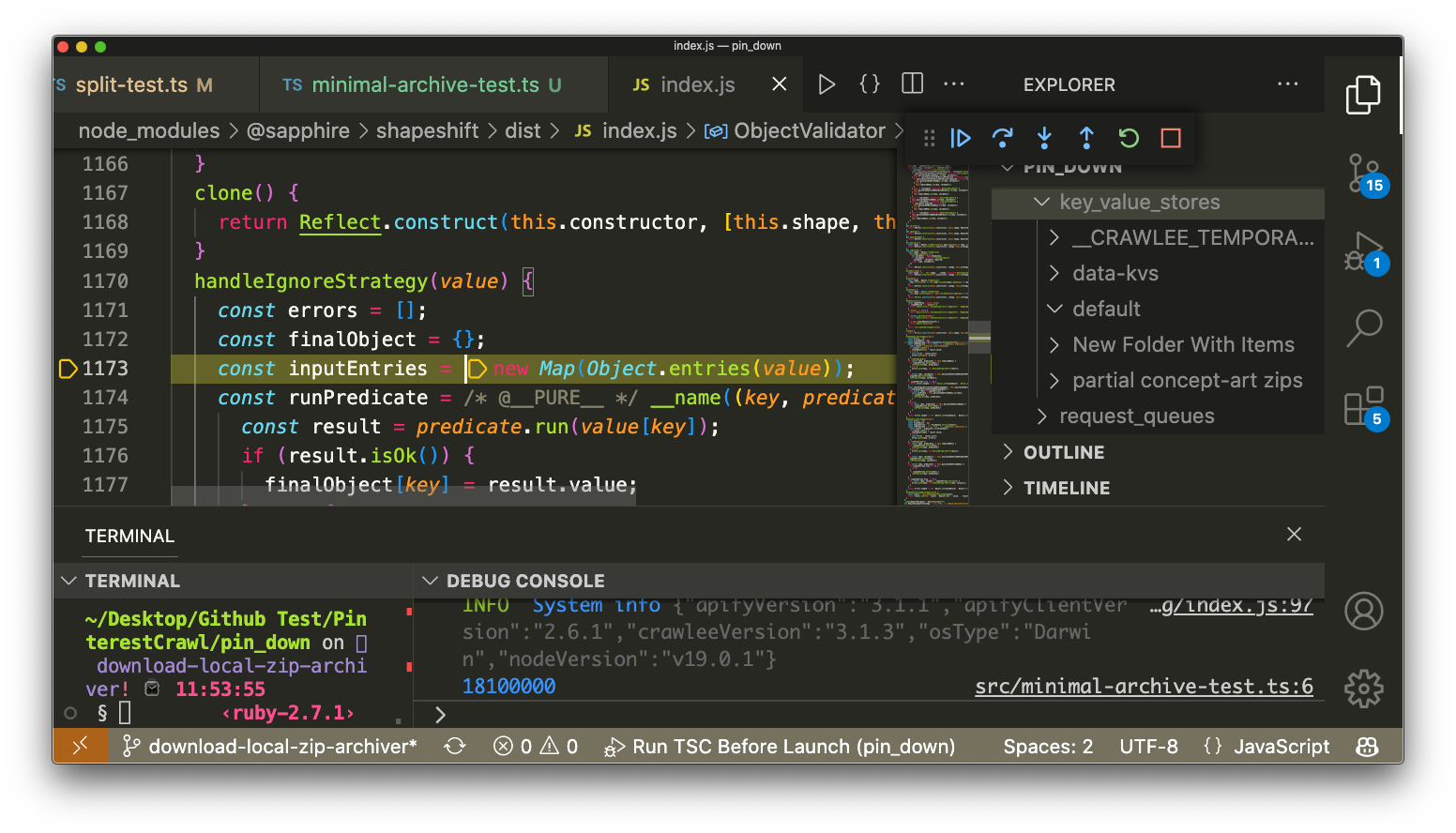

@B4nan This is definitely something to fix upstream. I'll take a look, but it's probably an easy temporary fix on our side to make the validator check for `Buffer` instances before everything else.
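The ordering suggested in the comment above (check for `Buffer` instances before everything else) can be sketched roughly as follows. This is a hypothetical illustration, not Crawlee's actual validator, and the thread does not confirm that the real fix looks like this. `classifyValue`, `isBinary`, and `deepObjectCheck` are made-up names; `deepObjectCheck` is only a stand-in for a generic check that walks every key of an object and records it in a `Map`, the kind of work that does not scale to a multi-megabyte `Buffer`, where every byte index is a key.

```javascript
// Hypothetical sketch, NOT Crawlee's actual validator. It illustrates the
// suggested ordering: recognise binary payloads before any generic check.

// Stand-in for a generic check that walks every own key of an object and
// records it in a Map. For a Buffer, every byte index is a key, so a big
// enough Buffer would push this Map past V8's entry limit.
function deepObjectCheck(value) {
  const seen = new Map();
  for (const key of Object.keys(value)) {
    seen.set(key, value[key]);
  }
  return seen.size;
}

// Buffer, ArrayBuffer, and any typed-array or DataView count as binary.
function isBinary(value) {
  return Buffer.isBuffer(value) || value instanceof ArrayBuffer || ArrayBuffer.isView(value);
}

function classifyValue(value, deepCheck = deepObjectCheck) {
  if (value === null || value === undefined) return "empty";
  // Checked first, so binary data never reaches the per-key walk below.
  if (isBinary(value)) return "binary";
  // Primitives (string, number, boolean, ...) need no deep walk either.
  if (typeof value !== "object") return typeof value;
  deepCheck(value);
  return "object";
}

const big = Buffer.alloc(18_100_000); // same byte length as the repro buffer
console.log(classifyValue(big)); // prints: binary
console.log(classifyValue({ a: 1 })); // prints: object
```

Because the binary check runs before the deep walk, the cost of validating a `Buffer` no longer depends on its size; only plain data structures pay for the per-key traversal.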