seatunnel: org.apache.seatunnel.connectors.seatunnel.clickhouse.exception.ClickhouseConnectorException: ErrorCode:[COMMON-10], ErrorDescription:[Flush data operation that in sink connector failed] - Clickhouse execute batch statement error

Search before asking

- I had searched in the issues and found no similar issues.

What happened

Synchronizing data from Hive to ClickHouse: when the Hive table holds a large amount of data, the write to ClickHouse fails.

SeaTunnel Version

2.3.0

SeaTunnel Config

env {
  # You can set spark configuration here
  # see available properties defined by spark: https://spark.apache.org/docs/latest/configuration.html#available-properties
  spark.app.name = "SeaTunnel"
  spark.executor.instances = 4
  spark.executor.cores = 2
  spark.executor.memory = "1g"
  spark.network.timeout = 600s
}

source {
  Hive {
    parallelism = 2
    table_name = "tmp.XXXX"
    metastore_uri = "thrift://XXXXX"
  }
}

transform {
}

sink {
  Clickhouse {
    host = "172.17.5.XX:XXX"
    database = "dws_yunpos"
    table = "dws_yunpos_city_product"
    username = "default"
    password = "XXXXXX"
  }
}

Running Command

./bin/start-seatunnel-spark-connector-v2.sh \
  --master local[4] \
  --deploy-mode client \
  --config ./config/spark.batch.conf.template

Error Exception

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0, localhost, executor driver): org.apache.seatunnel.connectors.seatunnel.clickhouse.exception.ClickhouseConnectorException: ErrorCode:[COMMON-10], ErrorDescription:[Flush data operation that in sink connector failed] - Clickhouse execute batch statement error
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.ClickhouseSinkWriter.flush(ClickhouseSinkWriter.java:121)
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.ClickhouseSinkWriter.write(ClickhouseSinkWriter.java:77)
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.ClickhouseSinkWriter.write(ClickhouseSinkWriter.java:43)
    at org.apache.seatunnel.translation.spark.sink.SparkDataWriter.write(SparkDataWriter.java:58)
    at org.apache.seatunnel.translation.spark.sink.SparkDataWriter.write(SparkDataWriter.java:37)
    at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask$$anonfun$run$3.apply(WriteToDataSourceV2Exec.scala:118)
    at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask$$anonfun$run$3.apply(WriteToDataSourceV2Exec.scala:116)
    at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1394)
    at org.apache.spark.sql.execution.datasources.v2.DataWritingSparkTask$.run(WriteToDataSourceV2Exec.scala:146)
    at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:67)
    at org.apache.spark.sql.execution.datasources.v2.WriteToDataSourceV2Exec$$anonfun$doExecute$2.apply(WriteToDataSourceV2Exec.scala:66)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:123)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)

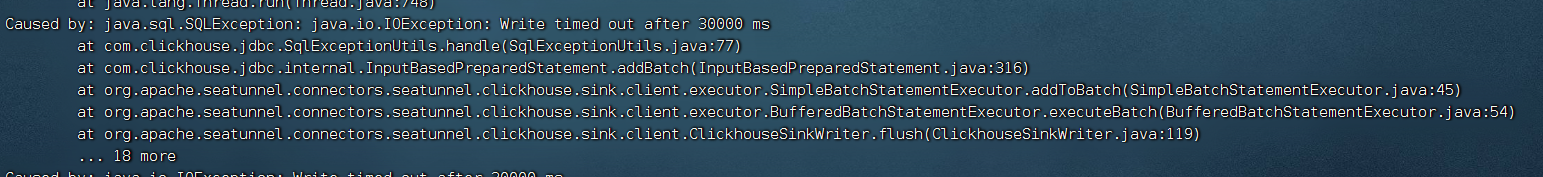

Caused by: java.sql.SQLException: java.io.IOException: Write timed out after 30000 ms
    at com.clickhouse.jdbc.SqlExceptionUtils.handle(SqlExceptionUtils.java:77)
    at com.clickhouse.jdbc.internal.InputBasedPreparedStatement.addBatch(InputBasedPreparedStatement.java:316)
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.executor.SimpleBatchStatementExecutor.addToBatch(SimpleBatchStatementExecutor.java:45)
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.executor.BufferedBatchStatementExecutor.executeBatch(BufferedBatchStatementExecutor.java:54)
    at org.apache.seatunnel.connectors.seatunnel.clickhouse.sink.client.ClickhouseSinkWriter.flush(ClickhouseSinkWriter.java:119)
    ... 18 more

Flink or Spark Version

Spark 2.4.6

Java or Scala Version

Java 1.8

Screenshots

Are you willing to submit PR?

- Yes I am willing to submit a PR!

Code of Conduct

- I agree to follow this project’s Code of Conduct

About this issue

- State: closed

- Created a year ago

- Comments: 15 (7 by maintainers)

I reinstalled it and the problem has been solved. Thank you very much.
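For anyone who lands here with the same `Write timed out after 30000 ms` root cause and for whom a reinstall does not help: 30000 ms matches the default `socket_timeout` of the ClickHouse JDBC client, so one thing that may be worth trying is smaller write batches together with a longer socket timeout. The sink block below is only a sketch of that idea, not the fix confirmed in this issue. It assumes the `bulk_size` option and the `clickhouse.`-prefixed pass-through of JDBC parameters described in the 2.3.0 Clickhouse sink documentation; the values 5000 and 120000 are illustrative guesses, not tested recommendations.

```hocon
sink {
  Clickhouse {
    host = "172.17.5.XX:XXX"
    database = "dws_yunpos"
    table = "dws_yunpos_city_product"
    username = "default"
    password = "XXXXXX"

    # Assumption: rows per JDBC batch; the documented default is 20000.
    # A smaller value means each flush sends less data.
    bulk_size = 5000

    # Assumption: forwarded to clickhouse-jdbc as socket_timeout (milliseconds).
    # The driver default of 30000 matches the timeout in the stack trace.
    clickhouse.socket_timeout = 120000
  }
}
```

Later SeaTunnel releases appear to group these pass-through parameters under a `clickhouse.config` map instead of the `clickhouse.` prefix, so check the connector documentation for the version you run before copying this.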