hudi: [SUPPORT] org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file

Describe the problem you faced

Using Hudi 0.10.0-rc3, inserting into an external COW table fails.

Table was created with the same Hudi version 0.10.0-rc3.

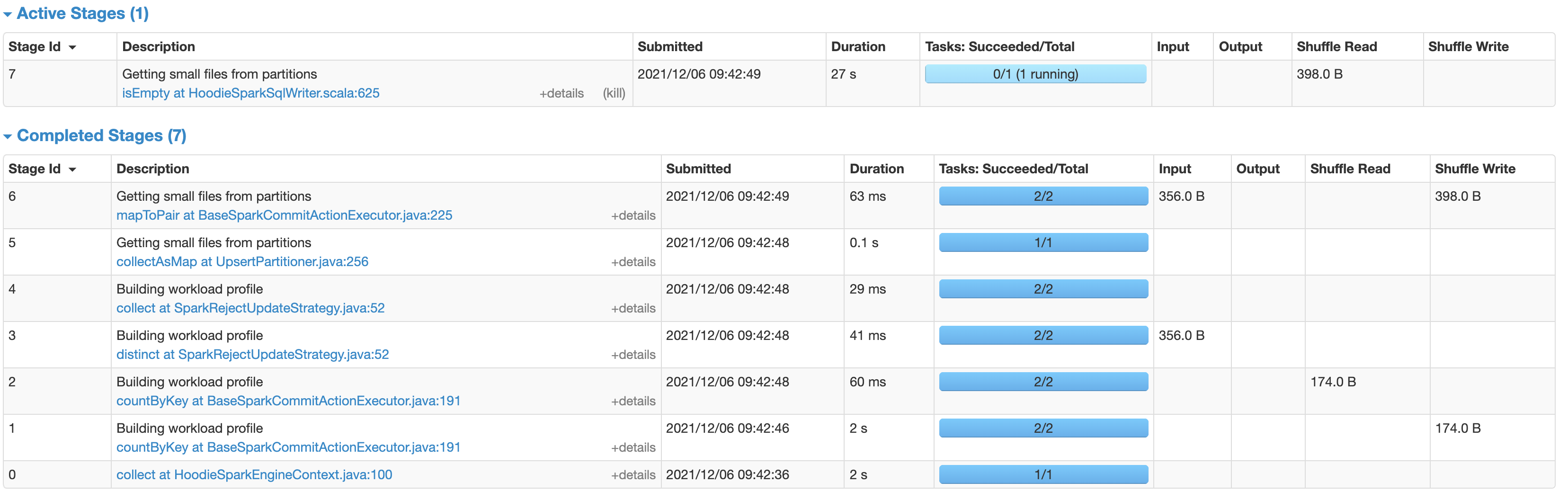

It fails during the stage "Getting small files from partitions" (isEmpty at HoodieSparkSqlWriter.scala:625).

To Reproduce

Steps to reproduce the behavior:

- Create table

CREATE TABLE IF NOT EXISTS public.test_table (
  id bigint,
  name string,
  dt string
) USING hudi
LOCATION 's3a://<bucket>/<path>/test_table'
OPTIONS (
  type = 'cow',
  primaryKey = 'id'
);

- Insert into the table

insert into public.test_table
values
  (1, 'a1', '2021-12-06'),
  (2, 'a2', '2021-12-06')
;

- Job fails

Expected behavior

Data is correctly inserted

Environment Description

- Hudi version : 0.10.0-rc3

- Spark version : 2.4.4

- Hive version : 2.3.5

- Hadoop version : 2.7.3

- Storage (HDFS/S3/GCS…) : S3

- Running on Docker? (yes/no) : No

Additional context

Stacktrace

11162 [main] ERROR org.apache.hudi.common.config.DFSPropertiesConfiguration - Error reading in properties from dfs

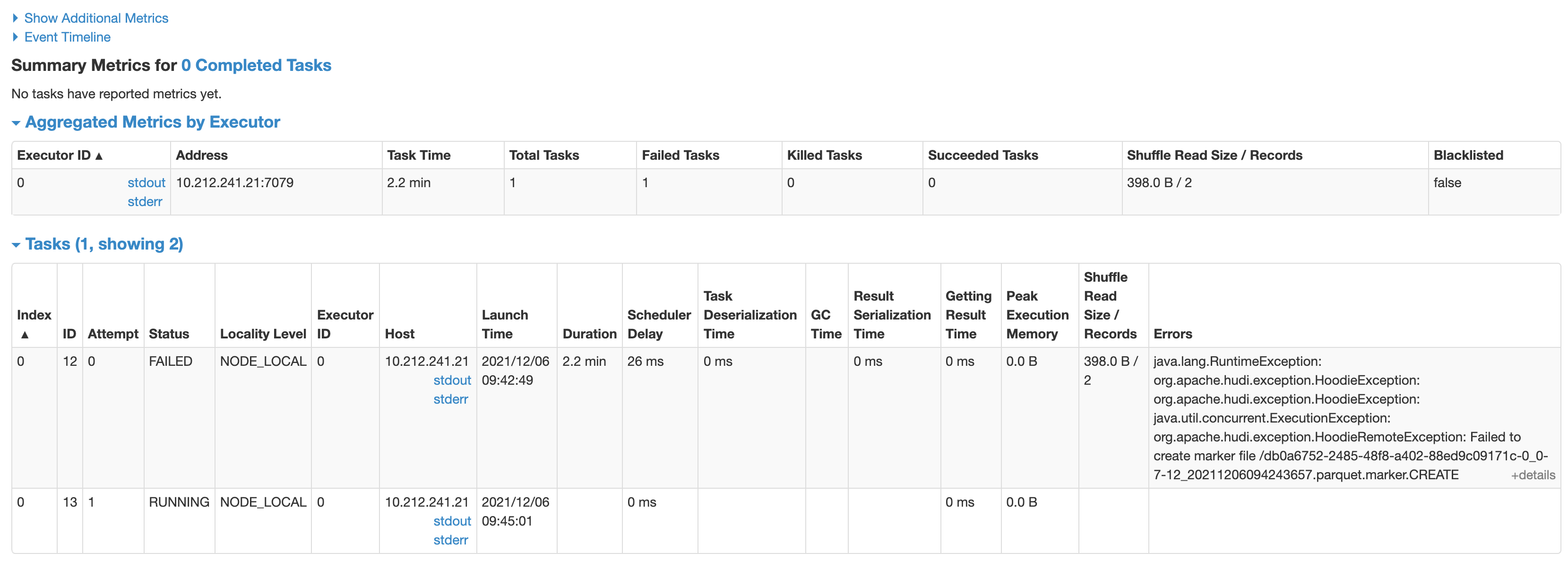

542978 [task-result-getter-0] ERROR org.apache.spark.scheduler.TaskSetManager - Task 0 in stage 7.0 failed 4 times; aborting job

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 7.0 failed 4 times, most recent failure: Lost task 0.3 in stage 7.0 (TID 15, 10.212.241.21, executor 0): java.lang.RuntimeException: org.apache.hudi.exception.HoodieException: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.client.utils.LazyIterableIterator.next(LazyIterableIterator.java:121)

at scala.collection.convert.Wrappers$JIteratorWrapper.next(Wrappers.scala:43)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at org.apache.spark.storage.memory.MemoryStore.putIterator(MemoryStore.scala:221)

at org.apache.spark.storage.memory.MemoryStore.putIteratorAsBytes(MemoryStore.scala:349)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1182)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.doPut(BlockManager.scala:1091)

at org.apache.spark.storage.BlockManager.doPutIterator(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.getOrElseUpdate(BlockManager.scala:882)

at org.apache.spark.rdd.RDD.getOrCompute(RDD.scala:335)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:286)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hudi.exception.HoodieException: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:93)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:36)

at org.apache.hudi.client.utils.LazyIterableIterator.next(LazyIterableIterator.java:119)

... 23 more

Caused by: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.execute(BoundedInMemoryExecutor.java:147)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:89)

... 25 more

Caused by: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.execute(BoundedInMemoryExecutor.java:141)

... 26 more

Caused by: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.create(TimelineServerBasedWriteMarkers.java:149)

at org.apache.hudi.table.marker.WriteMarkers.create(WriteMarkers.java:65)

at org.apache.hudi.io.HoodieWriteHandle.createMarkerFile(HoodieWriteHandle.java:181)

at org.apache.hudi.io.HoodieCreateHandle.<init>(HoodieCreateHandle.java:100)

at org.apache.hudi.io.HoodieCreateHandle.<init>(HoodieCreateHandle.java:74)

at org.apache.hudi.io.CreateHandleFactory.create(CreateHandleFactory.java:46)

at org.apache.hudi.execution.CopyOnWriteInsertHandler.consumeOneRecord(CopyOnWriteInsertHandler.java:83)

at org.apache.hudi.execution.CopyOnWriteInsertHandler.consumeOneRecord(CopyOnWriteInsertHandler.java:40)

at org.apache.hudi.common.util.queue.BoundedInMemoryQueueConsumer.consume(BoundedInMemoryQueueConsumer.java:37)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.lambda$null$2(BoundedInMemoryExecutor.java:121)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

... 3 more

Caused by: org.apache.http.conn.HttpHostConnectException: Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:159)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:373)

at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:394)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:237)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:185)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:108)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56)

at org.apache.http.client.fluent.Request.execute(Request.java:151)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.executeRequestToTimelineServer(TimelineServerBasedWriteMarkers.java:177)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.create(TimelineServerBasedWriteMarkers.java:145)

... 13 more

Caused by: java.net.ConnectException: Connection timed out (Connection timed out)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:75)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142)

... 26 more

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1889)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1877)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1876)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.rdd.RDD$$anonfun$take$1.apply(RDD.scala:1364)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.take(RDD.scala:1337)

at org.apache.spark.rdd.RDD$$anonfun$isEmpty$1.apply$mcZ$sp(RDD.scala:1472)

at org.apache.spark.rdd.RDD$$anonfun$isEmpty$1.apply(RDD.scala:1472)

at org.apache.spark.rdd.RDD$$anonfun$isEmpty$1.apply(RDD.scala:1472)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.isEmpty(RDD.scala:1471)

at org.apache.hudi.HoodieSparkSqlWriter$.commitAndPerformPostOperations(HoodieSparkSqlWriter.scala:625)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:282)

at org.apache.spark.sql.hudi.command.InsertIntoHoodieTableCommand$.run(InsertIntoHoodieTableCommand.scala:110)

at org.apache.spark.sql.hudi.command.InsertIntoHoodieTableCommand.run(InsertIntoHoodieTableCommand.scala:63)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:79)

at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:194)

at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:194)

at org.apache.spark.sql.Dataset$$anonfun$52.apply(Dataset.scala:3370)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3369)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:194)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:79)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:642)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:694)

at com.twilio.optimustranformer.OptimusTranformer$$anonfun$main$1$$anonfun$apply$mcV$sp$1.apply(OptimusTranformer.scala:74)

at com.twilio.optimustranformer.OptimusTranformer$$anonfun$main$1$$anonfun$apply$mcV$sp$1.apply(OptimusTranformer.scala:72)

at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)

at com.twilio.optimustranformer.OptimusTranformer$$anonfun$main$1.apply$mcV$sp(OptimusTranformer.scala:71)

at scala.util.control.Breaks.breakable(Breaks.scala:38)

at com.twilio.optimustranformer.OptimusTranformer$.main(OptimusTranformer.scala:70)

at com.twilio.optimustranformer.OptimusTranformer.main(OptimusTranformer.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:845)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:920)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:929)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.RuntimeException: org.apache.hudi.exception.HoodieException: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.client.utils.LazyIterableIterator.next(LazyIterableIterator.java:121)

at scala.collection.convert.Wrappers$JIteratorWrapper.next(Wrappers.scala:43)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at org.apache.spark.storage.memory.MemoryStore.putIterator(MemoryStore.scala:221)

at org.apache.spark.storage.memory.MemoryStore.putIteratorAsBytes(MemoryStore.scala:349)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1182)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.doPut(BlockManager.scala:1091)

at org.apache.spark.storage.BlockManager.doPutIterator(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.getOrElseUpdate(BlockManager.scala:882)

at org.apache.spark.rdd.RDD.getOrCompute(RDD.scala:335)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:286)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hudi.exception.HoodieException: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:93)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:36)

at org.apache.hudi.client.utils.LazyIterableIterator.next(LazyIterableIterator.java:119)

... 23 more

Caused by: org.apache.hudi.exception.HoodieException: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.execute(BoundedInMemoryExecutor.java:147)

at org.apache.hudi.execution.SparkLazyInsertIterable.computeNext(SparkLazyInsertIterable.java:89)

... 25 more

Caused by: java.util.concurrent.ExecutionException: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:192)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.execute(BoundedInMemoryExecutor.java:141)

... 26 more

Caused by: org.apache.hudi.exception.HoodieRemoteException: Failed to create marker file /db0a6752-2485-48f8-a402-88ed9c09171c-0_0-7-15_20211206094243657.parquet.marker.CREATE

Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.create(TimelineServerBasedWriteMarkers.java:149)

at org.apache.hudi.table.marker.WriteMarkers.create(WriteMarkers.java:65)

at org.apache.hudi.io.HoodieWriteHandle.createMarkerFile(HoodieWriteHandle.java:181)

at org.apache.hudi.io.HoodieCreateHandle.<init>(HoodieCreateHandle.java:100)

at org.apache.hudi.io.HoodieCreateHandle.<init>(HoodieCreateHandle.java:74)

at org.apache.hudi.io.CreateHandleFactory.create(CreateHandleFactory.java:46)

at org.apache.hudi.execution.CopyOnWriteInsertHandler.consumeOneRecord(CopyOnWriteInsertHandler.java:83)

at org.apache.hudi.execution.CopyOnWriteInsertHandler.consumeOneRecord(CopyOnWriteInsertHandler.java:40)

at org.apache.hudi.common.util.queue.BoundedInMemoryQueueConsumer.consume(BoundedInMemoryQueueConsumer.java:37)

at org.apache.hudi.common.util.queue.BoundedInMemoryExecutor.lambda$null$2(BoundedInMemoryExecutor.java:121)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

... 3 more

Caused by: org.apache.http.conn.HttpHostConnectException: Connect to <IP_ADDRESS>:35329 [<IP_ADDRESS>] failed: Connection timed out (Connection timed out)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:159)

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:373)

at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:394)

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:237)

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:185)

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89)

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:108)

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56)

at org.apache.http.client.fluent.Request.execute(Request.java:151)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.executeRequestToTimelineServer(TimelineServerBasedWriteMarkers.java:177)

at org.apache.hudi.table.marker.TimelineServerBasedWriteMarkers.create(TimelineServerBasedWriteMarkers.java:145)

... 13 more

Caused by: java.net.ConnectException: Connection timed out (Connection timed out)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at org.apache.http.conn.socket.PlainConnectionSocketFactory.connectSocket(PlainConnectionSocketFactory.java:75)

at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142)

... 26 more
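
The innermost cause in the trace above is a java.net.ConnectException raised while TimelineServerBasedWriteMarkers sends an HTTP request to the timeline server at <IP_ADDRESS>:35329, so the executor apparently cannot reach that endpoint. One way to check whether timeline-server-based markers are the failing piece is to switch to direct markers for the session and rerun the insert. This is only a sketch: hoodie.write.markers.type is an existing Hudi write config, but that a session-level SET reaches the writer in this setup is an assumption, and the change sidesteps the connectivity problem rather than fixing it.

```sql
-- Assumption: the Hudi SQL writer picks up session-level hoodie.* settings.
-- DIRECT makes each task write its marker file to storage itself
-- instead of calling the timeline server over HTTP.
SET hoodie.write.markers.type=DIRECT;

-- Same insert as in the reproduction steps.
INSERT INTO public.test_table
VALUES
  (1, 'a1', '2021-12-06'),
  (2, 'a2', '2021-12-06');
```

If the statements are submitted one at a time through SparkSession.sql rather than a SQL shell, it is probably safer to drop the trailing semicolons.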

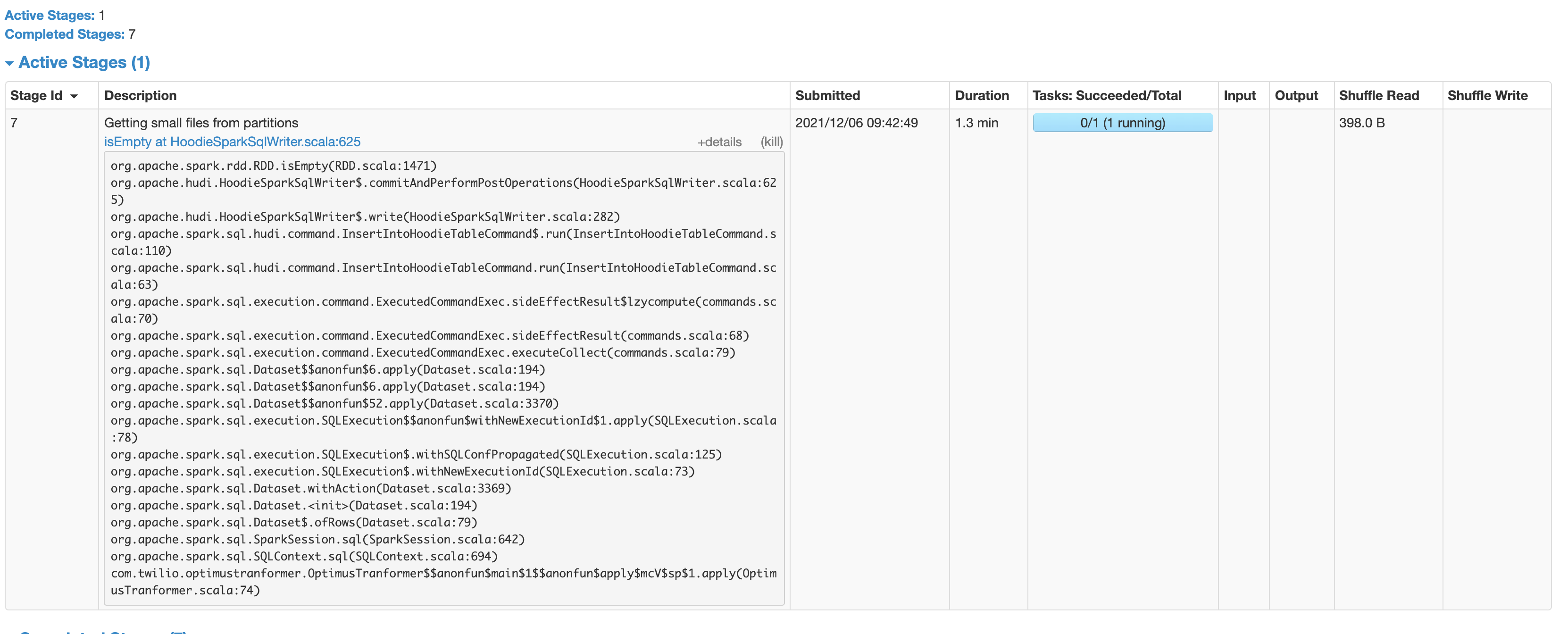

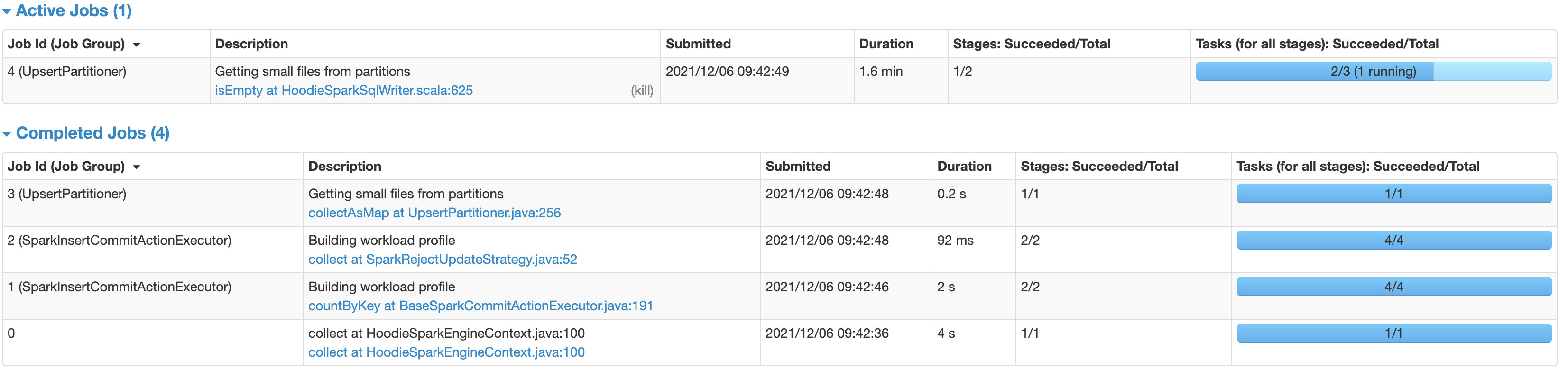

Some screenshots of the spark app:

About this issue

- State: closed

- Created 3 years ago

- Reactions: 1

- Comments: 39 (30 by maintainers)

Hello @BalaMahesh, I ran into the same problem. I tried setting these config options when creating the table, and it worked!
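
The comment does not list the options it refers to, so they are not reproduced here. As a hedged illustration only, table-level Hudi write configs can be passed in the OPTIONS clause of the CREATE TABLE statement from the reproduction steps. The two extra keys below, hoodie.write.markers.type and hoodie.embed.timeline.server, are existing Hudi configs that avoid the executor-to-timeline-server HTTP call seen in the trace. Choosing them here is an assumption, not a reconstruction of the commenter's settings, and it also assumes that options set at table creation are applied to later writes.

```sql
-- Assumed workaround, not taken from the comment:
--   hoodie.write.markers.type = DIRECT     -> write markers straight to storage
--   hoodie.embed.timeline.server = false   -> do not start the embedded timeline server
CREATE TABLE IF NOT EXISTS public.test_table (
  id bigint,
  name string,
  dt string
) USING hudi
LOCATION 's3a://<bucket>/<path>/test_table'
OPTIONS (
  type = 'cow',
  primaryKey = 'id',
  'hoodie.write.markers.type' = 'DIRECT',
  'hoodie.embed.timeline.server' = 'false'
);
```

Either key alone may be enough. Direct markers trade the HTTP round trip for one small file write per data file, which can be slower on S3 for large commits.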