hudi: [SUPPORT] Clustering is not happening on Flink Hudi

We are trying to cluster small files into larger files to reduce I/O and the number of log and Parquet files.

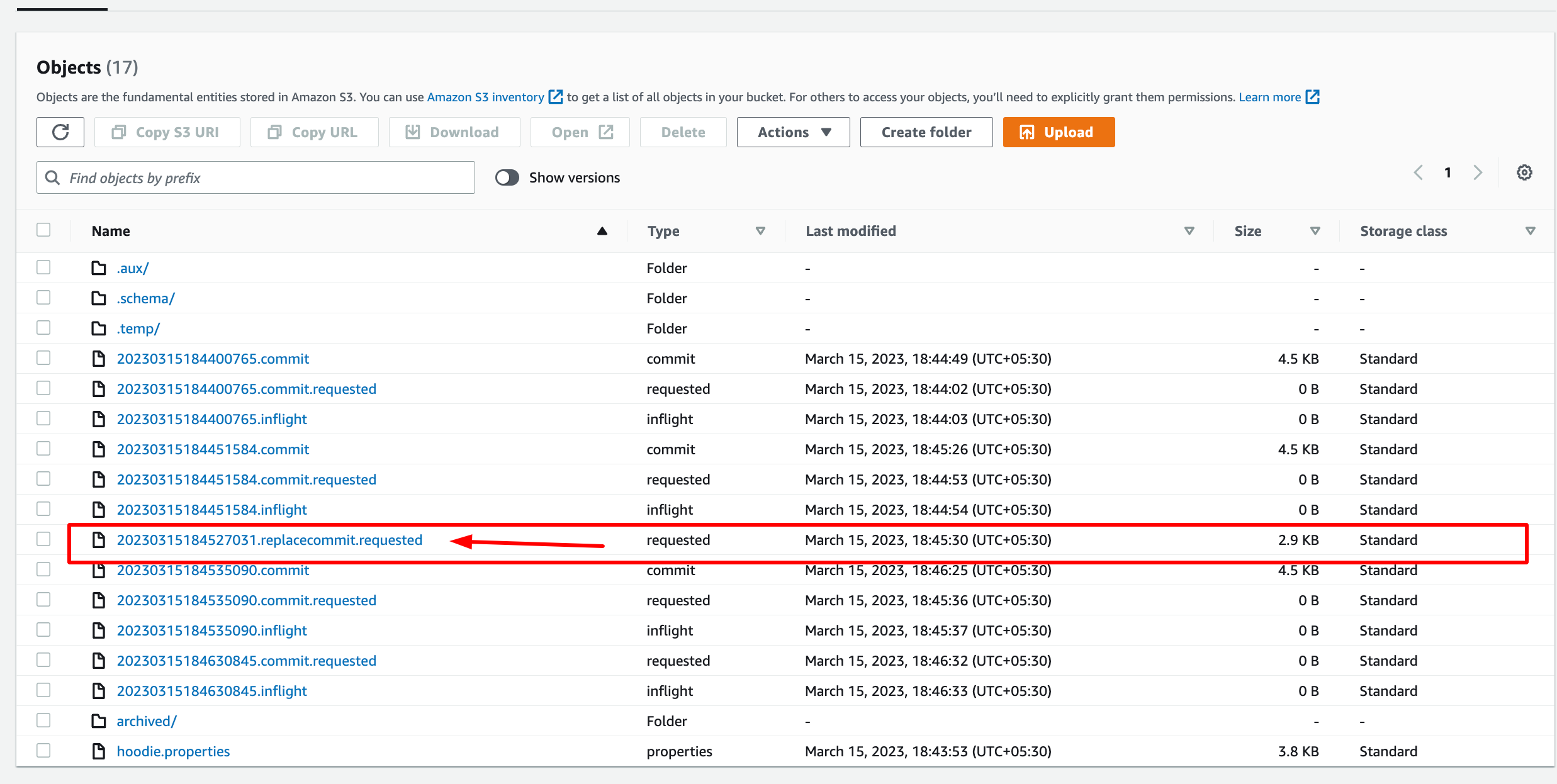

To overcome the small-files problem in Hudi, we are trying to produce clustered files, but the job only creates the .replacecommit.requested file. It never produces the .replacecommit.inflight and .replacecommit files that would complete the clustering process.

Expected behavior

It should create the clustered files after the request is created.

Environment Description

- Hudi version : 12.1

- Flink version : 1.14.6

- Storage (HDFS/S3/GCS…) : s3

- Running on Docker? (yes/no) : Yes

Config of Job

'hoodie.cleaner.commits.retained' = '3',

'hoodie.clean.async'='true',

'hoodie.cleaner.policy' = 'KEEP_LATEST_COMMITS',

'hoodie.parquet.small.file.limit'='104857600',

'hoodie.clustering.inline'= 'true',

'hoodie.clustering.inline.max.commits'= '2',

'hoodie.clustering.plan.strategy.max.bytes.per.group'= '107374182400',

'hoodie.clustering.plan.strategy.max.num.groups'= '1'
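The two byte-valued settings above are easier to reason about in binary units. A minimal sketch of the conversion, using only the values from this config (the helper name `human_readable` is made up for illustration):

```python
# Byte-valued settings copied from the job config above.
CONFIG_BYTES = {
    "hoodie.parquet.small.file.limit": 104857600,
    "hoodie.clustering.plan.strategy.max.bytes.per.group": 107374182400,
}


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary units (1 KiB = 1024 B)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB"]
    value = float(num_bytes)
    for unit in units:
        # Stop at the first unit where the value drops below 1024,
        # or at the largest unit we know about.
        if value < 1024 or unit == units[-1]:
            return f"{value:g} {unit}"
        value /= 1024


for key, num_bytes in CONFIG_BYTES.items():
    print(f"{key} = {human_readable(num_bytes)}")
```

So the small-file limit is 100 MiB, and a single clustering group may hold up to 100 GiB.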

I am attaching a screenshot for the same.

Stacktrace

No errors in the Hudi job.

As you can see in the screenshot, it creates the .replacecommit.requested file but does not generate the .replacecommit.inflight and .replacecommit files.
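For anyone checking the same symptom, here is a rough sketch that scans a listing of timeline file names and reports clustering instants that were requested but never completed. It assumes the file names follow the `<instant>.replacecommit[.requested|.inflight]` pattern seen in the screenshot; the instant timestamps and the function name `pending_replacecommits` are hypothetical, and how you obtain the listing (for example from the table's `.hoodie` folder on S3) is left out.

```python
from collections import defaultdict


def pending_replacecommits(file_names):
    """Return instants with a .replacecommit.requested file but no completed .replacecommit."""
    states = defaultdict(set)
    for name in file_names:
        instant, sep, rest = name.partition(".")
        if not sep or not rest.startswith("replacecommit"):
            continue  # not a replacecommit timeline file
        # "replacecommit" alone means completed; otherwise keep the suffix.
        state = rest[len("replacecommit"):].lstrip(".") or "completed"
        states[instant].add(state)
    return sorted(
        instant
        for instant, seen in states.items()
        if "requested" in seen and "completed" not in seen
    )


# Hypothetical listing, for illustration only.
listing = [
    "20230101120000.commit",
    "20230101120500.replacecommit.requested",
    "20230101121000.replacecommit.requested",
    "20230101121000.replacecommit.inflight",
    "20230101121000.replacecommit",
]
print(pending_replacecommits(listing))
```

An instant that shows up in the result has a clustering plan that was scheduled but not executed, which matches what is described here.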

This is blocking our progress toward completing the project.

About this issue

- State: closed

- Created a year ago

- Comments: 18 (8 by maintainers)

Clustering only works for COW tables with the INSERT operation.

It seems you are using the default write.operation, which is UPSERT.

With the default state index, upsert first tries to write new data to file groups whose base file is smaller than hoodie.parquet.small.file.limit (default 100MB).
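To illustrate the small-file behaviour described above, here is a deliberately simplified sketch. It is not Hudi's actual assignment logic: the file group ids, the sizes, and the rule of picking the smallest eligible base file are all assumptions made for the example. Only the limit value comes from the config in this issue.

```python
SMALL_FILE_LIMIT = 104857600  # hoodie.parquet.small.file.limit from the job config


def choose_file_group(base_file_sizes, small_file_limit=SMALL_FILE_LIMIT):
    """Pick an existing file group for new records, or None to start a new one.

    base_file_sizes maps a (hypothetical) file group id to its base file size in bytes.
    """
    small = {
        file_group: size
        for file_group, size in base_file_sizes.items()
        if size < small_file_limit
    }
    if not small:
        return None  # no small base file, so new data goes to a new file group
    # Assumption for this sketch: prefer the smallest base file.
    return min(small, key=small.get)


MIB = 1024 * 1024
sizes = {"fg-1": 120 * MIB, "fg-2": 30 * MIB, "fg-3": 60 * MIB}
print(choose_file_group(sizes))
```

With upsert, small base files keep absorbing new records this way, which is a different mechanism from clustering rewriting them into larger files.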